A 40cm-square patch that renders you invisible to person-detecting AIs

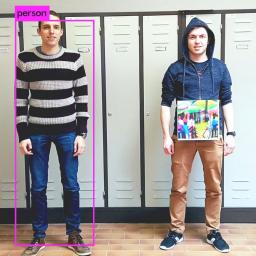

Researchers from KU Leuven have published a paper showing how they can create a 40cm x 40cm "patch" that fools a convolutional neural network classifier, one that is otherwise a good tool for identifying humans, into thinking that a person is not a person -- something that could be used to defeat AI-based security camera systems. They theorize that they could just print the patch on a t-shirt and get the same result.

The researchers' key point is that the training data for classifiers consists of humans who aren't trying to fool it -- that is, the training is non-adversarial -- while the applications for these systems are often adversarial (such as being used to evaluate security camera footage whose subjects might be trying to defeat the algorithm). It's like designing a lock that always unlocks when you use the key -- but not testing to see if it unlocks when you don't have the key, too.

The attack can reliably defeat YOLOv2, a popular machine-learning classifier, and they hypothesize that it could be applied to other classifiers as well.

I've been writing about these adversarial examples for years, and universally, they represent devastating attacks on otherwise extremely effective classifiers. It's an important lesson about the difference between adversarial and non-adversarial design: the efficacy of a non-adversarial system is no guarantee of adversarial efficacy.

In this paper, we presented a system to generate adversarial patches for person detectors that can be printed out and used in the real-world. We did this by optimising an image to minimise different probabilities related to the appearance of a person in the output of the detector. In our experiments we compared different approaches and found that minimising object loss created the most effective patches.

From our real-world test with printed out patches we can also see that our patches work quite well in hiding persons from object detectors, suggesting that security systems using similar detectors might be vulnerable to this kind of attack.

We believe that, if we combine this technique with a sophisticated clothing simulation, we can design a T-shirt print that can make a person virtually invisible for automatic surveillance cameras (using the YOLO detector).
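To make the idea of "optimising an image to minimise" a detector's output a bit more concrete, here is a toy sketch in Python. It is not the researchers' code and it does not use YOLO: the "detector" is a made-up linear model with a sigmoid on top, and every name in it (`objectness`, `apply_patch`, `optimise_patch`, the 16x16 image, the 8x8 patch) is an assumption chosen for illustration. It only shows the general shape of the approach: paste a patch into the image, measure the score, and nudge the patch pixels downhill.

```python
import numpy as np

def objectness(image, weights, bias):
    """Toy stand-in for a detector's 'person present' score (NOT YOLO)."""
    return 1.0 / (1.0 + np.exp(-(np.sum(weights * image) + bias)))

def apply_patch(image, patch, top, left):
    """Return a copy of `image` with `patch` pasted at (top, left)."""
    out = image.copy()
    h, w = patch.shape
    out[top:top + h, left:left + w] = patch
    return out

def optimise_patch(image, weights, bias, size=8, top=4, left=4,
                   steps=200, lr=0.5):
    """Gradient descent on the patch pixels to minimise the toy score."""
    # Start from the pixels already under the patch location.
    patch = image[top:top + size, left:left + size].copy()
    for _ in range(steps):
        score = objectness(apply_patch(image, patch, top, left), weights, bias)
        # For a sigmoid over a linear map, d(score)/d(pixel) is
        # score * (1 - score) * weight of that pixel.
        grad = score * (1.0 - score) * weights[top:top + size, left:left + size]
        # Clip to [0, 1] so the patch stays a valid (printable) image.
        patch = np.clip(patch - lr * grad, 0.0, 1.0)
    return patch

rng = np.random.default_rng(0)
weights = rng.normal(size=(16, 16))      # hypothetical detector weights
image = rng.uniform(size=(16, 16))       # hypothetical "photo of a person"
bias = 3.0 - np.sum(weights * image)     # chosen so the clean image scores high

patch = optimise_patch(image, weights, bias)
before = objectness(image, weights, bias)
after = objectness(apply_patch(image, patch, 4, 4), weights, bias)
print(f"score without patch: {before:.3f}, with patch: {after:.3f}")
```

A real attack has to work across many photos, poses and lighting conditions and survive being printed, which this sketch ignores entirely; it optimises against a single image and a model whose gradients are trivial to write down.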

Fooling automated surveillance cameras: adversarial patches to attack person detection [Simen Thys, Wiebe Van Ranst and Toon Goedemé/Arxiv]

How to hide from the AI surveillance state with a color printout [Will Knight/MIT Technology Review]

(via Schneier)