by Rich Brueckner on (#4G9ZB)

The Jülich Supercomputing Centre in Germany is seeking a Computer Scientist in our Job of the Week. "You will directly support and independently advance the code development of existing and new parallel scientific software. The focus is placed on the adaption of existing, complex software to next generation heterogeneous supercomputer architectures." The post Job of the Week: Computer Scientist at the Jülich Supercomputing Centre appeared first on insideHPC.

Inside HPC & AI News | High-Performance Computing & Artificial Intelligence

| Field | Value |
|---|---|
| Link | https://insidehpc.com/ |
| Feed | http://insidehpc.com/feed/ |
| Updated | 2026-03-02 22:15 |

by staff on (#4G89J)

NCSA is now accepting team applications for the Blue Waters Petascale Computing Hackathon. The event will take place September 9-13, 2019 at NCSA. "The Hackathon will pair research teams with expert mentors with the goal to enable scientific codes to take full advantage of HPC systems. The end goal is to have applications running efficiently at scale, or with a clear roadmap for enhancing code performance." The post Apply Now for the Blue Waters Petascale Computing Hackathon appeared first on insideHPC.

by Richard Friedman on (#4G89M)

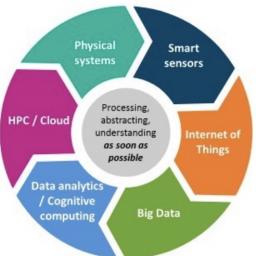

Are we witnessing the convergence of HPC, big data analytics, and AI? Once, these were separate domains, each with its own system architecture and software stack, but the data deluge is driving their convergence. Traditional big science HPC is looking more like big data analytics and AI, while analytics and AI are taking on the flavor of HPC. The post Converging Workflows Pushing Converged Software onto HPC Platforms appeared first on insideHPC.

by staff on (#4G84P)

In this Big Compute Podcast episode, Gabriel Broner interviews Irene Qualters about her career and the evolution of HPC. "Some of the most profound advances that I have seen come from groups of people that have very different perspectives, very different ideas. We have to struggle to collectively bring our different disciplines on our world’s hardest problems, on the world’s most challenging problems." The post Podcast: Irene Qualters from LANL Shares Life Lessons on HPC and Diversity appeared first on insideHPC.

by Rich Brueckner on (#4G84R)

DK Panda from Ohio State University gave this talk at the Swiss HPC Conference. "We will provide an overview of interesting trends in DNN design and how cutting-edge hardware architectures are playing a key role in moving the field forward. We will also present an overview of different DNN architectures and DL frameworks. Most DL frameworks started with a single-node/single-GPU design." The post Scalable and Distributed DNN Training on Modern HPC Systems appeared first on insideHPC.

by staff on (#4G63A)

In this special guest feature from the SC19 Blog, Dan Jacobson and Wayne Joubert from ORNL describe how the Summit supercomputer is helping untangle how genetic variants, gleaned from vast datasets, can impact whether an individual is susceptible (or not) to disease, including chronic pain and opioid addiction. The post Supercomputing Takes on the Opioid Crisis appeared first on insideHPC.

by Rich Brueckner on (#4G5YB)

NEC will host its Aurora Forum at ISC 2019. Designed for developers and users of SX-Aurora TSUBASA vector computing technology, the half-day meeting takes place June 17 in Frankfurt, Germany. "The NEC SX-Aurora TSUBASA is the newest in the line of NEC SX Vector Processors with the world's highest memory bandwidth. Implemented in a PCI-e form factor, the NEC processor can be configured in many flexible configurations together with a standard x86 cluster." The post NEC to Host Aurora Forum on Vector Computing at ISC 2019 appeared first on insideHPC.

by staff on (#4G5RX)

Today Tachyum announced it has successfully deployed the Linux OS on its Prodigy Universal Processor architecture, the foundation for its 64-core, ultra-low-power, high-performance processor. “Running an OS directly on the chip, without attaching to another host processor as an accelerator or other workaround, signals to the industry that Tachyum compiler and software is stable, mature, and approaching production-quality.” The post Tachyum Boots Linux on Universal Processor Chip appeared first on insideHPC.

by staff on (#4G5RY)

Today Microway announced that the company is now shipping 2nd Generation Intel Xeon Scalable processors in volume, including next-generation NumberSmasher clusters, servers, and WhisperStations. "This new family of processors boosts performance for Microway custom deployments with increased CPU core counts, higher memory capacity and bandwidth, improved clock speeds, and innovative new features for HPC, AI, and Deep Learning. A Microway analysis finds that, without exception, 2nd generation Intel Xeon Scalable CPUs are expected to outperform their predecessors." The post Microway Shipping Intel Cascade Lake Systems in Volume appeared first on insideHPC.

by Rich Brueckner on (#4G5RZ)

Kevin Roe from the Maui High Performance Computing Center gave this talk at the GPU Technology Conference. "We will characterize the performance of multi-GPU systems in an effort to determine their viability for running physics-based applications using Fast Fourier Transforms (FFTs). Additionally, we'll discuss how multi-GPU FFTs allow available memory to exceed the limits of a single GPU and how they can reduce computational time for larger problem sizes." The post Video: Multi-GPU FFT Performance on Different Hardware Configurations appeared first on insideHPC.

by Rich Brueckner on (#4G3JT)

The ALCF Data Science Program at Argonne has issued its Call for Proposals. The program aims to accelerate discovery across a broad range of scientific domains that require data-intensive and machine learning algorithms to address challenging research problems. "Ongoing and past ADSP projects span a diverse range of science domains, e.g. Materials, Imaging, Neuroscience, Engineering, Combustion/CFD, Cosmology; and involve large science collaborations." The post Call for Proposals: Get on Big Iron with the ALCF Data Science Program appeared first on insideHPC.

by staff on (#4G3JV)

Today Univa announced version 1.1 of Navops Launch, which offers enterprise users the ability to migrate their HPC workloads and processes to the cloud with higher efficiency and agility. "The Univa team designed its newest version of Navops Launch to help make the administration of day-to-day cloud usage simpler than an unrestricted cloud queue as a way to help control costs, while still optimizing on efficiency and agility. We strive to make our customers' cloud experience as close to a NoOps model as possible." The post Univa Navops Launch Speeds HPC Workload Migration to the Cloud appeared first on insideHPC.

by staff on (#4G3D2)

In this special guest feature, Dan Olds from OrionX continues his Epic HPC Road Trip series with a stop at NREL in Golden, Colorado. "When it comes to energy efficient computing, NREL has to be one of the most advanced facilities in the world. It’s the first data center I’ve seen where their current PUE is shown on an LCD panel outside the door. When I was visiting, the PUE of the Day was 1.027 – which is incredibly low." The post Epic HPC Road Trip Continues to NREL appeared first on insideHPC.

by Rich Brueckner on (#4G3D3)

Sergio Maffioletti from the University of Zurich gave this talk at the hpc-ch forum on Cloud and Containers. "In this talk, we'll provide an overview of the challenges faced by both research infrastructure providers and Science IT units, along with best practices to improve the reproducibility of data analysis using cloud and container technologies." The post Towards Reproducible Data Analysis Using Cloud and Container Technologies appeared first on insideHPC.

by staff on (#4G3D5)

Today the Texas A&M Engineering Experiment Station announced the creation of the new Hewlett Packard Enterprise Center for Computer Architecture Research. The center, made possible by a donation from HPE, has a mission to lead the way into this new world of data-driven computing architectures through academic-industry collaboration. “There is no other cleanroom in the state of Texas that has all five of the high-end instruments HPE is donating, and we plan to become a regional hub for next-generation nano- and micro-engineering.” The post Texas A&M to launch new HPE Center for Computer Architecture Research appeared first on insideHPC.

by staff on (#4FYWA)

In the face of unrelenting data growth, rising numbers of high-performance computing (HPC) workloads are memory bound. Caught between the high cost and limited capacity of DRAM and the lower performance of 3D NAND SSDs, HPC users increasingly find that despite workarounds, they’re unable to keep pace with skyrocketing data volumes and increasingly complex challenges. Intel Optane technology is designed to address these challenges. The post Unleashing the Next Wave of HPC Breakthroughs with Intel Optane DC Persistent Memory and Intel Optane DC Solid State Drives appeared first on insideHPC.

by Rich Brueckner on (#4G19F)

MEGWARE has deployed a new HPC cluster at the Leibniz Centre for Agricultural Landscape Research (ZALF) in Germany. The cluster is powered by 125 compute nodes equipped with the latest Intel Xeon Scalable 6230 processors. "Thanks to the professional advice and support provided by MEGWARE, ZALF has now access to a powerful HPC cluster system, which makes new modeling methods and simulation techniques for complex agricultural landscape research available." The post MEGWARE Powers HPC Cluster for Agriculture Landscape Research at ZALF appeared first on insideHPC.

by staff on (#4G14M)

In this special guest feature, Dr. Rosemary Francis reflects on how a recent film about Ruth Bader Ginsburg holds a gender equality lesson for all of us in high performance computing. "There’s a real argument that creating a more diverse HPC workforce will help us to create machines that make more diverse decisions. By bringing as many minds together as possible – men and women, of different ages, races and ethnicities – we can make sure all problems are considered on the basis of every possible human angle." The post On the Basis of Sex: HPC in the fight for gender equality appeared first on insideHPC.

by staff on (#4G14P)

Today Excelero and ThinkParQ announced benchmark results from their combined technologies for HPC, AI, ML, and analytics. “With Excelero’s NVMesh, our customers have access to an ultra-low latency, high performance approach to scale-out storage,” said Frank Herold, CEO of ThinkParQ. “We’ve been impressed with NVMesh’s ability to deliver the high IOPS and ultra-low latency of NVMe drives over the network with highly available volumes – as well as options for distributed erasure coding and BeeGFS' unmatched ability to efficiently handle all kinds of access patterns and file sizes.” The post Excelero Integrates NVMesh and BeeGFS for Accelerated I/O appeared first on insideHPC.

by Rich Brueckner on (#4G0Z3)

Christian Trott from Sandia gave this talk at the GPU Technology Conference. "The Kokkos C++ Performance Portability EcoSystem is a production-level solution for writing modern C++ applications in a hardware-agnostic way. We'll provide success stories for Kokkos adoption in large production applications on the leading supercomputing platforms in the U.S. We'll focus particularly on early results from two of the world's most powerful supercomputers, Summit and Sierra, both powered by NVIDIA Tesla V100 GPUs." The post Video: The Kokkos C++ Performance Portability EcoSystem for Exascale appeared first on insideHPC.

by staff on (#4G0Z5)

Today TYAN announced that it is teaming with NVIDIA to integrate the NVIDIA EGX platform with its Thunder SX TN76-B7102 edge server, completing TYAN’s GPU server product line. "NVIDIA EGX provides the missing link for low-latency AI computing at the edge with an advanced, light-compute platform, reducing the amount of data that needs to be pushed to the cloud." The post NVIDIA EGX Accelerates AI on TYAN Edge Server appeared first on insideHPC.

by staff on (#4FYWC)

Today NVIDIA announced NVIDIA EGX, an accelerated computing platform that enables companies to perform low-latency AI at the edge. “The combination of high-performance, low-latency and accelerated networking provides a new infrastructure tier of computing that is critical to efficiently access and supply the data needed to fuel the next generation of advanced AI solutions on edge platforms such as NVIDIA EGX.” The post New NVIDIA EGX Platform Brings Real-Time AI to the Edge appeared first on insideHPC.

by Rich Brueckner on (#4FXC4)

Dell EMC will once again host the HPC Community Meeting at ISC 2019. "The Dell EMC HPC Community is a worldwide technical forum that fosters the exchange of ideas among researchers, computer scientists, technologists, and engineers and promotes the advancement of innovative, powerful HPC solutions. In the Community, members share expertise, insights, observations, suggestions, and experiences to improve current HPC solutions and to influence future technology capabilities & impact." The post Dell HPC Community Meeting returns to Frankfurt for ISC 2019 appeared first on insideHPC.

by Rich Brueckner on (#4FXC6)

Henry Newman from Seagate Government Solutions gave this talk at the HPC User Forum in Santa Fe. "Cyber attacks and security breaches have become commonplace with [the] explosion of data. More often than not, these breaches could have [been] prevented or greatly reduced if these institutions would have followed prescribed security standards. As we move to the edge and go to 5G networks, there is going to be more distributed data and therefore protection is going to have to go out to the edge as well." The post Cybersecurity and Risk Management for HPC appeared first on insideHPC.

by Rich Brueckner on (#4FVY5)

Kevin Sayers from the University of Basel gave this talk at the hpc-ch forum. "The SIB Swiss Institute of Bioinformatics BioMedIT project is developing the computing infrastructure which will enable biomedical analyses on sensitive human data across multiple sites as part of the Swiss Personalized Health Network. This presentation will focus on our experience assessing Kubernetes to support these biomedical workloads, and the benefits it provides to researchers in the community." The post Video: Kubernetes for Biomedical Analysis appeared first on insideHPC.

by Rich Brueckner on (#4FVVR)

Milwaukee School of Engineering (MSOE) invites applications for a full-time HPC Systems Administrator to join its Electrical Engineering and Computer Science department. "The MSOE community is guided by six values – collaboration, excellence, inclusion, innovation, integrity and stewardship – that represent the core of our campus culture." The post Job of the Week: HPC System Administrator at the Milwaukee School of Engineering appeared first on insideHPC.

by staff on (#4FTBY)

Researchers are using supercomputers to introduce and assess the impact of different configurations of defects on the performance of a superconductor. “When people think of targeted evolution, they might think of people who breed dogs or horses,” said Argonne materials scientist Andreas Glatz, the corresponding author of the study. “Ours is an example of materials by design, where the computer learns from prior generations the best possible arrangement of defects.” The post HPC Powers Evolution of Defects for Superconductors appeared first on insideHPC.

by staff on (#4FT1V)

The European ETP4HPC initiative has published a blueprint for the new Strategic Research Agenda for High Performance Computing. "This blueprint sketches the big picture of the major trends in the deployment of HPC and HPDA methods and systems, driven by the economic and societal needs of Europe, taking into account the changes expected in the underlying technologies and the overall architecture of the expanding underlying HPC infrastructure." The post New Blueprint lays out Strategic Research Agenda for HPC in Europe appeared first on insideHPC.

by staff on (#4FSXV)

Today ISC 2019 announced that this year's ISC Travel Grant recipients are from Colombia and Botswana. The winners will each receive a grant of 2,500 euros to cover travel and accommodation expenses. ISC High Performance will also provide the grant recipients free registration for the entire conference in Frankfurt, Germany. The post Colombian and Botswana Researchers Awarded ISC Travel Grant appeared first on insideHPC.

by Rich Brueckner on (#4FSXX)

Pol Forn from the Barcelona Supercomputing Center gave this talk at the BSC Annual Meeting. "QUANTIC is a joint venture between the Barcelona Supercomputing Center and the University of Barcelona. The research directions are focused on performing quantum computation in a laboratory of superconducting quantum circuits and studying new applications for quantum processors." The post Video: The First Catalan Quantum Computer appeared first on insideHPC.

by staff on (#4FQVD)

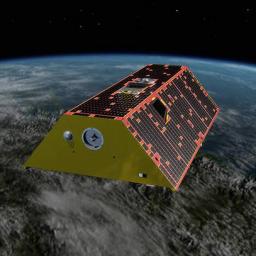

Researchers are using powerful supercomputers at TACC to process data from the Gravity Recovery and Climate Experiment (GRACE). "Intended to last just five years in orbit for a limited, experimental mission to measure small changes in the Earth's gravitational fields, GRACE operated for more than 15 years and provided unprecedented insight into our global water resources, from more accurate measurements of polar ice loss to a better view of the ocean currents, and the rise in global sea levels." The post TACC Powers Climate Studies with GRACE Project appeared first on insideHPC.

by Rich Brueckner on (#4FQJ7)

The HiPEAC 2020 conference has issued its Call for Workshops. The event takes place January 20-22, 2020 in Bologna, Italy. "The HiPEAC conference is the meeting place for computing systems researchers in Europe. Put your research on the map with a paper presentation and get your paper published in the open access journal ACM TACO: Transactions on Architecture and Code Optimization." The post Call for Workshops: HiPEAC 2020 in Bologna appeared first on insideHPC.

by staff on (#4FQJ9)

Ian Foster has been selected to receive the 2019 IEEE Computer Society (IEEE CS) Charles Babbage Award for his outstanding contributions in the areas of parallel computing languages, algorithms, and technologies for scalable distributed applications. "Foster’s research deals with distributed, parallel, and data-intensive computing technologies, and innovative applications of those technologies to scientific problems in such domains as materials science, climate change, and biomedicine. His Globus software is widely used in national and international cyberinfrastructures." The post Ian Foster to receive IEEE Charles Babbage Award appeared first on insideHPC.

by Rich Brueckner on (#4FQJB)

In this special guest feature, Dan Olds from OrionX continues his Epic HPC Road Trip series with a stop at NCAR in Boulder. "Their ability to increase model precision/resolution and to increase throughput at the same time is becoming more difficult over time due to core speed slowing down as more cores are added. In other words, new chips aren’t providing the same increase in performance as we’ve become accustomed to over the years." The post Epic HPC Road Trip Continues to NCAR appeared first on insideHPC.

by Richard Friedman on (#4FQCR)

Often, it’s not enough to parallelize and vectorize an application to get the best performance. You also need to take a deep dive into how the application is accessing memory to find and eliminate bottlenecks in the code that could ultimately be limiting performance. Intel Advisor, a component of both Intel Parallel Studio XE and Intel System Studio, can help you identify and diagnose memory performance issues, and suggest strategies to improve the efficiency of your code. The post Are Memory Bottlenecks Limiting Your Application’s Performance? appeared first on insideHPC.

by Rich Brueckner on (#4FNCE)

TACC has completed a major upgrade of their Ranch long-term mass data storage system. With thousands of users, Ranch archives are valuable to scientists who want to use the data to help reproduce the measurements and results of prior research. Computational reproducibility is one piece of the larger concept of scientific reproducibility, which forms a cornerstone of […] The post TACC Upgrades Ranch Storage System for Wrangling Big Data appeared first on insideHPC.

by staff on (#4FNCG)

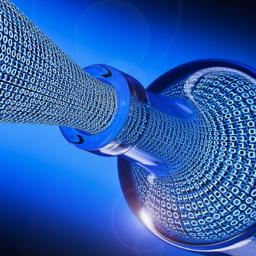

Over at the Intel AI blog, Casimir Wierzynski writes that Optical Neural Networks have exciting potential for power-efficiency in AI computation. "At last week’s CLEO conference, we and our collaborators at UC Berkeley presented new findings around ONNs, including a proposal for how that original work could be extended in the face of real-world manufacturing constraints to bring nanophotonic neural network circuits one step closer to a practical reality." The post New Paper: Nanophotonic Neural Networks coming Closer to Reality appeared first on insideHPC.

by staff on (#4FN79)

Today Spectra Logic announced the publication of “Digital Data Storage Outlook 2019,” the company’s fourth annual report on the data storage industry. “This report provides a high-level snapshot of the trends that will influence technological advancements in the data storage industry, and the workflows that will impact the way the world uses and preserves its digital information for the long term.” The post Spectra Logic Report: Digital Data Storage Outlook 2019 appeared first on insideHPC.

by staff on (#4FN1W)

Today Dell EMC launched a set of new Dell EMC AI Experience Zones in Bangalore, Seoul, Singapore, Sydney, and Tokyo. “The Dell EMC AI Experience Zones provide a unique space where customers and our partners can explore, test out, and learn about the different physical and virtual components that make up an AI ecosystem. This initiative is the focal point of our commitment to fostering knowledge sharing between CIOs, our ready team of technology experts, and industry partners to accelerate AI adoption and innovation for the region.” The post New Dell EMC AI Experience Zones Launch in Asia appeared first on insideHPC.

by Rich Brueckner on (#4FN1X)

Nick Wright from Lawrence Berkeley Lab gave this talk at the GPU Technology Conference. "We'll present an overview of the upcoming NERSC9 system architecture, throughput model, and application readiness efforts. Perlmutter, a Cray system based on the Shasta architecture, will be a heterogeneous system comprising both CPU-only and GPU-accelerated nodes, with a performance of more than 3 times Cori, NERSC’s current platform." The post Video: Perlmutter – A 2020 Pre-Exascale GPU-accelerated System for NERSC appeared first on insideHPC.

by staff on (#4FJSR)

The Gauss Centre for Supercomputing is sponsoring the two German student teams for the Student Cluster Competition at ISC 2019 in Frankfurt. The teams, representing the University of Hamburg and Heidelberg University, are among the 14 student teams from Asia, North America, Africa, and Europe that will go head to head on the exhibition show floor. The post GCS Sponsors University Teams for ISC19 Student Cluster Competition appeared first on insideHPC.

by staff on (#4FJST)

Registration is now open for the Forum Teratec in France. The event takes place June 11-12 in Palaiseau. "The Forum Teratec is the premier international meeting for all players in HPC, Simulation, Big Data and Machine Learning (AI). It is a unique place of exchange and sharing for professionals in the sector. Come and discover the innovations that will revolutionize practices in industry and in many other fields of activity." The post Forum Teratec to Spotlight HPC, AI, and Digital Transformation in Europe appeared first on insideHPC.

by staff on (#4FJKH)

NERSC recently hosted its first user hackathon to begin preparing key codes for the next-generation architecture of the Perlmutter system. Over four days, experts from NERSC, Cray, and NVIDIA worked with application code teams to help them gain new understanding of the performance characteristics of their applications and optimize their codes for the GPU processors in Perlmutter. “By starting this process early, the code teams will be well prepared for running on GPUs when NERSC deploys the Perlmutter system in 2020.” The post GPU Hackathon gears up for Future Perlmutter Supercomputer appeared first on insideHPC.

by staff on (#4FJKK)

“Clearly Arm will fit in as part of a broader HPC ecosystem, as we move towards systems that involve multiple chip architectures. In terms of scientific research, it may mean we run one stage of a simulation workflow on an Arm processor, while another stage is best carried out on another processor. By building a common fabric with multiple architectures on it, we can allow users to use the most appropriate hardware for each stage of their particular research problem.” The post Catalyst UK Program Fosters Arm-based HPC Systems appeared first on insideHPC.

by staff on (#4FJKM)

In this special guest feature from Scientific Computing World, Robert Roe looks at advances in exascale computing and the impact of AI on HPC development. "There is a lot of co-development, AI and HPC are not mutually exclusive. They both need high-speed interconnects and very fast storage. It just so happens that AI functions better on GPUs. HPC has GPUs in abundance, so they mix very well." The post The Pending Age of Exascale appeared first on insideHPC.

by staff on (#4FGH5)

Today DDN announced the successful completion of the acquisition of Nexenta, the market leader in Software Defined Storage for 5G and Internet of Things (IoT), creating the Nexenta by DDN division. "The acquisition brings three technology powerhouses together; DDN Storage, Tintri by DDN and now Nexenta by DDN, for the benefit of its customers’ AI and multi-cloud data strategies. DDN now holds a suite of products, solutions, and services that enable AI and multi-cloud to deliver the greatest impact to the emerging IoT markets." The post DDN Completes Acquisition of Nexenta appeared first on insideHPC.

by Rich Brueckner on (#4FGCD)

PASC19 has posted its Full Conference Program. The event takes place June 12-14 in Zurich, Switzerland. "PASC19 is the sixth edition of the PASC Conference series, an international platform for the exchange of competences in scientific computing and computational science, with a strong focus on methods, tools, algorithms, application challenges, and novel techniques and usage of high performance computing." The post Agenda Posted for PASC19 in Zurich appeared first on insideHPC.

by Rich Brueckner on (#4FGCF)

The Barcelona Supercomputing Center (BSC) and Arm Research have signed a multi-year agreement to establish the Arm-BSC Centre of Excellence. The move reflects a long history of BSC pioneering Arm technologies in HPC with the Mont-Blanc project. "The establishment of this Centre of Excellence will enable us to continue our close collaboration in order to advance the presence of Arm technologies in High Performance Computing and High Performance Data Analytics in Europe and worldwide." The post Barcelona Supercomputing Centre opens Arm Centre of Excellence for HPC appeared first on insideHPC.

by Rich Brueckner on (#4FG6C)

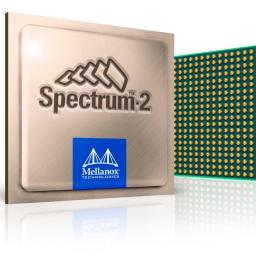

Today Mellanox introduced breakthrough Ethernet Cloud Fabric (ECF) technology based on Spectrum-2, the world’s most advanced 100/200/400 Gb/s Ethernet switches. ECF technology provides the ideal platform to quickly build and simply deploy state of the art public and private cloud data centers with improved efficiency and manageability. Spectrum-2 is at the heart of a new family of SN3000 switches that come in leaf, spine, and super-spine form factors. The post Mellanox Introduces High Speed Ethernet Cloud Fabric Technology appeared first on insideHPC.

by Rich Brueckner on (#4FG6E)

Thorsten Kurth and Josh Romero gave this talk at the GPU Technology Conference. "We'll discuss how we scaled the training of a single deep learning model to 27,360 V100 GPUs (4,560 nodes) on the OLCF Summit HPC System using the high-productivity TensorFlow framework. This talk is targeted at deep learning practitioners who are interested in learning what optimizations are necessary for training their models efficiently at massive scale." The post Video: Exascale Deep Learning for Climate Analytics appeared first on insideHPC.