Mercury and the bandwagon effect

The study of the planet Mercury provides two examples of the bandwagon effect. In her new book Worlds Fantastic, Worlds Familiar, planetary astronomer Bonnie Buratti writes:

The study of Mercury "illustrates one of the most confounding bugaboos of the scientific method: the bandwagon effect. Scientists are only human, and they impose their own prejudices and foregone conclusions on their experiments."

Around 1800, Johann Schroeter determined that Mercury had a rotational period of 24 hours. This view held for eight decades.

In the 1880s, Giovanni Schiaparelli determined that Mercury was tidally locked, making one rotation on its axis for every orbit around the sun. This view also held for eight decades.

In 1965, radar measurements showed that Mercury completes 3 rotations for every 2 orbits around the sun.

Studying Mercury is difficult because it is visible only near the horizon around sunrise and sunset, when the sun's light interferes. And it is understandable that someone would confuse a 3:2 resonance with tidal locking. Still, for two periods of eight decades each, astronomers looked at Mercury and concluded what they expected.

The difficulty of seeing Mercury objectively was compounded by two incorrect but satisfying metaphors: first, that Mercury was like Earth, rotating every 24 hours; then, that Mercury was like the moon, always keeping the same face toward the sun just as the moon keeps the same face toward Earth.

Buratti mentions the famous Millikan oil drop experiment as another example of the bandwagon effect.

"Millikan's value for the electron's charge was slightly in error; he had used a wrong value for the viscosity of air. But future experimenters all seemed to get Millikan's number. Having done the experiment myself I can see that they just picked those values that agreed with previous results."

Buratti explains that Millikan's experiment is hard to do and "it is impossible to successfully do it without abandoning most data." This is what I like to call acceptance-rejection modeling.

Acceptance-rejection modeling: Throw out data that don't fit with your model, and what's left will.

- Data Science Fact (@DataSciFact) July 2, 2015

The name comes from the acceptance-rejection method of random number generation. For example, the obvious way to generate truncated normal random values is to generate (unrestricted) normal random values and simply throw out the ones that lie outside the interval we'd like to truncate to. This is inefficient if the interval holds little of the distribution's probability, as a small interval or one far out in a tail does, but it always works. We're conforming our samples to a predetermined distribution, which is OK when we do it intentionally. The problem comes when we do it unintentionally.
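To make the two uses of rejection concrete, here is a minimal Python sketch using only the standard library. The function names truncated_normal, acceptance_rate, and keep_agreeing are hypothetical, chosen for illustration. truncated_normal is the obvious sampler described above: propose unrestricted normal values and keep the first one inside [a, b]. acceptance_rate computes P(a <= X <= b), the fraction of proposals that survive; its reciprocal is the expected number of proposals per accepted value, which is why the method slows down for low-probability intervals. keep_agreeing applies the same rejection step to measurements instead of proposals. The measurement numbers in the demo are invented and are not Millikan's data.

```python
import random
import statistics


def truncated_normal(a, b, mu=0.0, sigma=1.0, rng=random):
    """Return one normal(mu, sigma) draw restricted to [a, b], by rejection.

    Proposals are unrestricted normal values; the first one that lands in
    [a, b] is accepted. This is always correct, but the expected number of
    proposals is 1 / P(a <= X <= b), so it is slow for low-probability intervals.
    """
    if not a < b:
        raise ValueError("need a < b")
    while True:
        x = rng.gauss(mu, sigma)
        if a <= x <= b:  # accept; otherwise throw it out and try again
            return x


def acceptance_rate(a, b, mu=0.0, sigma=1.0):
    """Probability that a single proposal is accepted, P(a <= X <= b)."""
    cdf = statistics.NormalDist(mu, sigma).cdf
    return cdf(b) - cdf(a)


def keep_agreeing(measurements, previous, tolerance):
    """Keep only measurements within `tolerance` of a previous result.

    This is the same rejection step applied to data rather than proposals:
    whatever survives is guaranteed to agree with `previous`.
    """
    return [m for m in measurements if abs(m - previous) <= tolerance]


if __name__ == "__main__":
    rng = random.Random(2015)  # fixed seed so the demo is repeatable

    # Intentional: sample a standard normal truncated to [-0.5, 0.5].
    samples = [truncated_normal(-0.5, 0.5, rng=rng) for _ in range(1000)]
    print(min(samples) >= -0.5 and max(samples) <= 0.5)  # True
    print(round(acceptance_rate(-0.5, 0.5), 3))          # 0.383
    # A tail interval: tiny acceptance rate, about 760 proposals per sample.
    print(acceptance_rate(3.0, 4.0))

    # Unintentional (invented numbers): the true value is 1.0, but we keep
    # only measurements close to a previously published value of 0.9.
    data = [rng.gauss(1.0, 0.1) for _ in range(1000)]
    kept = keep_agreeing(data, previous=0.9, tolerance=0.05)
    print(len(kept), statistics.mean(data), statistics.mean(kept))
```

The code in the two cases is nearly identical; only the intent differs. In truncated_normal the interval is the distribution we actually want, while in keep_agreeing the interval is centered on a previous result, so the surviving measurements agree with that result whether or not it was right.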

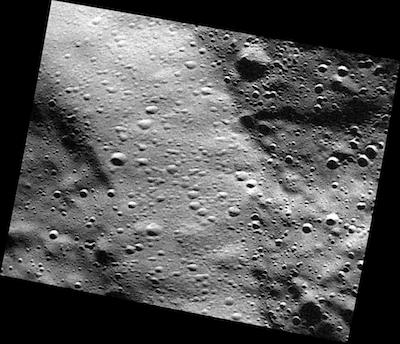

Photo of Mercury above via NASA