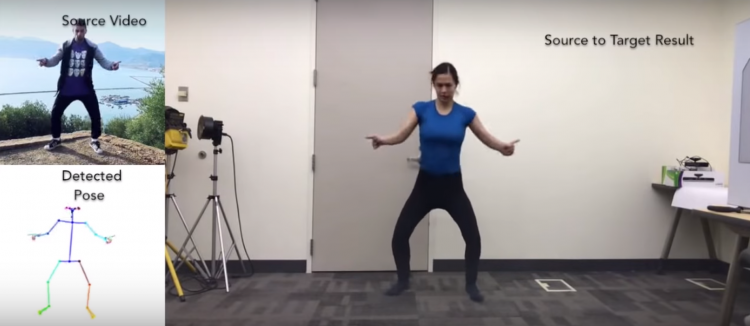

Expert Dance Moves Transferred to an Amateur Using AI Re-Targeted Source Motion Mapping

Researchers at UC Berkeley have developed a way to transfer an expert's dance moves onto an amateur on video, effectively giving the amateur the dancer's skill without any practice. The system detects the source dancer's movements and maps them onto an intermediate representation, a keypoint-based stick-figure pose. It then rescales that pose to match the target's body proportions and uses it to generate video of the target performing the same moves.
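To make the "proper proportion" step concrete, here is a minimal sketch of one way to retarget 2D keypoints. It assumes each pose is a list of `(x, y)` pixel coordinates and that one reference pose of each person standing upright is available. Every name here (`retarget_pose`, `pose_height`, `ground_point`) is hypothetical, and the simple height-ratio scaling is an illustrative assumption, not the researchers' exact normalization.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in image pixels; y grows downward


def pose_height(pose: List[Point]) -> float:
    """Vertical extent of a pose, used as a rough body-size measure."""
    ys = [y for _, y in pose]
    return max(ys) - min(ys)


def ground_point(pose: List[Point]) -> Point:
    """Horizontal center of the pose and its lowest point (assumed to be the feet)."""
    xs = [x for x, _ in pose]
    ys = [y for _, y in pose]
    return ((min(xs) + max(xs)) / 2, max(ys))


def retarget_pose(source: List[Point],
                  source_ref: List[Point],
                  target_ref: List[Point]) -> List[Point]:
    """Scale and shift a source-frame pose into the target subject's proportions.

    source_ref / target_ref are hypothetical reference poses of each person
    standing upright; their height ratio sets the scale, and their ground
    points anchor the translation.
    """
    scale = pose_height(target_ref) / pose_height(source_ref)
    sx, sy = ground_point(source_ref)
    tx, ty = ground_point(target_ref)
    return [(tx + (x - sx) * scale, ty + (y - sy) * scale) for x, y in source]


# Usage: a dancer twice the target's on-screen height, standing elsewhere in frame.
dancer_ref = [(100.0, 50.0), (100.0, 250.0)]
amateur_ref = [(300.0, 100.0), (300.0, 200.0)]
frame_pose = [(120.0, 50.0), (100.0, 250.0)]
print(retarget_pose(frame_pose, dancer_ref, amateur_ref))
```

Anchoring on the feet keeps the retargeted figure standing on the target's floor, while scaling by height shrinks or enlarges the motion to fit the target's body.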

In their paper, the researchers describe the approach this way:

> We pose this problem as a per-frame image-to-image translation with spatio-temporal smoothing. Using pose detections as an intermediate representation between source and target, we learn a mapping from pose images to a target subject's appearance. We adapt this setup for temporally coherent video generation including realistic face synthesis.
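One common way to get temporal coherence from a per-frame generator is to feed it the previous generated frame alongside the current pose image. The sketch below shows only that data flow. `generate_video` and `toy_generator` are hypothetical names, frames are tiny nested lists rather than real images, and the toy generator is a simple averaging function standing in for a trained neural network. It illustrates the general idea under these assumptions, not the researchers' implementation.

```python
from typing import Callable, List, Optional, Sequence

Frame = List[List[float]]  # toy grayscale image: rows of pixel values


def generate_video(pose_images: Sequence[Frame],
                   generator: Callable[[Frame, Optional[Frame]], Frame]) -> List[Frame]:
    """Translate pose images to output frames one at a time, conditioning
    each step on the previously generated frame to keep the video coherent."""
    outputs: List[Frame] = []
    previous: Optional[Frame] = None
    for pose in pose_images:
        frame = generator(pose, previous)
        outputs.append(frame)
        previous = frame
    return outputs


def toy_generator(pose: Frame, previous: Optional[Frame]) -> Frame:
    """Hypothetical stand-in for a trained network: averages the pose image
    with the previous output so frames change smoothly over time."""
    if previous is None:
        return [row[:] for row in pose]
    return [[0.5 * p + 0.5 * q for p, q in zip(pose_row, prev_row)]
            for pose_row, prev_row in zip(pose, previous)]


# Usage: an abrupt change in the pose sequence is softened in the output.
poses = [[[1.0]], [[0.0]], [[0.0]]]
print(generate_video(poses, toy_generator))
```

Because each output depends on the one before it, a sudden jump in the pose input is spread over several frames instead of appearing as flicker.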

The post Expert Dance Moves Transferred to an Amateur Using AI Re-Targeted Source Motion Mapping appeared first on Laughing Squid.