There's a literal elephant in machine learning's room

Machine learning image classifiers use context clues to help understand the contents of a room, for example, if they manage to identify a dining-room table with a high degree of confidence, that can help resolve ambiguity about other objects nearby, identifying them as chairs.

The downside of this powerful approach is that it means machine learning classifiers can be confounded by confusing, out-of-context elements in a scene, as is demonstrated in The Elephant in the Room, a paper from a trio of Toronto-based computer science academics.

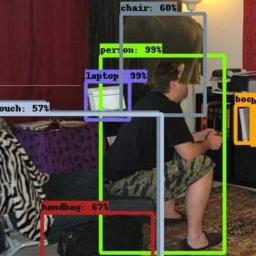

The authors show that computer vision systems that are able to confidently identify a large number of items in a living-room scene (a man, a chair, a TV, a sofa, etc) become fatally confused when they add an elephant to the room. The presence of the unexpected item throws the classifiers into dire confusion: not only do they struggle to identify the elephant, they also struggle with everything else in the scene, including items they were able to confidently identify when the elephant was absent.

It's a new wrinkle on the idea of adversarial examples, those minor, often human-imperceptible changes to inputs that can completely confuse machine-learning systems.

Contextual Reasoning:It is not common for currentobject detectors to explicitly take into account context ona semantic level, meaning that interplay between objectcategories and their relative spatial layout (or possiblyadditional) relations) are encoded in the reasoning processof the network. Though many methods claim to incorporatecontextual reasoning, this is done more in a feature-wiselevel, meaning that global image information is encodedsomehow in each decision. This is in contrast to olderworks, in which explicit contextual reasoning was quitepopular (see [3] for mention of many such works). Still, itis apparent that some implicit form of contextual reasoningdoes seem to take place. One such example is a persondetected near the keyboard (Figure 6, last column, lastrow). Some of the created images contain pairs of objectsthat may never appear together in the same image inthe training set, or otherwise give rise to scenes withunlikely configurations. For example, non co-occurringcategories, such as elephants and books, or unlikely spatial/ functional relations such as a large person (in terms ofimage area) above a small bus. Such scenes could causemisinterpretation due to contextual reasoning, whether itis learned explicitly or not.

The Elephant in the Room [Amir Rosenfeld, Richard Zemel and John K. Tsotsos/Arxiv]

Machine Learning Confronts the Elephant in the Room [Kevin Hartnett/Quanta]