Dual numbers, part 3

Last time on FAIC we discovered that when you "lift" a polynomial on doubles to a polynomial on duals and then evaluate it with x+1I, the "real" part of the returned dual exactly corresponds to the original polynomial at x; that's not a surprise. What is bizarre and fascinating is that the "epsilon" part of the dual exactly corresponds to the derivative of the polynomial at x!

First question: is this actually true for all polynomials, or did I trick you by choosing an example that just happened to work? Let's make a little chart. I'll make a bunch of power functions, and we'll list the function, its derivative, and what happens if we lift the function to dual numbers and evaluate it on an arbitrary dual number:

f(x) f'(x) f(a+bI) 1 0 1+0I x 1 a+bI x2 2x a2+2abI (The higher power I terms vanish) x3 3x2 a3+3a2bI ... xn nxn-1 an+nan-1bI ...

It sure looks like for every power function, f(a+bI) = f(a) + f'(a)bI. (Exercise: prove this by induction on the power.) And moreover, we could pretty easily show that this is true for all finite sums of these things, and all scalar multiplications, and therefore true for all polynomials.

Is it true for other functions? We know that f(x) = ex is defined as the limit of the infinite sum

1 + x + x2/2! + x3/3! ...

and that moreover, f(x) = f'(x). So what then is f(a+bI) ?

ea+bI = 1 + a+bI + (a2+2abI)/2! + (a3+3a2bI)/3! ... = (1 + a + a2/2! + ...) + (1 + a + a2/2! + ...)bI= ea + eabI= f(a) + f'(a)bI

It's nice that this falls right out of the definition; we could also have gotten there faster by using this property of exponentiation:

ea+bI = eaebI= ea(1 + bI) because the higher terms are all zero!= f(a) + f'(a)bI

I will not labour the point; using similar tricks we can show:

ln(a+bI) = ln(a) + (1/a)bIcos(a+bI) = cos(a) - sin(a)bIsin(a+bI) = sin(a) + cos(a)bI

And since yx = ex ln y, we can now compute arbitrary powers of dual numbers as well.

In every case we find that for a function f(x) defined on reals, the "lifted" function on duals is f(a+bI) = f(a) + f'(a)bI. Now, to be strictly accurate, we need to put some limitations on the function. For example, its derivative has to exist! In fact we will be more restrictive than that, and limit the functions we consider to the "analytic" functions, which have the properties necessary to be amenable to calculus: they must be smooth, their first, second, third, " derivatives must exist, and so on. We don't want to consider fractal curves or functions that come to sharp points, or have infinite slope at a point, or whatever. Just normal, everyday curves.

That all said, it still seems a little bit like magic that by lifting an ordinary function on doubles to a function on duals, we get a computation of both the function and its derivative at a point for almost free; there's only an O(1) additional cost in time and memory to compute the derivative. This stands in contrast to the more traditional way of numerically approximating a derivative by repeatedly evaluating nearby points until the slope converges, which can involve many repeated computations and results in an approximation. Is there some intuitive explanation of why this works?

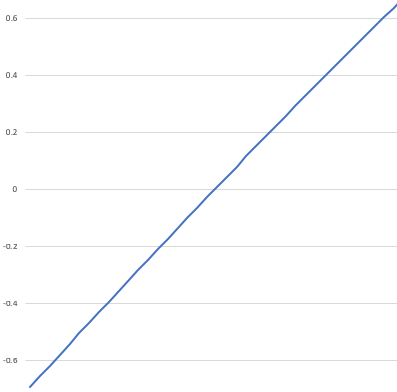

The intuition that works for me is: every analytic function is approximately a straight line if you restrict its range. Here's our polynomial again, but this time graphed from -0.8 to -0.7. It's nigh indistinguishable from a straight line over this range. But what straight line?

Well, the straight line that goes through (-0.75, f(-0.75)) and has slope of f'(-0.75) obviously! Thus it should not be a surprise that f(-0.75 + bI) should be f(-0.75) + f'(-0.75)bI. We're essentially waving our hands here and saying that I is so small that we can approximate the function in the neighbourhood of -0.75 as a straight line.

Next time on FAIC: Can we do some slightly more complicated calculus with this technique?