Open dataset of 1.78b links from the public web, 2016-2019

GDELT, a digital news monitoring service backed by Google Jigsaw, has released a massive, open set of linking data, containing 1.78 billion links in CSV, with four fields for each link: "FromSite,ToSite,NumDays,NumLinks."

The dataset has been purged of boilerplate links from headers and footers and is intended to help researchers analyze trends in linking behavior, in service of GDELT's mission to "support new theories and descriptive understandings of the behaviors and driving forces of global-scale social systems from the micro-level of the individual through the macro-level of the entire planet."

It's 396MB compressed, or 986MB uncompressed.

One of the most useful ways to use this dataset is to sort by the "NumDays" field to rank the top outlets linking to a given site or the top outlets that linked to another outlet. Using the NumDays field allows you to rank connections based on their longevity and filter out momentary bursts (such as a major story leading an outlet to run dozens and dozens of articles linking to an outside website for several days and then never linking to that website again).
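Here's a rough sketch of that ranking in Python, using only the standard library. It assumes the CSV starts with a header row naming the four fields exactly as above; the `top_linkers` helper and the sample rows are made up for illustration and are not part of GDELT's release.

```python
import csv
import heapq
import io


def top_linkers(rows, to_site, n=10):
    """Return up to n (FromSite, NumDays, NumLinks) tuples for rows whose
    ToSite matches to_site, ranked by NumDays, with NumLinks as tie-breaker."""
    matches = (
        (int(r["NumDays"]), int(r["NumLinks"]), r["FromSite"])
        for r in rows
        if r["ToSite"] == to_site
    )
    # nlargest keeps only n candidates in memory, so the file can be streamed.
    best = heapq.nlargest(n, matches)
    return [(site, days, links) for days, links, site in best]


# Invented sample data in the assumed layout (not real GDELT rows).
SAMPLE = """FromSite,ToSite,NumDays,NumLinks
a.example,target.example,300,450
b.example,target.example,3,90
c.example,other.example,500,900
d.example,target.example,120,121
"""

ranking = top_linkers(csv.DictReader(io.StringIO(SAMPLE)), "target.example", n=2)
print(ranking)
```

In the sample, `b.example` linked 90 times but on only 3 days, so it drops out of the top two: exactly the kind of momentary burst that sorting by NumDays is meant to filter. For the real file, you would pass a `csv.DictReader` over the opened CSV instead of the in-memory sample.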

The entire dataset was created with a single line of SQL in Google BigQuery, taking just 64.9 seconds and processing 199GB.

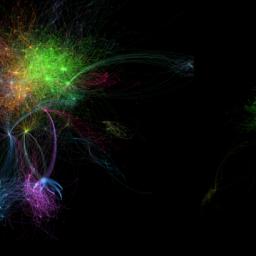

Who Links To Whom? The 30M Edge GKG Outlink Domain Graph April 2016 To Jan 2019 - The GDELT Project [GDELT Project]

(via Naked Capitalism)