Analog computers could bring massive efficiency gains to machine learning

In The Next Generation of Deep Learning Hardware: Analog Computing (Sci-Hub mirror), a trio of IBM researchers discuss how new materials could allow them to build analog computers that vastly improve energy and computing efficiency when training machine-learning networks.

Training models is incredibly energy-intensive: by one estimate, training a single large model can have the same carbon footprint as the manufacture and lifetime use of five automobiles.

The idea is to perform matrix multiplications by laying out a "physical array with the same number of rows and columns as the abstract mathematical object," so that at "the intersection of each row and column there will be an element with conductance G that represents the strength of connection between that row and column (i.e., the weight)."
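To spell out why such an array multiplies: in an idealized crossbar, applying a voltage V_i to each row makes the element at row i, column j pass a current G_ij · V_i (Ohm's law), and each column wire sums those currents (Kirchhoff's current law). The column currents I_j = Σ_i G_ij · V_i are therefore a matrix-vector product, computed in a single analog step. The NumPy sketch below simulates only that ideal case. The function names, the `g_max` parameter, and the choice to store a signed weight as the difference of two conductances are illustrative assumptions, not details from the paper, and the sketch ignores noise, wire resistance and limited device precision.

```python
import numpy as np


def crossbar_column_currents(G, V):
    """Ideal crossbar read-out (hypothetical helper).

    G[i, j]: conductance of the element at row i, column j.
    V[i]:    voltage applied to row i.
    Each element passes G[i, j] * V[i] (Ohm's law); each column wire
    sums its elements' currents (Kirchhoff's current law).
    """
    G = np.asarray(G, dtype=float)
    V = np.asarray(V, dtype=float)
    return V @ G  # I[j] = sum_i V[i] * G[i, j]


def weights_to_conductances(W, g_max):
    """Map signed weights onto two non-negative conductance arrays.

    Assumption: one common approach (not necessarily the paper's) is a
    differential pair, weight proportional to G_pos - G_neg.
    """
    W = np.asarray(W, dtype=float)
    w_max = np.max(np.abs(W))
    scale = g_max / w_max if w_max > 0 else 1.0
    G_pos = np.clip(W, 0.0, None) * scale
    G_neg = np.clip(-W, 0.0, None) * scale
    return G_pos, G_neg, scale


def analog_matvec(W, x, g_max=1e-6):
    """Compute x @ W the way an ideal differential crossbar would."""
    G_pos, G_neg, scale = weights_to_conductances(W, g_max)
    I = crossbar_column_currents(G_pos, x) - crossbar_column_currents(G_neg, x)
    return I / scale  # convert currents back to weight units


W = np.array([[1.0, -2.0],
              [0.5, 3.0]])
x = np.array([0.2, 0.1])
print(analog_matvec(W, x))  # matches x @ W up to floating-point rounding
```

The point of the design is that the multiply-accumulate happens in the physics of the array rather than in digital logic; the digital code here only stands in for what the wires and devices would do.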

Though these would be less efficient for general-purpose computing tasks, they would act as powerful hardware accelerators for one of the most compute-intensive parts of the machine learning process.

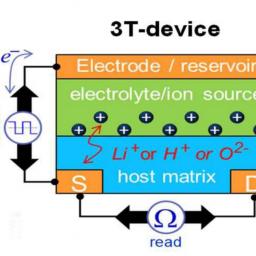

Electrochemical devices are a newcomer in the field of contenders for an analog array element for deep learning. The device idea, however, has been around for a long time and is related to the basic principle of a battery [52]. Compared to the previously discussed switches, which only required two terminals, this switch requires three terminals. The device structure, shown in Fig. 11, is a stack of an insulator that forms the channel between two contacts (source and drain), an electrolyte, and a top electrode (reference electrode). Proper bias between the reference electrode and the channel contacts will drive a chemical reaction at the host/electrolyte interface in which positive ions in the electrolyte react with the host, effectively doping the host material. Charge neutrality requires the free carriers to enter the channel through the channel contacts. If the connection between the reference electrode and the channel contacts is terminated after the write step, the channel will maintain its state of increased conductivity. The read process is simply the current flow between the two channel contacts, source and drain, with the reference electrode floating.

It has been shown [53] that almost symmetric switching can be achieved if the reference electrode is controlled with a current source. Regarding the switching requirements, the group obtained similar criteria [54] to those shown above. Voltage control of the reference electrode leads to strongly asymmetric behavior due to the buildup of an open-circuit voltage (V_OC) that can depend on the charge state of the host. If the reference electrode voltage compensates for V_OC, almost symmetric switching can be achieved as well. For a voltage-controlled analog array for deep learning, however, this device is not suited, since every cell would require an individual compensation depending on its conductivity. Possible solutions are low-V_OC material stacks.
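The argument about current versus voltage control can be illustrated with a deliberately crude toy model. Everything in it is a hypothetical assumption made for illustration, not a device model from the paper: the channel conductance changes in proportion to the charge driven through the reference electrode, the open-circuit voltage grows linearly with the doping state, and under voltage control the write current is the applied voltage minus V_OC, divided by a fixed resistance. The class name `ToyECRAMCell` and all parameter values are made up.

```python
from dataclasses import dataclass


@dataclass
class ToyECRAMCell:
    """Hypothetical toy model of a three-terminal electrochemical cell.

    Illustrative assumptions only (not from the paper):
      * write: channel conductance changes as dG = k * I * t, i.e. in
        proportion to the charge driven through the reference electrode;
      * the open-circuit voltage depends on the doping state: V_oc = a * G;
      * under voltage control the write current is (V - V_oc) / R.
    """
    G: float = 1.0  # channel conductance (arbitrary units)
    k: float = 0.1  # conductance change per unit charge
    a: float = 0.5  # open-circuit voltage per unit conductance
    R: float = 1.0  # resistance of the electrolyte/gate path

    def current_pulse(self, I, t=1.0):
        # Current source on the reference electrode: the injected charge
        # I * t does not depend on the cell's state.
        self.G += self.k * I * t

    def voltage_pulse(self, V, t=1.0, compensate=False):
        # Voltage source: the state-dependent V_oc opposes the drive,
        # unless the applied voltage is shifted by this cell's own V_oc.
        v_oc = self.a * self.G
        drive = V + v_oc if compensate else V
        I = (drive - v_oc) / self.R
        self.G += self.k * I * t

    def read(self, V_read):
        # Read: source-drain current with the reference electrode floating.
        return self.G * V_read


def up_down_steps(apply_pulse, start_G=1.0):
    """Return (up step, down step) for one +1 and one -1 pulse."""
    up, down = ToyECRAMCell(G=start_G), ToyECRAMCell(G=start_G)
    apply_pulse(up, +1.0)
    apply_pulse(down, -1.0)
    return up.G - start_G, down.G - start_G


print(up_down_steps(lambda c, s: c.current_pulse(s)))
print(up_down_steps(lambda c, s: c.voltage_pulse(s)))
print(up_down_steps(lambda c, s: c.voltage_pulse(s, compensate=True)))
```

With the default parameters, current pulses give equal and opposite steps (about +0.1 and -0.1), uncompensated voltage pulses give a down step three times larger than the up step (about +0.05 versus -0.15), and compensated voltage pulses are symmetric again. The compensation reads each cell's own `G` to compute its V_OC, which in this toy mirrors why per-cell compensation is impractical across a whole array.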

The Next Generation of Deep Learning Hardware: Analog Computing [Wilfried Haensch, Tayfun Gokmen, Ruchir Puri/Proceedings of the IEEE] (Sci-Hub mirror)

(via Four Short Links)