"Intellectual Debt": It's bad enough when AI gets its predictions wrong, but it's potentially WORSE when AI gets it right

Jonathan Zittrain (previously) is consistently a source of interesting insights that often arrive years ahead of their wider acceptance in tech, law, ethics and culture (2008's The Future of the Internet (and how to stop it) is surprisingly relevant 11 years later); in a new long essay on Medium (shorter version in the New Yorker), Zittrain examines the perils of the "intellectual debt" that we incur when we allow machine learning systems that make predictions whose rationale we don't understand, because without an underlying theory of those predictions, we can't know their limitations.

Zittrain cites Arthur C Clarke's third law, that "any sufficiently advanced technology is indistinguishable from magic" as the core problem here: like a pulp sf novel where the descendants of the crew of a generation ship have forgotten that they're on a space-ship and have no idea where the controls are, the system works great so long as it doesn't bump into anything the automated systems can't handle, but when that (inevitably) happens, everybody dies when the ship flies itself into a star or a black hole or a meteor.

In other words, while machine learning presents lots of problems when it gets things wrong (say, when algorithmic bias enshrines and automates racism or other forms of discrimination) at least we know enough to be wary of the predictions produced by the system and to argue that they shouldn't be blindly followed: but if a system performs perfectly (and we don't know why), then we come to rely on it and forget about it and are blindsided when it goes wrong.

It's the difference between knowing your car has faulty brakes and not knowing: both are bad, but if you know there's a problem with your brakes, you can increase your following distance, drive slowly, and get to a mechanic as soon as possible. If you don't know, you're liable to find out the hard way, at 80mph on the highway when the car in front of you slams to a stop and your brakes give out.

Zittrain calls this "intellectual debt" and likens it to "technology debt" -- when you shave a corner or incompletely patch a bug, then you have to accommodate and account for this defect in every technology choice you build atop the compromised system, creating a towering, brittle edifice of technology that all comes tumbling down when the underlying defect finally reaches a breaking point. The intellectual debt from machine learning means that we outsource judgment and control to systems that work...until they don't.

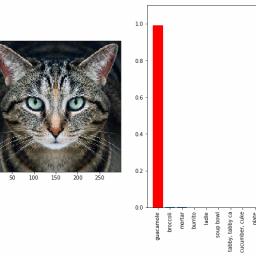

Zittrain identifies three ways in which our technology debts can come due: first, the widely publicized problem of adversarial examples, when a reliable machine learning classifier can be tricked into wildly inaccurate outputs by making tiny alterations that humans can't detect (think of making an autonomous vehicle perceive a stop-sign as a go faster sign in a way that is undetectable to the human eye). This allows for both malicious and inadvertent spoofing, which could cause serious public safety risks ("it may serve only to lull us into the chicken's sense that the kindly farmer comes every day with more feed - and will keep doing so"). It's the modern version of asbestos, another tool that works well but fails badly.

Second is the problem of multiplication of intellectual debt caused by machine learning systems whose bad guesses are used to train other machine learning systems, like when racially biased policing data is used to predict where the cops should look for crime, then the data from that activity is fed back into the system to refine the guesses.

Finally, Zittrain identifies a profound but subtle problem with "theory-free" systems that reliably direct our activities without our understanding them: that these accelerate the toxic, market-driven drive toward a "results" research agenda that focuses on applications, rather than the basic science that takes longer to pay off but has far-reaching implications when it does. As governments and firms shift their research agendas away from basic science and to incremental (but immediately useful) improvements on existing research, we rob our futures of the profound improvements that basic research yields.

Zittrain doesn't have much by way of solutions, apart from vigilance. One concrete proposal is to create backups of the data and algorithms used to produce these opaque-but-functional systems so that when they break down, we can inspect their inputs and try to figure out why. That's a particularly important one, given that so little of today's machine learning process can be independently replicated.

Most important, we should not deceive ourselves into thinking that answers alone are all that matters: indeed, without theory, they may not be meaningful answers at all. As associational and predictive engines spread and inhale ever more data, the risk of spurious correlations itself skyrockets. Consider one brilliant amateur's running list of very tight associations found, not because of any genuine association, but because with enough data, meaningless, evanescent patterns will emerge. The list includes almost perfect correlations between the divorce rate in Maine and the per capita consumption of margarine, and between U.S. spending on science, space, and technology and suicides by hanging, strangulation, and suffocation. At just the time when statisticians and scientists are moving to de-mechanize the use of statistical correlations, acknowledging that the production of correlations alone has led us astray, machine learning is experiencing that success of the former asbestos industry on the basis of exactly those kinds of correlations.

Intellectual Debt: With Great Power Comes Great Ignorance [Jonathan Zittrain/Medium]

The Hidden Costs of Automated Thinking [Jonathan Zittrain/The New Yorker]

(Thanks, JZ!)