Training bias in AI "hate speech detector" means that tweets by Black people are far more likely to be censored

More bad news for Google's beleaguered spinoff Jigsaw, whose flagship project is "Perspective," a machine-learning system designed to catch and interdict harassment, hate-speech and other undesirable online speech.

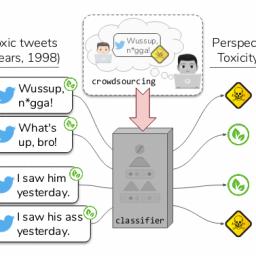

From the start, Perspective has been plagued by problems, but the latest one is a doozy: University of Washington experts have found that Perspective misclassifies inoffensive writing as hate speech far more frequently when the author is Black.

Specifically, candidate texts written in African American English (AAE) are 1.5x more likely to be rated as offensive than texts written in "white-aligned English."
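To make the "1.5x more likely" kind of figure concrete, here is a minimal sketch of how such a disparity ratio could be computed: the share of texts flagged as offensive in one dialect group, divided by the share flagged in a reference group. This is not the researchers' code. The function names, the group labels and the toy records are all hypothetical, and the counts are made up so that the arithmetic lands on a similar figure.

```python
from collections import defaultdict


def flag_rates(records):
    """Return the fraction of texts flagged as offensive for each group.

    `records` is an iterable of (group, is_flagged) pairs.
    """
    flagged = defaultdict(int)
    total = defaultdict(int)
    for group, is_flagged in records:
        total[group] += 1
        flagged[group] += int(is_flagged)
    return {group: flagged[group] / total[group] for group in total}


def disparity_ratio(records, group, reference):
    """Ratio of the flag rate for `group` to the flag rate for `reference`."""
    rates = flag_rates(records)
    return rates[group] / rates[reference]


# Made-up toy records, NOT data from the study: 3 of 10 texts flagged in
# one group, 2 of 10 flagged in the other.
toy_records = (
    [("aae", True)] * 3
    + [("aae", False)] * 7
    + [("white_aligned", True)] * 2
    + [("white_aligned", False)] * 8
)

ratio = disparity_ratio(toy_records, "aae", "white_aligned")
print(f"flag-rate ratio: {ratio:.2f}")
```

A ratio of 1.0 would mean both groups are flagged at the same rate. Note that a raw flag-rate ratio does not say whether the flags were correct; separating misclassification from genuine differences requires trusted labels, which is exactly what the study calls into question.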

The authors do a pretty good job of pinpointing the cause: the people who hand-labeled the training data for the algorithm were themselves biased, and systematically mislabeled AAE writing as offensive. And since machine learning models are no better than their training data (though they are often worse!), the bias in the data propagated through the model.

In other words, Garbage In, Garbage Out remains the iron law of computing and has not been repealed by the deployment of machine learning systems.

We analyze racial bias in widely-used corpora of annotated toxic language, establishing correlations between annotations of offensiveness and the African American English (AAE) dialect. We show that models trained on these corpora propagate these biases, as AAE tweets are twice as likely to be labelled offensive compared to others. Finally, we introduce dialect and race priming, two ways to reduce annotator bias by highlighting the dialect of a tweet in the data annotation, and show that it significantly decreases the likelihood of AAE tweets being labelled as offensive. We find strong evidence that extra attention should be paid to the confounding effects of dialect so as to avoid unintended racial biases in hate speech detection.

The Risk of Racial Bias in Hate Speech Detection [Maarten Sap, Dallas Card, Saadia Gabriel, Yejin Choi and Noah A. Smith/University of Washington]

(via Naked Capitalism)