Creating a "coercion resistant" communications system

Eleanor Saitta's (previously) 2016 essay "Coercion-Resistant Design" (which is new to me) is an excellent introduction to the technical countermeasures that systems designers can employ to defeat non-technical, legal attacks: for example, the threat of prison if you don't back-door your product.

Saitta's paper advises systems designers to contemplate ways to arbitrage both the rule of law and technical pre-commitments to make it harder for governments to force you to weaken the security of your product or compromise your users.

A good example of this is Certificate Transparency, a distributed system designed to catch Certificate Authorities that cheat and issue certificates to allow criminals or governments to impersonate popular websites like Google.

Certificate Transparency is enforced by major browsers such as Chrome and Safari, which refuse to trust certificates that lack cryptographically signed proof of having been logged. Certificate Authorities submit the certificates they issue to multiple public, append-only log servers run by different operators around the world, and anyone can monitor these servers to see if their own domain shows up in a certificate they don't recognize.

The upshot of this is that if you run a Certificate Authority and your government (or a criminal) says, "Issue a Google certificate so we can spy on people or we'll put you up against a wall and shoot you," you can say to them, "I will do this, but you should know that the gambit will be discovered within an hour, and within 48 hours, we will be out of business."

For an attacker to subvert this system, they'd need to compromise the browsers of everyone they send the fake certificate to (if they can do this, they don't need fake certs!), or hack multiple, well-guarded servers around the world, or get the governments of all the countries where those servers are located to order their operators to secretly subvert them.

A related subject is the Ulysses Pact, in which you precommit to a course of action in a moment of strength to guard against a future moment of weakness (like throwing away your Oreos when you start your diet so that future-you won't gorge yourself on them at 2AM). For example, you might build "binary transparency" into your update mechanism, so that if you are forced to send a poisoned update to one of your users, the user's own system will detect that they've gotten a different update from other users and sound the alarm. The only way to switch this off is to send them a poisoned update, and when you do that, the alarm goes off.
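Here is a minimal sketch of the client-side check behind binary transparency, assuming the client can somehow learn the digests other users received for the same release. The function names and the `quorum` parameter are hypothetical, and a real deployment would more likely lean on a public append-only log than on ad hoc peer reports.

```python
import hashlib
from collections import Counter


def update_digest(update_bytes: bytes) -> str:
    """SHA-256 digest of the update package as received."""
    return hashlib.sha256(update_bytes).hexdigest()


def looks_targeted(my_update: bytes, peer_digests: list[str], quorum: int = 3) -> bool:
    """Return True when at least `quorum` peers agree on a digest that differs from ours.

    `peer_digests` is assumed to hold the digests other users report for the
    same release; how they are collected is outside this sketch.
    """
    if not peer_digests:
        return False  # nothing to compare against, so no evidence either way
    digest, count = Counter(peer_digests).most_common(1)[0]
    return count >= quorum and digest != update_digest(my_update)


good = b"release 1.2.3"
peers = [update_digest(good)] * 4
print(looks_targeted(good, peers))                         # False
print(looks_targeted(b"release 1.2.3 + backdoor", peers))  # True
```

The point of the design is that the comparison lives in the client the user already has, so the vendor cannot quietly disable it without shipping the very update that trips it.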

Other measures are legal: you can avoid putting employees or assets or partners in countries whose laws allow the government to coerce you into compromising your security. Or you can put part of the company outside of those countries, and require things like commit-signing and review-signing before new builds go live.

Or you can split authorization -- signing keys, legal authority, both -- across multiple countries, either to arbitrage more favorable human rights regimes in some countries, or just to make a government attacker's job harder (if a government has to convince a hostile foreign government to cooperate in order to attack you, you might be safer).

A common legal form of Ulysses Pact is the warrant canary, in which you precommit to publishing a tally of how many secret warrants have been served on you. You start at zero, but once you get an order to undertake secret spying, you just stop publishing that tally.

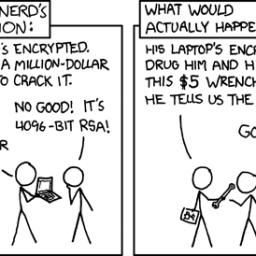

The warrant canary highlights one weakness of coercion resistance: without the rule of law, many of these measures fail (see also: rubber hose cryptanalysis, whereby an attacker ties you to a chair and hits you with a rubber hose until you cough up the keys).

The premise of a warrant canary is that governments often have laws that allow them to silence you, but not laws that give them the power to force you to lie. So they can force you to stop publishing your warrant canary, but they can't force you to publish a false report.

But this is fragile: ostensibly democratic countries like Australia have banned using warrant canaries to reveal secret court orders.

Ultimately, cryptography and information security are important not because they allow us to build a separate realm in which corrupt, illegitimate states can never reach us, but because they allow us to keep the forces of reaction and corruption at bay temporarily, while we organize movements to make states pluralistic, just and accountable to the governed.

In practice, a system like this picks up from the segmented binary signing we already discussed. First, instead of a single key per team, we'd like to split each team's signature into an n-of-m threshold signature scheme. This ensures that no single user can provide their team-role's approval for an update. Handling key revocation is also important, and in a 2-of-3 key system, the remaining two keys can generate a trustable revocation for a compromised key and add a replacement key. Without this, you're stuck having a known-unsafe key sign its own replacement, a process that cannot happen securely. It may be advisable to have at least four keys, so that even if both keys involved in one step of a signing operation are judged compromised the system can still recover.
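To make the quorum and revocation logic concrete, here is a rough sketch. It is not a real threshold signature scheme: HMAC-SHA256 stands in for per-key signatures purely so the example runs on the standard library, and `TeamKeyring`, `sign` and `rotation_message` are hypothetical names. A real system would use public-key signatures, so that verifiers never hold signing secrets.

```python
import hashlib
import hmac


def sign(key: bytes, message: bytes) -> bytes:
    """Stand-in 'signature': an HMAC tag. Assumption for illustration only."""
    return hmac.new(key, message, hashlib.sha256).digest()


def rotation_message(bad_id: str, new_id: str, new_key: bytes) -> bytes:
    """The statement the remaining key holders sign to revoke and replace a key."""
    return f"revoke:{bad_id}|add:{new_id}|".encode() + hashlib.sha256(new_key).digest()


class TeamKeyring:
    def __init__(self, keys: dict[str, bytes], threshold: int):
        self.keys = dict(keys)
        self.threshold = threshold

    def _valid_signers(self, message: bytes, signatures: dict[str, bytes]) -> set[str]:
        return {
            key_id
            for key_id, tag in signatures.items()
            if key_id in self.keys
            and hmac.compare_digest(tag, sign(self.keys[key_id], message))
        }

    def approves(self, message: bytes, signatures: dict[str, bytes]) -> bool:
        """True when at least `threshold` distinct team keys signed the message."""
        return len(self._valid_signers(message, signatures)) >= self.threshold

    def rotate(self, bad_id: str, new_id: str, new_key: bytes,
               signatures: dict[str, bytes]) -> bool:
        """Replace a compromised key; the compromised key's own vote never counts."""
        if bad_id not in self.keys:
            return False
        message = rotation_message(bad_id, new_id, new_key)
        signers = self._valid_signers(message, signatures) - {bad_id}
        if len(signers) < self.threshold:
            return False
        del self.keys[bad_id]
        self.keys[new_id] = new_key
        return True
```

With three keys and a threshold of two, any two uncompromised holders can approve a release or retire the third key, which is the recovery property the excerpt describes.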

This set of keys (three parties, each with a 2-of-4 threshold signature key set) is used to sign both the update itself and a metadata file for the update (the contents of which are duplicated inside the update package) which contains a timestamp, along with file hashes and download locations. Clients will refuse to install an update with a timestamp older than their current version, preventing replay attacks, and updates can also be expired if this proves necessary. The metadata file is signed offline by all parties, like the build, but the parties also operate a chained secure timestamping service with lower-value, online keys. The timestamping service regularly re-signs the metadata file with a current timestamp and an additional set of signatures, ensuring that clients can determine if the update offer they're presented with is recent and allowing them to detect cases where an adversary is blocking their access to the update service but has not compromised the timestamp keys. The continued availability of the file also acts as an indicator that all three segments of the organization are willing to work together, and functions as a form of warrant canary, although an explicit canary statement could also be added. In some cases, there is precedent for forcing service providers to continue "regular" or automatic operations that, if stopped, could reveal a warrant. Given this, generating some of the contents of the timestamp file by hand and on an irregular but still at least daily basis might be useful (call it, say, the organization's blog). The timestamp portion of this scheme does depend on client clock accuracy. A variation is possible which does not require this, but it loses the ability to detect some "freeze" attacks against update systems.
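The two timestamp checks in that paragraph can be sketched roughly as follows. Signature verification is deliberately left out, and the field names, the one-day staleness window and the returned status strings are all assumptions made for illustration rather than anything specified in the essay.

```python
from dataclasses import dataclass

MAX_STALENESS_SECONDS = 24 * 60 * 60  # assumed window; the essay suggests at least daily re-signing


@dataclass(frozen=True)
class Metadata:
    version_timestamp: int  # when the update was signed offline (Unix seconds)
    resigned_at: int        # most recent re-signature by the online timestamping service


def check_offer(offer: Metadata, installed_timestamp: int, now: int,
                max_staleness: int = MAX_STALENESS_SECONDS) -> str:
    """Classify an update offer; assumes signatures were already verified elsewhere."""
    if offer.version_timestamp < installed_timestamp:
        # An older update than the one we run: a replay or rollback attempt.
        return "reject: older than installed version"
    if now - offer.resigned_at > max_staleness:
        # No fresh re-signature: we may be cut off from the update service
        # ("freeze" attack), or the parties may have stopped cooperating.
        return "warn: metadata is stale"
    return "ok"
```

Passing `now` in explicitly keeps the sketch testable, and it also makes visible the dependence on an accurate client clock that the excerpt flags.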

Metadata files are among other things attestations to the existence of signed updates. To this end, it's important that all users see all of them so the set of metadata files forms a record of every piece of code the organization has shipped. Every metadata file should contain the hash of the previous file, and clients should refuse to trust a metadata file that refers to a previous file they haven't seen (the current metadata file chain will need to ship with initial downloads). If a client sees multiple metadata files that point to the same previous version, they should distrust all of them, preventing forks. To ensure there is a global consensus among clients as to the set of metadata files in existence, instead of putting them out on a mirror the development team should upload files into a distributed hash table maintained by the clients for this purpose. This ensures all clients see all attested signatures, and will only install updates they know other clients have also seen. Clients can even refuse to install updates until a quorum of their neighbors have already trusted the update, although some clients will have to go first, possibly randomly. Clients may connect to their DHT neighbors via Tor, to make targeting of Sybil attacks more difficult. If this is done correctly, it will be impossible for the organization to make the update system hide the existence of a signed update.
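The chaining and fork rules above might look something like this on the client side. It is only a sketch: the metadata is modeled as a plain dictionary with a hypothetical `prev` field, hashing canonical JSON is an arbitrary choice, and signature checks, the DHT and the neighbor quorum are all omitted.

```python
import hashlib
import json


def file_hash(meta: dict) -> str:
    """Hash a metadata file; canonical JSON is an assumption for this sketch."""
    return hashlib.sha256(json.dumps(meta, sort_keys=True).encode()).hexdigest()


class MetadataChain:
    def __init__(self, genesis: dict):
        # The initial chain ships with the first download; here it is one file.
        self.seen = {file_hash(genesis)}
        self.children: dict[str, set[str]] = {}  # previous hash -> hashes that point at it
        self.distrusted: set[str] = set()

    def is_trusted(self, h: str) -> bool:
        return h in self.seen and h not in self.distrusted

    def offer(self, meta: dict) -> str:
        h = file_hash(meta)
        prev = meta.get("prev")
        if prev is None or not self.is_trusted(prev):
            return "reject: unknown or distrusted previous file"
        siblings = self.children.setdefault(prev, set())
        siblings.add(h)
        self.seen.add(h)
        if len(siblings) > 1:
            # Two different files claim the same predecessor: distrust all of them.
            self.distrusted |= siblings
            return "reject: fork detected"
        return "ok"
```

A file accepted earlier becomes distrusted as soon as a sibling appears, which is what stops an organization from handing one user a private branch of the update history without everyone else eventually noticing.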

Coercion-Resistant Design [Eleanor Saitta/Dymaxion]

(via Four Short Links)

(Image: XKCD)