AI is helping scholars restore ancient Greek texts on stone tablets

Machine learning and AI may be deployed on such grand tasks as finding exoplanets and creating photorealistic people, but the same techniques also have some surprising applications in academia: DeepMind has created an AI system that helps scholars understand and recreate fragmentary ancient Greek texts on broken stone tablets.

These clay, stone or metal tablets, inscribed as much as 2,700 years ago, are invaluable primary sources for history, literature and anthropology. They're covered in letters, naturally, but often the millennia have not been kind and there are not just cracks and chips but entire missing pieces that may comprise many symbols.

Such gaps, or lacunae, are sometimes easy to complete: If I wrote "the sp_der caught the fl_," anyone could tell you that it's actually "the spider caught the fly." But what if it were missing many more letters, and in a dead language, to boot? Not so easy to fill in the gaps.

Doing so is a science (and art) called epigraphy, and it involves both an intuitive understanding of these texts and knowledge of others to add context; one can make an educated guess at what was once written based on what has survived elsewhere. But it's painstaking and difficult work - which is why we give it to grad students, the poor things.

Coming to their rescue is a new system created by DeepMind researchers that they call Pythia, after the oracle at Delphi who translated the divine word of Apollo for the benefit of mortals.

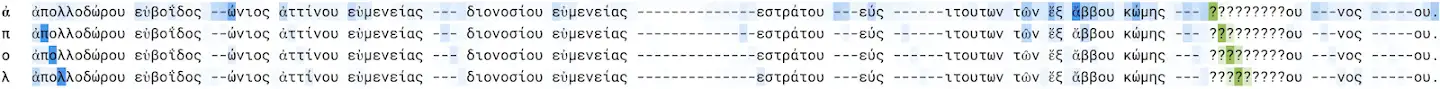

The team first created a "nontrivial" pipeline to convert the world's largest digital collection of ancient Greek inscriptions into text that a machine learning system could understand. From there it was just a matter of creating an algorithm that accurately guesses sequences of letters - just like you did for the spider and the fly.
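To make the "guess the missing letters" idea concrete, here is a minimal sketch in Python. It is not Pythia's model: it swaps in a simple counting approach that remembers which character most often follows each two-character window in a tiny, made-up English corpus, and it only looks at the text to the left of a gap. The names `train` and `fill_gaps`, the `_` gap marker, and the corpus are all assumptions made for illustration.

```python
from collections import Counter, defaultdict


def train(corpus, context=2):
    """Count which character follows each `context`-character window."""
    counts = defaultdict(Counter)
    for text in corpus:
        padded = " " * context + text  # pad so the first characters have a window
        for i in range(context, len(padded)):
            counts[padded[i - context:i]][padded[i]] += 1
    return counts


def fill_gaps(text, counts, context=2, gap="_"):
    """Fill each gap, left to right, with the most frequent follower of its left context."""
    out = []
    for ch in text:
        if ch == gap:
            window = (" " * context + "".join(out))[-context:]
            followers = counts.get(window)
            # Leave the gap in place if this window was never seen in training.
            ch = followers.most_common(1)[0][0] if followers else gap
        out.append(ch)
    return "".join(out)


# Hypothetical toy corpus; the real system was trained on Greek inscriptions.
corpus = ["the spider caught the fly", "the spider spun a web", "a fly flew by"]
model = train(corpus)
restored = fill_gaps("the sp_der caught the fl_", model)
print(restored)
```

A counting model like this breaks down quickly once gaps span many letters, which is the case the article describes as hard and where a learned model with wider context would be expected to help.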

PhD students and Pythia were both given ground-truth texts with artificially excised portions. The students completed the text with about 43 percent accuracy - not so hot, but this is difficult work and wouldn't normally be tested like this. But Pythia achieved approximately 70 percent accuracy, considerably better than the students - and what's more, the correct interpretation was in its top 20 suggestions 73 percent of the time.

(Update: The previous paragraph originally misstated the tested accuracy; I misread the error rate as the accuracy rate, essentially inverting the results. Entirely my mistake. I thank DeepMind's Yannis Assael for alerting me to this and apologize to him and his co-authors for basically saying Pythia was less than half as good as it is.)
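The difference between an error rate and an accuracy rate, and what "in its top 20 suggestions" means, can be shown with a short Python sketch. It assumes a simplified scoring in which the prediction and the ground truth have the same length and are compared position by position; the researchers' actual scoring procedure is not described here and may differ. The function names and the sample strings are hypothetical.

```python
def char_error_rate(predicted, truth):
    """Fraction of character positions where the prediction differs from the truth."""
    if len(predicted) != len(truth):
        raise ValueError("this sketch only compares strings of equal length")
    wrong = sum(p != t for p, t in zip(predicted, truth))
    return wrong / len(truth)


def top_k_hit(candidates, truth, k=20):
    """True if the ground truth appears among the first k ranked candidates."""
    return truth in candidates[:k]


# Illustrative values only, not data from the study.
truth = "spider"
prediction = "spader"

error = char_error_rate(prediction, truth)  # one of six characters is wrong
accuracy = 1 - error                        # accuracy is the complement of the error rate

ranked = ["spader", "spider", "spyder"]     # hypothetical ranked suggestions
hit_at_1 = top_k_hit(ranked, truth, k=1)
hit_at_2 = top_k_hit(ranked, truth, k=2)
```

Reading one number as the other flips the picture: a 30 percent error rate corresponds to 70 percent accuracy, not the other way around.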

The system isn't good enough to do this work entirely on its own, but it doesn't need to. It's based on the efforts of humans (how else could it be trained on what's in those gaps?) and it will augment them, not replace them. And it sounds like grad students could use a hand anyway.

Pythia's suggestions may not always be exactly on target, but it could easily help someone struggling with a tricky lacuna by giving them some options to work from. Taking a bit of the cognitive load off these folks may lead to increases in speed and accuracy in taking on remaining unrestored texts.

The project was a joint effort between Oxford University and DeepMind, by Assael, Thea Sommerschield, and Jonathan Prag. Their paper on Pythia is available to read here, and some of the software they developed to create it is in this GitHub repository.