Echodyne steers its high-tech radar beam on autonomous cars with EchoDrive

Echodyne set the radar industry on its ear when it debuted its pocket-sized yet hyper-capable radar unit for drones and aircraft. But these days all the action is in autonomous vehicles - so the company reinvented its technology to make a unique sensor that doesn't just see things but can communicate intelligently with the AI behind the wheel.

EchoDrive, the company's new product, is aimed squarely at AVs, looking to complement lidar and cameras with automotive radar that's as smart as you need it to be.

The chief innovation at Echodyne is the use of metamaterials, or highly engineered surfaces, to create a radar unit that can direct its beam quickly and efficiently anywhere in its field of view. That means that it can scan the whole horizon quickly, or repeatedly play the beam over a single object to collect more detail, or anything in between, or all three at once for that matter, with no moving parts and little power.

But the device Echodyne created for release in 2017 was intended for aerospace purposes, where radar is more widely used, and its capabilities were suited for that field: a range of kilometers but a slow refresh rate. That's great for detecting and reacting to distant aircraft, but not at all what's needed for autonomous vehicles, which are more concerned with painting a detailed picture of the scene within a hundred meters or so.

"They said they wanted high-resolution, automotive bands [i.e. radiation wavelengths], high refresh rates, wide field of view, and still have that beam-steering capability - can you build a radar like that?" recalled Echodyne co-founder and CEO Eben Frankenberg. "And while it's taken a little longer than I thought it would, the answer is yes, we can!"

The EchoDrive system meets all the requirements set out by the company's automotive partners and testers, with refresh rates of up to 60 Hz, higher resolution than any other automotive radar and all the other goodies.

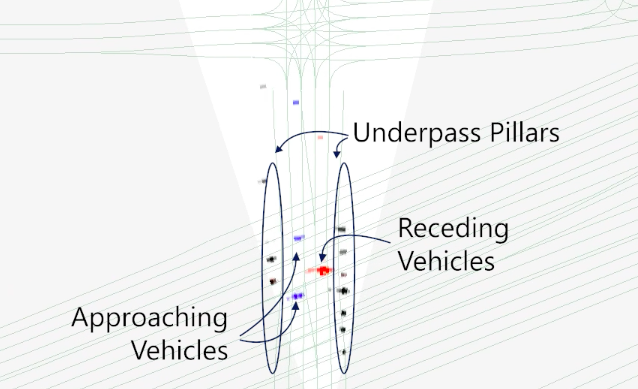

An example of some raw data - note that Doppler information lets the system tell which objects are moving in which direction.

The company is focused specifically on level 4-5 autonomy, meaning its radar isn't intended for basic features like intelligent cruise control or collision detection. The radar units on cars today are intended for exactly those features, and efforts to juice them up into more serious sensors are dubious, Frankenberg said.

"Most ADAS [advanced driver assist system] radars have relatively low resolution in a raw sense, and do a whole lot of processing of the data to make it clearer and make it more accurate as far as the position of an object," he explained. "The level 4-5 folks say, we don't want all that processing because we don't know what they're doing. They want to know you're not doing something in the processing that's throwing away real information."

More raw data, and less processing - but Echodyne's tech offers something more. Because the device can change the target of its beam on the fly, it can do so in concert with the needs of the vehicle's AI.

Say an autonomous vehicle's brain has integrated the information from its suite of sensors and can't be sure whether an object it sees a hundred meters out is a moving or stationary bicycle. It can't tell its regular camera to get a better image, or its lidar to send more lasers. But it can tell Echodyne's radar to focus its beam on that object for a bit longer or more frequently.

The two-way conversation between sensor and brain, which Echodyne calls cognitive radar or knowledge-aided measurement, isn't really an option yet - but it will have to be if AVs are going to be as perceptive as we'd like them to be.
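To make that exchange concrete, here is a minimal Python sketch of how a vehicle's planner might ask a steerable radar to spend more of each frame on objects it is unsure about. Everything in it is hypothetical and for illustration only: `FocusRequest`, `allocate_dwells`, the idea of a fixed number of beam "dwells" per frame, and the 50/50 default split are assumptions, not Echodyne's actual interface or scheduling method.

```python
from dataclasses import dataclass


@dataclass
class FocusRequest:
    """Hypothetical message from the planner to the radar."""
    azimuth_deg: float  # bearing of the object the planner is unsure about
    weight: float       # relative urgency assigned by the planner


def allocate_dwells(frame_dwells, requests, scan_fraction=0.5):
    """Split one frame's beam dwells between a background sweep and focus requests.

    Returns (scan_dwells, focus), where focus maps each requested azimuth
    to the number of dwells granted to it in this frame.
    """
    # Ignore requests with no urgency; with nothing to focus on, sweep everything.
    active = [r for r in requests if r.weight > 0]
    if not active:
        return frame_dwells, {}

    # Reserve part of the frame so the radar never stops watching the whole scene.
    focus_budget = frame_dwells - int(frame_dwells * scan_fraction)
    total_weight = sum(r.weight for r in active)

    # Share the focus budget in proportion to each request's weight.
    focus = {
        r.azimuth_deg: int(focus_budget * r.weight / total_weight)
        for r in active
    }

    # Dwells lost to rounding go back to the background sweep.
    scan_dwells = frame_dwells - sum(focus.values())
    return scan_dwells, focus


if __name__ == "__main__":
    # The planner is unsure about a possible bicycle at +10 degrees
    # and, less urgently, about something at -5 degrees.
    requests = [FocusRequest(10.0, 3.0), FocusRequest(-5.0, 1.0)]
    print(allocate_dwells(100, requests))
```

The point of the sketch is the division of labor: the radar only executes a dwell budget, while the decision about what deserves extra attention stays with the planner, which is the one component that sees every sensor's output.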

Some companies, Frankenberg pointed out, are making the sensors themselves responsible for deciding which objects or regions need more attention - a camera may very well be able to decide where to look next in some circumstances. But making that call within a fraction of a second, while weighing the other resources available to an AV, is something only the brain can do.

EchoDrive is currently being tested by Echodyne's partner companies, which it would not name but which Frankenberg indicated are running level 4+ AVs on public roads. Given the growing number of companies that fit those once-narrow criteria, it would be irresponsible to speculate on their identities, but it's hard to imagine an automaker not getting excited by the advantages Echodyne claims.