Bosch Gets Smartglasses Right With Tiny Eyeball Lasers

My priority at CES every year is to find futuristic new technology that I can get excited about. But even at a tech show as large as CES, this can be surprisingly difficult. If I'm very lucky, I'll find one or two things that really blow my mind. And it almost always takes a lot of digging, because the coolest stuff is rarely mentioned in keynotes or put on display. It's hidden away in private rooms because it's still a prototype that likely won't be ready for the CES spotlight for another year or two.

Deep inside Bosch's CES booth, I found something to get excited about. After making a minor nuisance of myself, Bosch agreed to give me a private demo of its next-generation Smartglasses, which promise everything that Google Glass didn't quite manage to deliver.

A few weeks before CES, Bosch teased the new Smartglasses prototype with this concept video:

Lightweight and slim, with a completely transparent display that's brightly visible to you and invisible to anyone else, the smart glasses in this video seem like something that could be really useful without making you feel like a huge dork. But concept videos are just that, and until I got a chance to try them out for myself, it was hard to know how excited I should be.

Photo: Evan Ackerman/IEEE Spectrum Prototype technology demonstrator for Bosch Smartglasses

The reason Bosch was somewhat reluctant to squeeze me in for a demo is that you can't just slap the prototype Smartglasses on your face and fire them up; they almost certainly won't work unless you get a custom fitting. Here's Brian Rossini, senior project manager for the Smartglasses project at Bosch, helping me get fitted for my pair:

Photo: Evan Ackerman/IEEE Spectrum Brian Rossini from Bosch adjusts the prototype Smartglasses.

A custom fitting is necessary because of how the glasses work. Rather than projecting an image onto the lenses of the glasses themselves, the Bosch "Light Drive" uses a tiny microelectromechanical mirror array to direct a trio of lasers (red, green, and blue) across a transparent holographic element embedded in the right lens, which then reflects the light into your right eye and paints an image directly onto your retina. For this to work, the lasers have to pass cleanly through your pupil, which means that the frame and lenses have to be carefully fitted to the geometry of your face (frames with prescription lenses work fine).

If anything gets misaligned, you won't see the image. The whole fitting process took just a few minutes, and the prototype glasses were reasonably forgiving: you could wiggle them a bit or reposition them slightly without causing the image to disappear. The downside, of course, is that buying a pair will involve extra steps, which presents a minor hurdle to adoption.

As soon as the contextual display is turned on, you see a bright, sharp, colorful image hanging right out in front of you. The concept video doesn't really do it justice; it looks great. It takes up just a small part of your total field of view, so it's not overwhelming, but it's large enough to contain some easily visible text and icons.

The system is not meant to depict Magic Leap-style giant animated whales or whatever, which I'm totally fine with. It's intended to display little bits of helpful information when you need them. A side effect of the retinal projection system that I found useful is that if you look away by moving your eyes so that your pupil is no longer aligned with the lasers, the image simply disappears. So, it'll be there if you're looking mostly straight ahead, and it'll vanish when you're not.

The concept video is quite an accurate representation of how the glasses look when you're using them. I also managed to get this photo with my cellphone by holding it up to the glasses and putting the camera more or less where my pupil would be:

Photo: Evan Ackerman/IEEE Spectrum This photo, taken by a smartphone looking through the glasses, shows an AR image.

It looks way, way better than this when you have the glasses on, but this picture at least shows that you're not being fooled by a concept video.

I won't spend too much time talking about how the Smartglasses can connect to your smartphone, be controlled by touch or through an accelerometer, and all that other practical integration stuff. The concept video mostly covers it, and it's easy to imagine how it could all work together in a production system with a robust content ecosystem behind it.

What I do want to talk about is how this entire system fundamentally screws with your brain in a way that I can barely understand, illustrated by seemingly straightforward questions like "how do I adjust the focus of the image?" and "what if I want the image to seem closer or farther away from me?" It took the in-person demo, a string of follow-up emails, and ultimately a phone call to Germany for Brian and me to come up with the following explanation.

Here's the trick: because the Smartglasses are using lasers to paint an AR image directly onto your retina, that image is always in focus. There are tiny muscles in our eyes that we use to focus on things, and no matter what those muscles are doing (whether they're focused on something near or far), the AR image doesn't get sharper or blurrier. It doesn't change at all.

Furthermore, since only one eye is seeing the image, there's no way for your eyes to converge on that image to estimate how far in front of you it is. Being able to see something that appears to be out there in the world but that has zero depth cues isn't a situation that our brains are good at dealing with, which causes some weird effects.

For example, text projected by the Smartglasses can be aligned with text that's large and far away, like a billboard, or just as easily with text that's small and close, like a magazine cover. The displayed text will then appear similar in size to either the billboard or the magazine, and you can convince yourself that the displayed text must therefore be at the same distance as whatever you've aligned it with. This is because the displayed text isn't actually being projected at any specific distance and doesn't have any specific size, which, again, isn't a thing that our brains are really able to process. But because the image that we see through the Smartglasses is always in focus, the focal plane of the image just ends up being the same as the focal plane of whatever else we happen to be looking at.

Photo: Brian Rossini The author testing out Smartglasses. A slight reflection is visible in photos, but not in person.

Humans with two eyes can get a sense of where the focal plane of an object is through what's called convergence: we can estimate how far away something is from how much our eyes have to converge to put it in the center of our vision. When you look at something close to you, your left and right eyes both nudge inward toward your nose, but when you look at something far away, your eyes point straight ahead, parallel to each other.

Since the Smartglasses are only projecting into one eye, your brain can't use convergence to determine the focal plane of the AR image, but what the Smartglasses can do (in software) is nudge the image to the left, which will cause your right eye to have to converge a little bit to keep it centered in your field of view.

By adjusting the image placement in this way, and consequently adjusting how much convergence our eyes experience when we look at the displayed image, the Smartglasses can make it seem like the image is being projected on a focal plane at a specific distance in front of you.

The pair of glasses I tried on, for example, were calibrated so that the displayed image was directly in my right eye's line of sight when my eyes were converging on a focal plane about 1.3 meters in front of me, which is approximately the distance at which someone I was talking to might stand. This calibration allowed me to carry on a conversation with Brian while getting information in the display without having to move my eyes at all.

So, there was no glancing back and forth out into space every time a notification would pop up, which would be a bit unnerving to whomever you're talking to. And of course, it's easy to recalibrate the image's placement for other tasks: if you're riding your bike, you'd likely nudge the image to the right, so your eyes don't have to converge as much when looking at the image, making it seem like it's farther out in front of you and preserving your ability to focus on the world.
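To put rough numbers on this convergence trick, here is a minimal Python sketch of the underlying geometry. It assumes a typical adult interpupillary distance of about 63 mm (a generic value, not a Bosch figure) and a target straight ahead on the midline between your eyes, and it uses simple right-triangle trigonometry. The function name is hypothetical; this only illustrates how much each eye turns inward at a given distance, not how Bosch's software actually positions the image.

```python
import math

# Assumption: a typical adult interpupillary distance (IPD) of about 63 mm.
# This is a generic value, not a figure from Bosch.
ASSUMED_IPD_M = 0.063


def per_eye_vergence_deg(distance_m: float, ipd_m: float = ASSUMED_IPD_M) -> float:
    """Return how far (in degrees) each eye rotates inward from straight ahead
    to fixate a point on the midline at distance_m.

    Right-triangle geometry: half the IPD is the opposite side and the
    viewing distance is the adjacent side.
    """
    if distance_m <= 0:
        raise ValueError("distance_m must be positive")
    return math.degrees(math.atan((ipd_m / 2) / distance_m))


if __name__ == "__main__":
    # Compare the 1.3 m conversational distance from the demo calibration
    # with a few nearer and farther targets.
    for d in (0.4, 1.3, 5.0, 20.0):
        print(f"{d:5.1f} m -> {per_eye_vergence_deg(d):.2f} degrees per eye")
```

Under these assumptions, fixating at 1.3 meters turns each eye inward by roughly 1.4 degrees, while a target 5 meters away needs only about 0.36 degrees, and the angle shrinks toward zero (parallel gaze) as distance grows. That is why nudging the image sideways by a small angle is enough to change where it seems to sit.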

"It might be difficult to explain all of this to your readers," Brian remarked toward the end of our 35-minute phone call, and as might be obvious by now, he's absolutely right. Brian said it's taken him years to understand how technology, brains, and eyeballs collaborate for effective assistive, augmented, or virtual reality experiences.

It's definitely true that for the first 10 or so minutes of wearing the glasses, your brain will be spending a lot of time trying to figure out just what the heck is going on. But after that, it just works, and you stop thinking about it (or that's how it went for me, anyway). This is just an experience that you and your brain need to have together, and then it'll all make sense.

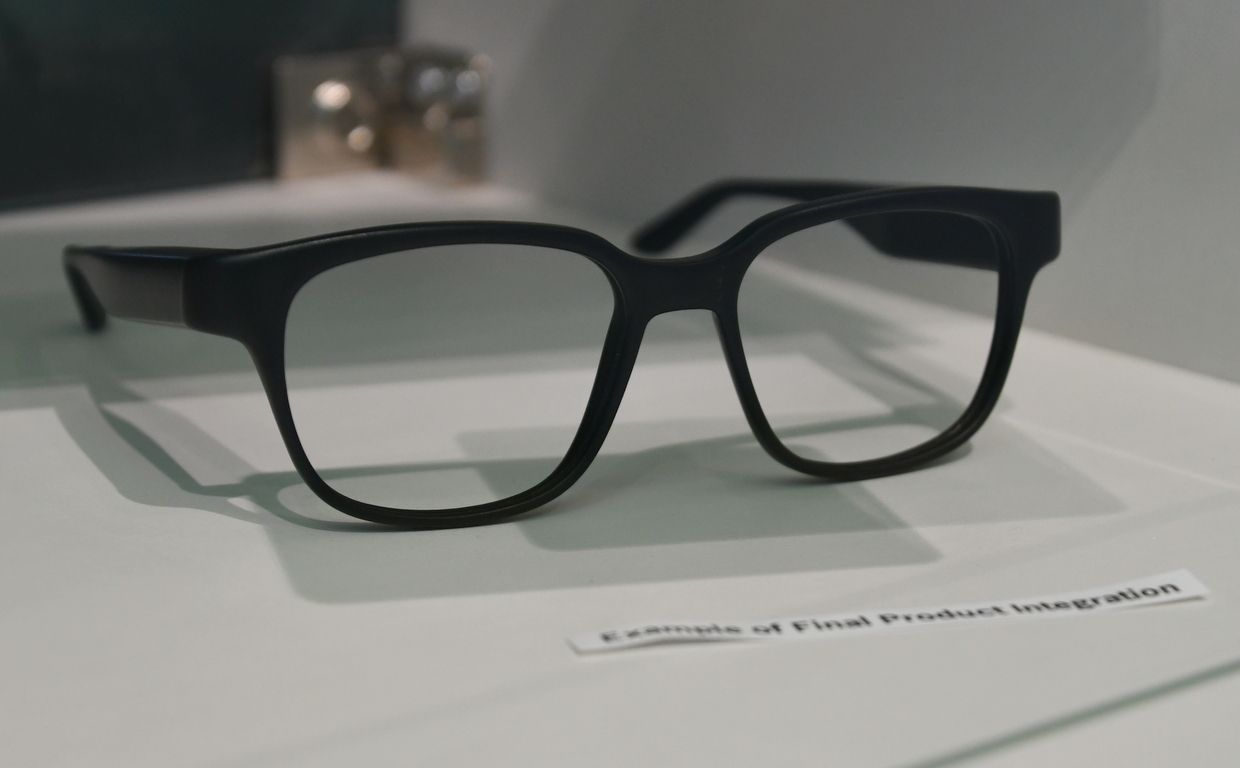

Photo: Evan Ackerman/IEEE Spectrum Example of what the Smartglasses might look like when integrated into a product

The Bosch Smartglasses use the same kind of technology as the North Focals (which are/were based on Intel's Vaunt hardware). Bosch's light drive improves on other systems in a number of ways, however. It's 30 percent smaller than comparable systems, which may not sound like a lot, but for something that you'll have on your face all day, it makes a big difference. And the whole system weighs less than 10 grams.

When fully integrated, glasses with Bosch's light drive embedded in the frame look almost completely normal, with just a slight bulge on the inside. Bosch also emphasizes the brightness of the display (it's easily visible in bright sunlight), its power efficiency (you get a full day of use from a 350mAh battery embedded in the frame), and how it has been able to bring stray light reflections down to almost nothing.
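For a sense of what "a full day on 350mAh" implies, here is a back-of-the-envelope Python sketch that converts the battery capacity into an average current budget. The 350mAh figure is Bosch's; interpreting "a full day" as 12 or 16 hours of wear is an assumption, and the calculation deliberately ignores voltage conversion losses, battery aging, and any reserve margin. The function name is hypothetical.

```python
# Bosch's stated battery capacity for a full day of use.
BATTERY_CAPACITY_MAH = 350


def average_current_budget_ma(capacity_mah: float, hours_of_use: float) -> float:
    """Average current (mA) the whole system could draw to drain the battery
    in exactly hours_of_use hours.

    Ignores voltage conversion losses, battery aging, and reserve margin.
    """
    if hours_of_use <= 0:
        raise ValueError("hours_of_use must be positive")
    return capacity_mah / hours_of_use


if __name__ == "__main__":
    # Assumption: "a full day" is interpreted here as 12 or 16 hours of wear.
    for hours in (12, 16):
        budget = average_current_budget_ma(BATTERY_CAPACITY_MAH, hours)
        print(f"{hours} h -> about {budget:.1f} mA average")
```

Under those assumptions, the display, lasers, mirror, sensors, and wireless link together would have to average somewhere around 22 to 29 mA, which gives some idea of how frugal the light drive needs to be.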

That last point is particularly important: the lenses are transparent, and the lack of stray reflections means that nobody looking at the glasses can tell that the display is active. It also means you can use the glasses while driving at night, which can be a challenge for other systems. Bosch worked very, very hard to develop a system using a holographic film with this level of optical transparency in the lens, and it's something that sets the Smartglasses apart.

Unfortunately, Bosch isn't really in the business of making glasses like these. They're perfectly happy to make all of the technology that goes inside and put together an operational prototype to show off at CES, but beyond that, they're depending on someone else to take the final step to make something people could buy. The earliest any such product might be available would likely be 2021. The light drive technology is not inherently super expensive, though, so any consumer smart glasses made with it should be available at a cost comparable to (or cheaper than) other smart glasses systems.

Even if that means I have to drag myself back to CES for another year, seeing some actual consumer smart glasses with Bosch's system inside might just make it worthwhile.

Robert Bosch LLC provided travel support for us to attend CES 2020. Bosch Sensortec, responsible for the Smartglasses Light Drive, was not aware of nor involved with the travel support.