Invisible AI uses computer vision to help (but hopefully not nag) assembly line workers

"Assembly" may sound like one of the simpler tests in the manufacturing process, but as anyone who's ever put together a piece of flat-pack furniture knows, it can be surprisingly (and frustratingly) complex. Invisible AI is a startup that aims to monitor people doing assembly tasks using computer vision, helping maintain safety and efficiency - without succumbing to the obvious all-seeing-eye pitfalls. A $3.6 million seed round ought to help get them going.

The company makes self-contained camera-computer units that run highly optimized computer vision algorithms to track the movements of the people they see. By comparing those movements with a set of canonical ones (someone performing the task correctly), the system can watch for mistakes or identify other problems in the workflow - missing parts, injuries and so on.
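Invisible AI has not published how its comparison works, so the following is only an illustrative sketch of the general idea: matching an observed sequence of body poses against a reference recording. It assumes each video frame has already been reduced to a list of (x, y) keypoints, and it uses dynamic time warping, a standard technique chosen here for illustration because it tolerates a worker moving faster or slower than the reference. The function names and the threshold are hypothetical.

```python
import math


def frame_distance(a, b):
    """Mean Euclidean distance between corresponding keypoints of two frames."""
    return sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)


def dtw_distance(observed, reference):
    """Dynamic time warping cost between two sequences of pose frames.

    Each sequence is a list of frames; each frame is a list of (x, y)
    keypoints in the same order (an assumed input format).
    """
    n, m = len(observed), len(reference)
    inf = float("inf")
    # cost[i][j] = cheapest alignment of the first i observed frames
    # with the first j reference frames.
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = frame_distance(observed[i - 1], reference[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def deviates(observed, reference, threshold):
    """Flag a movement whose alignment cost exceeds a (hypothetical) threshold."""
    return dtw_distance(observed, reference) > threshold
```

In this sketch, a worker who performs the same motion more slowly than the reference still aligns at zero cost, while a motion in the wrong place accumulates cost on every frame and gets flagged.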

Obviously, right at the outset, this sounds like the kind of thing that results in a pitiless computer overseer that punishes workers every time they fall below an artificial and constantly rising standard - and Amazon has probably already patented that. But co-founder and CEO Eric Danziger was eager to explain that this isn't the idea at all.

"The most important parts of this product are for the operators themselves. This is skilled labor, and they have a lot of pride in their work," he said. "They're the ones in the trenches doing the work, and catching and correcting mistakes is a big part of it."

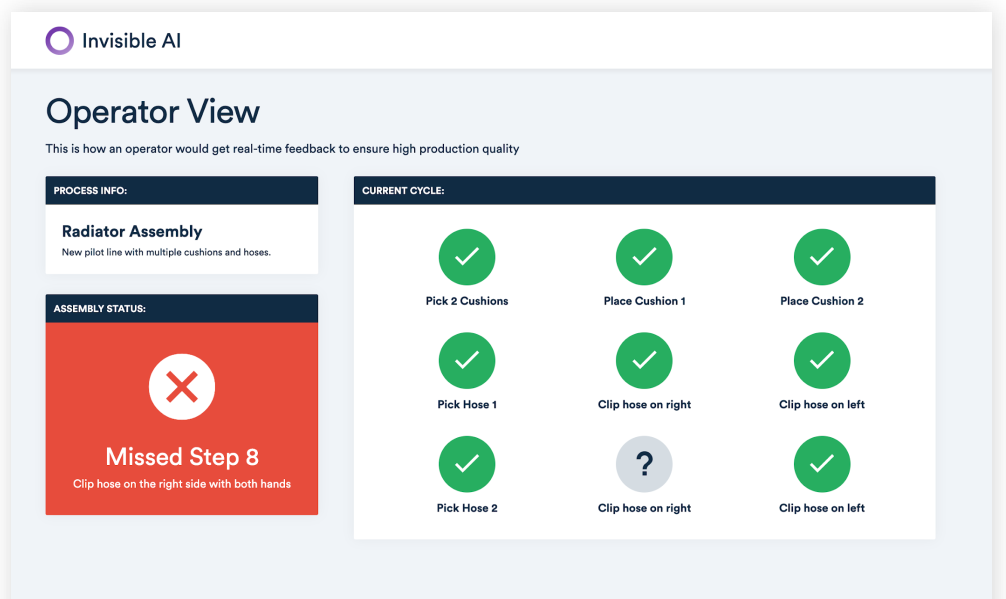

"These assembly jobs are pretty athletic and fast-paced. You have to remember the 15 steps you have to do, then move on to the next one, and that might be a totally different variation. The challenge is keeping all that in your head," he continued. "The goal is to be a part of that loop in real time. When they're about to move on to the next piece we can provide a double check and say, 'Hey, we think you missed step 8.' That can save a huge amount of pain. It might be as simple as plugging in a cable, but catching it there is huge - if it's after the vehicle has been assembled, you'd have to tear it down again."
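The "double check" Danziger describes can be pictured as a simple comparison between the steps a job requires and the steps the camera has recognized so far. The sketch below is an assumption about how such a check might look, not a description of Invisible AI's software; the function names and the idea of numbered step IDs are hypothetical.

```python
def missed_steps(expected, detected):
    """Return the expected steps that were never detected, in expected order."""
    seen = set(detected)
    return [step for step in expected if step not in seen]


def double_check(expected, detected):
    """Build a reminder message, or return None if nothing was missed."""
    missing = missed_steps(expected, detected)
    if not missing:
        return None
    return "Hey, we think you missed step " + ", ".join(str(s) for s in missing) + "."


# A 15-step job in which step 8 was never observed.
expected = list(range(1, 16))
detected = [s for s in expected if s != 8]
print(double_check(expected, detected))
```

The check would run at the moment the operator is about to move on to the next piece, which is when a reminder is cheapest to act on.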

This kind of body tracking exists in various forms and for various reasons; Veo Robotics, for instance, uses depth sensors to track an operator and robot's exact positions to dynamically prevent collisions.

But the challenge at the industrial scale is less "how do we track a person's movements in the first place" than "how can we easily deploy and apply the results of tracking a person's movements." After all, it does no good if the system takes a month to install and days to reprogram. So Invisible AI focused on simplicity of installation and administration, with no code needed and entirely edge-based computer vision.

"The goal was to make it as easy to deploy as possible. You buy a camera from us, with compute and everything built in. You install it in your facility, you show it a few examples of the assembly process, then you annotate them. And that's less complicated than it sounds," Danziger explained. "Within something like an hour they can be up and running."

Once the camera and machine learning system is set up, it's really not such a difficult problem for it to be working on. Tracking human movements is a fairly straightforward task for a smart camera these days, and comparing those movements to an example set is comparatively easy, as well. There's no "creativity" involved, like trying to guess what a person is doing or match it to some huge library of gestures, as you might find in an AI dedicated to captioning video or interpreting sign language (both still very much works in progress elsewhere in the research community).

As for privacy and the possibility of being unnerved by being on camera constantly, that's something that has to be addressed by the companies using this technology. There's a distinct possibility for good, but also for evil, like pretty much any new tech.

One of Invisible's early partners is Toyota, which has been both an early adopter and skeptic when it comes to AI and automation. Their philosophy, one that has been arrived at after some experimentation, is one of empowering expert workers. A tool like this is an opportunity to provide systematic improvement that's based on what those workers already do.

It's easy to imagine a version of this system where, like in Amazon's warehouses, workers are pushed to meet nearly inhuman quotas through ruthless optimization. But Danziger said that a more likely outcome, based on anecdotes from companies he's worked with already, is more about sourcing improvements from the workers themselves.

Having built a product day in and day out year after year, these are employees with deep and highly specific knowledge on how to do it right, and that knowledge can be difficult to pass on formally. "Hold the piece like this when you bolt it or your elbow will get in the way" is easy to say in training but not so easy to make standard practice. Invisible AI's posture and position detection could help with that.

"We see less of a focus on cycle time for an individual, and more like, streamlining steps, avoiding repetitive stress, etc.," Danziger said.

Importantly, this kind of capability can be offered with a code-free, compact device that requires no connection except to an intranet of some kind to send its results to. There's no need to stream the video to the cloud for analysis; footage and metadata are both kept totally on-premise if desired.

Like any compelling new tech, the possibilities for abuse are there, but the system is not - unlike an endeavor like Clearview AI - built for abuse.

"It's a fine line. It definitely reflects the companies it's deployed in," Danziger said. "The companies we interact with really value their employees and want them to be as respected and engaged in the process as possible. This helps them with that."

The $3.6 million seed round was led by 8VC, with participating investors including iRobot Corporation, K9 Ventures, Sierra Ventures and Slow Ventures.
