Human rights activists want to use AI to help prove war crimes in court

In 2015, alarmed by an escalating civil war in Yemen, Saudi Arabia led an air campaign against the country to defeat what it deemed a threatening rise of Shia power. The intervention, launched with eight other largely Sunni Arab states, was meant to last only a few weeks, Saudi officials had said. Nearly five years later, it still hasn't stopped.

By some estimates, the coalition has since carried out over 20,000 air strikes, many of which have killed Yemeni civilians and destroyed their property, allegedly in direct violation of international law. Human rights organizations have since sought to document such war crimes in an effort to stop them through legal challenges. But the gold standard, on-the-ground verification by journalists and activists, is often too dangerous to be possible. Instead, organizations have increasingly turned to crowdsourced mobile photos and videos to understand the conflict, and have begun submitting them to court to supplement eyewitness evidence.

But as digital documentation of war scenes has proliferated, the time it takes to analyze it has exploded. The disturbing imagery can also traumatize the investigators who must comb through and watch the footage. Now an initiative that will soon mount a challenge in the UK court system is trialing a machine-learning alternative. It could model a way to make crowdsourced evidence more accessible and help human rights organizations tap into richer sources of information.

The initiative, led by Swansea University in the UK along with a number of human rights groups, is part of an ongoing effort to monitor the alleged war crimes happening in Yemen and create greater legal accountability around them. In 2017, the platform Yemeni Archive began compiling a database of videos and photos documenting the abuses. Content was gathered from thousands of sources, including submissions from journalists and civilians as well as open-source videos from social-media platforms like YouTube and Facebook, and preserved on a blockchain so that it couldn't be tampered with undetected.
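To illustrate why chained hashing makes tampering detectable, here is a minimal sketch in Python. It is not Yemeni Archive's actual system, whose design the article does not describe; the function names `add_record` and `verify` and the record fields are hypothetical. Each record stores a digest of the media file plus the hash of the previous record, so altering any file or any earlier record breaks every later link.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _hash_body(body):
    # Serialize with sorted keys so the same fields always hash the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def add_record(chain, media_bytes, source):
    """Append a record that commits to the media and to the previous record."""
    body = {
        "source": source,
        "media_sha256": hashlib.sha256(media_bytes).hexdigest(),
        "prev_hash": chain[-1]["record_hash"] if chain else GENESIS,
    }
    chain.append({**body, "record_hash": _hash_body(body)})


def verify(chain, media_items):
    """Return True only if every link and every media digest still matches."""
    if len(chain) != len(media_items):
        return False
    prev_hash = GENESIS
    for record, media_bytes in zip(chain, media_items):
        body = {k: record[k] for k in ("source", "media_sha256", "prev_hash")}
        if record["prev_hash"] != prev_hash:
            return False
        if record["record_hash"] != _hash_body(body):
            return False
        if hashlib.sha256(media_bytes).hexdigest() != record["media_sha256"]:
            return False
        prev_hash = record["record_hash"]
    return True


# Usage with stand-in bytes instead of real video files.
chain = []
media = [b"video-one", b"video-two"]
for i, item in enumerate(media):
    add_record(chain, item, source=f"submission-{i}")
print(verify(chain, media))
```

A real deployment would also need the chain's latest hash to be published or replicated somewhere the archive's operators cannot quietly rewrite, which is the role a blockchain plays.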

Along with the Global Legal Action Network (GLAN), a nonprofit that sues states for human rights violations, the investigators then began curating evidence of specific human rights violations into a separate database and mounting legal cases in various domestic and international courts. "If things are coming through courtroom accountability processes, it's not enough to show that this happened," says Yvonne McDermott Rees, a professor at Swansea University and the initiative's lead. "You have to say, 'Well, this is why it's a war crime.' That might be 'You've used a weapon that's illegal,' or in the case of an air strike, 'This targeted civilians' or 'This was a disproportionate attack.'"

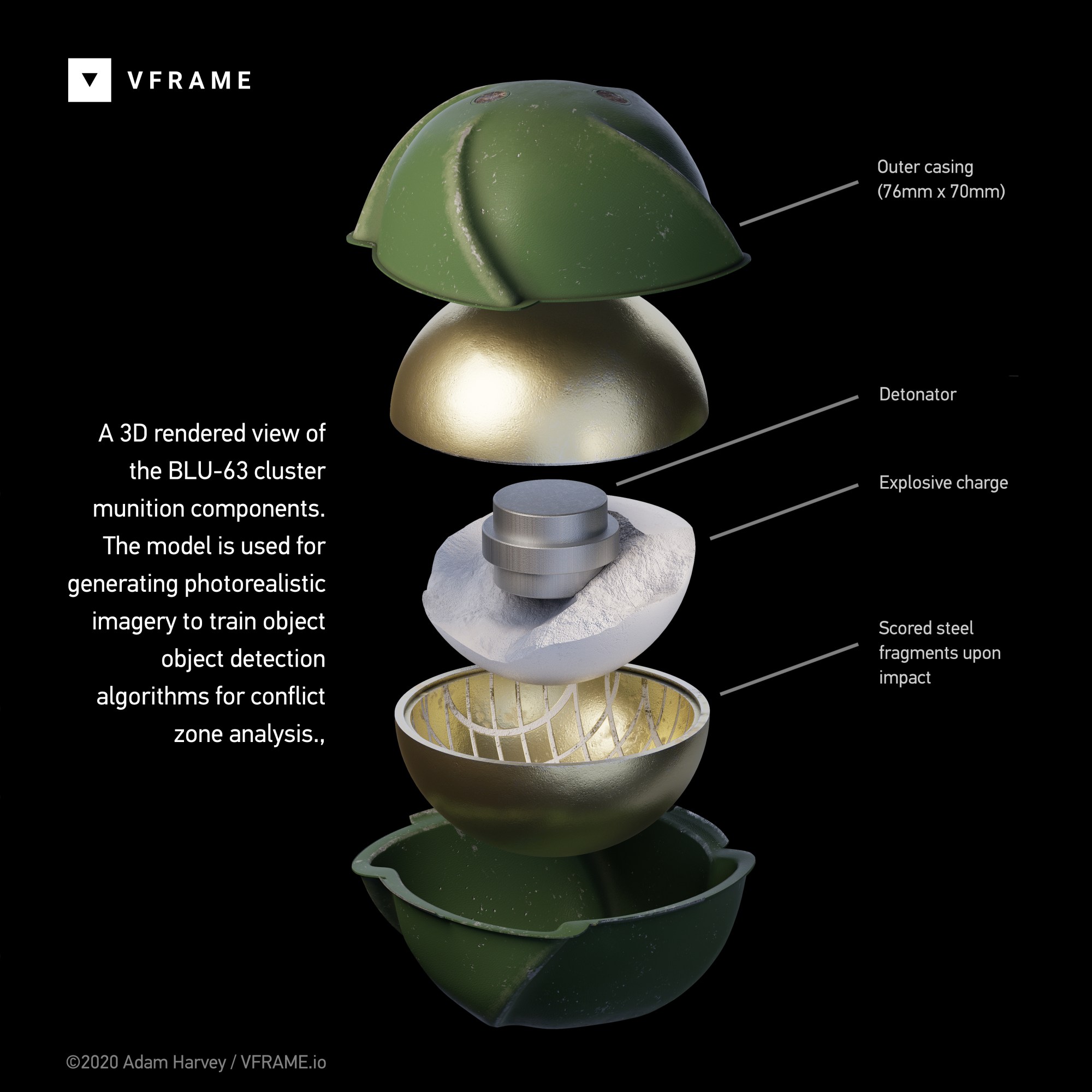

A 3D rendering of a BLU-63. (VFRAME)

In this case, the partners are focusing on a UK-manufactured cluster munition, the BLU-63. The use and sale of cluster munitions, explosive weapons that spray out smaller explosives on impact, are banned by 108 countries, including the UK. If the partners could prove in a UK court that the munitions had indeed been used to commit war crimes, the finding could be used as leverage to stop the UK's sale of weapons to Saudi Arabia or to bring criminal charges against individuals involved in the sales.

So they decided to develop a machine-learning system to detect all instances of the BLU-63 in the database. But images of BLU-63s are rare precisely because they are illegal, which left the team with little real-world data to train their system. As a remedy, the team created a synthetic data set by reconstructing 3D models of the BLU-63 in a simulation.

Using the few prior examples they had, including a photo of the munition preserved by the Imperial War Museum, the partners worked with Adam Harvey, a computer vision researcher, to create the reconstructions. Starting with a base model, Harvey added photorealistic texturing, different types of damage, and various decals. He then rendered the results under various lighting conditions and in various environments to create hundreds of still images mimicking how the munition might be found in the wild. He also created synthetic data of things that could be mistaken for the munition, such as a green baseball, to lower the false positive rate.
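The idea of varying one base model across many conditions can be sketched as a list of render jobs. This is only an illustration of the combinatorial approach described above, not Harvey's pipeline: the variation values, field names, and the `build_render_jobs` function are all hypothetical, and a real setup would hand each job to a 3D renderer.

```python
import itertools
import random

# Hypothetical variation axes; placeholder values, not the project's real settings.
TEXTURES = ["clean", "rusted", "dusty"]
DAMAGE = ["intact", "dented", "fragmented"]
LIGHTING = ["noon_sun", "overcast", "dusk"]
ENVIRONMENTS = ["sand", "rubble", "grass"]
DISTRACTORS = ["green_baseball"]  # look-alikes rendered as negative examples


def build_render_jobs(seed=0):
    """Enumerate every combination of conditions, with a random camera angle."""
    rng = random.Random(seed)  # seeded so the job list is reproducible
    jobs = []
    # Positive examples: the munition under every texture/damage/light/scene combo.
    for texture, damage, light, env in itertools.product(
        TEXTURES, DAMAGE, LIGHTING, ENVIRONMENTS
    ):
        jobs.append({
            "object": "blu63", "label": 1,
            "texture": texture, "damage": damage,
            "lighting": light, "environment": env,
            "camera_yaw_deg": rng.uniform(0, 360),
        })
    # Negative examples: distractor objects in the same lighting and scenes.
    for obj, light, env in itertools.product(DISTRACTORS, LIGHTING, ENVIRONMENTS):
        jobs.append({
            "object": obj, "label": 0,
            "lighting": light, "environment": env,
            "camera_yaw_deg": rng.uniform(0, 360),
        })
    return jobs


jobs = build_render_jobs()
positives = sum(job["label"] for job in jobs)
print(len(jobs), "jobs,", positives, "positive,", len(jobs) - positives, "negative")
```

Even with three options per axis, the combinations multiply quickly, which is how a handful of reference images can be stretched into hundreds of training stills.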

While Harvey is still in the middle of generating more training examples (he estimates he will need over 2,000), the existing system already performs well: over 90% of the videos and photos it retrieves from the database have been verified by human experts to contain BLU-63s. He's now creating a more realistic validation data set by 3D-printing and painting models of the munitions to look like the real thing, and then videotaping and photographing them to see how well his detection system performs. Once the system is fully tested, the team plans to run it through the entire Yemeni Archive, which contains 5.9 billion video frames of footage. By Harvey's estimate, a person would take 2,750 days at 24 hours a day to comb through that much information. By contrast, the machine-learning system would take roughly 30 days on a regular desktop.
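The scale of those figures is easy to check with back-of-the-envelope arithmetic. The sketch below assumes footage plays at 25 frames per second, which is an assumption on our part; the article does not say how Harvey arrived at his estimate. Under that assumption, watching 5.9 billion frames nonstop takes roughly 2,731 days, close to the quoted 2,750, and finishing in 30 days implies processing on the order of 2,300 frames per second.

```python
TOTAL_FRAMES = 5.9e9          # frames in the archive, per the article
ASSUMED_FPS = 25              # assumption: typical video playback rate
MACHINE_DAYS = 30             # machine estimate, per the article
SECONDS_PER_DAY = 24 * 60 * 60


def human_review_days(frames, fps):
    """Days needed to watch every frame once at real-time speed, 24 hours a day."""
    return frames / fps / SECONDS_PER_DAY


def required_machine_fps(frames, days):
    """Frames per second a system must process to finish within `days`."""
    return frames / (days * SECONDS_PER_DAY)


human_days = human_review_days(TOTAL_FRAMES, ASSUMED_FPS)
machine_fps = required_machine_fps(TOTAL_FRAMES, MACHINE_DAYS)

print(f"Human review: about {human_days:,.0f} days")
print(f"Machine rate needed: about {machine_fps:,.0f} frames per second")
print(f"Speedup: about {human_days / MACHINE_DAYS:.0f}x")
```

The comparison counts raw viewing time only; it leaves out the human verification that still has to follow the automated pass.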

The real image shown in the analysis at the top of the article. (VFRAME)

Human experts would still need to verify the footage after the system filters it, but the gain in efficiency changes the game for human rights organizations looking to mount challenges in court. It's not uncommon for these organizations to store massive amounts of video crowdsourced from eyewitnesses. Amnesty International, for example, has on the order of 1 terabyte of footage documenting possible violations in Myanmar, says McDermott Rees. Machine-learning techniques can allow them to scour these archives and demonstrate the pattern of human rights violations at a previously infeasible scale, making it far more difficult for courts to deny the evidence.

"When you're looking at, for example, the targeting of hospitals, having one video that shows a hospital being targeted is strong; it makes a case," says Jeff Deutch, the lead researcher at Syrian Archive, a human rights group responsible for launching Yemeni Archive. "But if you can show hundreds of videos of hundreds of incidents of hospitals being targeted, you can see that this is really a deliberate strategy of war. When things are seen as deliberate, it becomes more possible to identify intent. And intent might be something useful for legal cases in terms of accountability for war crimes."

As the Yemen collaborators prepare to submit their case, evidence on this scale will be particularly relevant. The Saudi-led air-strike coalition has already denied culpability in previous allegations of war crimes, a denial that the UK government recognizes as the official record. The UK courts also dismissed an earlier case that GLAN submitted to stop the government from selling weapons to Saudi Arabia, because they deemed the open-source video evidence not sufficiently convincing. The collaborators hope the greater wealth of evidence will lead to different results this time. Cases using open-source videos in a Syrian context have previously resulted in convictions, McDermott Rees says.

This initiative isn't the first to use machine learning to filter evidence in a human rights context. The E-Lamp system from Carnegie Mellon University, a video analysis toolbox for human rights work, was developed to analyze the archives of the Syrian war. Harvey also previously worked with some of his current collaborators to identify munitions being used in Syria. The Yemen effort, however, will be one of the first to be involved in a court case. It could set a precedent for other human rights organizations.

"Although this is an emerging field, it's a tremendous opportunity," says Sam Gregory, the program director of human rights nonprofit Witness and cochair of the Partnership on AI's working group on social and societal influence. "[It's] also about leveling the playing field in access to AI and utilization of AI so as to turn both eyewitness evidence and perpetrator-shot footage into justice."

Correction: A previous version of this article misstated Jeff Deutch's involvement with Yemeni Archive. It has since been corrected.