Life, part 20

In today's episode I want to again pause for a moment - this time, to verify that our allegedly O(change) implementation of Stafford's algorithm really does have its performance gated on the number of cells changing in a tick.

Here's my new performance testing scenario:

I've modified my implementation of Stafford's algorithm to make an 11-quad; that is a 2048*2048 board. I've then tiled the bottom edge with 50 glider guns, each of which produces a new glider every 30 ticks.

Gliders move at c/4, so the leftmost glider gun's first glider will reach the top right corner in about 8200 ticks; the rightmost glider gun's first glider will reach the right edge almost immediately, and every other glider gun will be somewhere in between.

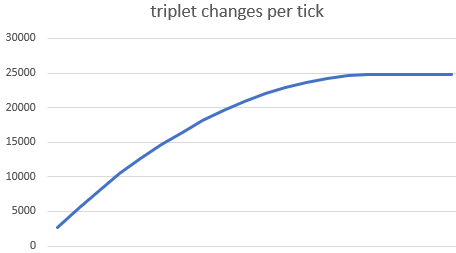

The result is that the number of changed triplets processed per tick starts off as a linear function which then gradually slopes down to become constant around 8200 ticks; at 8200 we are on average losing as many gliders to the rectangle of death as we are creating:

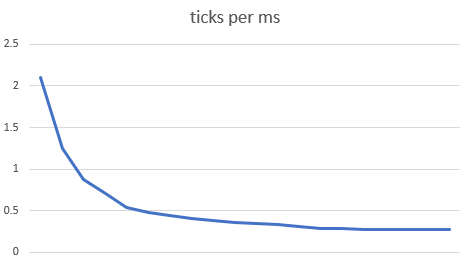

If our algorithm really is O(changes) then we should expect the number of ticks processed per second should go down where the number of changes is increasing, and should level off where changes levels off, and in fact that is what we see:

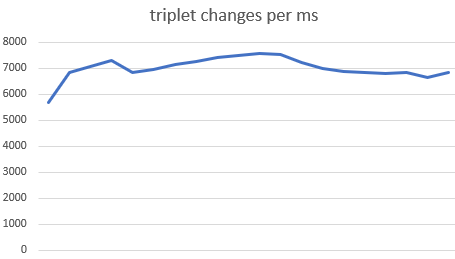

Or, put another way, we should expect that changes per millisecond should be roughly constant:

It's a little noisy but I did not go to the trouble of setting up a noise-free performance testing environment! We can see that the trend here certainly looks like it steadies out at around 7000 triplet changes processed per millisecond.

Like I said before, we could continue to optimize our implementation of Stafford's algorithm by profiling, finding the slowest part, micro-optimizing it, and so on; reader Mark Pflug reports that you can get a 20% marginal improvement by going to pointers instead of 2-d array accesses, for instance. I want this series to be more about the algorithms than the small details, so I think we are finally done with Stafford's algorithm.

(UPDATE: We are not quite done with Stafford's algorithm! Five minutes after I hit publish on this article I received a very kind email from David Stafford; I will discuss some further thoughts briefly in the next episode.)

However, I do want to make a few observations about the algorithms we've seen so far and the performance gains we've achieved in the context of this test case.

- O(changes) is clearly better than O(cells), but even so, the number of changing cells can grow linearly, and on a fixed-size board can be a considerable fraction of that board. (And I still haven't said whether there are patterns where the number of changes per tick grows super-linearly! What's your intuition there?)

- Of the four million cells in this board, almost half of them were dead cells never even close to a cell that ever changed or ever would change, but we spent over 600 kilobytes to hold the values of those dead cells.

- Once again we see that even with a board of four million cells, we run into the rectangle of death in only 8200 ticks, which is not that many in the grand scheme of things.

It seems like we're going to have difficulty scaling up to larger boards with patterns that we would like to run for a long time to understand their behaviours. Stafford's algorithm fits four million cells into 2.7 million bytes, and sure, we've got machines with plenty of memory these days, but it seems like we could be doing better.

Our insight for optimizing time was most cells stay the same, so only process the changes". Can we apply a similar insight to optimizing space? Thus far every algorithm we've considered has been O(cells) in memory; could we observe most cells are dead" and come up with an O(living cells) memory burden? And would that enable us to expand beyond these small, fixed-size rectangle of death" solutions?

Next time on FAIC: A new, much simpler algorithm that is O(changes) in time and O(living) in space, but at what cost in constant factor?

For today's extra content: Sometimes you want to change the direction of a glider. Ideally a configuration which changes the direction of an incoming glider should repair itself after the interaction with the glider so as to be ready for the next glider that needs reflecting. The number of ticks it takes to do so is the repeat time" of the reflector. Even more ideally, the reflector should be a still Life; such reflectors are called stable reflectors".

The stable reflector with the smallest known repeat time - 43 ticks - and size is the Snark; it was discovered in 2013 after a long hunt by Mike Playle. Image from the wiki; see that page for an animation of the Snark in action.