Real-time analytics

There's an ancient saying: "Whom the gods would destroy they first make mad." (Mad as in crazy, not mad as in angry.) I wrote a variation of this on Twitter:

Whom the gods would destroy, they first give real-time analytics.

Having more up-to-date information is only valuable up to a point. Past that point, you're more likely to be distracted by noise. The closer you look at anything, the more irregularities you see, and the more likely you are to over-steer [1].

I don't mean to imply that the noise isn't real. (More on that here.) But there's a temptation to pay more attention to the small variations you don't understand than the larger trends you believe you do understand.

I became aware of this effect when simulating Bayesian clinical trial designs. The more often you check your stopping rule, the more often you will stop [2]. You want to monitor a trial often enough to shut it down, or at least pause it, if things change for the worse. But monitoring too often can cause you to stop when you don't want to.
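The effect is easy to reproduce in a toy simulation. The sketch below is not one of the trial designs mentioned above; it assumes normally distributed outcomes with known unit variance, a flat prior on the mean, a true effect of exactly zero, and a hypothetical rule that stops when the posterior probability of a negative effect exceeds 0.95. The function name `stop_fraction` and all its parameters are made up for illustration.

```python
import math
import random


def normal_cdf(z):
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def stop_fraction(look_every, n_max=100, threshold=0.95, n_trials=2000, seed=0):
    """Fraction of simulated trials in which the stopping rule fires at least once.

    The rule is checked after every `look_every` observations, up to `n_max`.
    All modeling choices here are illustrative assumptions.
    """
    rng = random.Random(seed)
    stopped = 0
    for _ in range(n_trials):
        total = 0.0
        hit = False
        # Always draw all n_max observations so that every look schedule
        # sees exactly the same simulated data for a given seed.
        for n in range(1, n_max + 1):
            total += rng.gauss(0.0, 1.0)  # true effect is zero
            if n % look_every == 0:
                # Flat prior, unit variance: posterior for the mean is
                # N(total/n, 1/n), so P(mean < 0 | data) = Phi(-total/sqrt(n)).
                if normal_cdf(-total / math.sqrt(n)) > threshold:
                    hit = True
        stopped += hit
    return stopped / n_trials


if __name__ == "__main__":
    for k in (100, 50, 10, 1):
        print(f"look every {k:3d} observations: stop fraction {stop_fraction(k)}")
```

Because each schedule sees the same simulated data and the looks are nested, checking more often can only add stopping opportunities. Nothing about the data changes between runs; only the frequency of looking does.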

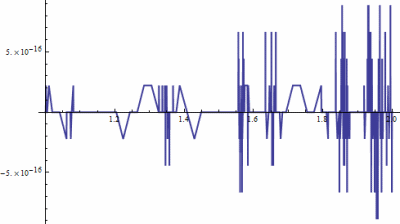

Flatter than glassA long time ago I wrote about the graph below.

The graph looks awfully jagged, until you look at the vertical scale. The curve represents the numerical difference between two functions that are exactly equal in theory. As I explain in that post, the curve is literally smoother than glass, and certainly flatter than a pancake.

Notes

[1] See The Logic of Failure for a discussion of how over-steering is a common factor in disasters such as the Chernobyl nuclear failure.

[2] Bayesians are loath to talk about things like α-spending, but when you're looking at stopping frequencies, frequentist phenomena pop up.

The post Real-time analytics first appeared on John D. Cook.