TikTok’s QAnon ban has been ‘buggy’

TikTok has been cracking down on QAnon-related content, in line with similar moves by other major social media companies, including Facebook and YouTube, that aim to reduce the spread of the baseless conspiracy theory across their respective platforms. According to a report by NPR this weekend, TikTok had quietly banned several hashtags associated with the QAnon conspiracy and said it would also delete the accounts of users who promote QAnon content.

TikTok tells us, however, that these policies are not new. The company says they actually went on the books earlier this year.

TikTok had initially focused on reducing discoverability as an immediate step by blocking search results while it investigated, with help from partners, how such content manifested on its platform. This was covered in July by several news publications, TikTok said. In August, TikTok also set a policy to remove content and ban accounts, we're told.

Despite the policies, a report this month by Media Matters documented that TikTok was still hosting at least 14 QAnon-affiliated hashtags with over 488 million collective views. These came about because the platform had yet to address how QAnon followers were circumventing its community restrictions using variations and misspellings.

After Media Matters' report, TikTok removed 11 of the 14 hashtags it had referenced, the report noted in an update.

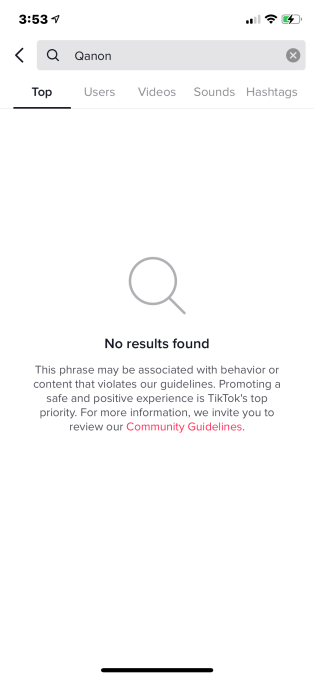

Today, a number of QAnon-related hashtags - like #QAnon, #TheStormIsComing, #Trump2Q2Q and others - return no results in TikTok's search engine. They don't show under the "Top" search results section, nor do they show under "Videos" or "Hashtags."

Instead of just showing users a blank page when these terms are searched, TikTok displays a message that explains how some phrases can be associated with behavior or content that violates TikTok's Community Guidelines, and offers a link to that resource.

Image Credits: TikTok screenshot via TechCrunch

Media Matters praised the changes in a statement to NPR as something TikTok was doing that was "good and significant," even if "long overdue."

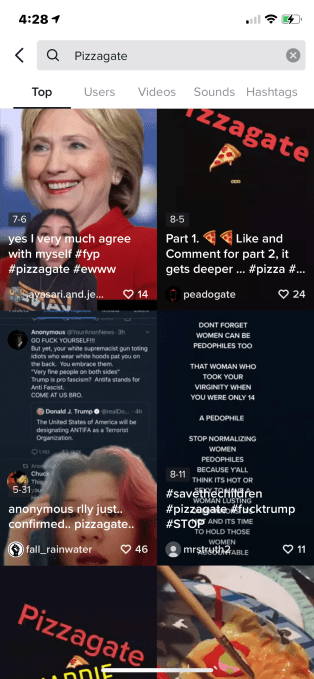

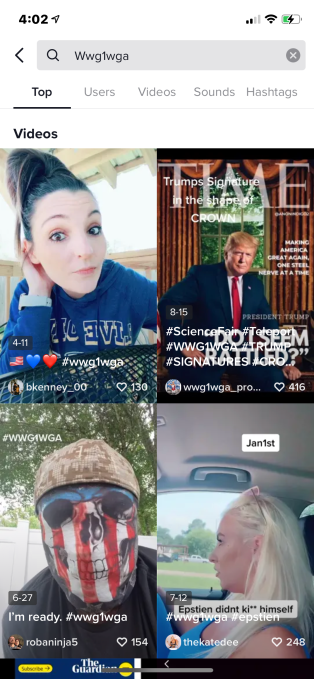

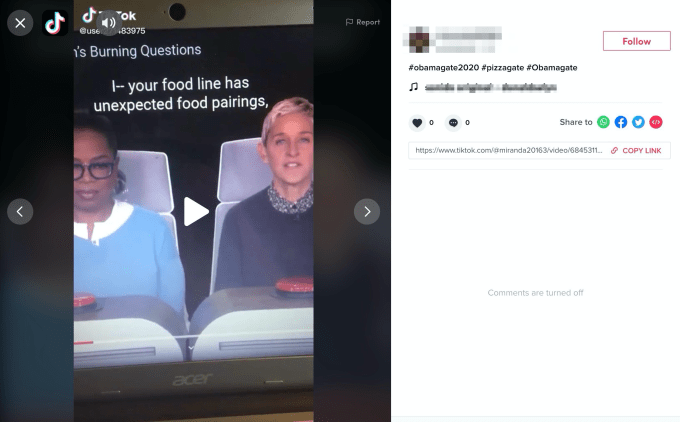

While TikTok's ban did tackle many of the top search results and tags associated with the conspiracy, we found it was overlooking others, like pizzagate and WWG1WGA. In tests this afternoon, these terms and many others still returned plenty of content.

TikTok claims what we saw was likely a "bug."

We had reached out to TikTok today to ask why searches for terms like "pizzagate" and "WWG1WGA" - popular QAnon terms - were still returning search results, even though their hashtags were banned.

For example, if you just searched for "pizzagate," TikTok offered a long list of videos to scroll through, though you couldn't go directly to its hashtag. This was not the case for the other banned hashtags (like #QAnon) at the time of our tests.

Image Credits: TikTok screenshot via TechCrunch

The videos returned discussed Pizzagate - a baseless conspiracy theory that ultimately led to real-world violence when a gunman opened fire inside a DC pizza restaurant, believing he was there to rescue trapped children.

While some videos were just discussing or debunking the idea, many were earnestly promoting the pizzagate conspiracy, even posting that it was "real" or claiming to offer "proof."

Above: Video recorded Oct. 19, 2020, 3:47 PM ET/12:47 PM PT

Other QAnon-associated hashtags were also not subject to a full ban, including WWG1WGA, WGA, ThesePeopleAreSick, cannibalclub, hollyweird and many others often used to circulate QAnon conspiracies.

When we searched these terms, we found more long lists of QAnon-related videos to scroll through.

We documented this with photos and videos before reaching out to TikTok to ask why these had been made exceptions to the ban. We specifically asked about the two top terms - pizzagate and WWG1WGA.

Image Credits: TikTok screenshot via TechCrunch

TikTok provided us with information about the timeline of its policy changes and the following statement:

Content and accounts that promote QAnon violate our disinformation policy and we remove them from our platform. We've also taken significant steps to make this content harder to find across search and hashtags by redirecting associated terms to our Community Guidelines. We continually update our safeguards with misspellings and new phrases as we work to keep TikTok a safe and authentic place for our community.

TikTok also said that the gap in search-term blocking must have been a bug, because the blocking is now working properly.

We found that, upon receiving TikTok's confirmation, the terms we asked about were blocked, but others were not. This includes some of those mentioned above, as well as bizarre terms only a real conspiracy fan would know, like adrenochromereptilians.

We asked Media Matters whether it could still praise TikTok's actions to ban QAnon content, given what, at the time, had appeared to be a loophole in the QAnon ban.

"TikTok has of course taken steps but not fully resolved the problem, but as we've noted, the true test of any of these policies - like we've said of other platforms' measures - is in how and if they enforce them," the organization said.

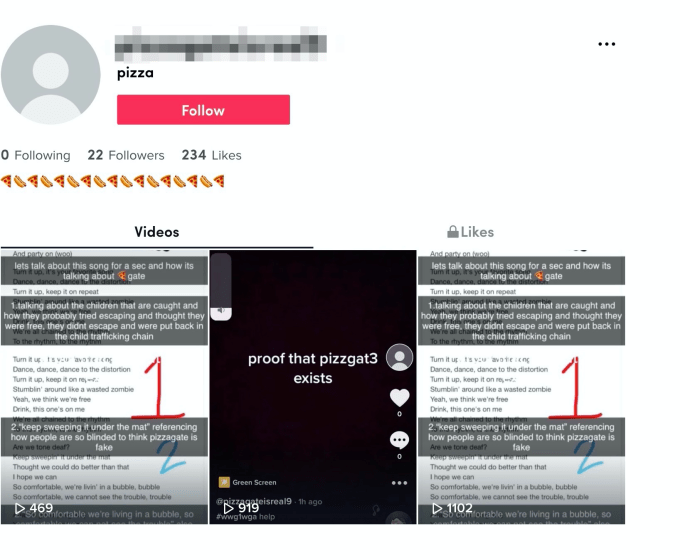

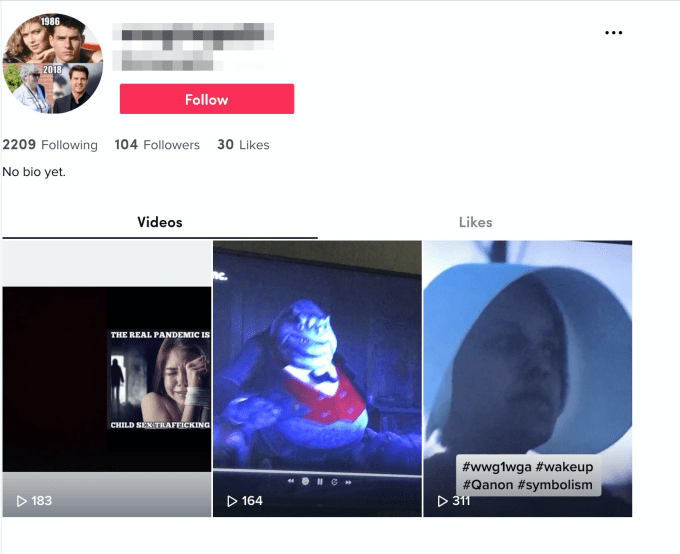

Even if the banned content was only showing today because of a "bug," we found that many of the users who posted it appear not to have been banned from TikTok.


Though a search for their username won't return results now that the ban is no longer "buggy," you can still go directly to these users' profile pages via their profile URL on the web.

We tried this on many profiles of those who had published QAnon content or used banned terms in their videos' hashtags and descriptions. (Below are a few examples.)

What this means is that although TikTok reduced these users' discoverability in the app, the accounts can still be located if you know their username. And once you arrive on the account's page, you can still follow them.

Image Credits: TikTok screenshot via TechCrunch

Image Credits: TikTok screenshot via TechCrunch

Image Credits: TikTok screenshot via TechCrunch

These examples of "bugs," or simple oversights, indicate how difficult it is to enforce content bans across social media platforms.

Without substantial investments in human moderation combined with automation, as well as tools that ensure banned users can't return, it's hard to keep up with the spread of disinformation at social media's scale.