New AI Inferencing Records

MLPerf, a consortium of AI experts and computing companies, has released a new set of machine learning records. The records were set on a series of benchmarks that measure the speed of inferencing: how quickly an already-trained neural network can accomplish its task with new data. For the first time, benchmarks for smartphones and tablets were contested. According to David Kanter, executive director of MLPerf's parent organization, a downloadable app is in the works that will allow anyone to test the AI capabilities of their own smartphone or tablet.

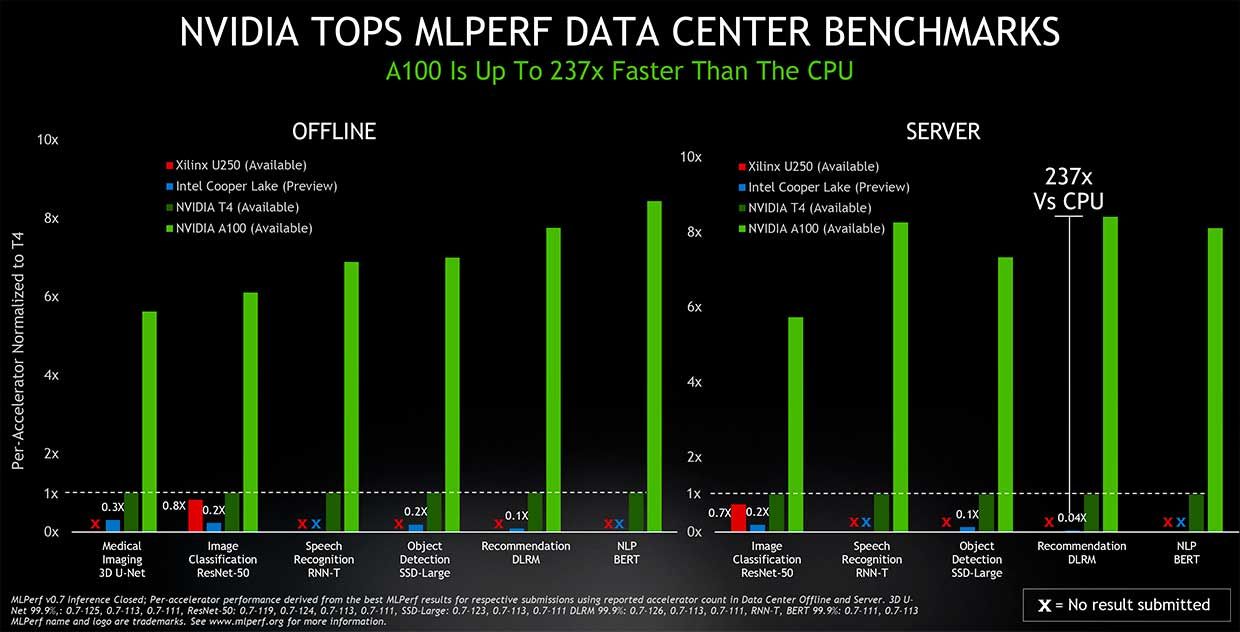

MLPerf's goal is to present a fair and straightforward way to compare AI systems. Twenty-three organizations, including Dell, Intel, and Nvidia, submitted a total of 1,200 results, which were peer reviewed and subjected to random third-party audits. (Google was conspicuously absent this round.) As with the MLPerf records for training AIs released over the summer, Nvidia was the dominant force, besting what competition there was in all six categories for both data center and edge computing systems. Including submissions by partners such as Cisco and Fujitsu, 1,029 results, or 85 percent of the total for the edge and data center categories, used Nvidia chips, according to the company.

"Nvidia outperforms by a wide range on every test," says Paresh Kharya, senior director of product management, accelerated computing at Nvidia. Nvidia's A100 GPUs powered its wins in the data center categories, while its Xavier was behind the GPU-maker's edge-computing victories. According to Kharya, on one of the new MLPerf benchmarks, Deep Learning Recommendation Model (DLRM), a single DGX A100 system delivered the performance of 1,000 CPU-based servers.

Four new inferencing benchmarks were introduced this round, joining the two carried over from the previous round:

- BERT, for Bidirectional Encoder Representations from Transformers, is a natural language processing AI contributed by Google. Given a question input, BERT predicts a suitable answer.

- DLRM, for Deep Learning Recommendation Model, is a recommender system that is trained to optimize click-through rates. It's used to recommend items for online shopping and to rank search results and social media content. Facebook was the major contributor of the DLRM code.

- 3D U-Net is used in medical imaging systems to tell which 3D voxels in an MRI scan are part of a tumor and which are healthy tissue. It's trained on a dataset of brain tumors.

- RNN-T, for Recurrent Neural Network Transducer, is a speech recognition model. Given a sequence of speech input, it predicts the corresponding text.

In addition to those new benchmarks, MLPerf put together the first set of benchmarks for mobile devices, which were used to test smartphone and tablet platforms from MediaTek, Qualcomm, and Samsung as well as a notebook from Intel. The new benchmarks included:

- MobileNetEdgeTPU, an image classification benchmark. Image classification is considered the most ubiquitous task in computer vision; it's representative of how a photo app might be able to pick out the faces of you or your friends.

- SSD-MobileNetV2, for Single Shot MultiBox Detector with MobileNetV2, is trained to detect 80 different object categories in input frames with 300x300 resolution. It's commonly used to identify and track people and objects in photography and live video.

- DeepLabv3+ MobileNetV2 is a semantic segmentation model used to understand a scene for things like VR and navigation, and it plays a role in computational photography apps.

- MobileBERT is a mobile-optimized variant of the larger BERT natural language processing model that is fine-tuned for question answering. Given a question input, MobileBERT generates an answer.

Image: NVIDIA. Nvidia's A100 swept the board in AI inferencing tasks where the data was available all at once (offline) or delivered as it would be to an online service (server).
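The caption's distinction between offline and server inferencing can be illustrated with a toy simulation. This is a minimal sketch, not MLPerf's own measurement code: `fake_model`, its fixed 2 ms per-sample cost, and the random (Poisson) spacing of incoming queries in the server case are all illustrative assumptions. Offline mode hands every sample over at once and reports throughput; server mode feeds single queries to one device as they arrive and reports tail (99th-percentile) latency.

```python
import random

SERVICE_TIME_S = 0.002  # hypothetical: seconds the stub model spends per sample


def fake_model(batch_size: int) -> float:
    """Hypothetical stand-in for a trained network; returns simulated compute time."""
    return SERVICE_TIME_S * batch_size


def offline_throughput(num_samples: int) -> float:
    """Offline-style run: all samples are available at once and processed as one batch."""
    elapsed = fake_model(num_samples)
    return num_samples / elapsed  # samples per second


def server_p99_latency(num_queries: int, qps: float, seed: int = 0) -> float:
    """Server-style run: single queries arrive at random times and queue for one device."""
    rng = random.Random(seed)
    arrival = 0.0
    device_free_at = 0.0
    latencies = []
    for _ in range(num_queries):
        arrival += rng.expovariate(qps)  # exponential gaps give Poisson arrivals (assumption)
        start = max(arrival, device_free_at)  # wait if the device is still busy
        device_free_at = start + fake_model(1)
        latencies.append(device_free_at - arrival)  # queueing time plus compute time
    latencies.sort()
    return latencies[int(0.99 * (len(latencies) - 1))]


if __name__ == "__main__":
    print(f"offline: {offline_throughput(1000):.0f} samples/s")
    for qps in (50, 400):
        print(f"server @ {qps} qps: p99 latency {server_p99_latency(5000, qps) * 1000:.2f} ms")
```

Because the stub's cost grows linearly with batch size, it does not capture the batching efficiency real accelerators gain offline; it only shows why the two modes report different numbers, and why server latency climbs as the query rate approaches the device's capacity.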

The mobile benchmarks were run on a purpose-built app that should be available to everyone within months, according to Kanter. "We want something people can put into their hands for newer phones," he says.

The results released this week were dubbed version 0.7, as the consortium is still ramping up. Version 1.0 is likely to be complete in 2021.