Interlocking AIs let robots pick and place faster than ever

One of the jobs for which robots are best suited is the tedious, repetitive "pick and place" task common in warehouses - but humans are still much better at it. UC Berkeley researchers are picking up the pace with a pair of machine learning models that work together to let a robot arm plan its grasp and path in just milliseconds.

People don't have to think hard about how to pick up an object and put it down somewhere else. Not only have we had years of daily practice, but our senses and brains are also well adapted to the task. No one thinks, "What if I picked up the cup, then jerked it really far up and then sideways, then really slowly down onto the table?" The paths we might move an object along are limited and usually pretty efficient.

Robots, however, don't have common sense or intuition. Lacking an "obvious" solution, they need to evaluate thousands of potential paths for picking up an object and moving it. That means calculating the forces at play, checking for potential collisions, working out whether a given path affects the type of grip that should be used, and so on.

Once the robot decides what to do, it can execute quickly, but that decision takes time - several seconds at best, and possibly much more depending on the situation. Fortunately, roboticists at UC Berkeley have come up with a solution that cuts the time needed to make it by about 99 percent.


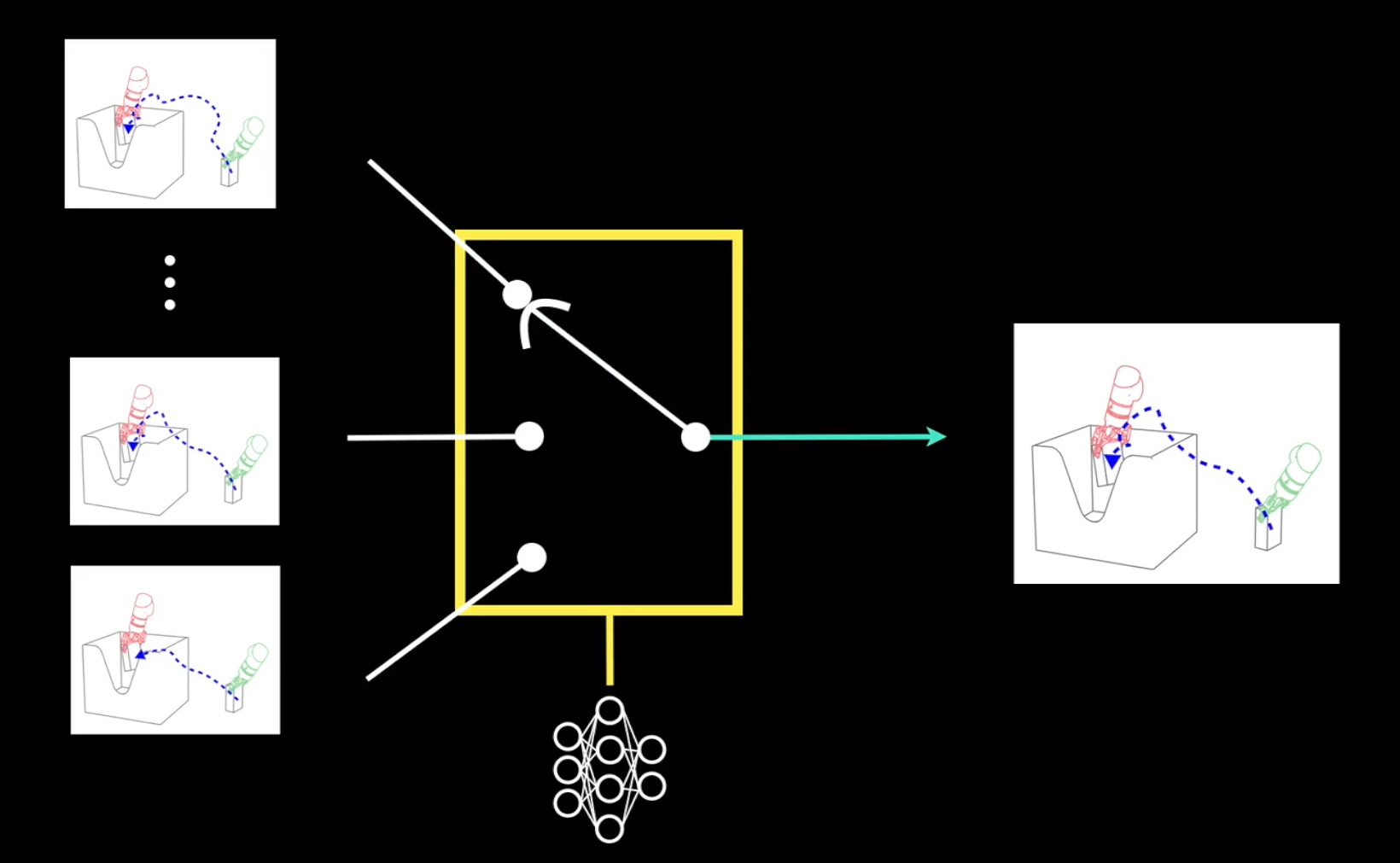

The system uses two machine learning models working in relay. The first is a rapid-fire generator of potential paths for the robot arm, trained on tons of example movements. It creates a bunch of options, and a second ML model, trained to pick the best, chooses from among them. This path tends to be a bit rough, however, and needs fine-tuning by a dedicated motion planner - but since the motion planner is given a "warm start" with the general shape of the path that needs to be taken, its finishing touch takes only a moment.

Diagram showing the decision process - the first agent creates potential paths and the second selects the best. A third system optimizes the selected path.
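To make the generate-select-refine relay concrete, here is a toy Python sketch. It is not the Berkeley team's code. The generator and selector, which are learned models in the real system, are replaced by hypothetical stand-ins (noisy straight-line paths and a hand-written cost), and the "motion planner" is a simple gradient-descent smoother acting on a one-dimensional path, such as a single joint angle over time. All function names and parameters are illustrative assumptions. What it shows is the warm-start effect: the refiner converges in fewer iterations when it starts from a selected candidate than when it starts from a naive guess.

```python
import random


def path_cost(path):
    """Smoothness cost: sum of squared steps between consecutive waypoints."""
    return sum((b - a) ** 2 for a, b in zip(path, path[1:]))


def propose_paths(start, goal, n_waypoints, n_candidates, rng, noise=0.05):
    """Hypothetical stand-in for the learned generator: noisy straight-line
    interpolations from start to goal, with endpoints held fixed."""
    candidates = []
    for _ in range(n_candidates):
        path = [start + (goal - start) * i / (n_waypoints - 1)
                for i in range(n_waypoints)]
        for i in range(1, n_waypoints - 1):
            path[i] += rng.gauss(0.0, noise)
        candidates.append(path)
    return candidates


def select_best(candidates):
    """Hypothetical stand-in for the learned selector: lowest cost wins."""
    return min(candidates, key=path_cost)


def refine(path, lr=0.2, tol=1e-6, max_iters=10_000):
    """Toy local optimizer: gradient descent on path_cost with fixed endpoints.
    Returns the refined path and the number of iterations used."""
    path = list(path)
    for it in range(1, max_iters + 1):
        # Gradient of path_cost w.r.t. interior waypoint i is
        # 2 * (2*x_i - x_{i-1} - x_{i+1}); all gradients use the old values.
        grads = [2 * (2 * path[i] - path[i - 1] - path[i + 1])
                 for i in range(1, len(path) - 1)]
        max_step = 0.0
        for i, g in enumerate(grads, start=1):
            step = lr * g
            path[i] -= step
            max_step = max(max_step, abs(step))
        if max_step < tol:  # converged: no waypoint moved more than tol
            return path, it
    return path, max_iters


rng = random.Random(0)
start, goal, n = 0.0, 1.0, 10
warm = select_best(propose_paths(start, goal, n, n_candidates=20, rng=rng))
cold = [start] * (n - 1) + [goal]  # naive initial guess: stay put, then jump

warm_path, warm_iters = refine(warm)
cold_path, cold_iters = refine(cold)
print(f"warm start: {warm_iters} iterations, cold start: {cold_iters} iterations")
```

The absolute iteration counts mean little on a problem this small; only the comparison between the warm and cold runs reflects the idea, since the refiner's work shrinks as its starting point gets closer to the final path.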

When the motion planner worked on its own, it typically took between 10 and 40 seconds to finish. With the warm start, however, it rarely took more than a tenth of a second.
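As a rough check on the "about 99 percent" figure, taking a tenth of a second as the warm-start time and the benchtop range above as the unassisted time:

```latex
1 - \frac{0.1\ \text{s}}{10\ \text{s}} = 0.99, \qquad
1 - \frac{0.1\ \text{s}}{40\ \text{s}} = 0.9975
```

So the reduction falls between roughly 99 and 99.75 percent on these benchtop numbers.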

That's a benchtop calculation, however, and not what you'd see on an actual warehouse floor. In the real world, the robot also has to physically carry out the task, which can only be done so fast. But even if motion planning in a real-world environment took only two or three seconds, the savings from reducing that to near zero would add up extremely fast.

"Every second counts. Current systems spend up to half their cycle time on motion planning, so this method has potential to dramatically speed up picks per hour," said lab director and senior author Ken Goldberg. Sensing the environment properly is also time-consuming, but it is being sped up by improved computer vision capabilities, he added.
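Goldberg's point about cycle time can be illustrated with simple arithmetic. The 10-second cycle below is a made-up figure, not from the study; the sketch assumes planning takes half the cycle (the upper end of his estimate) and that warm-starting shrinks it to about a tenth of a second, the benchtop figure, which real warehouse conditions may not match.

```python
SECONDS_PER_HOUR = 3600


def picks_per_hour(cycle_seconds):
    """Throughput if the robot repeats one pick every cycle_seconds."""
    return SECONDS_PER_HOUR / cycle_seconds


cycle = 10.0                          # hypothetical total cycle time, seconds
planning = 0.5 * cycle                # "up to half their cycle time" on planning
new_cycle = cycle - planning + 0.1    # planning cut to ~0.1 s (benchtop figure)

before = picks_per_hour(cycle)
after = picks_per_hour(new_cycle)
print(f"{before:.0f} -> {after:.0f} picks per hour")  # prints: 360 -> 706 picks per hour
```

Under these assumptions throughput roughly doubles; with a smaller planning share of the cycle, the gain would be correspondingly smaller.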

Right now, robots doing pick and place are nowhere near as efficient as humans, but small improvements will combine to make them competitive and, eventually, more than competitive. The work, when done by humans, is dangerous and tiring, yet millions do it worldwide because there's no other way to meet the demand created by the growing online retail economy.

The team's research is published this week in the journal Science Robotics.
