Facial recognition reveals political party in troubling new research

Researchers have created a machine learning system that they claim can determine a person's political party, with reasonable accuracy, based only on their face. The study, from a group that also showed that sexual preference can seemingly be inferred this way, candidly addresses and carefully avoids the pitfalls of "modern phrenology," leading to the uncomfortable conclusion that our appearance may express more personal information than we think.

The study, which appeared this week in the Nature journal Scientific Reports, was conducted by Stanford University's Michal Kosinski. Kosinski made headlines in 2017 with work that found that a person's sexual preference could be predicted from facial data.


The study drew criticism not so much for its methods but for the very idea that something that's notionally non-physical could be detected this way. But Kosinski's work, as he explained then and afterwards, was done specifically to challenge those assumptions and was as surprising and disturbing to him as it was to others. The idea was not to build a kind of AI gaydar - quite the opposite, in fact. As the team wrote at the time, it was necessary to publish in order to warn others that such a thing may be built by people whose interests went beyond the academic:

We were really disturbed by these results and spent much time considering whether they should be made public at all. We did not want to enable the very risks that we are warning against. The ability to control when and to whom to reveal one's sexual orientation is crucial not only for one's well-being, but also for one's safety.

We felt that there is an urgent need to make policymakers and LGBTQ communities aware of the risks that they are facing. We did not create a privacy-invading tool, but rather showed that basic and widely used methods pose serious privacy threats.

Similar warnings may be sounded here, for while political affiliation at least in the U.S. (and at least at present) is not as sensitive or personal an element as sexual preference, it is still sensitive and personal. A week hardly passes without reading of some political or religious "dissident" or another being arrested or killed. If oppressive regimes could obtain what passes for probable cause by saying "the algorithm flagged you as a possible extremist," instead of for example intercepting messages, it makes this sort of practice that much easier and more scalable.

The algorithm itself is not some hyper-advanced technology. Kosinski's paper describes a fairly ordinary process of feeding a machine learning system images of more than a million faces, collected from dating sites in the U.S., Canada, and the U.K., as well as American Facebook users. The people whose faces were used identified as politically conservative or liberal as part of the site's questionnaire.

The algorithm was based on open-source facial recognition software, and after basic processing to crop to just the face (that way no background items creep in as factors), the faces are reduced to 2,048 scores representing various features - as with other face recognition algorithms these aren't necessarily intuitive things like "eyebrow color" and "nose type" but more computer-native concepts.

Image Credits: Michal Kosinski / Nature Scientific Reports

The system was given political affiliation data sourced from the people themselves, and with this it diligently began to study the differences between the facial stats of people identifying as conservatives and those identifying as liberal. Because it turns out, there are differences.
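As a rough illustration of this learning step, here is a minimal sketch in Python. It is not Kosinski's code: the article does not name the classifier, so plain logistic regression is assumed, the face descriptors are random synthetic vectors with 16 values each rather than 2,048, and every name in it (`make_synthetic_faces`, `train_logistic`, `predict_score`) is hypothetical.

```python
import math
import random

def make_synthetic_faces(n_per_class, dim, shift, rng):
    """Return (X, y): fake descriptor vectors; label 1 = conservative, 0 = liberal."""
    X, y = [], []
    for label in (0, 1):
        center = shift if label == 1 else -shift  # small per-feature group difference
        for _ in range(n_per_class):
            X.append([rng.gauss(center, 1.0) for _ in range(dim)])
            y.append(label)
    return X, y

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict_score(w, b, x):
    """Score between 0 and 1; higher means 'looks more conservative' to the model."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)

def train_logistic(X, y, lr=0.1, epochs=100):
    """Fit weights with batch gradient descent on the logistic loss."""
    dim, n = len(X[0]), len(X)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for xi, yi in zip(X, y):
            err = predict_score(w, b, xi) - yi
            for j in range(dim):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

rng = random.Random(0)
X, y = make_synthetic_faces(n_per_class=200, dim=16, shift=0.15, rng=rng)
w, b = train_logistic(X, y)
train_accuracy = sum(
    (predict_score(w, b, xi) >= 0.5) == (yi == 1) for xi, yi in zip(X, y)
) / len(X)
print(f"training accuracy on synthetic data: {train_accuracy:.2f}")
```

The point of the sketch is only that no single feature needs to differ much between groups: many small differences can add up to a usable signal. The accuracy printed here is measured on the training data itself, so a real evaluation would score faces the model has not seen.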

Of course it's not as simple as "conservatives have bushier eyebrows" or "liberals frown more." Nor does it come down to demographics, which would make things too easy and simple. After all, if political party identification correlates with both age and skin color, that makes for a simple prediction algorithm right there. But although the software mechanisms used by Kosinski are quite standard, he was careful to cover his bases in order that this study, like the last one, can't be dismissed as pseudoscience.

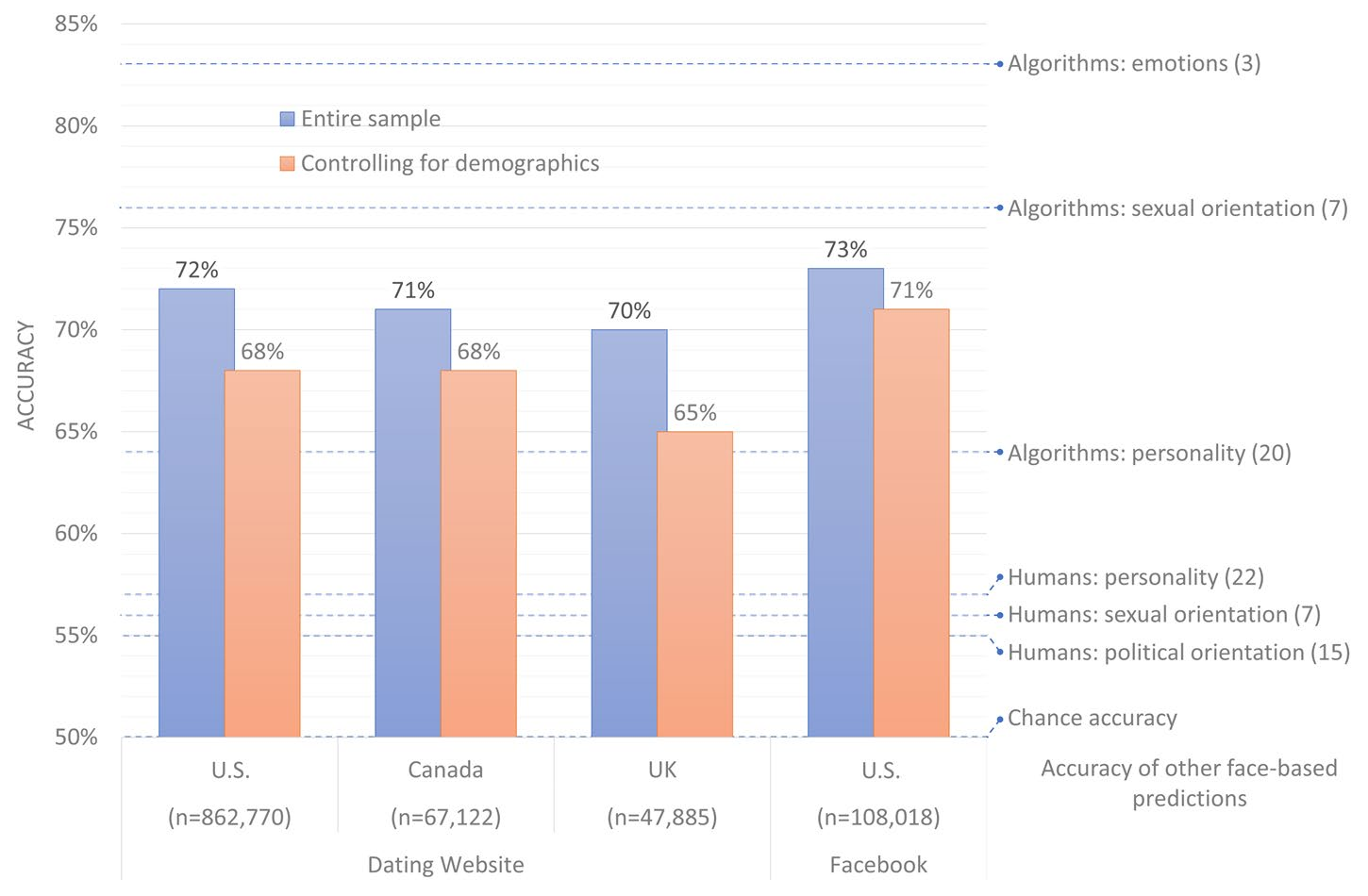

The most obvious way of addressing this is by having the system make guesses as to the political party of people of the same age, gender, and ethnicity. The test involved being presented with two faces, one of each party, and guessing which was which. Obviously chance accuracy is 50 percent. Humans aren't very good at this task, performing only slightly above chance, about 55 percent accurate.

The algorithm managed to reach as high as 71 percent accurate when predicting political party between two like individuals, and 73 percent when presented with two individuals of any age, ethnicity, or gender (but still guaranteed to be one conservative, one liberal).
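The pairwise test described above can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the study's own evaluation code: each face is assumed to already have a single numeric score from some classifier (higher meaning "more likely conservative"), the scores and demographic groups below are simulated, and the function names are hypothetical.

```python
import random
from collections import defaultdict

def pairwise_accuracy(conservative_scores, liberal_scores):
    """Share of (conservative, liberal) pairs ranked correctly; ties count as half."""
    correct, total = 0.0, 0
    for c in conservative_scores:
        for l in liberal_scores:
            if c > l:
                correct += 1.0
            elif c == l:
                correct += 0.5
            total += 1
    return correct / total

def matched_pairwise_accuracy(people):
    """Same test, but only pairing faces that share a demographic group.

    people: list of (score, label, group), with label 'conservative' or 'liberal'
    and group being any hashable key such as (age_band, gender, ethnicity).
    """
    by_group = defaultdict(lambda: {"conservative": [], "liberal": []})
    for score, label, group in people:
        by_group[group][label].append(score)
    correct, total = 0.0, 0
    for scores in by_group.values():
        n_pairs = len(scores["conservative"]) * len(scores["liberal"])
        if n_pairs:
            correct += pairwise_accuracy(scores["conservative"], scores["liberal"]) * n_pairs
            total += n_pairs
    return correct / total if total else float("nan")

# Simulated scores: the two parties overlap heavily but differ on average.
rng = random.Random(0)
sim_conservatives = [rng.gauss(0.4, 1.0) for _ in range(500)]
sim_liberals = [rng.gauss(-0.4, 1.0) for _ in range(500)]
sim_accuracy = pairwise_accuracy(sim_conservatives, sim_liberals)
print(f"simulated pairwise accuracy: {sim_accuracy:.2f}")
```

A score that carries no information lands at 0.5 on this measure, which is why 50 percent is the chance baseline. Restricting pairs to the same group, as `matched_pairwise_accuracy` does, removes any help the classifier gets from age, gender, or ethnicity alone.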

Image Credits: Michal Kosinski / Nature Scientific Reports

Getting three out of four may not seem like a triumph for modern AI, but considering people can barely do better than a coin flip, there seems to be something worth considering here. Kosinski has been careful to cover other bases as well; this doesn't appear to be a statistical anomaly or exaggeration of an isolated result.

The idea that your political party may be written on your face is an unnerving one, for while one's political leanings are far from the most private of info, it's also something that is very reasonably thought of as being intangible. People may choose to express their political beliefs with a hat, pin, or t-shirt, but one generally considers one's face to be nonpartisan.

If you're wondering which facial features in particular are revealing, unfortunately the system is unable to report that. In a sort of para-study, Kosinski isolated a couple dozen facial features (facial hair, directness of gaze, various emotions) and tested whether those were good predictors of politics, but none led to more than a small increase in accuracy over chance or human expertise.

"Head orientation and emotional expression stood out: Liberals tended to face the camera more directly, were more likely to express surprise, and less likely to express disgust," Kosinski wrote in author's notes for the paper. But what they added left more than 10 percentage points of accuracy not accounted for: "That indicates that the facial recognition algorithm found many other features revealing political orientation."


The knee-jerk defense of "this can't be true - phrenology was snake oil" doesn't hold much water here. It's scary to think it's true, but it doesn't help us to deny what could be a very important truth, since it could be used against people very easily.

As with the sexual orientation research, the point here is not to create a perfect detector for this information, but to show that it can be done in order that people begin to consider the dangers that creates. If for example an oppressive theocratic regime wanted to crack down on either non-straight people or those with a certain political leaning, this sort of technology gives them a plausible technological method to do so "objectively." And what's more, it can be done with very little work or contact with the target, unlike digging through their social media history or analyzing their purchases (also very revealing).

We have already heard of China deploying facial recognition software to find members of the embattled Uyghur religious minority. And in our own country this sort of AI is trusted by authorities as well - it's not hard to imagine police using the "latest technology" to, for instance, classify faces at a protest, saying "these 10 were determined by the system as being the most liberal," or what have you.

The idea that a couple of researchers using open-source software and a medium-sized database of faces (for a government, this is trivial to assemble in the unlikely event they do not have one already) could do so anywhere in the world, for any purpose, is chilling.

"Don't shoot the messenger," said Kosinski. "In my work, I am warning against widely used facial recognition algorithms. Worryingly, those AI physiognomists are now being used to judge people's intimate traits - scholars, policymakers, and citizens should take notice."
