Auditors are testing hiring algorithms for bias, but there’s no easy fix

I'm at home playing a video game on my computer. My job is to pump up one balloon at a time and earn as much money as possible. Every time I click "Pump," the balloon expands and I receive five virtual cents. But if the balloon pops before I press "Collect," all my digital earnings disappear.

After filling 39 balloons, I've earned $14.40. A message appears on the screen: "You stick to a consistent approach in high-risk situations. Trait measured: Risk."

This game is one of a series made by a company called Pymetrics, which many large US firms hire to screen job applicants. If you apply to McDonald's, Boston Consulting Group, Kraft Heinz, or Colgate-Palmolive, you might be asked to play Pymetrics's games.

While I play, an artificial-intelligence system measures traits including generosity, fairness, and attention. If I were actually applying for a position, the system would compare my scores with those of employees already working in that job. If my personality profile reflected the traits most specific to people who are successful in the role, I'd advance to the next hiring stage.

More and more companies are using AI-based hiring tools like these to manage the flood of applications they receive, especially now that there are roughly twice as many jobless workers in the US as before the pandemic. A survey of over 7,300 human-resources managers worldwide by Mercer, an asset management firm, found that the proportion who said their department uses predictive analytics jumped from 10% in 2016 to 39% in 2020.

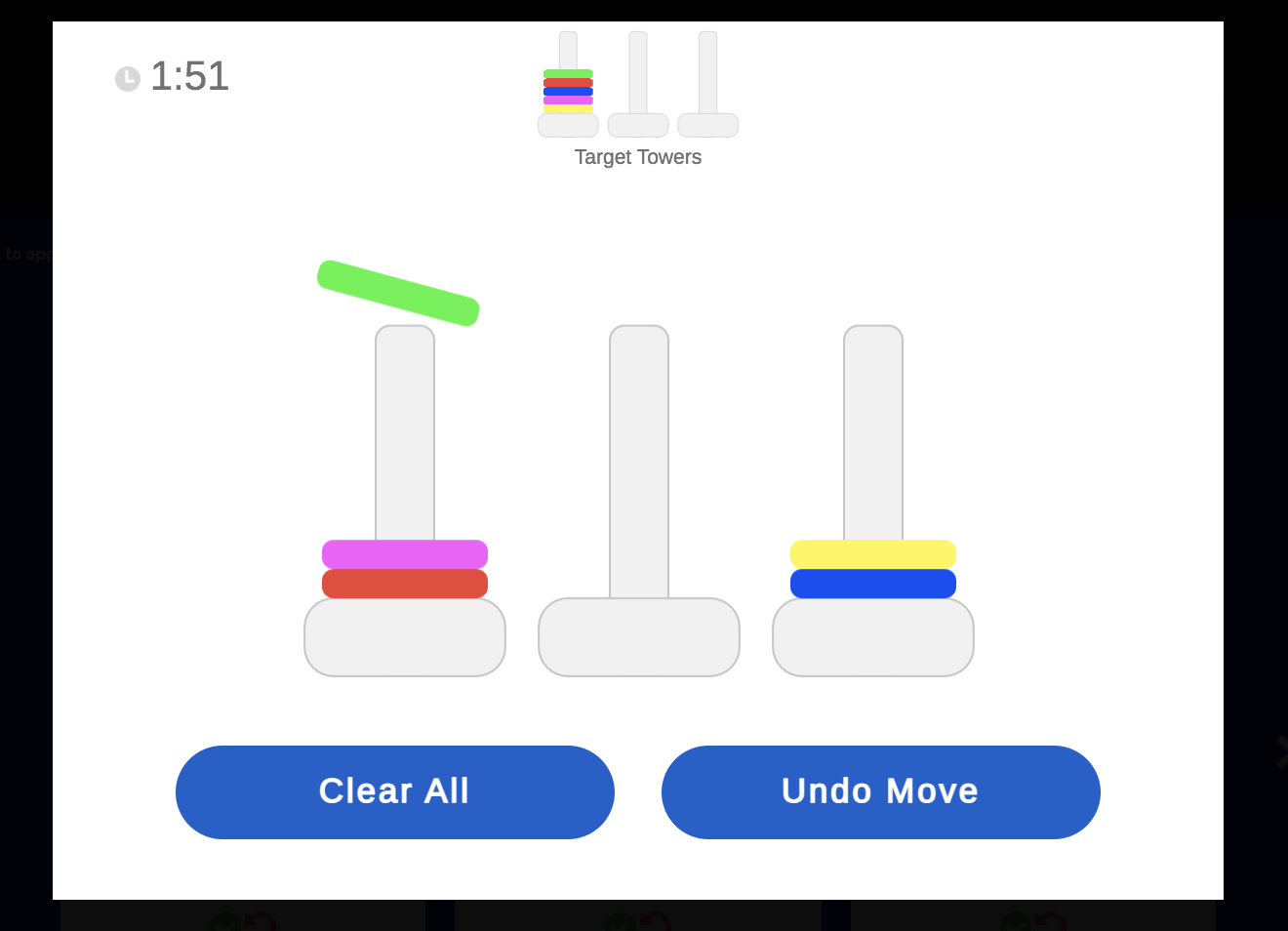

Stills of Pymetrics's core product, a suite of 12 AI-based games that the company says can discern a job applicant's social, cognitive, and emotional attributes.

As with other AI applications, though, researchers have found that some hiring tools produce biased results, inadvertently favoring men or people from certain socioeconomic backgrounds, for instance. Many are now advocating for greater transparency and more regulation. One solution in particular is proposed again and again: AI audits.

Last year, Pymetrics paid a team of computer scientists from Northeastern University to audit its hiring algorithm. It was one of the first times such a company had requested a third-party audit of its own tool. CEO Frida Polli told me she thought the experience could be a model for compliance with a proposed law requiring such audits for companies in New York City, where Pymetrics is based.

"What Pymetrics is doing, which is bringing in a neutral third party to audit, is a really good direction in which to be moving," says Pauline Kim, a law professor at Washington University in St. Louis, who has expertise in employment law and artificial intelligence. "If they can push the industry to be more transparent, that's a really positive step forward."

For all the attention that AI audits have received, though, their ability to actually detect and protect against bias remains unproven. The term "AI audit" can mean many different things, which makes it hard to trust the results of audits in general. The most rigorous audits can still be limited in scope. And even with unfettered access to the innards of an algorithm, it can be surprisingly tough to say with certainty whether it treats applicants fairly. At best, audits give an incomplete picture, and at worst, they could help companies hide problematic or controversial practices behind an auditor's stamp of approval.

Inside an AI audit

Many kinds of AI hiring tools are already in use today. They include software that analyzes a candidate's facial expressions, tone, and language during video interviews as well as programs that scan resumes, predict personality, or investigate an applicant's social media activity.

Regardless of what kind of tool they're selling, AI hiring vendors generally promise that these technologies will find better-qualified and more diverse candidates at lower cost and in less time than traditional HR departments. However, there's very little evidence that they do, and in any case that's not what the AI audit of Pymetrics's algorithm tested for. Instead, it aimed to determine whether a particular hiring tool grossly discriminates against candidates on the basis of race or gender.

Christo Wilson at Northeastern had scrutinized algorithms before, including those that drive Uber's surge pricing and Google's search engine. But until Pymetrics called, he had never worked directly with a company he was investigating.

Wilson's team, which included his colleague Alan Mislove and two graduate students, relied on data from Pymetrics and had access to the company's data scientists. The auditors were editorially independent but agreed to notify Pymetrics of any negative findings before publication. The company paid Northeastern $104,465 via a grant, including $64,813 that went toward salaries for Wilson and his team.

Pymetrics's core product is a suite of 12 games that it says are mostly based on cognitive science experiments. The games aren't meant to be won or lost; they're designed to discern an applicant's cognitive, social, and emotional attributes, including risk tolerance and learning ability. Pymetrics markets its software as "entirely bias free." Pymetrics and Wilson decided that the auditors would focus narrowly on one specific question: Are the company's models fair?

They based the definition of fairness on what's colloquially known as the four-fifths rule, which has become an informal hiring standard in the United States. The Equal Employment Opportunity Commission (EEOC) released guidelines in 1978 stating that hiring procedures should select roughly the same proportion of men and women, and of people from different racial groups. Under the four-fifths rule, Kim explains, "if men were passing 100% of the time to the next step in the hiring process, women need to pass at least 80% of the time."

If a company's hiring tools violate the four-fifths rule, the EEOC might take a closer look at its practices. "For an employer, it's not a bad check," Kim says. "If employers make sure these tools are not grossly discriminatory, in all likelihood they will not draw the attention of federal regulators."
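The check Kim describes is simple arithmetic: divide each group's selection rate by the highest group's rate and flag any ratio below 0.8. The Python sketch below illustrates that calculation only; it is not the EEOC's, Pymetrics's, or the auditors' code, and the function names and applicant counts are hypothetical.

```python
def selection_rates(passed: dict[str, int], applied: dict[str, int]) -> dict[str, float]:
    """Fraction of each group's applicants who advance to the next stage."""
    return {group: passed[group] / applied[group] for group in applied}


def impact_ratios(rates: dict[str, float]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's rate."""
    top = max(rates.values())
    return {group: rate / top for group, rate in rates.items()}


def passes_four_fifths(rates: dict[str, float], threshold: float = 0.8) -> bool:
    """True if no group's impact ratio falls below `threshold` (four-fifths)."""
    return all(ratio >= threshold for ratio in impact_ratios(rates).values())


# Hypothetical counts echoing Kim's example: every man advances, 75% of women do.
applied = {"men": 100, "women": 100}
passed = {"men": 100, "women": 75}
rates = selection_rates(passed, applied)
print(impact_ratios(rates))       # {'men': 1.0, 'women': 0.75}
print(passes_four_fifths(rates))  # False: 0.75 is below 0.8
```

Comparing every group against the highest-rate group, rather than against one fixed reference group, follows the way the 1978 guidelines phrase the rule.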

To figure out whether Pymetrics's software cleared this bar, the Northeastern team first had to try to understand how the tool works.

When a new client signs up with Pymetrics, it must select at least 50 employees who have been successful in the role it wants to fill. These employees play Pymetrics's games to generate training data. Next, Pymetrics's system compares the data from those 50 employees with game data from more than 10,000 people randomly selected from over two million. The system then builds a model that identifies and ranks the skills most specific to the client's successful employees.
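The article doesn't spell out how Pymetrics's model decides which traits are "most specific" to a client's successful employees. One simple way to express the idea is a standardized mean difference: how far the incumbents' average score on each trait sits from the baseline players' average, in units of the baseline's spread. The sketch below uses that swapped-in measure on synthetic data; the trait names, the scores, and the `rank_traits` helper are all hypothetical.

```python
import random
import statistics

TRAITS = ["risk", "generosity", "fairness", "attention"]  # hypothetical trait names


def rank_traits(incumbents, baseline):
    """Rank traits by how far incumbents' mean score sits from the baseline mean,
    in units of the baseline's standard deviation (a standardized mean difference)."""
    effects = {}
    for t in TRAITS:
        base = [p[t] for p in baseline]
        inc = [p[t] for p in incumbents]
        effects[t] = (statistics.fmean(inc) - statistics.fmean(base)) / statistics.stdev(base)
    # Largest absolute difference first: the traits that most set incumbents apart.
    return sorted(effects.items(), key=lambda kv: abs(kv[1]), reverse=True)


rng = random.Random(0)
# Synthetic game scores: 10,000 baseline players are standard normal on every trait;
# a hypothetical client's 50 successful employees score higher on "attention" only.
baseline = [{t: rng.gauss(0, 1) for t in TRAITS} for _ in range(10_000)]
incumbents = [
    {t: rng.gauss(1.0 if t == "attention" else 0.0, 1) for t in TRAITS}
    for _ in range(50)
]
for trait, d in rank_traits(incumbents, baseline):
    print(f"{trait:>10}: {d:+.2f}")
```

A real system may well train a classifier over many game features at once; ranking one trait at a time is only meant to make the comparison against the baseline visible.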

To check for bias, Pymetrics runs this model against another data set of about 12,000 people (randomly selected from over 500,000) who have not only played the games but also disclosed their demographics in a survey. The idea is to determine whether the model would pass the four-fifths test if it evaluated these 12,000 people.

If the system detects any bias, it builds and tests more models until it finds one that both predicts success and produces roughly the same passing rates for men and women and for members of all racial groups. In theory, then, even if most of a client's successful employees are white men, Pymetrics can correct for bias by comparing the game data from those men with data from women and people from other racial groups. What it's looking for are data points predicting traits that don't correlate with race or gender but do distinguish successful employees.
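The loop described above (build a model, test it on a demographically labeled reference set, and keep searching if it fails the four-fifths rule) can be sketched in Python. Everything here is an assumption for illustration, not Pymetrics's actual procedure: the synthetic data, in which "risk" scores differ by gender; the candidate "models," which simply average a subset of trait scores; the crude `fit` measure; the 30% pass fraction; and the 0.3 minimum fit.

```python
import itertools
import random
import statistics

TRAITS = ["risk", "attention", "generosity"]  # hypothetical trait names
rng = random.Random(1)

# Hypothetical reference set of 12,000 people with self-reported gender.
# By construction, "risk" scores run higher for men; the other traits don't differ.
reference = []
for _ in range(12_000):
    group = rng.choice(["men", "women"])
    person = {t: rng.gauss(0, 1) for t in TRAITS}
    if group == "men":
        person["risk"] += 0.8
    reference.append((group, person))

# Hypothetical 50 successful incumbents: very high on "risk", high on "attention".
incumbents = [
    {"risk": rng.gauss(2.5, 1), "attention": rng.gauss(1, 1), "generosity": rng.gauss(0, 1)}
    for _ in range(50)
]


def score(person, traits):
    """A candidate model: the average of the chosen trait scores."""
    return statistics.fmean(person[t] for t in traits)


def fit(traits):
    """Crude stand-in for predictive power: incumbents' mean score minus the reference mean."""
    return (statistics.fmean(score(p, traits) for p in incumbents)
            - statistics.fmean(score(p, traits) for _, p in reference))


def passes_four_fifths(traits, pass_fraction=0.3):
    """Advance the top `pass_fraction` of the reference set, then compare group pass rates."""
    scores = sorted((score(p, traits) for _, p in reference), reverse=True)
    cutoff = scores[int(len(scores) * pass_fraction) - 1]
    rates = {}
    for group in ("men", "women"):
        members = [p for g, p in reference if g == group]
        rates[group] = sum(score(p, traits) >= cutoff for p in members) / len(members)
    return min(rates.values()) / max(rates.values()) >= 0.8


# Every non-empty subset of traits is a candidate model. Try them from best to worst
# fit and keep the first that is predictive enough and also passes the four-fifths test.
candidates = [c for r in range(1, len(TRAITS) + 1) for c in itertools.combinations(TRAITS, r)]
chosen = None
for traits in sorted(candidates, key=fit, reverse=True):
    if fit(traits) > 0.3 and passes_four_fifths(traits):
        chosen = traits
        break
print("chosen model uses:", chosen)
```

In this synthetic setup the best-fitting candidate leans on "risk," which tracks gender, so it fails the four-fifths test. The loop should then move on and settle on a model built from "attention," which still separates incumbents from the reference set but doesn't correlate with gender.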

Christo Wilson of Northeastern University (photo: Simon Simard)

Wilson and his team of auditors wanted to figure out whether Pymetrics's anti-bias mechanism does in fact prevent bias and whether it can be fooled. To do that, they basically tried to game the system by, for example, duplicating game data from the same white man many times and trying to use it to build a model. The outcome was always the same: "The way their code is sort of laid out and the way the data scientists use the tool, there was no obvious way to trick them essentially into producing something that was biased and get that cleared," says Wilson.

Last fall, the auditors shared their findings with the company: Pymetrics's system satisfies the four-fifths rule. The Northeastern team recently published the study of the algorithm online and will present a report on the work in March at the algorithmic accountability conference FAccT.

"The big takeaway is that Pymetrics is actually doing a really good job," says Wilson.

An imperfect solution

But though Pymetrics's software meets the four-fifths rule, the audit didn't prove that the tool is free of any bias whatsoever, nor that it actually picks the most qualified candidates for any job.

"It effectively felt like the question being asked was more 'Is Pymetrics doing what they say they do?' as opposed to 'Are they doing the correct or right thing?'" says Manish Raghavan, a PhD student in computer science at Cornell University, who has published extensively on artificial intelligence and hiring.

For example, the four-fifths rule only requires people from different genders and racial groups to pass to the next round of the hiring process at roughly the same rates. An AI hiring tool could satisfy that requirement and still be wildly inconsistent at predicting how well people from different groups actually succeed in the job once they're hired. And if a tool predicts success more accurately for men than women, for example, that would mean it isn't actually identifying the best-qualified women, so the women who are hired "may not be as successful on the job," says Kim.
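A small worked example makes Kim's point concrete. The counts below are invented: both groups pass at exactly the same rate, so the four-fifths rule is satisfied, yet the tool is far better at picking out men who will succeed than women who will. Here "precision" is the share of advanced applicants who succeed on the job and "recall" is the share of would-be successful applicants the tool advances; in practice the second number is hard to observe, because rejected applicants are never hired.

```python
# Hypothetical outcomes for 100 applicants per group. "Succeeded" means the person
# would perform well on the job, which this toy example assumes we somehow know.
outcomes = {
    "men": {"passed_succeeded": 40, "passed_failed": 10,
            "rejected_succeeded": 5, "rejected_failed": 45},
    "women": {"passed_succeeded": 25, "passed_failed": 25,
              "rejected_succeeded": 20, "rejected_failed": 30},
}


def summarize(counts: dict[str, int]) -> dict[str, float]:
    applied = sum(counts.values())
    passed = counts["passed_succeeded"] + counts["passed_failed"]
    would_succeed = counts["passed_succeeded"] + counts["rejected_succeeded"]
    return {
        "pass_rate": passed / applied,                         # what the four-fifths rule checks
        "precision": counts["passed_succeeded"] / passed,      # advanced applicants who succeed
        "recall": counts["passed_succeeded"] / would_succeed,  # would-be successes who advance
    }


summaries = {group: summarize(c) for group, c in outcomes.items()}
for group, s in summaries.items():
    print(f"{group}: pass rate {s['pass_rate']:.0%}, "
          f"precision {s['precision']:.0%}, recall {s['recall']:.0%}")
# men: pass rate 50%, precision 80%, recall 89%
# women: pass rate 50%, precision 50%, recall 56%
```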

Another issue that neither the four-fifths rule nor Pymetrics's audit addresses is intersectionality. The rule compares men with women and one racial group with another to see if they pass at the same rates, but it doesn't compare, say, white men with Asian men or Black women. "You could have something that satisfied the four-fifths rule [for] men versus women, Blacks versus whites, but it might disguise a bias against Black women," Kim says.
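Kim's intersectionality point can be checked the same way, by computing selection rates over race-by-gender subgroups as well as over each attribute alone. In the hypothetical counts below, both single-attribute comparisons clear 0.8, while Black women pass at under two-thirds the rate of the highest-passing subgroup. The counts and helper names are invented for illustration.

```python
from collections import defaultdict

# Hypothetical (race, gender) -> (applied, passed) counts.
counts = {
    ("white", "men"): (100, 45),
    ("white", "women"): (100, 55),
    ("Black", "men"): (100, 55),
    ("Black", "women"): (100, 35),
}


def rates_by(key):
    """Selection rate per group, where `key` maps a (race, gender) cell to a group label."""
    applied, passed = defaultdict(int), defaultdict(int)
    for cell, (a, p) in counts.items():
        applied[key(cell)] += a
        passed[key(cell)] += p
    return {group: passed[group] / applied[group] for group in applied}


def min_impact_ratio(rates):
    """Lowest group selection rate divided by the highest; the rule asks for >= 0.8."""
    return min(rates.values()) / max(rates.values())


by_gender = rates_by(lambda cell: cell[1])
by_race = rates_by(lambda cell: cell[0])
by_subgroup = rates_by(lambda cell: cell)
for name, rates in [("gender", by_gender), ("race", by_race), ("race x gender", by_subgroup)]:
    print(f"{name}: min impact ratio {min_impact_ratio(rates):.2f}")
# gender: min impact ratio 0.90
# race: min impact ratio 0.90
# race x gender: min impact ratio 0.64
```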

Pymetrics is not the only company having its AI audited. HireVue, another large vendor of AI hiring software, had a company called O'Neil Risk Consulting and Algorithmic Auditing (ORCAA) evaluate one of its algorithms. That firm is owned by Cathy O'Neil, a data scientist and the author of Weapons of Math Destruction, one of the seminal popular books on AI bias, who has advocated for AI audits for years.

ORCAA and HireVue focused their audit on one product: HireVue's hiring assessments, which many companies use to evaluate recent college graduates. In this case, ORCAA didn't evaluate the technical design of the tool itself. Instead, the company interviewed stakeholders (including a job applicant, an AI ethicist, and several nonprofits) about potential problems with the tools and gave HireVue recommendations for improving them. The final report is published on HireVue's website but can only be read after signing a nondisclosure agreement.

Alex Engler, a fellow at the Brookings Institution who has studied AI hiring tools and who is familiar with both audits, believes Pymetrics's is the better one: "There's a big difference in the depths of the analysis that was enabled," he says. But once again, neither audit addressed whether the products really help companies make better hiring choices. And both were funded by the companies being audited, which creates "a little bit of a risk of the auditor being influenced by the fact that this is a client," says Kim.

For these reasons, critics say, voluntary audits aren't enough. Data scientists and accountability experts are now pushing for broader regulation of AI hiring tools, as well as standards for auditing them.

Filling the gaps

Some of these measures are starting to pop up in the US. Back in 2019, Senators Cory Booker and Ron Wyden and Representative Yvette Clarke introduced the Algorithmic Accountability Act to make bias audits mandatory for any large companies using AI, though the bill has not passed.

Meanwhile, there's some movement at the state level. The AI Video Interview Act in Illinois, which went into effect in January 2020, requires companies to tell candidates when they use AI in video interviews. Cities are taking action too: in Los Angeles, city council member Joe Buscaino proposed a fair hiring motion for automated systems in November.

The New York City bill in particular could serve as a model for cities and states nationwide. It would make annual audits mandatory for vendors of automated hiring tools. It would also require companies that use the tools to tell applicants which characteristics their system used to make a decision.

But the question of what those annual audits would actually look like remains open. For many experts, an audit along the lines of what Pymetrics did wouldn't go very far in determining whether these systems discriminate, since that audit didn't check for intersectionality or evaluate the tool's ability to accurately measure the traits it claims to measure for people of different races and genders.

And many critics would like to see auditing done by the government instead of private companies, to avoid conflicts of interest. "There should be a preemptive regulation so that before you use any of these systems, the Equal Employment Opportunity Commission should need to review it and then license it," says Frank Pasquale, a professor at Brooklyn Law School and an expert in algorithmic accountability. He has in mind a preapproval process for algorithmic hiring tools similar to what the Food and Drug Administration uses with drugs.

So far, the EEOC hasn't even issued clear guidelines concerning hiring algorithms that are already in use. But things might start to change soon. In December, 10 senators sent a letter to the EEOC asking if it has the authority to start policing AI hiring systems to prevent discrimination against people of color, who have already been disproportionally affected by job losses during the pandemic.