Geoffrey Hinton has a hunch about what’s next for AI

Back in November, the computer scientist and cognitive psychologist Geoffrey Hinton had a hunch. After a half-century's worth of attempts (some wildly successful), he'd arrived at another promising insight into how the brain works and how to replicate its circuitry in a computer.

"It's my current best bet about how things fit together," Hinton says from his home office in Toronto, where he's been sequestered during the pandemic. If his bet pays off, it might spark the next generation of artificial neural networks: mathematical computing systems, loosely inspired by the brain's neurons and synapses, that are at the core of today's artificial intelligence. His "honest motivation," as he puts it, is curiosity. But the practical motivation (and, ideally, the consequence) is more reliable and more trustworthy AI.

A Google engineering fellow and cofounder of the Vector Institute for Artificial Intelligence, Hinton wrote up his hunch in fits and starts, and at the end of February announced via Twitter that he'd posted a 44-page paper on the arXiv preprint server. He began with a disclaimer: "This paper does not describe a working system," he wrote. "Rather, it presents an imaginary system." He named it "GLOM." The term derives from "agglomerate" and the expression "glom together."

Hinton thinks of GLOM as a way to model human perception in a machine: it offers a new way to process and represent visual information in a neural network. On a technical level, the guts of it involve a glomming together of similar vectors. Vectors are fundamental to neural networks; a vector is an array of numbers that encodes information. The simplest example is the xyz coordinates of a point: three numbers that indicate where the point is in three-dimensional space. A six-dimensional vector contains three more pieces of information, maybe the red-green-blue values for the point's color. In a neural net, vectors in hundreds or thousands of dimensions represent entire images or words. And dealing in yet higher dimensions, Hinton believes that what goes on in our brains involves "big vectors of neural activity."

By way of analogy, Hinton likens his glomming together of similar vectors to the dynamic of an echo chamber: the amplification of similar beliefs. "An echo chamber is a complete disaster for politics and society, but for neural nets it's a great thing," Hinton says. The notion of echo chambers mapped onto neural networks he calls "islands of identical vectors," or more colloquially, "islands of agreement": when vectors agree about the nature of their information, they point in the same direction.
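To make "pointing in the same direction" concrete, here is a minimal Python sketch. It is an illustration only, not code from Hinton's paper: cosine similarity is one common way to measure directional agreement, and the function name and every vector value below are invented for the example.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# A six-dimensional vector: xyz position followed by red-green-blue color.
point = [1.0, 2.0, 0.5, 0.9, 0.1, 0.1]

# Hypothetical three-dimensional vectors for three image locations.
nose_a = [0.9, 0.1, 0.0]
nose_b = [1.8, 0.2, 0.0]  # same direction as nose_a, twice the length
eye = [0.0, 0.2, 0.9]

agreement = cosine_similarity(nose_a, nose_b)   # very close to 1.0
disagreement = cosine_similarity(nose_a, eye)   # close to 0.0
```

In this toy setup the two "nose" vectors agree almost perfectly even though their lengths differ, while the "eye" vector points somewhere else entirely.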

In spirit, GLOM also gets at the elusive goal of modeling intuition; Hinton thinks of intuition as crucial to perception. He defines intuition as our ability to effortlessly make analogies. From childhood through the course of our lives, we make sense of the world by using analogical reasoning, mapping similarities from one object or idea or concept to another, or, as Hinton puts it, one big vector to another. "Similarities of big vectors explain how neural networks do intuitive analogical reasoning," he says. More broadly, intuition captures that ineffable way a human brain generates insight. Hinton himself works very intuitively: scientifically, he is guided by intuition and the tool of analogy making. And his theory of how the brain works is all about intuition. "I'm very consistent," he says.

Hinton hopes GLOM might be one of several breakthroughs that he reckons are needed before AI is capable of truly nimble problem solving: the kind of human-like thinking that would allow a system to make sense of things never before encountered; to draw upon similarities from past experiences, play around with ideas, generalize, extrapolate, understand. "If neural nets were more like people," he says, "at least they can go wrong the same ways as people do, and so we'll get some insight into what might confuse them."

For the time being, however, GLOM itself is only an intuition; it's "vaporware," says Hinton. And he acknowledges that as an acronym it nicely matches "Geoff's Last Original Model." It is, at the very least, his latest.

Outside the box

Hinton's devotion to artificial neural networks (a mid-20th century invention) dates to the early 1970s. By 1986 he'd made considerable progress: whereas initially nets comprised only a couple of neuron layers, input and output, Hinton and collaborators came up with a technique for a deeper, multilayered network. But it took 26 years before computing power and data capacity caught up and capitalized on the deep architecture.

In 2012, Hinton gained fame and wealth from a deep learning breakthrough. With two students, he implemented a multilayered neural network that was trained to recognize objects in massive image data sets. The neural net learned to iteratively improve at classifying and identifying various objects: for instance, a mite, a mushroom, a motor scooter, a Madagascar cat. And it performed with unexpectedly spectacular accuracy.

Deep learning set off the latest AI revolution, transforming computer vision and the field as a whole. Hinton believes deep learning should be almost all that's needed to fully replicate human intelligence.

But despite rapid progress, there are still major challenges. Expose a neural net to an unfamiliar data set or a foreign environment, and it reveals itself to be brittle and inflexible. Self-driving cars and essay-writing language generators impress, but things can go awry. AI visual systems can be easily confused: a coffee mug recognized from the side would be an unknown from above if the system had not been trained on that view; and with the manipulation of a few pixels, a panda can be mistaken for an ostrich, or even a school bus.

GLOM addresses two of the most difficult problems for visual perception systems: understanding a whole scene in terms of objects and their natural parts; and recognizing objects when seen from a new viewpoint. (GLOM's focus is on vision, but Hinton expects the idea could be applied to language as well.)

An object such as Hinton's face, for instance, is made up of his lively if dog-tired eyes (too many people asking questions; too little sleep), his mouth and ears, and a prominent nose, all topped by a not-too-untidy tousle of mostly gray. And given his nose, he is easily recognized even on first sight in profile view.

Both of these factors (the part-whole relationship and the viewpoint) are, from Hinton's perspective, crucial to how humans do vision. "If GLOM ever works," he says, "it's going to do perception in a way that's much more human-like than current neural nets."

Grouping parts into wholes, however, can be a hard problem for computers, since parts are sometimes ambiguous. A circle could be an eye, or a doughnut, or a wheel. As Hinton explains it, the first generation of AI vision systems tried to recognize objects by relying mostly on the geometry of the part-whole relationship: the spatial orientation among the parts and between the parts and the whole. The second generation instead relied mostly on deep learning, letting the neural net train on large amounts of data. With GLOM, Hinton combines the best aspects of both approaches.

"There's a certain intellectual humility that I like about it," says Gary Marcus, founder and CEO of Robust.AI and a well-known critic of the heavy reliance on deep learning. Marcus admires Hinton's willingness to challenge something that brought him fame, to admit it's not quite working. "It's brave," he says. "And it's a great corrective to say, 'I'm trying to think outside the box.'"

The GLOM architecture

In crafting GLOM, Hinton tried to model some of the mental shortcuts (intuitive strategies, or heuristics) that people use in making sense of the world. "GLOM, and indeed much of Geoff's work, is about looking at heuristics that people seem to have, building neural nets that could themselves have those heuristics, and then showing that the nets do better at vision as a result," says Nick Frosst, a computer scientist at a language startup in Toronto who worked with Hinton at Google Brain.

With visual perception, one strategy is to parse parts of an object, such as different facial features, and thereby understand the whole. If you see a certain nose, you might recognize it as part of Hinton's face; it's a part-whole hierarchy. To build a better vision system, Hinton says, "I have a strong intuition that we need to use part-whole hierarchies." Human brains understand this part-whole composition by creating what's called a "parse tree": a branching diagram demonstrating the hierarchical relationship between the whole, its parts and subparts. The face itself is at the top of the tree, and the component eyes, nose, ears, and mouth form the branches below.
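As a rough illustration of what a parse tree looks like as a data structure, the following Python sketch builds the face example as nested nodes. The class name `ParseNode`, the helper `outline`, and the subparts "iris" and "tip" are assumptions made for the example; they do not come from the GLOM paper.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class ParseNode:
    """One node of a parse tree: a whole, a part, or a subpart."""
    label: str
    parts: List["ParseNode"] = field(default_factory=list)

def outline(node, depth=0):
    """Return the tree as indented lines, one line per node."""
    lines = ["  " * depth + node.label]
    for part in node.parts:
        lines.extend(outline(part, depth + 1))
    return lines

# The face is the whole; its features are parts; "iris" and "tip" are
# hypothetical subparts added to show a third level.
face = ParseNode("face", [
    ParseNode("eyes", [ParseNode("iris")]),
    ParseNode("nose", [ParseNode("tip")]),
    ParseNode("ears"),
    ParseNode("mouth"),
])

tree_lines = outline(face)
print("\n".join(tree_lines))
```

A different image would call for a differently shaped tree, which is exactly what makes the structure awkward to fit into a network whose architecture is fixed in advance.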

One of Hinton's main goals with GLOM is to replicate the parse tree in a neural net; this would distinguish it from neural nets that came before. For technical reasons, it's hard to do. "It's difficult because each individual image would be parsed by a person into a unique parse tree, so we would want a neural net to do the same," says Frosst. "It's hard to get something with a static architecture (a neural net) to take on a new structure (a parse tree) for each new image it sees." Hinton has made various attempts. GLOM is a major revision of his previous attempt in 2017, combined with other related advances in the field.

MS TECH | EVIATAR BACH VIA WIKIMEDIA

A generalized way of thinking about the GLOM architecture is as follows: The image of interest (say, a photograph of Hinton's face) is divided into a grid. Each region of the grid is a "location" on the image: one location might contain the iris of an eye, while another might contain the tip of his nose. For each location in the net there are about five layers, or levels. And level by level, the system makes a prediction, with a vector representing the content or information. At a level near the bottom, the vector representing the tip-of-the-nose location might predict: "I'm part of a nose!" And at the next level up, in building a more coherent representation of what it's seeing, the vector might predict: "I'm part of a face at side-angle view!"
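One way to picture this layout is as a stack of vectors for every grid location. The Python sketch below only shows the shape of such a structure; the function name `make_columns`, the 4-by-4 grid, and the eight-dimensional vectors are arbitrary assumptions, and the zero-filled vectors stand in for values a real network would compute.

```python
def make_columns(grid_rows, grid_cols, num_levels=5, dims=8):
    """Build one column per image location; each column holds one vector per level."""
    return {
        (row, col): [[0.0] * dims for _ in range(num_levels)]
        for row in range(grid_rows)
        for col in range(grid_cols)
    }

columns = make_columns(grid_rows=4, grid_cols=4)

# Hypothetical location covering the tip of the nose.
tip_of_nose = columns[(2, 1)]
lowest_level_vector = tip_of_nose[0]   # might come to mean "part of a nose"
next_level_vector = tip_of_nose[1]     # might come to mean "part of a face"
```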

But then the question is, do neighboring vectors at the same level agree? When in agreement, vectors point in the same direction, toward the same conclusion: "Yes, we both belong to the same nose." Or further up the parse tree: "Yes, we both belong to the same face."

Seeking consensus about the nature of an object (about what precisely the object is, ultimately), GLOM's vectors iteratively, location by location and layer upon layer, average with the neighboring vectors beside them, as well as with predicted vectors from the levels above and below.

However, the net doesn't "willy-nilly average" with just anything nearby, says Hinton. It averages selectively, with neighboring predictions that display similarities. "This is kind of well-known in America, this is called an echo chamber," he says. "What you do is you only accept opinions from people who already agree with you; and then what happens is that you get an echo chamber where a whole bunch of people have exactly the same opinion. GLOM actually uses that in a constructive way." The analogous phenomenon in Hinton's system is those "islands of agreement."
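The selective averaging can be sketched in a few lines of Python. This is a simplified toy, not Hinton's algorithm: it assumes that similarity is measured by the dot product, that the weights come from a softmax over those similarities, and that every location on a single level counts as a neighbor. It leaves out the predictions from the levels above and below entirely. The names `consensus_step` and `sharpness` are invented for the example.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def cosine(u, v):
    return dot(u, v) / math.sqrt(dot(u, u) * dot(v, v))

def consensus_step(vectors, sharpness=10.0):
    """Replace each vector with a similarity-weighted average of all vectors.

    Vectors that already agree with a given vector get large weights;
    dissimilar ones are almost ignored (the "echo chamber" effect).
    """
    updated = []
    for v in vectors:
        scores = [sharpness * dot(v, other) for other in vectors]
        top = max(scores)  # subtract the maximum for numerical stability
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        updated.append([
            sum(w * other[d] for w, other in zip(weights, vectors)) / total
            for d in range(len(v))
        ])
    return updated

# Six hypothetical locations: three roughly "nose", three roughly "mouth".
vectors = [[1.0, 0.1], [0.9, 0.2], [1.0, 0.0],
           [0.1, 1.0], [0.0, 0.9], [0.2, 1.0]]

for _ in range(5):
    vectors = consensus_step(vectors)

within_island = cosine(vectors[0], vectors[1])   # approaches 1.0
across_islands = cosine(vectors[0], vectors[3])  # stays low
```

After a handful of steps in this toy setup, the first three vectors become nearly identical, as do the last three, while the two groups remain clearly distinct.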

"Imagine a bunch of people in a room, shouting slight variations of the same idea," says Frosst, or imagine those people as vectors pointing in slight variations of the same direction. "They would, after a while, converge on the one idea, and they would all feel it stronger, because they had it confirmed by the other people around them." That's how GLOM's vectors reinforce and amplify their collective predictions about an image.

GLOM uses these islands of agreeing vectors to accomplish the trick of representing a parse tree in a neural net. Whereas some recent neural nets use agreement among vectors for activation, GLOM uses agreement for representation: building up representations of things within the net. For instance, when several vectors agree that they all represent part of the nose, their small cluster of agreement collectively represents the nose in the net's parse tree for the face. Another smallish cluster of agreeing vectors might represent the mouth in the parse tree; and the big cluster at the top of the tree would represent the emergent conclusion that the image as a whole is Hinton's face. "The way the parse tree is represented here," Hinton explains, "is that at the object level you have a big island; the parts of the object are smaller islands; the subparts are even smaller islands, and so on."
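To show how islands could be read off as a parse tree, the Python sketch below groups adjacent locations whose vectors point in nearly the same direction. It assumes a single row of six locations, a cosine threshold of 0.99, and hand-written vectors; all of these, along with the name `find_islands`, are choices made for the example rather than details from the paper.

```python
import math

def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def find_islands(vectors, threshold=0.99):
    """Return (first, last) index spans of adjacent, nearly identical vectors."""
    islands = []
    start = 0
    for i in range(1, len(vectors) + 1):
        # Close the current island at the end of the row or where agreement breaks.
        if i == len(vectors) or cosine_similarity(vectors[i - 1], vectors[i]) < threshold:
            islands.append((start, i - 1))
            start = i
    return islands

# Part level: the first three locations agree ("nose"), the last three agree ("mouth").
part_level = [[1.0, 0.0]] * 3 + [[0.0, 1.0]] * 3
# Object level: every location agrees ("face").
object_level = [[1.0, 1.0]] * 6

part_islands = find_islands(part_level)      # two smaller islands
object_islands = find_islands(object_level)  # one big island
```

Here the object level yields one island spanning all six locations, and the part level splits the same span into two smaller islands, mirroring the big-island and smaller-islands picture Hinton describes.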

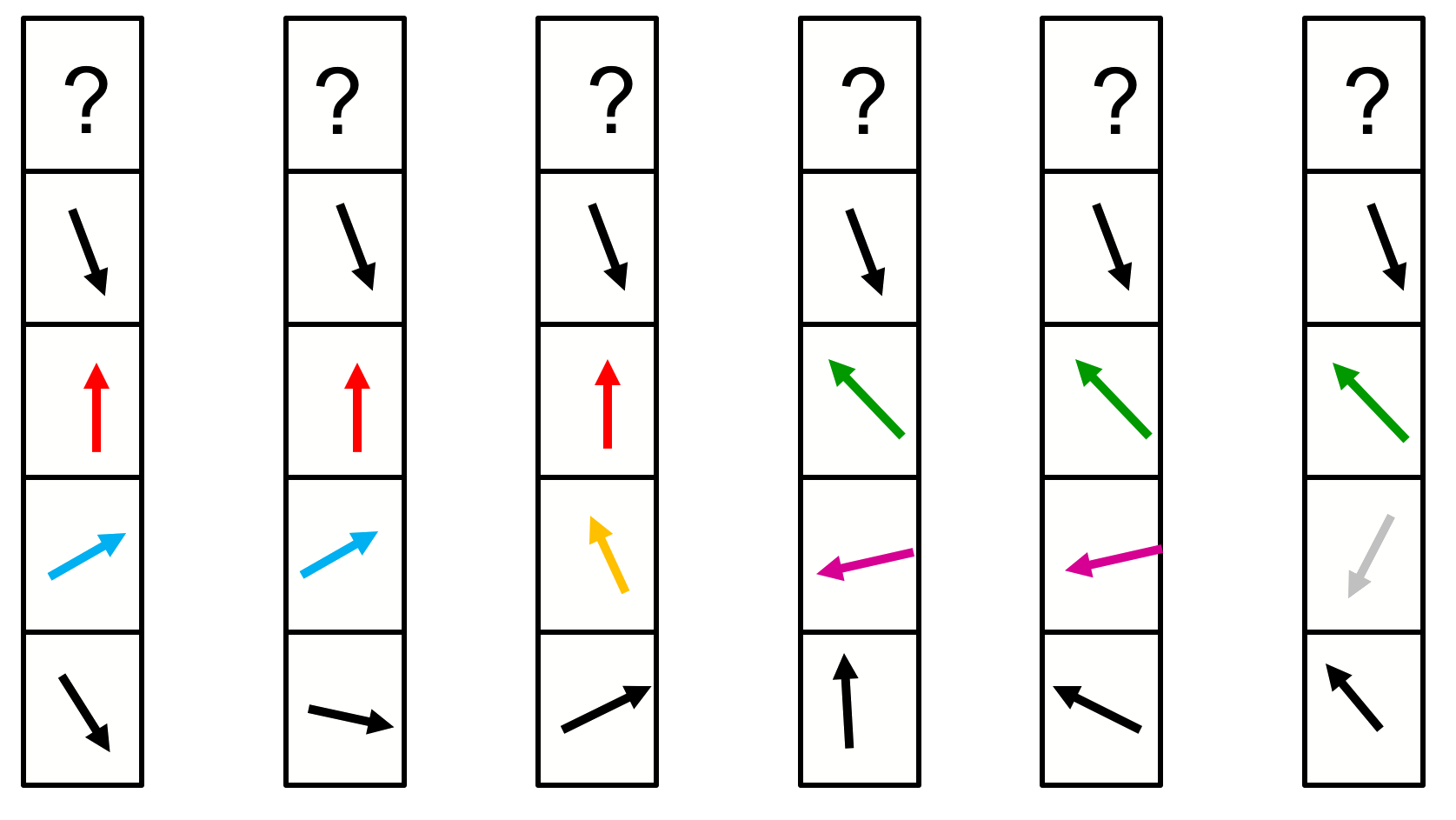

Figure 2 from Hinton's GLOM paper. The islands of identical vectors (arrows of the same color) at the various levels represent a parse tree. GEOFFREY HINTON

According to Hinton's long-time friend and collaborator Yoshua Bengio, a computer scientist at the University of Montreal, if GLOM manages to solve the engineering challenge of representing a parse tree in a neural net, it would be a feat: it would be important for making neural nets work properly. "Geoff has produced amazingly powerful intuitions many times in his career, many of which have proven right," Bengio says. "Hence, I pay attention to them, especially when he feels as strongly about them as he does about GLOM."

The strength of Hinton's conviction is rooted not only in the echo chamber analogy, but also in mathematical and biological analogies that inspired and justified some of the design decisions in GLOM's novel engineering.

"Geoff is a highly unusual thinker in that he is able to draw upon complex mathematical concepts and integrate them with biological constraints to develop theories," says Sue Becker, a former student of Hinton's, now a computational cognitive neuroscientist at McMaster University. "Researchers who are more narrowly focused on either the mathematical theory or the neurobiology are much less likely to solve the infinitely compelling puzzle of how both machines and humans might learn and think."

Turning philosophy into engineering

So far, Hinton's new idea has been well received, especially in some of the world's greatest echo chambers. "On Twitter, I got a lot of likes," he says. And a YouTube tutorial laid claim to the term "MeGLOMania."

Hinton is the first to admit that at present GLOM is little more than philosophical musing (he spent a year as a philosophy undergrad before switching to experimental psychology). "If an idea sounds good in philosophy, it is good," he says. "How would you ever have a philosophical idea that just sounds like rubbish, but actually turns out to be true? That wouldn't pass as a philosophical idea." Science, by comparison, is "full of things that sound like complete rubbish" but turn out to work remarkably well (neural nets, for example), he says.

GLOM is designed to sound philosophically plausible. But will it work?

Chris Williams, a professor of machine learning in the School of Informatics at the University of Edinburgh, expects that GLOM might well spawn great innovations. However, he says, "the thing that distinguishes AI from philosophy is that we can use computers to test such theories." It's possible that a flaw in the idea might be exposed, and perhaps also repaired, by such experiments, he says. "At the moment I don't think we have enough evidence to assess the real significance of the idea, although I believe it has a lot of promise."

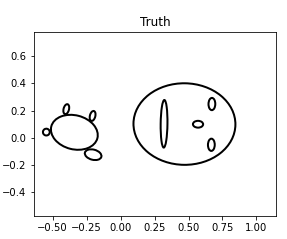

The GLOM test model inputs are ten ellipses that form a sheep or a face. LAURA CULP

Some of Hinton's colleagues at Google Research in Toronto are in the very early stages of investigating GLOM experimentally. Laura Culp, a software engineer who implements novel neural net architectures, is using a computer simulation to test whether GLOM can produce Hinton's islands of agreement in understanding parts and wholes of an object, even when the input parts are ambiguous. In the experiments, the parts are 10 ellipses, ovals of varying sizes, that can be arranged to form either a face or a sheep.

With random inputs of one ellipse or another, the model should be able to make predictions, Culp says, and "deal with the uncertainty of whether or not the ellipse is part of a face or a sheep, and whether it is the leg of a sheep, or the head of a sheep." Confronted with any perturbations, the model should be able to correct itself as well. A next step is establishing a baseline, indicating whether a standard deep-learning neural net would get befuddled by such a task. As yet, GLOM is highly supervised: Culp creates and labels the data, prompting and pressuring the model to find correct predictions and succeed over time. (The unsupervised version is named GLUM. "It's a joke," Hinton says.)

At this preliminary state, it's too soon to draw any big conclusions. Culp is waiting for more numbers. Hinton is already impressed nonetheless. "A simple version of GLOM can look at 10 ellipses and see a face and a sheep based on the spatial relationships between the ellipses," he says. "This is tricky, because an individual ellipse conveys nothing about which type of object it belongs to or which part of that object it is."

And overall, Hinton is happy with the feedback. "I just wanted to put it out there for the community, so anybody who likes can try it out," he says. "Or try some sub-combination of these ideas. And then that will turn philosophy into science."