Apple’s RealityKit 2 allows developers to create 3D models for AR using iPhone photos

At its Worldwide Developer Conference, Apple announced a significant update to RealityKit, its suite of technologies that allow developers to get started building AR (augmented reality) experiences. With the launch of RealityKit 2, Apple says developers will have more visual, audio, and animation control when working on their AR experiences. But the most notable part of the update is how Apple's new Object Capture technology will allow developers to create 3D models in minutes using only an iPhone.

Apple noted during its developer address that one of the most difficult parts of making great AR apps is creating 3D models, a process that can take hours and cost thousands of dollars.

With Apple's new tools, developers will be able to use just an iPhone (or an iPad or DSLR, if they prefer) to take a series of 2D photos of an object from all angles, including the bottom.

Then, using the Object Capture API on macOS Monterey, it only takes a few lines of code to generate the 3D model, Apple explained.

Image Credits: Apple

To begin, developers start a new photogrammetry session in RealityKit that points to the folder containing the captured images. They then call the `process` function to generate the 3D model at the desired level of detail. Object Capture can generate USDZ files optimized for AR Quick Look, the system that lets developers add virtual 3D objects to apps or websites on iPhone and iPad. The 3D models can also be added to AR scenes in Reality Composer in Xcode.
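As a rough illustration of those two steps, here is a minimal Swift sketch using RealityKit's `PhotogrammetrySession`, assuming macOS 12 (Monterey) on hardware that supports Object Capture. It creates a session from a folder of images, requests a USDZ model file at a chosen detail level, and then listens to the session's output stream until processing finishes. The function name `captureModel` and the default `.medium` detail level are choices made for this example only; a production tool would also handle invalid or skipped samples and cancellation.

```swift
import Foundation
import RealityKit

/// Hypothetical helper: builds a USDZ model from a folder of photos with Object Capture.
/// Assumes macOS 12 (Monterey) or later on supported hardware.
@available(macOS 12.0, *)
func captureModel(from imagesFolder: URL,
                  to outputFile: URL,
                  detail: PhotogrammetrySession.Request.Detail = .medium) async throws {
    // Step 1: start a photogrammetry session that points at the captured images.
    let session = try PhotogrammetrySession(input: imagesFolder,
                                            configuration: PhotogrammetrySession.Configuration())

    // Step 2: ask the session to produce a model file at the desired level of detail.
    try session.process(requests: [.modelFile(url: outputFile, detail: detail)])

    // Step 3: consume the asynchronous output stream until processing is complete.
    for try await output in session.outputs {
        switch output {
        case .requestProgress(_, let fraction):
            print("Progress: \(Int(fraction * 100))%")
        case .requestComplete(_, .modelFile(let url)):
            print("Wrote model to \(url.path)")
        case .requestError(_, let error):
            throw error
        case .processingComplete:
            return
        default:
            // Other events (input complete, skipped samples, etc.) are ignored in this sketch.
            break
        }
    }
}
```

A call such as `try await captureModel(from: URL(fileURLWithPath: "PineapplePhotos"), to: URL(fileURLWithPath: "Pineapple.usdz"), detail: .raw)` would request the RAW detail level mentioned in the tweets further down; the folder and file names are placeholders.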

Apple said developers like Wayfair, Etsy and others are using Object Capture to create 3D models of real-world objects, an indication that online shopping is about to get a big AR upgrade.

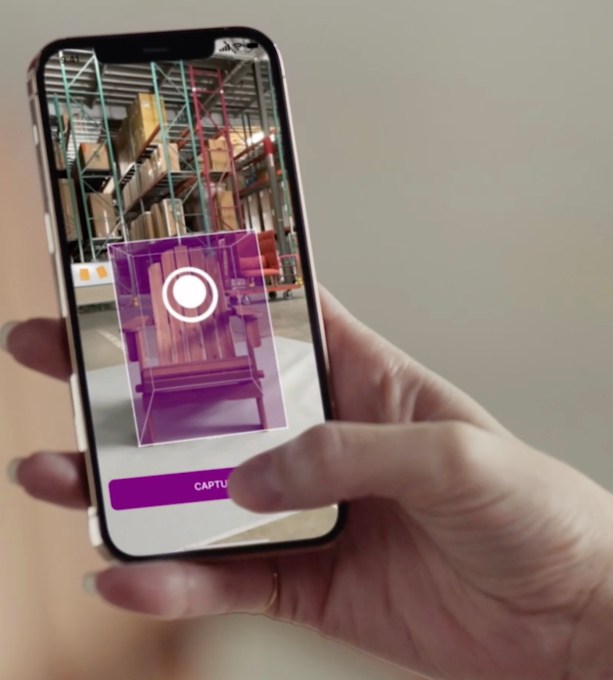

Wayfair, for example, is using Object Capture to develop tools for its manufacturers so they can create virtual representations of their merchandise. This will let Wayfair customers preview more products in AR than they can today.

Image Credits: Apple (screenshot of Wayfair tool)

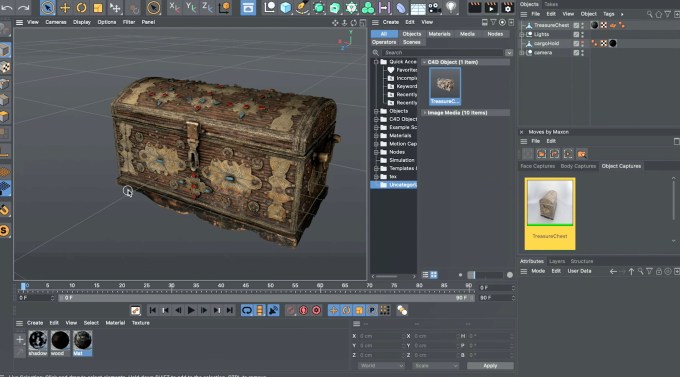

In addition, Apple noted that developers including Maxon and Unity are using Object Capture to create 3D content within content-creation apps such as Cinema 4D and Unity MARS.

Other updates in RealityKit 2 include custom shaders that give developers more control over the rendering pipeline to fine-tune the look and feel of AR objects; dynamic loading for assets; the ability to build your own Entity Component System to organize the assets in an AR scene; and the ability to create player-controlled characters so users can jump, scale and explore AR worlds in RealityKit-based games.
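To give a feel for the Entity Component System side, here is a hypothetical Swift sketch, assuming iOS 15 or macOS 12, of a custom component and system in RealityKit 2: the component stores per-entity data, and the system runs on every scene update over the entities matched by a query. `SpinComponent`, `SpinSystem` and `registerSpinECS` are invented names for this example, which simply rotates any tagged entity about its Y axis.

```swift
import RealityKit
import simd

/// Hypothetical component: marks an entity that should spin and stores its speed.
@available(iOS 15.0, macOS 12.0, *)
struct SpinComponent: Component {
    var radiansPerSecond: Float = .pi / 2
}

/// Hypothetical system: on every scene update, rotates each entity carrying a SpinComponent.
@available(iOS 15.0, macOS 12.0, *)
final class SpinSystem: System {
    // Matches every entity in the scene that has a SpinComponent attached.
    private static let query = EntityQuery(where: .has(SpinComponent.self))

    required init(scene: RealityKit.Scene) {}

    func update(context: SceneUpdateContext) {
        for entity in context.scene.performQuery(Self.query) {
            guard let spin = entity.components[SpinComponent.self] else { continue }
            // Rotation for this frame = speed x time elapsed since the previous update.
            let angle = spin.radiansPerSecond * Float(context.deltaTime)
            let step = simd_quatf(angle: angle, axis: [0, 1, 0])
            entity.transform.rotation = entity.transform.rotation * step
        }
    }
}

/// Registers the custom types once, e.g. at app launch, before any scene needs them.
@available(iOS 15.0, macOS 12.0, *)
func registerSpinECS() {
    SpinComponent.registerComponent()
    SpinSystem.registerSystem()
}
```

Once the types are registered, attaching the component with `entity.components.set(SpinComponent())` should be enough for the system to start rotating that entity in a running scene; keeping the behavior in a system rather than in per-entity code is what lets one piece of logic organize many assets at once.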

One developer, Mikko Haapoja of Shopify, has been trying out the new technology (see below) and shared on Twitter some real-world tests in which he shot objects with an iPhone 12 Pro Max.

Developers who want to try it themselves can install macOS Monterey on their Mac and use Apple's sample app.

> Apple's Object Capture on a Pineapple. One of my fav things to test Photogrammetry against. This was processed using the RAW detail setting.
>
> More info in thread
>
> pic.twitter.com/2mICzbV8yY

- Mikko Haapoja (@MikkoH) June 8, 2021

> Apple's Object Capture is the real deal. I'm impressed. Excited to see where @Shopify merchants could take this
>
> Allbirds Tree Dashers. More details in thread
>
> pic.twitter.com/fNKORtdtdB

- Mikko Haapoja (@MikkoH) June 8, 2021