Mojo Vision Puts Its AR Contact Lens Into Its CEO’s Eyes (Literally)

Editor's note: In March, I looked through Mojo Vision's AR contact lens, but I didn't put it in my eye. At that point, although nonworking prototypes had been tested for wearability, nobody had worn the fully functional, battery-powered, wirelessly communicating device. Today, Mojo announced that its augmented reality lens had gone on-eye: specifically, on the eye of Mojo Vision CEO Drew Perkins, on 23 June.

"I've worn it. It works... and it was the first ever on-eye demonstration of a feature-complete augmented reality smart contact lens," reported Perkins in a blog post. The final technical hurdle to wearing the lens was ensuring that the power and radio communications systems worked without wires. "Cutting the cord [proved] that the lens and all major components are fully functional and reduce many of the technical challenges in building a smart contact lens."

It's an exciting milestone for Perkins and the Mojo Vision team, and we'll continue monitoring their progress.

Story from 30 March 2022 follows:

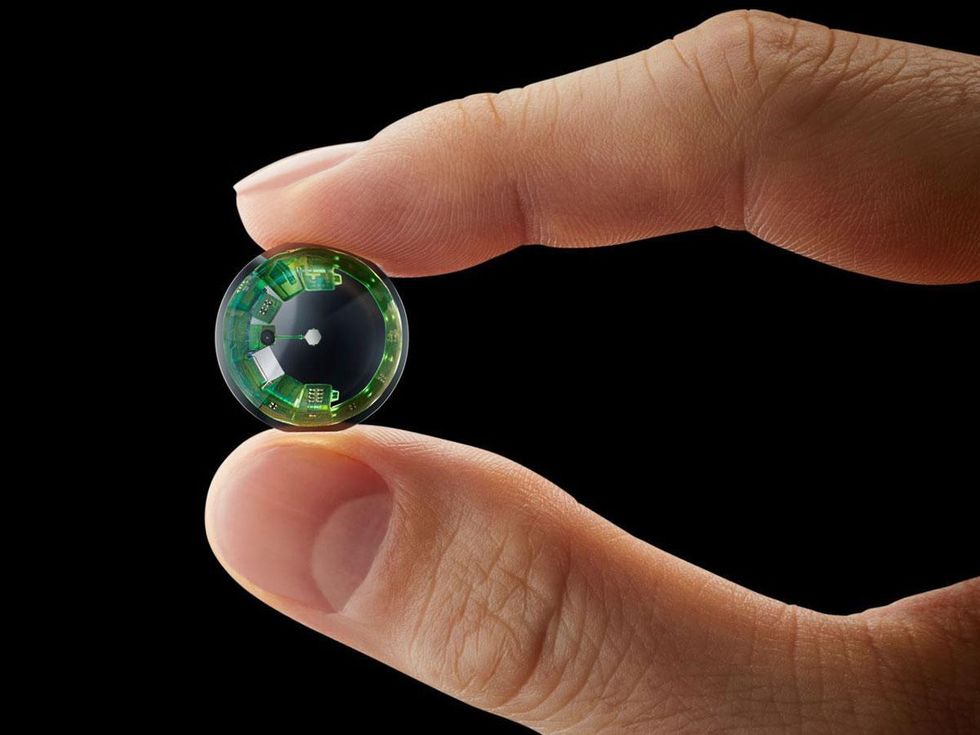

Early this year, IEEE Spectrum editor Tekla Perry tested Mojo Vision's AR contact lens by holding it very close to one eye and peeking through. (Credit: Mojo Vision)

In March, Mojo Vision unveiled its latest AR contact lens. Still a prototype, the device has clinical testing and further development ahead before it can apply for the U.S. Food and Drug Administration (FDA) approval needed to sell to consumers. But Mojo's engineers are steadily ticking off engineering milestones.

Last week I got to literally peek through Mojo's newest lens. Here's what I saw, and what Steve Sinclair, Mojo's senior vice president of product and marketing, had to say about the company's progress so far and the challenges that remain.

First, the demo. I did not put the lens in my eye; this prototype is still in safety testing, and fitting a contact lens requires an eye exam. Instead, I held a lens very close to one eye and peeked through. I was able to move around freely, but because a lens held up to the eye rather than worn on it cannot track eye movements, Mojo has temporarily added a tiny crosshair to the user interface to help with alignment. The nature of the demo also meant that the images I saw were flat; with a lens in each eye, the images will appear in 3D.

What's New in the Mojo Lens

Medical-grade microbatteries on board, along with a custom power management IC

High-resolution, 14,000-pixel-per-inch monochrome microLED display (up from about 8,000 pixels per inch)

Custom 5-gigahertz radio using a proprietary low-latency communications protocol

Eye tracking using accelerometer, gyroscope, and magnetometer

Coming soon: Custom image sensors
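For a sense of scale, a pixel density converts to pixel pitch (the center-to-center spacing of pixels) by dividing one inch, 25.4 millimeters, by the pixels per inch. This is back-of-the-envelope arithmetic on the published figures, not a number from Mojo, and it assumes square pixels on a uniform grid:

```latex
\[
p = \frac{25.4\ \text{mm}}{\text{ppi}}, \qquad
p_{14\,000} = \frac{25.4\ \text{mm}}{14\,000} \approx 1.8\ \mu\text{m}, \qquad
p_{8\,000} = \frac{25.4\ \text{mm}}{8\,000} \approx 3.2\ \mu\text{m}
\]
\[
\left(\frac{14\,000}{8\,000}\right)^{2} = 1.75^{2} \approx 3.06
\]
```

Under those assumptions, the new display packs roughly three times as many pixels into the same area as the 8,000-pixel-per-inch version.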

I tried out several applications. To select one, I looked toward the periphery of the real-world view in front of me, which caused icons to appear. Focusing on an icon (in this case, aligning the crosshair with it) selected it. The app I found most fun to use wasn't the most complex, but it did show off the onboard sensors by tagging compass headings as I turned to face different directions. I also played with a travel-tools app that included a high-resolution monochrome image of an incoming Uber driver, a biking app that called up heart rate and other information useful on a training ride, a teleprompter app that scrolled naturally up and down through the text, and a monochrome video stream. All these demos ran on the prototype lens, with me fully in control of them. Mojo's team then switched to a VR simulation to demonstrate eye-tracking features that won't work unless the lens is sitting in your eye.

This isn't the first time I've seen the Mojo lens in person. But since that last demo, in 2020, the engineering team has moved from wireless power to onboard batteries, increased the resolution of the display from 8,000 pixels per inch to 14,000, thinned commercial motion sensors, developed its own radio and power management, and created several apps. Image sensors, a feature of earlier demos that showed off edge detection in low light and other vision enhancements, have yet to be built into the current prototype. Sinclair says they are in the works, and that the company is continuing to test apps for people with low vision, whom it still expects to be among the device's earliest customers.

Here's what else Sinclair had to say about the technology as developed so far and the path ahead.

Let's talk about the batteries.

Steve Sinclair: The battery is in the outer ring, embedded in the lens. We are partnering with a medical-grade battery company that makes implantable microbatteries for things like pacemakers, to design something safe to wear. Previously, we were using magnetic inductive coupling, and that seemed fine when you were holding it up to your eye. But the moment you put it on your eye and started moving your head and darting your eye and looking around, we were losing that connection to the wireless field. It wasn't as reliable as we needed it to be. And so we made a decision about a year and a half ago to switch over to battery power.

I recall you always wanted to get batteries on board?

Sinclair: We thought so. But we sped that path up, because we came to the conclusion that the other path was just not a good way to go.

Why doesn't this version have the image sensor?

Sinclair: We've decided to leave out the imager, the camera, for right now; it's not critical to the use cases that we're looking at first.

But you had been working to develop applications for people with low vision. What happened to that?

Sinclair: We've been using mainly low-vision capabilities built into smartphones right now to take pictures of things and bring them up to your eye for zooming in and out; we'll add in the imager and test those capabilities out next. It's just about there, but it wasn't necessary to get to this milestone, so we decided to simplify things a little bit.

Given the amount of lens real estate being taken up by batteries and chips, was oxygenation an issue?

Sinclair: Absolutely. That was a core engineering point that needed to be solved before we could get to this lens. So this lens has channels built into it and a special design that allows air to get in and get through. The oxygen diffuses out over the surface of the cornea and the sclera.

Does the circuitry block vision at all?

Sinclair: See the cutout on the side? Imagine I'm wearing it. It's going to be oriented like this [the cutout away from the nose] because I need the peripheral vision on the outside, not towards the nasal side. Ultimately, there's even more we can do to push components further toward the edges of the lens to maximize light entering the pupil.

Now that you explain the arrangement of circuitry to protect peripheral vision, it seems obvious, but some of the most obvious things take figuring out.

Sinclair: It wasn't immediately obvious; our original design had [the electronics] all the way around.

What happens next?

Sinclair: We're already wearing lenses that don't have any electronics in them but are shaped the same way, so that we can test for comfort and for how long we can wear them and have the oxygenation still working to our satisfaction.

Next we start testing [the complete prototype] on-eye, and see just how well it works in different situations. We can't say exactly when that happens. We hope it's soon, but we've got to make sure it's safe and everything's working the way we expect it to work. That testing will start with our CEO, Drew Perkins, wearing it, then probably Mike Wiemer, our CTO. And then it goes to folks like myself and others on the executive team, along with some of the key engineers who need to start evaluating things quickly.

We'll be testing the software, the battery life, and the wireless connectivity and [its] speeds. There's likely to be a spin of one, two, or three of the chips built into the lens as we discover things that don't work right or could be optimized to work better together as a system.

What's the timeline for commercialization?

Sinclair: Everything is predicated on eventually getting FDA certification. We don't like to presume how long that's going to take.

You're going for the consumer market. Today, it seems, most AR glasses manufacturers have decided to focus on the enterprise market initially. Why the different path?

Sinclair: With AR glasses, there are definitely some awesome enterprise use cases. But these are not great for contact lenses. Say you're an IT manager, and you've come up with an awesome contact lens application. Can you tell your workers to wear a contact lens? Not usually. But consumers can make that decision. The reason people wear contact lenses is very consumery: I want to look like myself. I want to use it when I'm working out or doing sports. I don't like things on my face. I don't like the fashion sense of glasses; it's not me. We decided to lean into those reasons that people pick contact lenses.

Can you talk about pricing?

Sinclair: Like anything else, when we first bring it out, it'll be a little expensive. Our goal when we're running at volume is that it should come out somewhere close to a high-end smartphone. But factor in the fact that people are already spending US $500, $600, or $700 for eyewear today, subtract that out of the total price, and the adder on top of that is not huge.

This article appears in the June 2022 print issue as "My Peek Through Mojo Vision's AR Contacts."