Wandering Robots in the Wild

In order to better understand how people will interact with mobile robots in the wild, we need to take them out of the lab and deploy them in the real world. But this isn't easy to do.

Roboticists tend to develop robots under the assumption that they'll know exactly where their robots are at any given time. Clearly that's an important capability if the robot's job is to usefully move between specific locations. But that ability to localize generally requires the robot to have powerful sensors and a map of its environment. There are ways to wriggle out of some of these requirements: If you don't have a map, there are methods that build a map and localize at the same time, and if you don't have a good range sensor, visual navigation methods use just a regular RGB camera, which most robots would have anyway. Unfortunately, these alternatives to traditional localization-based navigation are either computationally expensive, not very robust, or both.

We ran into this problem when we wanted to deploy our Kuri mobile social robot in the halls of our building for a user study. Kuri's lidar sensor can't see far enough to identify its location on a map, and its onboard computer is too weak for visual navigation. After some thought, we realized that for the purposes of our deployment, we didn't actually need Kuri to know exactly where it was most of the time. We did need Kuri to return to its charger when it got low on battery, but this would be infrequent enough that a person could help with that if necessary. We decided that perhaps we could achieve what we wanted by letting Kuri abandon exact localization and simply wander.

Robotic Wandering

If you've seen an older-model robot vacuum cleaner doing its thing, you're already familiar with what wandering looks like: The robot drives in one direction until it can't anymore, maybe because it senses a wall or because it bumps into an obstacle, and then it turns in a different direction and keeps going. If the robot does this for long enough, it's statistically very likely to cover the whole floor, probably multiple times. Newer and fancier robot vacuums can make a map and clean more systematically and efficiently, but these tend to be more expensive.

You can think of a wandering behavior as consisting of three parts:

- Moving in a straight line

- Detecting event(s) that trigger the selection of a new direction

- A method that's used to select a new direction
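The three parts map naturally onto a simple loop. The Python sketch below is a hypothetical, vacuum-style model, not the behavior we deployed on Kuri: the robot moves in a straight line through an empty rectangular room, the trigger event is a step that would cross a wall, and the new direction is drawn uniformly at random. The room size, step length, and 1 m coverage cells are arbitrary assumptions chosen only to illustrate the statistical-coverage idea.

```python
import math
import random


def wander(width, height, steps, step_len=0.1, seed=0):
    """Bump-and-turn wandering in an empty width x height room (hypothetical model).

    Returns the list of (x, y) positions the robot occupied.
    """
    rng = random.Random(seed)
    x, y = width / 2, height / 2
    heading = rng.uniform(0.0, 2 * math.pi)
    path = [(x, y)]
    for _ in range(steps):
        nx = x + step_len * math.cos(heading)
        ny = y + step_len * math.sin(heading)
        # Part 2, the trigger: the next step would carry the robot into a wall.
        if not (0.0 <= nx <= width and 0.0 <= ny <= height):
            # Part 3, direction selection: draw a new heading uniformly at random.
            heading = rng.uniform(0.0, 2 * math.pi)
            continue
        # Part 1, straight-line motion: keep the current heading.
        x, y = nx, ny
        path.append((x, y))
    return path


def coverage(path, width, height, cell=1.0):
    """Fraction of the room's cell-sized squares containing at least one sampled position."""
    cols, rows = math.ceil(width / cell), math.ceil(height / cell)
    visited = {
        (min(int(px // cell), cols - 1), min(int(py // cell), rows - 1))
        for px, py in path
    }
    return len(visited) / (cols * rows)


path = wander(10.0, 10.0, steps=20000)
print(f"visited {coverage(path, 10.0, 10.0):.0%} of the room")
```

In an open room like this, random re-selection covers the floor well given enough time; as the next paragraph notes, the same kind of rule can behave poorly in long corridors.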

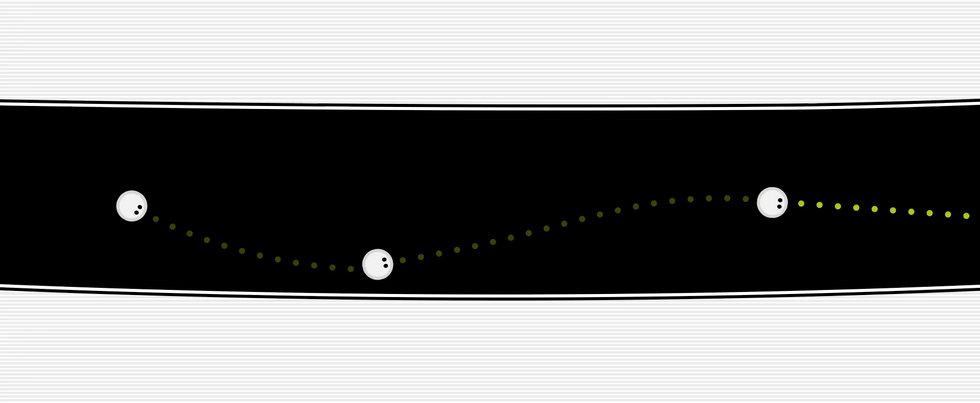

Many possible wandering behaviors turn out not to work very well. For example, we found that having the robot move a few meters before selecting a new direction at random led it to get stuck moving back and forth in long corridors. The curve of the corridors meant that simply waiting for the robot to collide before selecting a new direction quickly devolved into the robot bouncing between the walls. We explored variations using odometry information to bias direction selection, but these didn't help because the robot's estimate of its own heading, which was poor to begin with, would degrade every time the robot turned.

In the end, we found that a preference for moving in the same direction as long as possible (a strategy we call informed direction selection) was most effective at making Kuri roam the long, wide corridors of our building.

Informed direction selection uses a local costmap (a small, continuously updating map of the area around the robot) to pick the direction that is easiest for the robot to travel in, breaking ties in favor of directions that are closer to the previously selected direction. The resulting behavior can look like a wave: the robot commits to a direction, but eventually an obstacle comes into view on the costmap and the local controller starts to turn the robot slightly to get around it. If it is a small obstruction, like a person walking by, the robot circumnavigates it and continues in roughly the original direction. In the case of large obstacles like walls, however, the local controller eventually detects that it has drifted too far from the original linear plan and gives up. Informed direction selection then kicks in and traces lines through the costmap to find the most similar heading that goes through free space. Typically, this will be the line that moves along and slightly away from the wall.
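The selection step can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not our deployed code: it assumes the local costmap is a 2D grid in which any cell at or above a lethal cost of 254 (borrowing the ROS costmap_2d convention) is an obstacle, measures how easy a direction is by the length of its obstacle-free ray, and caps that length at a hypothetical `max_range` so that wide-open directions tie and the tie-break toward the previous heading takes over. The names `free_run` and `select_direction` and the 36-candidate discretization are illustrative choices.

```python
import math

LETHAL = 254  # assumed lethal-cost threshold, borrowed from the ROS costmap_2d convention


def angle_diff(a, b):
    """Smallest absolute difference between two headings, in radians."""
    return abs((a - b + math.pi) % (2 * math.pi) - math.pi)


def free_run(costmap, origin, heading, max_range, step=0.5):
    """Trace a ray from origin (row, col) and return how far it gets, in cells,
    before hitting a lethal cell or the map edge (capped at max_range).
    Heading 0 points toward increasing column; pi/2 toward decreasing row."""
    rows, cols = len(costmap), len(costmap[0])
    r0, c0 = origin
    dist = 0.0
    while dist + step <= max_range:
        d = dist + step
        r = round(r0 - d * math.sin(heading))
        c = round(c0 + d * math.cos(heading))
        if not (0 <= r < rows and 0 <= c < cols) or costmap[r][c] >= LETHAL:
            break
        dist = d
    return dist


def select_direction(costmap, origin, prev_heading, max_range=20.0, n_candidates=36):
    """Pick the candidate heading with the longest free run, breaking ties
    in favor of the heading closest to prev_heading."""
    candidates = [2 * math.pi * i / n_candidates for i in range(n_candidates)]
    return max(
        candidates,
        key=lambda h: (free_run(costmap, origin, h, max_range), -angle_diff(h, prev_heading)),
    )
```

Because the free run is capped, every heading with at least `max_range` cells of clearance ties, so in open space the robot keeps roughly its previous direction; only when an obstacle shortens that ray does a different heading win. A real implementation would also need to respect the robot's footprint, for example by treating inflated costs near obstacles as blocked too.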

Our wandering behavior is more complicated than something like always choosing to turn 90 degrees without considering any other context, but it's much simpler than any approach that involves localization, since the robot just needs to be able to perceive obstacles in its immediate vicinity and keep track of roughly which direction it's traveling in. Both of these capabilities are quite accessible, as there are implementations in core ROS packages that do the heavy lifting, even for basic range sensors and noisy inertial measurement units and wheel encoders.

Like more intelligent autonomous-navigation approaches, wandering does sometimes go wrong. Kuri's lidar has a hard time seeing dark surfaces, so it would occasionally wedge itself against them. We use the same kinds of recovery behaviors that are common in other systems, detecting when the robot hasn't moved (or hasn't moved enough) for a certain duration, then attempting to rotate in place or move backward. We found it important to tune our recovery behaviors to unstick the robot from the hazards particular to our building. In our first rounds of testing, the robot would reliably get trapped with one tread dangling off a cliff that ran along a walkway. We were typically able to get the robot out via teleoperation, so we encoded a sequence of velocity commands that would rotate the robot back and forth to reengage the tread as a last-resort recovery. This type of domain-specific customization is likely necessary to fine-tune wandering behaviors for a new location.
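A minimal Python sketch of this kind of stuck detection and escalating recovery might look like the following. The window length, distance threshold, speeds, and durations are hypothetical placeholders rather than values tuned for Kuri, and the velocity segments are plain (linear m/s, angular rad/s, seconds) tuples instead of messages for any particular robot API. The final "rocking" entry only mirrors the idea of the last-resort tread-reengagement sequence described above.

```python
import math
from collections import deque


class StuckDetector:
    """Flags the robot as stuck if it moved less than min_dist over the last window seconds."""

    def __init__(self, window=10.0, min_dist=0.2):
        self.window = window
        self.min_dist = min_dist
        self.samples = deque()  # (t, x, y) pose samples, oldest first

    def update(self, t, x, y):
        self.samples.append((t, x, y))
        # Discard old samples, keeping the newest one that is still at least `window` old.
        while len(self.samples) > 1 and t - self.samples[1][0] >= self.window:
            self.samples.popleft()
        t0, x0, y0 = self.samples[0]
        if t - t0 < self.window:
            return False  # not enough history yet to judge
        return math.hypot(x - x0, y - y0) < self.min_dist


# Hypothetical recovery ladder of (linear m/s, angular rad/s, seconds) segments.
RECOVERIES = [
    [(0.0, 1.0, 3.0)],                         # rotate in place
    [(-0.1, 0.0, 2.0)],                        # back up
    [(0.0, 1.5, 0.5), (0.0, -1.5, 0.5)] * 4,   # rock back and forth (last resort)
]


def next_recovery(attempt):
    """Return the velocity segments for the given consecutive stuck attempt, escalating."""
    return RECOVERIES[min(attempt, len(RECOVERIES) - 1)]
```

Escalating through progressively more aggressive maneuvers keeps cheap recoveries first while still reserving a site-specific sequence for the hazards that the generic ones cannot handle.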

Other types of failures are harder to deal with. During testing, we occasionally ran the robot on a different floor, which had tables and chairs with thin, metallic legs. Kuri's lidar couldn't see these reliably and would sometimes "clothesline" itself with the seat of the chair, tilting back enough to lose traction. No combination of commands could recover the robot from this state, so adding a tilt-detection safety behavior based on the robot's cliff sensors would've been critical if we had wanted to deploy on this floor.

Using Human Help

Eventually, Kuri needs to get to a charger, and wandering isn't an effective way of making that happen. Fortunately, it's easy for a human to help. We built some chatbot software that the robot used to ping a remote helper when its battery was low. Kuri is small and light, so we opted to have the helper carry the robot back to its charger, but one could imagine giving a remote helper a teleoperation interface and letting them drive the robot back instead.
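The low-battery trigger is simple to sketch. The Python below is a hypothetical illustration, not our chatbot software: it assumes the battery level arrives as a fraction between 0 and 1, treats the messaging channel as an arbitrary `notify` callback, and uses made-up thresholds (15 percent to alert, 90 percent to re-arm).

```python
class LowBatteryNotifier:
    """Pings a helper once per discharge cycle when the battery runs low (hypothetical)."""

    def __init__(self, notify, low=0.15, recharged=0.9):
        self.notify = notify          # any callable that delivers a message to the helper
        self.low = low                # alert at or below this state of charge
        self.recharged = recharged    # re-arm once charge climbs back above this
        self.alerted = False

    def update(self, level):
        """level: battery state of charge in [0, 1]. Returns True if a message was sent."""
        if not self.alerted and level <= self.low:
            self.notify(f"Battery at {level:.0%}; please bring me to my charger.")
            self.alerted = True
            return True
        if self.alerted and level >= self.recharged:
            self.alerted = False  # robot has been recharged; allow the next alert
        return False
```

The gap between the two thresholds acts as hysteresis, so the helper is not pinged repeatedly while the reading hovers around the alert level.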

Kuri was able to navigate all 350 meters of hallway on this floor, which took it 32 hours in total.

We deployed this system for four days in our building. Kuri was able to navigate all 350 meters of hallway on the floor, and ran for 32 hours total. Each of the 12 times Kuri needed to charge, the system notified its designated helper, and they found the robot and placed it on its charger. The robot's recovery behaviors kept it from getting stuck most of the time, but the helper needed to manually rescue it four times when it got wedged near a difficult-to-perceive banister.

Wandering with human help enabled us to run an exploratory user study on remote interactions with a building photographer robot that wouldn't have been possible otherwise. The system required around half an hour of the helper's time over the course of its 32-hour deployment. A well-tuned autonomous navigation system could have done it with no human intervention at all, but we would have had to spend a far greater amount of engineering time to get such a system to work that well. The only other real alternative would have been to fully teleoperate the robot, a logistical impossibility for us.
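As a rough back-of-the-envelope check on that cost, the deployment figures can be combined in a few lines of Python. This assumes the roughly 30 minutes of helper time was spent entirely on the 12 charging trips and 4 manual rescues, which the figures do not state explicitly, so the per-intervention number is only an estimate.

```python
helper_minutes = 30               # "around half an hour" (approximate)
deployment_hours = 32
charges, manual_rescues = 12, 4   # charging trips and banister rescues during the deployment

fraction = helper_minutes / (deployment_hours * 60)  # share of deployment time needing a person
interventions = charges + manual_rescues
minutes_each = helper_minutes / interventions        # assumes all helper time went to these events

print(f"{fraction:.1%} of deployment time, ~{minutes_each:.1f} min per intervention")
# prints: 1.6% of deployment time, ~1.9 min per intervention
```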

To Wander, or Not to Wander?

It's important to think about the appropriate level of autonomy for whatever it is you want a robot to do. There's a wide spectrum between "autonomous" and "teleoperated," and a solution in the middle may help you get farther along another dimension that you care more about, like cost or generality. This can be an unfashionable suggestion to robotics researchers (for whom less-than-autonomous solutions can feel like defeat), but it's better to think of it as an invitation for creativity: What new angles could you explore if you started from an 80 percent autonomy solution rather than a fully autonomous solution? Would you be able to run a system for longer, or in a place you couldn't before? How could you sprinkle in human assistance to bridge the gap?

We think that wandering with human help is a particularly effective approach in some scenarios that are especially interesting to human-robot interaction researchers, including:

- Studying human perceptions of robots

- Studying how robots should interact with and engage bystanders

- Studying how robots can interact with remote users and operators

You obviously wouldn't want to build a commercial mail-courier robot using wandering, but it's certainly possible to use wandering to start studying some of the problems these robots will face. And you'll even be able to do it with expressive and engaging platforms like Kuri (give our code a shot!), which wouldn't be up for the task otherwise. Even if wandering isn't a good fit for your specific use case, we hope you'll still carry the mind-set with you: simple solutions can go a long way if you budget just a touch of human assistance into your system design.

Nick Walker researches how humans and robots communicate with one another, with an eye toward future home and workplace robots. While he was a Ph.D. student at the University of Washington, he worked on both implicit communication (a robot's motion, for instance) and explicit communication, such as natural-language commands.

Amal Nanavati does research in human-robot interaction and assistive technologies. His past projects have included developing a robotic arm to feed people with mobility impairments, developing a mobile robot to guide people who are blind, and cocreating speech-therapy games for and with a school for the deaf in India. Beyond his research at the University of Washington, Amal is an activist and executive board member of UAW 4121.