Everyone’s Mad At Cloudflare; Is There Room For Principled Takes On Moderation?

I originally wrote a version of this post last week before Cloudflare decided to block Kiwi Farms, intending to post it after the long weekend, but I needed to rewrite a significant portion of it after Cloudflare's decision. None of the salient points have changed (nor has my mind on how to think about all of this), and large chunks of this post remain from the original. But the change in direction from Cloudflare changed the nature of the post.

I'm going to do the thing that every so often I feel the need to do: discuss a topic that is complicated and requires nuanced thinking, but where many people have strong opinions that leave little room for nuance or discussion. As I've been known to do when I write these posts, I ask, politely, that you try to read the whole piece and consider it before resorting to the immediate response.

Here's something, however, that's not nuanced, and fairly straightforward: Kiwi Farms is awful.

Also, people want Kiwi Farms to be shut down. Whatever it takes.

If you don't know what Kiwi Farms is, it's an online forum that is basically the definition of "the forum for the worst, most maladjusted, horrible, awful people in the world." Kiwi Farms has basically no redeeming qualities, and that includes the fact that many of its users gleefully embrace the doxxing and harassing of people it doesn't like - sometimes up to and including swatting. The people who like Kiwi Farms push back on this and insist that they just like crass and norm-breaking humor. But the fact remains that, on the whole, it's a forum for punching down on the marginalized, where people gleefully try to make others' lives miserable, with a special focus on attacking the trans community.

To many of the people who wanted Kiwi Farms shut down, there was a simple solution: Cloudflare should stop providing its anti-DDoS protections to the site, which would almost certainly lead to the site going down.

It's a simple solution. Except that it isn't quite that simple.

While we may think that Kiwi Farms is, fairly objectively, a horrible awful website with no redeeming value, we live in a world where (1) not everyone agrees (for example, many of the people on Kiwi Farms seem to believe it has value), and (2) there are many other sites that we believe do have tremendous social value that other people believe are horrible, awful websites with no redeeming value.

Before we get to the Cloudflare question, let's take a step back and talk about another time. A decade ago, there was a pretty big debate over SOPA. This was a law that was, in the minds of its backers, a tool to take offline (completely) a bunch of websites that, to the law's supporters, were horrible awful websites with zero redeeming value. Those were sites dedicated to "piracy" of copyright protected material.

The underlying basis of SOPA was that it potentially allowed copyright holders to travel further down the stack. The DMCA already existed to take down content where there was an accusation of infringement. But the core idea in the SOPA law was "that's not enough, we need to be able to take down entire websites." And part of that effort involved the ability to target infrastructure providers.

And that was problematic then, as it should remain problematic today. The biggest issue with targeting infrastructure providers is that - generally speaking - they don't have any nuance on their side when it comes to remedies. They pull their services, and an entire site breaks. It does not allow for the more narrow targeting of specific content (in the case of SOPA, with infringing content). It's very much a nuclear option.

And, in part, that's why it's an appealing solution to people who insist that entire sites must be wiped out.

But as we learned, not just in the SOPA fight but in other copyright battles, it is somewhat - unfortunately - inevitable that those looking to silence or suppress a certain bit of content will travel further up the infrastructure stack as far as they can go to kill a site entirely. And that can be quite problematic. When we're talking about taking down entire sites because some content on them is objectionable, even to a horrifying level, things get really messy, really fast.

Now, let's get back to Cloudflare. Everyone has been mad at Cloudflare. People wanted Kiwi Farms dead, and they've moved up the stack to the point where they've discovered that Cloudflare provides some security tools to Kiwi Farms. The easy answer was that Cloudflare should pull the plug.

Now, if you've been following this at all, you probably already know that five years ago (almost exactly), Cloudflare stopped providing its anti-DDoS service to the neo-Nazi forum the Daily Stormer, which kicked off a debate (one that Cloudflare CEO Matthew Prince directly asked for) about the role of infrastructure providers in content moderation. Unfortunately, to date, that debate has not resulted in many conclusions.

Last year, here at Techdirt, we hosted an online symposium (starting with articles, and following up with a live discussion) all about the challenges of moderating content at the infrastructure layer. And what came out of that, to me, is that all of this is way more complicated than any simple answer can provide. I came into that discussion believing that the content moderation discussion should be focused at the edge providers - those that directly touch the users - and that infrastructure providers were the wrong layer to focus on.

But, over the course of the discussion - as with so much these days - it became clear that divvying up infrastructure and edge isn't so easy either, and there will always be cases where what seems to make sense as a principle doesn't necessarily apply in practice. But, still, we need principles, because if we're just making these decisions on the fly, it's going to create a mess.

So, that finally brings us to the statement that Cloudflare put out last week (signed by CEO Matthew Prince and their head of public policy, Alissa Starzak, both of whom have been thinking deeply about these issues and talking to many, many experts), which was obviously a response to this whole mess, even if it doesn't directly address Kiwi Farms. People were very mad online about the statement. Mainly because it doesn't say the one simple thing they want to hear: "we're kicking Kiwi Farms off our service."

Instead, it was four days later that Prince put out a second statement that went further. It said not only was Cloudflare no longer supporting Kiwi Farms, but that it would redirect visitors to another site:

> We have blocked Kiwifarms. Visitors to any of the Kiwifarms sites that use any of Cloudflare's services will see a Cloudflare block page and a link to this post. Kiwifarms may move their sites to other providers and, in doing so, come back online, but we have taken steps to block their content from being accessed through our infrastructure.

Now people are still mad at Cloudflare, because they feel it took the company too long to make this decision. As Prince's statement notes, the change was due to "targeted threats" on Kiwi Farms having escalated "over the last 48 hours to the point that we believe there is an unprecedented emergency and immediate threat to human life unlike we have previously seen from Kiwifarms or any other customer before."

That, alone, is a bit difficult to believe if you've followed any of Kiwi Farms' history, in which targeted threats that could put lives in danger happen regularly enough.

So there's a strong argument that if it was reasonable to pull service from Kiwi Farms on Saturday, it was worth doing it earlier.

But, before we discuss the final decision, I want to go back to the original statement from last Wednesday. It is worth reading and considering, even as tons of smart people were screaming about it being ridiculous. Even if you disagree with it, I think it's hard to argue that the statement is ridiculous. It actually lays out the nuances and challenges involved in its position.

This is actually unlike most companies' statements on content moderation, which are vague, post-hoc rationalizations for decisions. Cloudflare's statement actually lays out an understandable set of principles and a framework for how to think about things.

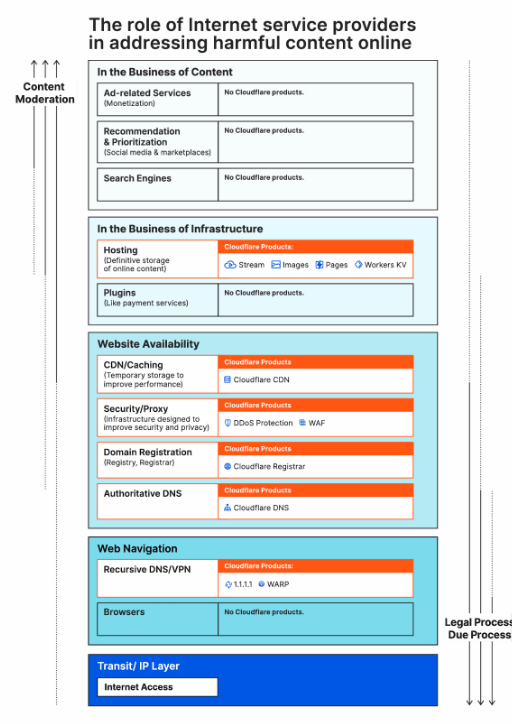

It notes, correctly, that Cloudflare has a number of different product offerings and services, some of which are closer to the edge, and some of which are deeper in the infrastructure layer. It even lays out this nice graphic displaying not just the way it views the different layers, and where Cloudflare plays within those layers, but also where content moderation comes into play as compared to where legal due process comes into play (and it's interesting to see how these things are represented as almost the opposite of one another).

You can disagree with pieces of this, but you can see that a lot of thought has been put into how all of this works together and plays out.

And the writeup lays out some pretty clear principles, including this one, which is difficult to dispute (and important, as principles go):

> Our guiding principle is that organizations closest to content are best at determining when the content is abusive. It also recognizes that overbroad takedowns can have significant unintended impact on access to content online.

The discussion over how to handle moderation of its security practices is similarly sensible and principled. The analogy of the fire department here is a powerful one:

> Some argue that we should terminate these services to content we find reprehensible so that others can launch attacks to knock it offline. That is the equivalent argument in the physical world that the fire department shouldn't respond to fires in the homes of people who do not possess sufficient moral character. Both in the physical world and online, that is a dangerous precedent, and one that is over the long term most likely to disproportionately harm vulnerable and marginalized communities.

The fire department analogy also got me thinking, because it kind of highlights how much of the anger directed at Cloudflare is similarly misplaced. The anger is basically saying "you need to remove your protection, so that we can burn Kiwi Farms down." But, the larger question remains unaddressed: why does Kiwi Farms exist in the first place, and why is it left to Cloudflare to determine whether or not the public should be able to burn it down?

When we're in a place where the only way to deal with those seeking to harm others is to demand that the fire department stand aside so we can burn down their house, we're in a very, very dark place.

Again, to those being targeted and harassed (to ridiculous lengths) by people on Kiwi Farms, there is no time for principles and nuance. And that's where much of all of this feels like it falls down. It also seems to be the breaking point for Cloudflare, which also realized that at some point lives are on the line for no good reason at all (actually terrible reasons), and there's the question of how not doing anything itself can enable harm. And that's also a principle worth taking seriously.

Cloudflare's statement then highlights something that I think is key. In the rare instances where they have taken down other services, the immediate response is greater demands, often from authoritarian countries (and they don't say this, but I know it's true: also some countries we don't normally think of as authoritarian) to take down other sites citing the exact same language used as the reasons for taking down the initial sites:

> This isn't hypothetical. Thousands of times per day we receive calls that we terminate security services based on content that someone reports as offensive. Most of these don't make news. Most of the time these decisions don't conflict with our moral views. Yet two times in the past we decided to terminate content from our security services because we found it reprehensible. In 2017, we terminated the neo-Nazi troll site The Daily Stormer. And in 2019, we terminated the conspiracy theory forum 8chan.
>
> In a deeply troubling response, after both terminations we saw a dramatic increase in authoritarian regimes attempting to have us terminate security services for human rights organizations - often citing the language from our own justification back to us.

I recognize that to many people, it feels like there's an easy response to this: take down the truly reprehensible sites and tell the authoritarian regimes to fuck off. Of course, that includes a lot of assumptions that aren't necessarily true in real life - mainly that everything is as black and white as that. The reality is that it's not that clear, and it's reminiscent of the arguments from people who have never dealt with any real content moderation decisions, and who insist that it's simple: "just delete the bad content, and leave the good content."

The reality is not that simple. Whether content is good or bad is not a clear thing. Lots of people think it is, but it's not. What's good to some people is bad to others. It's not like there are obvious decisions here. Everything involves judgment calls, and even if you can point to something like Kiwi Farms and say that the only people who can see any good in it are sociopathic, it still leaves you with other questions and challenges with no easy answer.

Let's say we assume that on the scale of sociopathic rage sites from 1 to 100, KF is at 100. So, you say, "that should be left unprotected for people to tear down with pitchforks." Then where do you draw the line? The site that is 99% sociopathic is still pretty problematic. What about the one that's 70%? 50% is still halfway there.

And if you say that those other sites don't exist, you're wrong. There are all kinds of sites out there, and some are way worse than others. Drawing that line is an impossible task.

So, Cloudflare is taking a principled stance to say "look, this shouldn't be up to us." And the company is right about that - except that it appears that the rest of society, which should be taking responsibility for minimizing the harms of such things, has basically said that it won't. And what do you do in that case?

The rest of society - including the government and law enforcement - seems unwilling to do anything, so the next in line is Cloudflare. That's not a great situation, but it's reality. And reality sometimes makes principles difficult. Especially when lives are at stake.

There's value in having principles rather than arbitrary decision making (which feels much more common in content moderation). But that's incredibly unsatisfying to anyone who simply wants Kiwi Farms to not exist any more, and for horrible people to stop harassing others.

Cloudflare is actually right to take a principled stance. It's right to look at all this and say "this decision shouldn't belong to us, because if it does, it's going to lead to other bad decisions." But the people who want Kiwi Farms to stop putting lives in danger are also absolutely right. And those two forces were opposed, and eventually Cloudflare did what it needed to do.

This is immensely unsatisfying to everyone, but it again highlights that we should be looking at the question of why something like Kiwi Farms exists in the first place - not why Cloudflare is providing services to them and preventing the public from burning them down.

Do we need better law enforcement that takes such things more seriously? Yes. There are countless reports out there of people harassed to ridiculous lengths by Kiwi Farms who, when they turned to law enforcement, were basically told that there's nothing that can be done. Do we need better education and mental health care so that fewer people are attracted to a sociopathic forum like Kiwi Farms? Yes. That too.

But we don't have those things. And that's not very helpful either. And it's going to lead to more problems down the road.

While I'm assuming most people didn't read the full piece from Cloudflare, down towards the end, the company highlights how the demands keep going further down the stack as well, and ties it back together to the point I made up top about SOPA, with regards to demands that Cloudflare block certain sites entirely via its DNS offering:

> While we will generally follow legal orders to restrict security and conduit services, we have a higher bar for core Internet technology services like Authoritative DNS, Recursive DNS/1.1.1.1, and WARP. The challenge with these services is that restrictions on them are global in nature. You cannot easily restrict them just in one jurisdiction so the most restrictive law ends up applying globally.
>
> We have generally challenged or appealed legal orders that attempt to restrict access to these core Internet technology services, even when a ruling only applies to our free customers. In doing so, we attempt to suggest to regulators or courts more tailored ways to restrict the content they may be concerned about.
>
> Unfortunately, these cases are becoming more common where largely copyright holders are attempting to get a ruling in one jurisdiction and have it apply worldwide to terminate core Internet technology services and effectively wipe content offline. Again, we believe this is a dangerous precedent to set, placing the control of what content is allowed online in the hands of whatever jurisdiction is willing to be the most restrictive.

That should be quite concerning to everyone, and brings us back to what the SOPA fight was all about. But if you argue that it should be Cloudflare's responsibility to take down truly awful websites, then it's difficult to have a principled response when the legacy copyright industries show up to demand that other sites be taken offline globally.

What's been most fascinating to me is watching the reaction to this. Almost universally, people are attacking Cloudflare's stance, even as it's carefully laid out and argued on a principled level. But since the original post answers the simple question "will you still provide services to Kiwi Farms" with a "yes," most people have no time for the nuances and principles.

The only exception I've seen is that the people I know who have spent years grappling with these tricky and impossible tradeoffs regarding moderation at the infrastructure level have been mostly highlighting how thoughtful Cloudflare's piece is. I'd link to some of those discussions on Twitter, except that at least a few of the examples I've seen have since been deleted, as they were attacked in response, because they appeared to be supporting Cloudflare's thoughtful take on this - and that meant not allowing the public to burn down Kiwi Farms. Is there some irony in the fact that those demanding the removal of Kiwi Farms for the harassment it enables immediately resort to harassment of anyone who tries to hold a nuanced discussion on the topic? Perhaps.

There are other parts of Cloudflare's statement that I question. I think the company is way too quick to claim that its security services are the equivalent of a utility, as that seemingly suggests a kind of must-carry requirement that, in turn, raises many other thorny questions. Also, while I understand why the company talked up some of the projects it has put in place to do good in the world, and in particular some of the many projects it has put together to help better protect at-risk people (which truly are good programs, and which the company has generally not hyped up too much), it does come off as quite self-serving here, and reads awkwardly.

But, of course, none of that post answered the simple question, in which there is no room for nuance: why is Kiwi Farms still allowed to use Cloudflare's infrastructure? A few days later, Cloudflare admitted that it couldn't really answer that question either and took action.

It's a case where principles are important, but if your principles take you to a place where you eventually realize harm is happening that you could stop, it may be time to revisit at least some of them.

The problem is that it shouldn't be Cloudflare having to answer the question, because the only tool in its toolbox, really, is to stand aside and let the people with pitchforks burn a site down. And that should be worrying in its own right.

The real question should be why have we set up this world where it's Cloudflare's decision to make in the first place? And, once again, that leads us back to what seems to be at the heart of so many of these content moderation debates: there are larger societal issues at play here, and the one party that is supposed to be dealing with larger societal issues, the government, continues to fail to deal with anything... and then leaves it up to private corporations to shoulder the burden - and the widespread hate.

Much of it only exists because the government failed to do its job.

At the very least, I appreciate that Cloudflare management is willing to say "we should take a principled look at how we deal with this," whereas so many other companies take a totally arbitrary position where decisions are inscrutable. And, over and over again, one of the biggest complaints that people have about content moderation is that the policies frequently seem so reactive and arbitrary, rather than thoughtful and principled.

But, when taking a thoughtful and principled stance leads to the wrong decision - as seems to have been the case here - eventually someone has to step in and correct it. I'm glad that Cloudflare made the right call eventually, but I agree with its general principles, and even more importantly with the idea that this shouldn't be on the shoulders of one company. We should be exploring how society allowed this to happen in the first place, and left it on Cloudflare to fix.