Lagrange multiplier setup: Now what?

Suppose you need to optimize, i.e. maximize or minimize, a function f(x). If this is a practical problem and not a textbook exercise, you probably need to optimize f(x) subject to some constraint on x, say g(x) = 0.

Hmm. Optimize one function subject to a constraint given by another function. Oh yeah, Lagrange multipliers! You just need to solve

$$\nabla f(x) = \lambda \nabla g(x)$$

Or maybe you have two constraints: g(x) = 0 and h(x) = 0. No big deal. Just introduce another Lagrange multiplier:

$$\nabla f(x) = \lambda_1 \nabla g(x) + \lambda_2 \nabla h(x)$$

OK. Now what?

Suppose your x is an n-dimensional vector, and you have k constraints. Now you have a system of n + k equations in n + k unknowns, one equation for each component of the Lagrange multiplier equation and one equation for each constraint.
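To make the count concrete, here is a small illustrative example (my own, not from any particular application): optimize f(x, y) = x + y subject to g(x, y) = x² + y² − 1 = 0. Here n = 2 and k = 1, so the Lagrange multiplier equation ∇f = λ∇g contributes two equations and the constraint contributes one, giving three equations in the three unknowns x, y, and λ:

$$
\begin{aligned}
1 &= 2\lambda x \\
1 &= 2\lambda y \\
x^2 + y^2 - 1 &= 0
\end{aligned}
$$

This one is small enough to solve by hand: x = y = λ = ±1/√2. Note that even this toy system is nonlinear.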

If your system of equations is linear, hurrah! Maybe it won't be hard to find where your function takes on its maximum and minimum values [1].
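As a minimal sketch of the linear case, assume a quadratic objective f(x) = ½ xᵀQx − cᵀx with linear constraints Ax = b. The matrices `Q`, `A` and vectors `c`, `b` below are made-up illustration values. The Lagrange condition Qx − c = Aᵀλ and the constraints stack into a single (n + k) × (n + k) linear system:

```python
import numpy as np

# Hypothetical example: optimize f(x) = 0.5 * x^T Q x - c^T x subject to A x = b.
Q = np.array([[2.0, 0.0],
              [0.0, 2.0]])   # f(x, y) = x^2 + y^2
c = np.zeros(2)
A = np.array([[1.0, 1.0]])   # one constraint: x + y = 1
b = np.array([1.0])

n, k = Q.shape[0], A.shape[0]

# Lagrange condition grad f = A^T lam, i.e. Q x - c = A^T lam,
# together with A x = b, written as one block linear system.
K = np.block([[Q, -A.T],
              [A, np.zeros((k, k))]])
rhs = np.concatenate([c, b])

solution = np.linalg.solve(K, rhs)
x_opt, lam = solution[:n], solution[n:]
print(x_opt, lam)
```

Solving the system only finds a stationary point. Whether it is a maximum, a minimum, or neither depends on Q and the constraints.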

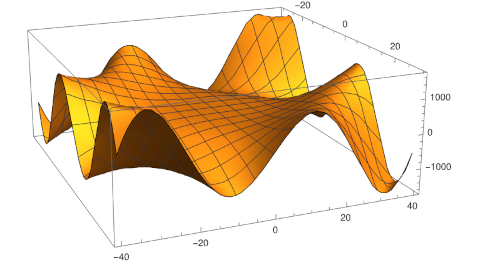

But in general you have a system of nonlinear equations, potentially a lot of nonlinear equations in a lot of unknowns. Maybe your new problem is harder than your original problem. That is, it might be easier to optimize your original function, subject to its constraints, using a different method than to solve the nonlinear system of equations that applying Lagrange multipliers gives you.

How do you solve a system of nonlinear equations? That's a hard question because "nonlinear" is not a hypothesis; it's the negation of a hypothesis. Suppose I tell you I'm thinking of an animal, and it's not an elephant. That's not much to go on. Non-linear is sorta like non-elephantine: you've said what a thing is not, but you haven't said what it is.
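With no structure to exploit, one generic fallback is Newton's method, which only promises convergence if you start close enough to a solution. Here is a rough sketch on a toy system of my own choosing: the Lagrange equations for optimizing x + y on the unit circle, namely 1 = 2λx, 1 = 2λy, x² + y² = 1. The function names and the starting guess are illustrative assumptions.

```python
import numpy as np

def F(v):
    # Residuals of the toy Lagrange system; unknowns are (x, y, lam).
    x, y, lam = v
    return np.array([1 - 2 * lam * x,
                     1 - 2 * lam * y,
                     x**2 + y**2 - 1])

def J(v):
    # Jacobian of F with respect to (x, y, lam).
    x, y, lam = v
    return np.array([[-2 * lam, 0.0, -2 * x],
                     [0.0, -2 * lam, -2 * y],
                     [2 * x, 2 * y, 0.0]])

def newton(F, J, v0, tol=1e-12, max_iter=50):
    # Plain Newton iteration: solve J(v) step = -F(v), then update v.
    v = np.asarray(v0, dtype=float)
    for _ in range(max_iter):
        step = np.linalg.solve(J(v), -F(v))
        v = v + step
        if np.linalg.norm(step) < tol:
            return v
    raise RuntimeError("Newton's method did not converge")

root = newton(F, J, v0=[1.0, 1.0, 1.0])
print(root)
```

From this starting point the iteration settles on x = y = λ = 1/√2. A different starting guess could land on the other solution, or fail to converge at all, which is part of what makes the general nonlinear case hard.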

One way for functions to be nonlinear is to be convex; convexity is often a tractable generalization of linearity. If your original optimization problem is convex, i.e. both the objective function and the constraints are convex functions, then directly solving your optimization problem may be easier than introducing Lagrange multipliers.
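As one illustration of attacking a convex problem directly, here is a sketch of projected gradient descent, which never introduces a multiplier. The problem is a made-up example: minimize exp(x) + exp(y) subject to x + y = 1. I'm assuming an affine equality constraint so that projecting onto the feasible set has a closed form. The step size and iteration count are arbitrary choices that happen to work here.

```python
import numpy as np

def grad_f(p):
    # Gradient of the convex objective f(x, y) = exp(x) + exp(y).
    return np.exp(p)

def project(p):
    # Orthogonal projection onto the line x + y = 1.
    a = np.array([1.0, 1.0])
    return p - a * (a @ p - 1.0) / (a @ a)

# Start from a feasible point, then alternate gradient steps and projections.
p = project(np.array([1.0, 0.0]))
for _ in range(500):
    p = project(p - 0.1 * grad_f(p))

print(p)
```

By symmetry the minimizer is x = y = 1/2, and the iterates approach it. For serious work you would reach for a convex optimization library rather than hand-rolled iterations.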

Another class of nonlinear functions is polynomials. If Lagrange multipliers give you a system of several polynomial equations in several unknowns, you may be able to solve your system of equations using techniques from algebraic geometry.
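One such technique is computing a Gröbner basis, which plays a role for polynomial systems loosely analogous to Gaussian elimination for linear ones. The sketch below uses SymPy's `groebner` on a toy system of my own choosing (the Lagrange equations for optimizing x + y on the unit circle). With lexicographic order, the basis of a system with finitely many solutions contains a polynomial in the last variable alone, which you can solve and back-substitute.

```python
import sympy as sp

x, y, lam = sp.symbols("x y lam")

# Toy Lagrange system: 1 = 2*lam*x, 1 = 2*lam*y, x^2 + y^2 = 1.
system = [1 - 2 * lam * x,
          1 - 2 * lam * y,
          x**2 + y**2 - 1]

# Lexicographic order with x > y > lam eliminates x and y first.
G = sp.groebner(system, x, y, lam, order="lex")

# Pick out the basis elements that involve lam only.
univariate = [g for g in G.exprs if g.free_symbols == {lam}]
lam_values = sp.solve(univariate[0], lam)

print(G.exprs)
print(lam_values)
```

Gröbner basis computations can become very expensive as the number of variables and the degrees grow, so this is no guarantee that a large polynomial system is tractable.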

If your equations do not involve polynomial functions, but do involve functions that can be adequately approximated by polynomial functions, maybe the algebraic geometry route will work. But "adequate" here means that the solution to the approximate problem, the polynomial problem, gives a sufficiently accurate solution to the original problem. That may be hard to assess.

Your initial exposure to Lagrange multipliers in a calculus class may have left the wrong impression. Calculus textbooks are filled with carefully contrived exercises that can be solved in a moderate amount of time, and for good reason. The problems you solve for exercises are not intrinsically important; you're solving them to learn a technique. But in a real application, where the problem is intrinsically important (and the solution technique is not), things might be a lot harder.

[1] If the system of equations is linear, then your optimization problem must have a very special form: optimizing a quadratic objective function subject to linear constraints, i.e. a quadratic programming problem.

The post Lagrange multiplier setup: Now what? first appeared on John D. Cook.