When my dad was sick, I started Googling grief. Then I couldn’t escape it.

I've always been a super-Googler, coping with uncertainty by trying to learn as much as I can about whatever might be coming. That included my father's throat cancer. Initially I focused on the purely medical. I endeavored to learn as much as I could about molecular biomarkers, transoral robotic surgeries, and the functional anatomy of the epiglottis.

Then, as grief started to become a likely scenario, it too got the same treatment. It seemed that one of the pillars of my life, my dad, was about to fall, and I grew obsessed with trying to understand and prepare for that.

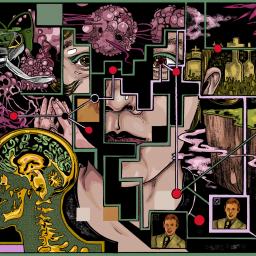

I am a mostly visual thinker, and thoughts pose as scenes in the theater of my mind. When my many supportive family members, friends, and colleagues asked how I was doing, I'd see myself on a cliff, transfixed by an omniscient fog just past its edge. I'm there on the brink, with my parents and sisters, searching for a way down. In the scene, there is no sound or urgency and I am waiting for it to swallow me. I'm searching for shapes and navigational clues, but it's so huge and gray and boundless.

I wanted to take that fog and put it under a microscope. I started Googling the stages of grief, along with books and academic research about loss, in the Google app on my iPhone, perusing personal disaster while I waited for coffee or watched Netflix. How will it feel? How will I manage it?

I started, intentionally and unintentionally, consuming people's experiences of grief and tragedy through Instagram videos, various newsfeeds, and Twitter testimonials. It was as if the internet secretly teamed up with my compulsions and started indulging my own worst fantasies; the algorithms were a sort of priest, offering confession and communion.

Yet with every search and click, I inadvertently created a sticky web of digital grief. Ultimately, it would prove nearly impossible to untangle myself. My mournful digital life was preserved in amber by the pernicious personalized algorithms that had deftly observed my mental preoccupations and offered me ever more cancer and loss.

I got out, eventually. But why is it so hard to unsubscribe from and opt out of content that we don't want, even when it's harmful to us?

I'm well aware of the power of algorithms: I've written about the mental-health impact of Instagram filters, the polarizing effect of Big Tech's infatuation with engagement, and the strange ways that advertisers target specific audiences. But in my haze of panic and searching, I initially felt that my algorithms were a force for good. (Yes, I'm calling them "my" algorithms, because while I realize the code is uniform, the output is so intensely personal that they feel like mine.) They seemed to be working with me, helping me find stories of people managing tragedy, making me feel less alone and more capable.

In reality, I was intimately and intensely experiencing the effects of an advertising-driven internet, which Ethan Zuckerman, the renowned internet ethicist and professor of public policy, information, and communication at the University of Massachusetts at Amherst, famously called the "Internet's Original Sin" in a 2014 Atlantic piece. In the story, he explained the advertising model that brings revenue to content sites that are most equipped to target the right audience at the right time and at scale. "This, of course, requires moving deeper into the world of surveillance," he wrote. This incentive structure is now known as "surveillance capitalism."

Understanding how exactly to maximize the engagement of each user on a platform is the formula for revenue, and it's the foundation for the current economic model of the web.

In principle, most ad targeting still exploits basic methods like segmentation, where people grouped by characteristics such as gender, age, and location are served content akin to what others in their group have engaged with or liked.

But in the eight and a half years since Zuckerman's piece, artificial intelligence and the collection of ever more data have made targeting exponentially more personalized and chronic. The rise of machine learning has made it easier to direct content on the basis of digital behavioral data points rather than demographic attributes. "These can be stronger predictors than traditional segmenting," according to Max Van Kleek, a researcher on human-computer interaction at the University of Oxford. Digital behavioral data is also very easy to access and accumulate. The system is incredibly effective at capturing personal data: each click, scroll, and view is documented, measured, and categorized.

Simply put, the more that Instagram and Amazon and the other various platforms I frequented could entangle me in webs of despair for ever more minutes and hours of my day, the more content and the more ads they could serve me.

Whether you're aware of it or not, you're also probably caught in a digital pattern of some kind. These cycles can quickly turn harmful, and I spent months asking experts how we can get more control over rogue algorithms.

A history of grieving

This story starts at what I mistakenly thought was the end of a marathon: 16 months after my dad went to the dentist for a toothache and hours later got a voicemail about cancer. That was really the only day I felt brave.

The marathon was a 26.2-mile army crawl. By mile 3, all the skin on your elbows is ground up and there's a paste of pink tissue and gravel on the pavement. It's bone by mile 10. But after 33 rounds of radiation with chemotherapy, we thought we were at the finish line.

Then this past summer, my dad's cancer made a very unlikely comeback, with a vengeance, and it wasn't clear whether it was treatable.

Really, the sounds were the worst. The coughing, coughing, choking (Is he breathing? He's not breathing, he's not breathing), choking, vomit, cough. Breath.

That was the soundtrack as I started grieving my dad privately, prematurely, and voyeuristically.

I began reading obituaries from bed in the morning.

The husband of a fellow Notre Dame alumna dropped dead during a morning run. I started checking her Instagram daily, trying to get a closer view. This drew me into #widowjourney and #youngwidow. Soon, Instagram began recommending the accounts of other widows.

I stayed up all night sometime around Thanksgiving sobbing as I traveled through a rabbit hole about the death of Princess Diana.

Sometime that month, my Amazon account gained a footer of grief-oriented book recommendations. I was invited to consider The Year of Magical Thinking, Crying in H Mart: A Memoir, and F*ck Death: An Honest Guide to Getting Through Grief Without the Condolences, Sympathy, and Other BS as I shopped for face lotion.

Amazon's website says its recommendations are based on your "interests." The site explains, "We examine the items you've purchased, items you've told us you own, and items you've rated. We compare your activity on our site with that of other customers, and using this comparison, recommend other items that may interest you in Your Amazon." (An Amazon spokesperson gave me a similar explanation and told me I could edit my browsing history.)

At some point, I had searched for a book on loss.

Content recommendation algorithms run on methods similar to ad targeting, though each of the major content platforms has its own formula for measuring user engagement and determining which posts are prioritized for different people. And those algorithms change all the time, in part because AI enables them to get better and better, and in part because platforms are trying to prevent users from gaming the system.

Sometimes it's not even clear what exactly the recommendation algorithms are trying to achieve, says Ranjit Singh, a data and policy researcher at Data & Society, a nonprofit research organization focused on tech governance. "One of the challenges of doing this work is also that in a lot of machine-learning modeling, how the model comes up with the recommendation that it does is something that is even unclear to the people who coded the system," he says.

This is at least partly why by the time I became aware of the cycle I had created, there was little I could do to quickly get out. All this automation makes it harder for individual users and tech companies alike to control and adjust the algorithms. It's much harder to redirect an algorithm when it's not clear why it's serving certain content in the first place.

When personalization becomes toxic

One night, I described my cliff phantasm to a dear friend as she drove me home after dinner. She had tragically lost her own dad. She gently suggested that I could maybe stop examining the fog. "Have you tried looking away?" she asked.

Perhaps I could fix my gaze on those with me at this lookout and try to appreciate that we had not yet had to walk over the edge.

It was brilliant advice that my therapist agreed with enthusiastically.

I committed to creating more memories in the present with my family rather than spending so much time alone wallowing in what might come. I struck up conversations with my dad and told him stories I hadn't before.

I tried hard to bypass triggering stories on my feeds and regain focus when I started going down a rabbit hole. I stopped checking for updates from the widows and widowers I had grown attached to. I unfollowed them along with other content I knew was unhealthy.

But the more I tried to avoid it, the more it came to me. No longer a priest, my algorithms had become more like a begging dog.

My Google mobile app was perhaps the most relentless, as it seemed to insightfully connect all my searching for cancer pathologies to stories of personal loss. In the home screen of my search app, which Google calls "Discover," a YouTube video imploring me to "Trust God Even When Life Is Hard" would be followed by a Healthline story detailing the symptoms of bladder cancer.

(As a Google spokesperson explained to me, "Discover helps you find information from high-quality sources about topics you're interested in. Our systems are not designed to infer sensitive characteristics like health conditions, but sometimes content about these topics could appear in Discover." I took this to mean that I was not supposed to be seeing the content I was. The spokesperson added, "[W]e're working to make it easier for people to provide direct feedback and have even more control over what they see in their feed.")

"There's an assumption the industry makes that personalization is a positive thing," says Singh. "The reason they collect all of this data is because they want to personalize services so that it's exactly catered to what you want."

But, he cautions, this strategy is informed by two false ideas that are common among people working in the field. The first is that platforms ought to prioritize the individual unit, so that if a person wants to see extreme content, the platform should offer extreme content; the effect of that content on an individual's health or on broader communities is peripheral.

The second is that the algorithm is the best judge of what content you actually want to see.

For me, both assumptions were not just wrong but harmful. Not only were the various algorithms I interacted with no longer trusted mediators, but by the time I realized all my ideation was unhealthy, the web of content I'd been living in was overwhelming.

I found that the urge to click loss-related prompts was inescapable, and at the same time, the content seemed to be getting more tragic. Next to articles about the midterm elections, I'd see advertisements for stories about someone who died unexpectedly just hours after their wedding and the increase in breast cancer in women under 30.

"These algorithms can 'rabbit hole' users into content that can feel detrimental to their mental health," says Nina Vasan, the founder and executive director of Brainstorm, a Stanford mental-health lab. "For example, you can feel inundated with information about cancer and grief, and that content can get increasingly emotionally extreme."

Eventually, I deleted the Instagram and Twitter apps from my phone altogether. I stopped looking at stories suggested by Google. Afterwards, I felt lighter and more present. The fog seemed further out.

The internet doesn't forget

My dad started to stabilize by early winter, and I began to transition from a state of crisis to one of tentative normalcy (though still largely app-less). I also went back to work, which requires a lot of time online.

The internet is less forgetful than people; that's one of its main strengths. But the harmful effects of digital permanence have been widely exposed; for example, there's the detrimental impact that a documented adolescence has on identity as we age. In one particularly memorable essay, Wired's Lauren Goode wrote about how various apps kept re-upping old photos and wouldn't let her forget that she was once meant to be a bride after she called off her wedding.

When I logged back on, my grief-obsessed algorithms were waiting for me with a persistence I had not anticipated. I just wanted them to leave me alone.

As Singh notes, fulfilling that wish raises technical challenges. "At a particular moment of time, this was a good recommendation for me, but it's not now. So how do I actually make that difference legible to an algorithm or a recommendation system? I believe that it's an unanswered question," he says.

Oxford's Van Kleek echoes this, explaining that managing upsetting content is a hugely subjective challenge, which makes it hard to deal with technically. "The exposure to a single piece of information can be completely harmless or deeply harmful depending on your experience," he says. It's quite hard to deal with that subjectivity when you consider just how much potentially triggering information is on the web.

We don't have tools of transparency that allow us to understand and manage what we see online, so we make up theories and change our scrolling behavior accordingly. (There's an entire research field around this behavior, called "algorithmic folk theories," which explores all the conjectures we make as we try to decipher the algorithms that sort our digital lives.)

I supposed not clicking or looking at content centered on trauma and cancer ought to do the trick eventually. I'd scroll quickly past a post about a brain tumor on my Instagram's "For you" page, as if passing an old acquaintance I was trying to avoid on the street.

It did not really work.

"Most of these companies really fiddle with how they define engagement. So it can vary from one time in space to another, depending on how they're defining it from month to month," says Robyn Caplan, a social media researcher at Data & Society.

Many platforms have begun to build in features to give users more control over their recommendations. "There are a lot more mechanisms than we realize," Caplan adds, though using those tools can be confusing. "You should be able to break free of something that you find negative in your life in online spaces. There are ways that these companies have built that in, to some degree. We don't always know whether they're effective or not, or how they work." Instagram, for instance, allows you to click "Not interested" on suggested posts (though I admit I never tried to do it). A spokesperson for the company also suggested that I adjust the interests in my account settings to better curate my feed.

By this point, I was frustrated that I was having such a hard time moving on. Cancer sucks so much time, emotion, and energy from the lives and families it affects, and my digital space was making it challenging to find balance. While searching Twitter for developments on tech legislation for work, I'd be prompted with stories about a child dying of a rare cancer.

I resolved to be more aggressive about reshaping my digital life.

How to better manage your digital space

I started muting and unfollowing accounts on Instagram when I'd scroll past triggering content, at first tentatively and then vigorously. A spokesperson for Instagram sent over a list of helpful features that I could use, including an option to snooze suggested posts and to turn on reminders to "take a break" after a set period of time on the app.

I cleared my search history on Google and sought out Twitter accounts related to my professional interests. I adjusted my recommendations on Amazon (Account > Recommendations > Improve your recommendations) and cleared my browsing history.

I also capitalized on my network of sources (a privilege of my job that few in similar situations would have) and collected a handful of tips from researchers about how to better control rogue algorithms. Some I knew about; others I didn't.

Everyone I talked to told me I had been right to assume that disengaging from content I didn't want to see works, though they emphasized that it takes time. For me, it has taken months. It has also required that I keep exposing myself to harmful content and manage any triggering effects while I do this, a reality that anyone in a similar situation should be aware of.

Relatedly, experts told me that engaging with content you do want to see is important. Caplan told me she personally asked her friends to tag her and DM her with happy and funny content when her own digital space grew overwhelming.

"That is one way that we kind of reproduce the things that we experience in our social life into online spaces," she says. "So if you're finding that you are depressed and you're constantly reading sad stories, what do you do? You ask your friends, 'Oh, what's a funny show to watch?'"

Another strategy experts mentioned is obfuscation: trying to confuse your algorithm. Tactics include liking and engaging with alternative content, ideally on topics for which the platform is likely to have a plethora of further suggestions, like dogs, gardening, or political news. (I personally chose to engage with accounts related to #DadHumor, which I do not regret.) Singh recommended handing over the account to a friend for a few days with instructions to use it however feels natural to them, which can help you avoid harmful content and also throw off the algorithm.

You can also hide from your algorithms by using incognito mode or private browsers, or by regularly clearing browsing histories and cookies (this is also just good digital hygiene). I turned off "Personal results" on my Google iPhone app, which helped immensely.

One of my favorite tips was to embrace the "Finsta," a reference to fake Instagram accounts. Not only on Instagram but across your digital life, you can make multiple profiles dedicated to different interests or modes. I created multiple Google accounts: one for my personal life, one for professional content, another for medical needs. I now search, correspond, and store information accordingly, which has made me more organized and more comfortable online in general.

All this is a lot of work and requires a lot of digital savvy, time, and effort from the end user, which in and of itself can be harmful. Even with the right tools, it's incredibly important to be mindful of how much time you spend online. Research findings are overwhelming at this point: too much time on social media leads to higher rates of depression and anxiety.

"For most people, studies suggest that spending more than one hour a day on social media can make mental health worse. Overall there is a link between increase in time spent on social media and worsening mental health," says Stanford's Vasan. She recommends taking breaks to reset or regularly evaluating how your time spent online is making you feel.

A clean scan

Cancer does not really end; you just sort of slowly walk out of it, and I am still navigating stickiness across the personal, social, and professional spheres of my life. First you finish treatment. Then you get an initial clean scan. The sores start to close, though the fatigue lasts for years. And you hope for a second clean scan, and another after that.

The faces of doctors and nurses who carried you every day begin to blur in your memory. Sometime in December, topics like work and weddings started taking up more time than cancer during conversations with friends.

My dad got a cancer-free scan a few weeks ago. My focus and creativity have mostly returned and I don't need to take as many breaks. I feel anxiety melting out of my spine in a slow, satisfying drip.

And while my online environment has gotten better, it's still not perfect. I'm no longer traveling down rabbit holes of tragedy. I'd say some of my apps are cleansed; some are still getting there. The advertisements served to me across the web often still center on cancer or sudden death. But taking an active approach to managing my digital space, as outlined above, has dramatically improved my experience online and my mental health overall.

Still, I remain surprised at just how harmful and inescapable my algorithms became while I was struggling this fall. Our digital lives are an inseparable part of how we experience the world, but the mechanisms that reinforce our subconscious behaviors or obsessions, like recommendation algorithms, can make our digital experience really destructive. This, of course, can be particularly damaging for people struggling with issues like self-harm or eating disorders, even more so if they're young.

With all this in mind, I'm very deliberate these days about what I look at and how.

What I actually want is to control when I look at information about disease, grief, and anxiety. I'd actually like to be able to read about cancer, at appropriate times, and understand the new research coming out. My dad's treatment is fairly new and experimental. If he'd gotten the same diagnosis five years ago, it most certainly would have been a death sentence. The field is changing, and I'd like to stay on top of it. And when my parents do pass away, I want to be able to find support online.

But I won't do any of it the same way. For a long time, I was relatively dismissive of alternative methods of living online. It seemed burdensome to find new ways of doing everyday things like searching, shopping, and following friends; the power of tech behemoths lies largely in the ease they guarantee.

Indeed, Zuckerman tells me that the challenge now is finding practical substitute digital models that empower users. There are viable options; user control over data and platforms is part of the ethos behind hyped concepts like Web3. Van Kleek says the reignition of the open-source movement in recent years makes him hopeful: increased transparency and collaboration on projects like Mastodon, the burgeoning Twitter alternative, might give less power to the algorithm and more power to the user.

"I would suggest that it's not as bad as you fear. Nine years ago, complaining about an advertising-based web was a weird thing to be doing. Now it's a mainstream complaint," Zuckerman recently wrote to me in an email. "We just need to channel that dissatisfaction into actual alternatives and change."

My biggest digital preoccupation these days is navigating the best way to stay connected with my dad over the phone now that I am back in my apartment 1,200 miles away. Cancer stole the "g" from "Good morning, ball player girl," his signature greeting, when it took half his tongue.

I still Google things like "How to clean a feeding tube" and recently watched a YouTube video to refresh my memory of the Heimlich maneuver. But now I use Tor.

Clarification: This story has been updated to reflect that the explanation of Amazon's recommendations on its site refers to its recommendation algorithm generally, not specifically its advertising recommendations.