Image Recognition Has an Income Problem

Image-recognition neural networks are only as good as the data they're trained on. And that data, at least the easily available data, is heavy on images from high-income countries in Europe and North America. So when confronted with everyday household items from lower-income countries, these networks get it right as little as 20 percent of the time, according to research presented at NeurIPS 2022. But a set of training data released today by the machine-learning benchmarking organization MLCommons makes the image-recognition neural network ResNet more than 50 percent more accurate. "The goal is to make machine learning work for everyone," says MLCommons executive director David Kanter.

You can see the problem below. These are all stoves, even if your typical computer vision system wouldn't always know it:

These are all stoves, but today's machine-learning systems would likely struggle to recognize them as such. Source: "The Dollar Street Dataset: Images Representing the Geographic and Socioeconomic Diversity of the World"; Coactive AI


The images for the data set come from a project called Dollar Street run by Gapminder, a Swedish nonprofit. Gapminder sent photographers into more than 400 homes in 63 countries around the world to photograph nearly 300 categories of household objects. Crucially, these homes spanned a wide range of incomes. Gapminder then collaborated with MLCommons, Harvard University, and startup Coactive AI to curate the images and their metadata into a form usable in neural-network training. MLCommons will host the data, providing it under a CC-BY 4.0 license (meaning it can be used without charge for both commercial and academic purposes). The hope is that using Dollar Street will help researchers and companies build new image-recognition systems that are less biased against people in low-income countries.

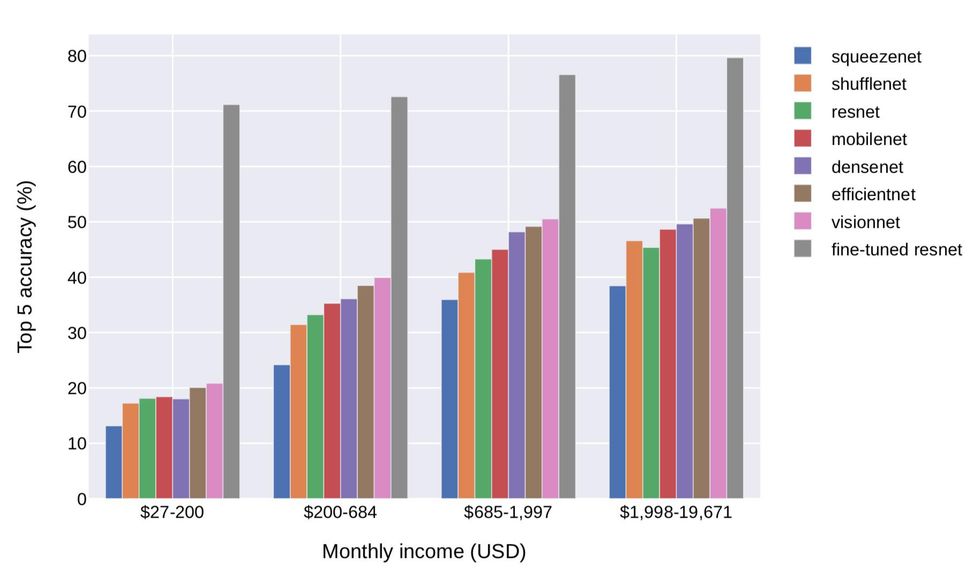

Image-recognition neural networks performed progressively worse as household income fell. Training with Dollar Street data improved accuracy across the board. Source: "The Dollar Street Dataset: Images Representing the Geographic and Socioeconomic Diversity of the World"; Coactive AI


The Dollar Street data set at a glance:

- 38,479 images
- 289 everyday household items
- 404 homes
- 63 countries
- 407 median images per country
- US $26.99 to $19,672 monthly household income*
- $685 median monthly income
- 101.3 GB total data set size

\* Income is measured by consumption, in U.S. dollars adjusted for purchasing power parity.

The collaboration demonstrated Dollar Street's usefulness in research presented late last year. The researchers trained seven common image-recognition neural networks on the ImageNet data set, mapped Dollar Street's metadata to ImageNet categories, and tested the networks on the new images. They then divided the results into income quartiles. The networks did well enough on images taken from homes in the highest income quartile (US $1,998 to $19,671 per month), but they got progressively worse as incomes fell. In the lowest quartile, $27 to $200 per month, even the best network barely cracked 20 percent accuracy.
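The evaluation described above boils down to three steps: keep only images whose Dollar Street topic has an ImageNet counterpart, sort each image into an income quartile, and count how often the model's prediction falls in the accepted class set. The following is a minimal sketch of that bookkeeping in Python, not the researchers' code. The names `TOPIC_TO_IMAGENET` and `accuracy_by_income_quartile`, the record format, and the example mapping are all hypothetical. The sketch also assumes quartile cut points are computed from the scored images with `statistics.quantiles`; the published quartile boundaries may have been defined differently.

```python
import bisect
from statistics import quantiles

# Hypothetical mapping from Dollar Street topics to the ImageNet class names
# counted as a correct prediction; the mapping used in the research is not shown.
TOPIC_TO_IMAGENET = {
    "stove": {"stove", "Dutch oven"},
    "toothbrush": {"toothbrush"},
}


def accuracy_by_income_quartile(records, topic_map):
    """Return top-1 accuracy per income quartile, poorest quartile first.

    records: iterable of (monthly_income_usd, dollar_street_topic, predicted_class)
    topic_map: dict from Dollar Street topic to a set of accepted ImageNet classes
    """
    # Only images whose topic has an ImageNet counterpart can be scored.
    scored = [r for r in records if r[1] in topic_map]
    if len(scored) < 2:
        raise ValueError("need at least two scorable images to form quartiles")

    # Three cut points split the observed incomes into four groups (assumption:
    # quartiles are taken over the scored images themselves).
    cuts = quantiles([income for income, _, _ in scored], n=4)

    hits = [0, 0, 0, 0]
    totals = [0, 0, 0, 0]
    for income, topic, predicted in scored:
        q = bisect.bisect_left(cuts, income)  # 0 = poorest quartile, 3 = richest
        totals[q] += 1
        if predicted in topic_map[topic]:
            hits[q] += 1

    # NaN marks a quartile that received no images (possible with tied incomes).
    return [h / t if t else float("nan") for h, t in zip(hits, totals)]
```

Running this once per model's predictions yields one accuracy curve per network, which is the shape of the comparison the researchers report: accuracy by income quartile rather than a single overall score that would hide the gap.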

"The results were jaw-dropping," says MLCommons' Kanter, a coauthor of the NeurIPS research. "It's terrible. There's no two ways about it."

But "fine-tuning" the ResNet neural network on a selection of Dollar Street images boosted its accuracy to 70 percent or above for all income groups.

Going forward, MLCommons will update the data set and will also remove data if the people pictured ask it to in the future. The organization has published a blog post about Dollar Street alongside the data set, and a TED talk describes the project's origins.