AI Reconstructs 'High-Quality' Video Directly from Brain Readings in Study

Researchers have used generative AI to reconstruct "high-quality" video from brain activity, a new study reports.

Researchers Jiaxin Qing, Zijiao Chen, and Juan Helen Zhou from the National University of Singapore and The Chinese University of Hong Kong used fMRI data and the text-to-image AI model Stable Diffusion to create a model called MinD-Video that generates video from the brain readings. Their paper describing the work was posted to the arXiv preprint server last week.

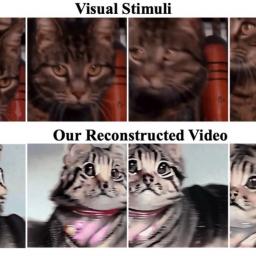

Their demonstration on the paper's corresponding website shows a parallel between videos that were shown to subjects and the AI-generated videos created based on their brain activity. The differences between the two videos are slight and for the most part, contain similar subjects and color palettes.

MinD-Video is defined by the researchers as a two-module pipeline designed to bridge the gap between image and video brain decoding." To train the system, the researchers used a publicly available dataset containing videos and fMRI brain readings from test subjects who watched them. The "two-module pipeline" comprised a trained fMRI encoder and a fine-tuned version of Stable Diffusion, a widely-used image generation AI model.

Videos published by the researchers show the original video of horses in a field and then a reconstructed video of a more vibrantly colored version of the horses. In another video, a car drives down a wooded area and the reconstructed video displays a first-person-POV of someone traveling down a winding road. The researchers found that the reconstructed videos were "high-quality," as defined by motions and scene dynamics. They also reported that the videos had an accuracy of 85 percent, an improvement on previous approaches.

We believe this field has promising applications as large models develop, from neuroscience to brain-computer interfaces," the authors wrote.

Specifically, they said that these results illuminated three major findings. One is the dominance of the visual cortex, revealing that this part of the brain is a major component of visual perception. Another is that the fMRI encoder operates in a hierarchical fashion, which begins with structural information and then shifts to more abstract and visual features on deeper layers. Finally, the authors found that the fMRI encoder evolved through each learning stage, showing its ability to take on more nuanced information as it continues its training.

This study represents another advancement in the field of, essentially, reading people's minds using AI. Previously, researchers at Osaka University found that they could reconstruct high-resolution images from brain activity with a technique that also used fMRI data and Stable Diffusion.

The augmented Stable Diffusion model in this new research allows the visualization to be more accurate. One of the key advantages of our stable diffusion model over other generative models, such as GANs, lies in its ability to produce higher-quality videos. It leverages the representations learned by the fMRI encoder and utilizes its unique diffusion process to generate videos that are not only of superior quality but also better align with the original neural activities," the researchers wrote.