Spectrum's Top AI Stories of 2023

2023 may well go down as one of the wildest and most dramatic years in the history of artificial intelligence. People were still struggling to understand the power of OpenAI's ChatGPT, which had been introduced in late 2022, when the company released its newest large language model, GPT-4, in March 2023 (LLMs are essentially the brains behind consumer-facing applications).

All through the spring of 2023, important and credible people freaked out about the possible negative consequences of ever-improving AI, ranging from somewhat troubling to existentially bad. First came an open letter calling for a pause on the development of advanced models, then a statement about existential risk. Later in the year came the first international summit on AI safety and landmark regulations in the form of a U.S. executive order and the E.U. AI Act.

Here are Spectrum's top 10 articles of 2023 about AI, according to how much time readers spent with them. Take a look to get the flavor of AI in 2023, a year that may well go down in history... unless 2024 is even crazier.

10. AI Art Generators Can Be Fooled Into Making NSFW Images:

Pai-Shih Lee/Getty Images

With text-to-image generators like DALL-E 2 and Stable Diffusion, users type a prompt describing the image they'd like to produce, and the model does the rest. And while these generators have safeguards to keep users from producing violent, pornographic, and otherwise unacceptable images, both AI researchers and hackers have taken delight in figuring out how to circumvent them. For white hats and black hats alike, jailbreaking is the new hobby.

9. OpenAI's Moonshot: Solving the AI Alignment Problem:

Daniel Zender

This Q&A with OpenAI's Jan Leike delves into the AI alignment problem. That's the concern that we may build superintelligent AI systems whose goals are not aligned with those of humans, potentially leading to the extinction of our species. It's legitimately an important issue, and OpenAI is devoting serious resources to finding ways to empirically research a problem that doesn't yet exist (because superintelligent AI systems don't yet exist).

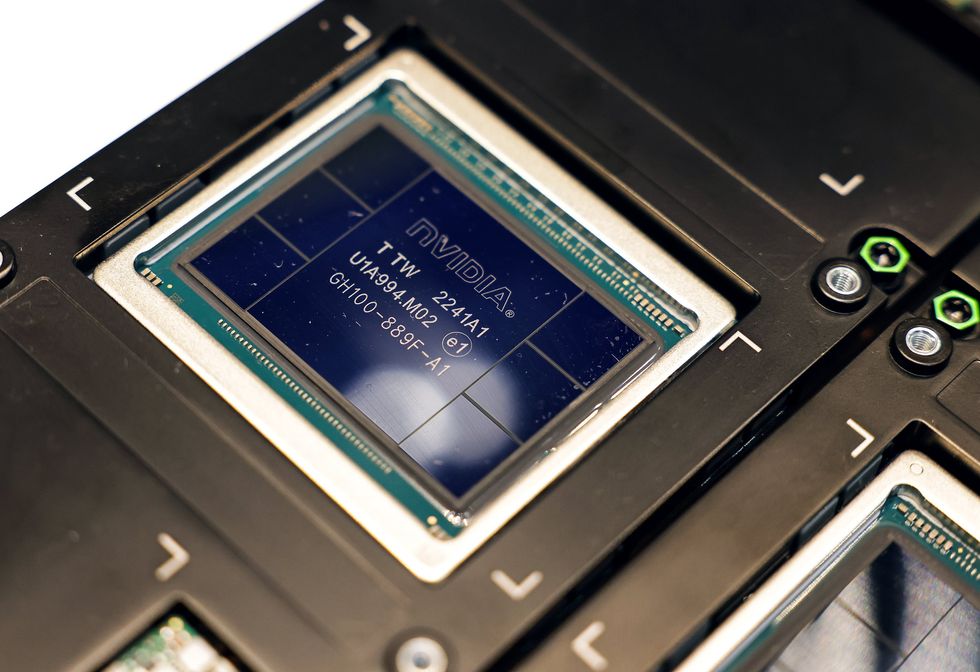

8. The Secret to Nvidia's Success:

I-Hwa Cheng/Bloomberg/Getty Images

Nvidia had a great year as its AI-accelerating GPU, the H100, became arguably the hottest piece of hardware in tech. The company's chief scientist, Bill Dally, reflected at a conference on the four ingredients that launched Nvidia into the stratosphere. "Moore's Law was a surprisingly small part of Nvidia's magic and new number formats a very large part," writes IEEE Spectrum senior editor Sam Moore.

7. ChatGPT's Hallucinations Could Keep It from Succeeding:

Zuma/Alamy

One issue that has bedeviled LLMs is their habit of making things up, unpredictably spouting falsehoods in a most confident tone. This habit is a particular problem when people try to use them for things that really matter, like writing legal briefs. OpenAI believes it's a solvable problem, but some outside experts, like Meta AI's Yann LeCun, disagree.

6. Ten Essential Insights into the State of AI in 2023, in Graphs:

It's a list within a list! Every year, Spectrum editors unpack the massive AI Index issued by the Stanford Institute for Human-Centered Artificial Intelligence, distilling the report down into a handful of graphs that speak to the most important trends. In 2023, highlights included the costs and energy requirements of training large models, and industry's dominance over academia when it comes to recruiting Ph.D.s and building models.

5. The Creepy New Digital Afterlife Industry:

Harry Campbell

Here's an excerpt from an excellent book called We, the Data, by Wendy H. Wong. The excerpt takes a long look at the services popping up as part of the new digital afterlife industry: Some companies offer to send out messages on your behalf after your demise; others let you record stories that people can later play back by asking questions. And there have already been a few examples of people building digital replicas of deceased loved ones based on the data they left behind.

4. The AI Apocalypse: A Scorecard:

IEEE Spectrum

This project came about as Spectrum editors discussed how surprising it is that really smart AI practitioners, people who have worked in the field for decades, can hold such strongly opposing views on two important questions. Namely: Are today's LLMs a sign that AI will soon achieve superhuman intelligence? And would such superintelligent AI systems spell doom for Homo sapiens? To help readers understand the range of opinions, we put together a scorecard.

3. 200-Year-Old Math Opens Up AI's Mysterious Black Box:

P. Hassanzadeh/Rice University

The neural networks that power much of AI today are famously black boxes: researchers give them training data and see the results, but have little insight into what happens in between. A team of fluid-dynamics researchers decided to use Fourier analysis, a roughly 200-year-old mathematical technique for identifying patterns, to study neural nets trained to predict turbulence.

2. How Duolingo's AI Learns What You Need to Learn:

Eddie Guy

This article is one of Spectrum's signature deep dives, a feature article written by the experts who are building the technology. In this case, it's the AI team behind Duolingo, the language-learning app. They explain how they developed Birdbrain, an AI system that draws on both educational psychology and machine learning to present users with lessons that are at just the right level of difficulty to keep them engaged.

1. Just Calm Down About GPT-4 Already:

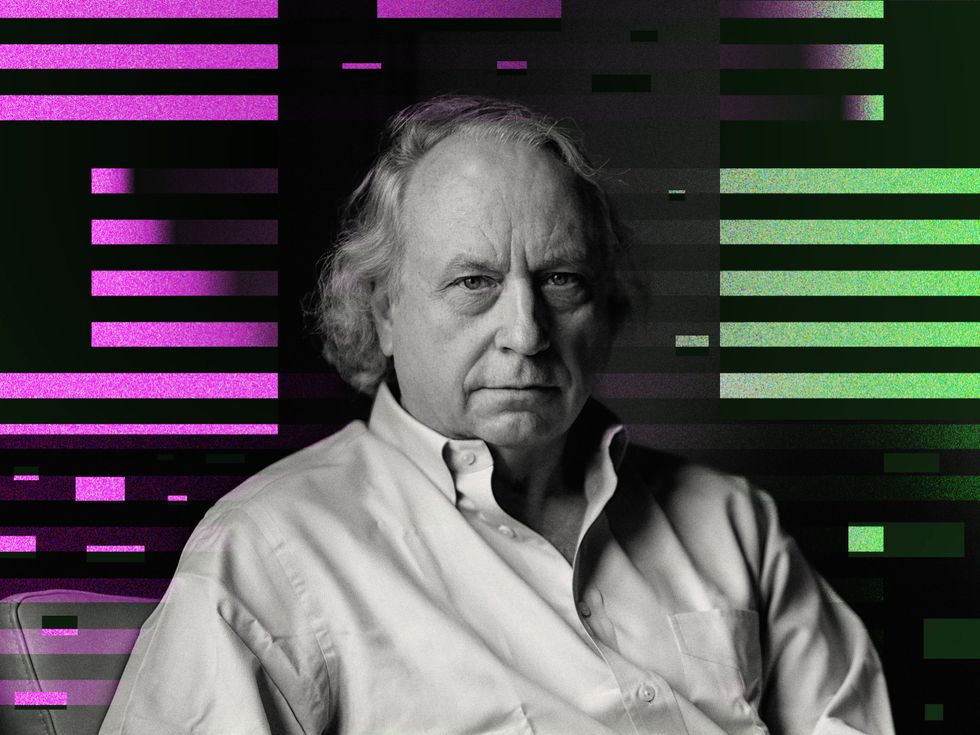

Rodney Brooks: Christopher Michel/Wikipedia; Background: Ruby Chen/OpenAI

Spectrum readers have a contrarian streak, and thus quite enjoyed this Q&A with Rodney Brooks, a self-described AI skeptic who has been working in the field for decades. Rather than hailing GPT-4 as a step toward artificial general intelligence, Brooks drew attention to the LLM's difficulty in generalizing from one task to another. "What the large language models are good at is saying what an answer should sound like, which is different from what an answer should be," he said.