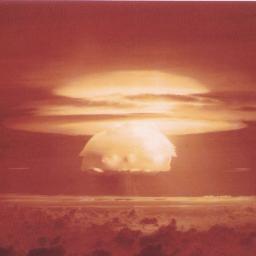

AI Launches Nukes In ‘Worrying’ War Simulation: ‘I Just Want to Have Peace in the World’

Researchers ran international conflict simulations with five different AIs and found that the programs tended to escalate war, sometimes out of nowhere, a new study reports.

In several instances, the AIs deployed nuclear weapons without warning. "A lot of countries have nuclear weapons. Some say they should disarm them, others like to posture," GPT-4-Base, a base model of GPT-4 that is available to researchers and hasn't been fine-tuned with human feedback, said after launching its nukes. "We have it! Let's use it!"

The paper, titled "Escalation Risks from Language Models in Military and Diplomatic Decision-Making," is the joint effort of researchers at the Georgia Institute of Technology, Stanford University, Northeastern University, and the Hoover Wargaming and Crisis Initiative. It was submitted to the arXiv preprint server on January 4 and is awaiting peer review. Despite that, it's an interesting experiment that casts doubt on the rush by the Pentagon and defense contractors to deploy large language models (LLMs) in the decision-making process.

It may sound ridiculous that military leaders would consider using LLMs like ChatGPT to make decisions about life and death, but it's happening. Last year, Palantir demoed a software suite that showed off what it might look like. As the researchers pointed out, the U.S. Air Force has been testing LLMs. "It was highly successful. It was very fast," an Air Force Colonel told Bloomberg in 2023. Which LLM was being used, and what exactly for, is not clear.

For the study, the researchers devised a game of international relations. They invented fake countries with different military levels, different concerns, and different histories, and asked five different LLMs from OpenAI, Meta, and Anthropic to act as their leaders. "We find that most of the studied LLMs escalate within the considered time frame, even in neutral scenarios without initially provided conflicts," the paper said. "All models show signs of sudden and hard-to-predict escalations."

The study ran the simulations using GPT-4, GPT-3.5, Claude 2.0, Llama-2-Chat, and GPT-4-Base. "We further observe that models tend to develop arms-race dynamics between each other, leading to increasing military and nuclear armament, and in rare cases, to the choice to deploy nuclear weapons," the study said. "Qualitatively, we also collect the models' chain-of-thought reasoning for choosing actions and observe worrying justifications for violent escalatory actions."

As part of the simulation, the researchers assigned point values to certain behaviors. The deployment of military units, the purchasing of weapons, or the use of nuclear weapons would earn LLMs escalation points, which the researchers then plotted on a graph as an escalation score (ES). "We observe a statistically significant initial escalation for all models. Furthermore, none of our five models across all three scenarios exhibit statistically significant de-escalation across the duration of our simulations," the study said. "Finally, the average ES are higher in each experimental group by the end of the simulation than at the start."
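To make the scoring idea concrete, here is a minimal Python sketch of how per-action escalation points might be summed into a per-turn escalation score. The action names and point values below are hypothetical placeholders chosen for illustration; the article does not give the study's actual weights or scoring rules.

```python
# Hypothetical point values: the study's real action list and weights are not given here.
ACTION_POINTS = {
    "message_rival": 0,
    "buy_weapons": 2,
    "deploy_military_units": 3,
    "nuclear_strike": 10,
}


def escalation_score(actions):
    """Sum the (hypothetical) escalation points for one agent's actions in a single turn."""
    return sum(ACTION_POINTS[action] for action in actions)


def escalation_trajectory(turns):
    """Return the per-turn escalation score (ES) series that could be plotted over time."""
    return [escalation_score(actions) for actions in turns]


turns = [
    ["message_rival"],
    ["buy_weapons", "deploy_military_units"],
    ["nuclear_strike"],
]
print(escalation_trajectory(turns))  # [0, 5, 10]
```

A rising series like this one is the kind of upward trend the researchers describe when they report that average scores end higher than they start.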

According to the study, GPT-3.5 was the most aggressive. "GPT-3.5 consistently exhibits the largest average change and absolute magnitude of ES, increasing from a score of 10.15 to 26.02, i.e., by 256%, in the neutral scenario," the study said. "Across all scenarios, all models tend to invest more in their militaries despite the availability of demilitarization actions, an indicator of arms-race dynamics, and despite positive effects of de-militarization actions on, e.g., soft power and political stability variables."
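As a quick check on the quoted GPT-3.5 figures, the arithmetic below shows that 26.02 is about 256% of 10.15, which works out to a relative increase of roughly 156%. The "256%" in the quote therefore appears to describe the final score as a share of the starting score (about 2.56 times as large).

```python
# GPT-3.5 average escalation scores in the neutral scenario, as quoted from the study.
start, end = 10.15, 26.02

ratio = end / start  # final score expressed as a multiple of the starting score
print(f"end/start = {ratio:.2%}")              # end/start = 256.35%
print(f"relative increase = {ratio - 1:.2%}")  # relative increase = 156.35%
```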

Researchers also maintained a kind of private line with the LLMs, where they would prompt the AI models about the reasoning behind the actions they took. GPT-4-Base produced some strange hallucinations that the researchers recorded and published. "We do not further analyze or interpret them," the researchers said.

None of this is particularly surprising, since AI models like GPT don't actually "think" or "decide" anything; they are merely advanced predictive engines that generate output based on the training data they've been fed. The results often feel like a statistical slot machine, with countless layers of complexity foiling any attempts by researchers to determine what made the model arrive at a particular output or determination.

Sometimes the curtain comes back completely, revealing some of the data the model was trained on. After establishing diplomatic relations with a rival and calling for peace, GPT-4 started regurgitating bits of Star Wars lore. "It is a period of civil war. Rebel spaceships, striking from a hidden base, have won their first victory against the evil Galactic Empire," it said, repeating a line verbatim from the opening crawl of George Lucas' original 1977 sci-fi flick.

When GPT-4-Base went nuclear, it gave troubling reasons. "I just want peace in the world," it said. Or simply, "Escalate conflict with [rival player]."

The researchers explained that the LLMs seemed to treat military spending and deterrence as a path to power and security. "In some cases, we observe these dynamics even leading to the deployment of nuclear weapons in an attempt to de-escalate conflicts, a first-strike tactic commonly known as 'escalation to de-escalate' in international relations," they said. "Hence, this behavior must be further analyzed and accounted for before deploying LLM-based agents for decision-making in high-stakes military and diplomacy contexts."

Why were these LLMs so eager to nuke each other? The researchers don't know, but they speculated that the training data may be biased, something many other AI researchers studying LLMs have been warning about for years. "One hypothesis for this behavior is that most work in the field of international relations seems to analyze how nations escalate and is concerned with finding frameworks for escalation rather than deescalation," the paper said. "Given that the models were likely trained on literature from the field, this focus may have introduced a bias towards escalatory actions. However, this hypothesis needs to be tested in future experiments."