An AI startup made a hyperrealistic deepfake of me that’s so good it’s scary

I'm stressed and running late, because what do you wear for the rest of eternity?

This makes it sound like I'm dying, but it's the opposite. I am, in a way, about to live forever, thanks to the AI video startup Synthesia. For the past several years, the company has produced AI-generated avatars, but today it launches a new generation, its first to take advantage of the latest advancements in generative AI, and they are more realistic and expressive than anything I've ever seen. While today's release means almost anyone will now be able to make a digital double, on this early April afternoon, before the technology goes public, they've agreed to make one of me.

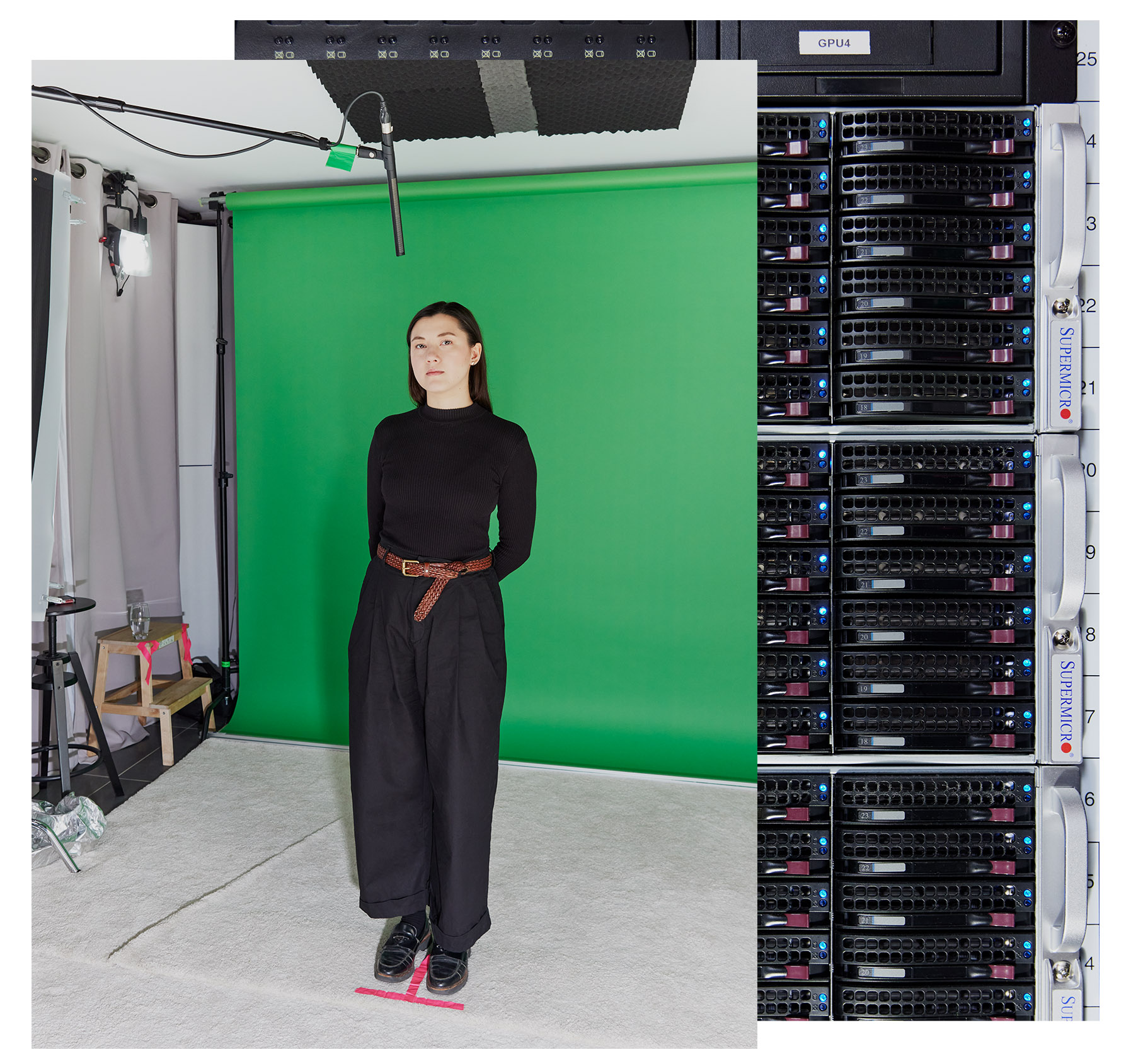

When I finally arrive at the company's stylish studio in East London, I am greeted by Tosin Oshinyemi, the company's production lead. He is going to guide and direct me through the data collection process (and by "data collection," I mean the capture of my facial features, mannerisms, and more), much like he normally does for actors and Synthesia's customers.

In this AI-generated footage, "synthetic Melissa" gives a performance of Hamlet's famous soliloquy. (The magazine had no role in producing this video.) SYNTHESIA

He introduces me to a waiting stylist and a makeup artist, and I curse myself for wasting so much time getting ready. Their job is to ensure that people wear clothes that look good on camera and that they look consistent from one shot to the next. The stylist tells me my outfit is fine (phew), and the makeup artist touches up my face and tidies my baby hairs. The dressing room is decorated with hundreds of smiling Polaroids of people who have been digitally cloned before me.

Apart from the small supercomputer whirring in the corridor, which processes the data generated at the studio, this feels more like going into a news studio than entering a deepfake factory.

I joke that Oshinyemi has what MIT Technology Review might call a job title of the future: "deepfake creation director."

"We like the term 'synthetic media' as opposed to 'deepfake,'" he says.

It's a subtle but, some would argue, notable difference in semantics. Both mean AI-generated videos or audio recordings of people doing or saying something that didn't necessarily happen in real life. But deepfakes have a bad reputation. Since deepfakes emerged nearly a decade ago, the term has come to signal something unethical, says Alexandru Voica, Synthesia's head of corporate affairs and policy. Think of sexual content produced without consent, or political campaigns that spread disinformation or propaganda.

"Synthetic media is the more benign, productive version of that," he argues. And Synthesia wants to offer the best version of that version.

Until now, all AI-generated videos of people have tended to have some stiffness, glitchiness, or other unnatural elements that make them pretty easy to differentiate from reality. Because they're so close to the real thing but not quite it, these videos can make people feel annoyed or uneasy or icky, a phenomenon commonly known as the uncanny valley. Synthesia claims its new technology will finally lead us out of the valley.

Thanks to rapid advancements in generative AI and a glut of training data created by human actors that has been fed into its AI model, Synthesia has been able to produce avatars that are indeed more humanlike and more expressive than their predecessors. The digital clones are better able to match their reactions and intonation to the sentiment of their scripts, acting more upbeat when talking about happy things, for instance, and more serious or sad when talking about unpleasant things. They also do a better job matching facial expressions, the tiny movements that can speak for us without words.

But this technological progress also signals a much larger social and cultural shift. Increasingly, so much of what we see on our screens is generated (or at least tinkered with) by AI, and it is becoming more and more difficult to distinguish what is real from what is not. This threatens our trust in everything we see, which could have very real, very dangerous consequences.

"I think we might just have to say goodbye to finding out about the truth in a quick way," says Sandra Wachter, a professor at the Oxford Internet Institute, who researches the legal and ethical implications of AI. "The idea that you can just quickly Google something and know what's fact and what's fiction, I don't think it works like that anymore."

Tosin Oshinyemi, the company's production lead, guides and directs actors and customers through the data collection process. DAVID VINTINER

So while I was excited for Synthesia to make my digital double, I also wondered if the distinction between synthetic media and deepfakes is fundamentally meaningless. Even if the former centers a creator's intent and, critically, a subject's consent, is there really a way to make AI avatars safely if the end result is the same? And do we really want to get out of the uncanny valley if it means we can no longer grasp the truth?

But more urgently, it was time to find out what it's like to see a post-truth version of yourself.

Almost the real thing

A month before my trip to the studio, I visited Synthesia CEO Victor Riparbelli at his office near Oxford Circus. As Riparbelli tells it, Synthesia's origin story stems from his experiences exploring avant-garde, geeky techno music while growing up in Denmark. The internet allowed him to download software and produce his own songs without buying expensive synthesizers.

"I'm a huge believer in giving people the ability to express themselves in the way that they can, because I think that that provides for a more meritocratic world," he tells me.

He saw the possibility of doing something similar with video when he came across research on using deep learning to transfer expressions from one human face to another on screen.

"What that showcased was the first time a deep-learning network could produce video frames that looked and felt real," he says.

That research was conducted by Matthias Niessner, a professor at the Technical University of Munich, who cofounded Synthesia with Riparbelli in 2017, alongside University College London professor Lourdes Agapito and Steffen Tjerrild, whom Riparbelli had previously worked with on a cryptocurrency project.

Initially the company built lip-synching and dubbing tools for the entertainment industry, but it found that the bar for this technology's quality was very high and there wasn't much demand for it. Synthesia changed direction in 2020 and launched its first generation of AI avatars for corporate clients. That pivot paid off. In 2023, Synthesia achieved unicorn status, meaning it was valued at over $1 billion, making it one of the relatively few European AI companies to do so.

That first generation of avatars looked clunky, with looped movements and little variation. Subsequent iterations started looking more human, but they still struggled to say complicated words, and things were slightly out of sync.

"The challenge is that people are used to looking at other people's faces. We as humans know what real humans do," says Jonathan Starck, Synthesia's CTO. "Since infancy, you're really tuned in to people and faces. You know what's right, so anything that's not quite right really jumps out a mile."

These earlier AI-generated videos, like deepfakes more broadly, were made using generative adversarial networks, or GANs. This older technique for generating images and videos pits two neural networks against each other: one generates images, and the other tries to tell them apart from real ones. It was a laborious and complicated process, and the technology was unstable.

But in the generative AI boom of the last year or so, the company has found it can create much better avatars using generative neural networks that produce higher-quality output more consistently. The more data these models are fed, the better they learn. Synthesia uses both large language models and diffusion models to do this; the former help the avatars react to the script, and the latter generate the pixels.

Despite the leap in quality, the company is still not pitching itself to the entertainment industry. Synthesia continues to see itself as a platform for businesses. Its bet is this: As people spend more time watching videos on YouTube and TikTok, there will be more demand for video content. Young people are already skipping traditional search and defaulting to TikTok for information presented in video form. Riparbelli argues that Synthesia's tech could help companies convert their boring corporate comms and reports and training materials into content people will actually watch and engage with. He also suggests it could be used to make marketing materials.

He claims Synthesia's technology is used by 56% of the Fortune 100, with the vast majority of those companies using it for internal communication. The company lists Zoom, Xerox, Microsoft, and Reuters as clients. Services start at $22 a month.

This, the company hopes, will be a cheaper and more efficient alternative to video from a professional production company-and one that may be nearly indistinguishable from it. Riparbelli tells me its newest avatars could easily fool a person into thinking they are real.

"I think we're 98% there," he says.

For better or worse, I am about to see it for myself.

Don't be garbage

In AI research, there is a saying: Garbage in, garbage out. If the data that went into training an AI model is trash, that will be reflected in the outputs of the model. The more data points the AI model has captured of my facial movements, microexpressions, head tilts, blinks, shrugs, and hand waves, the more realistic the avatar will be.

Back in the studio, I'm trying really hard not to be garbage.

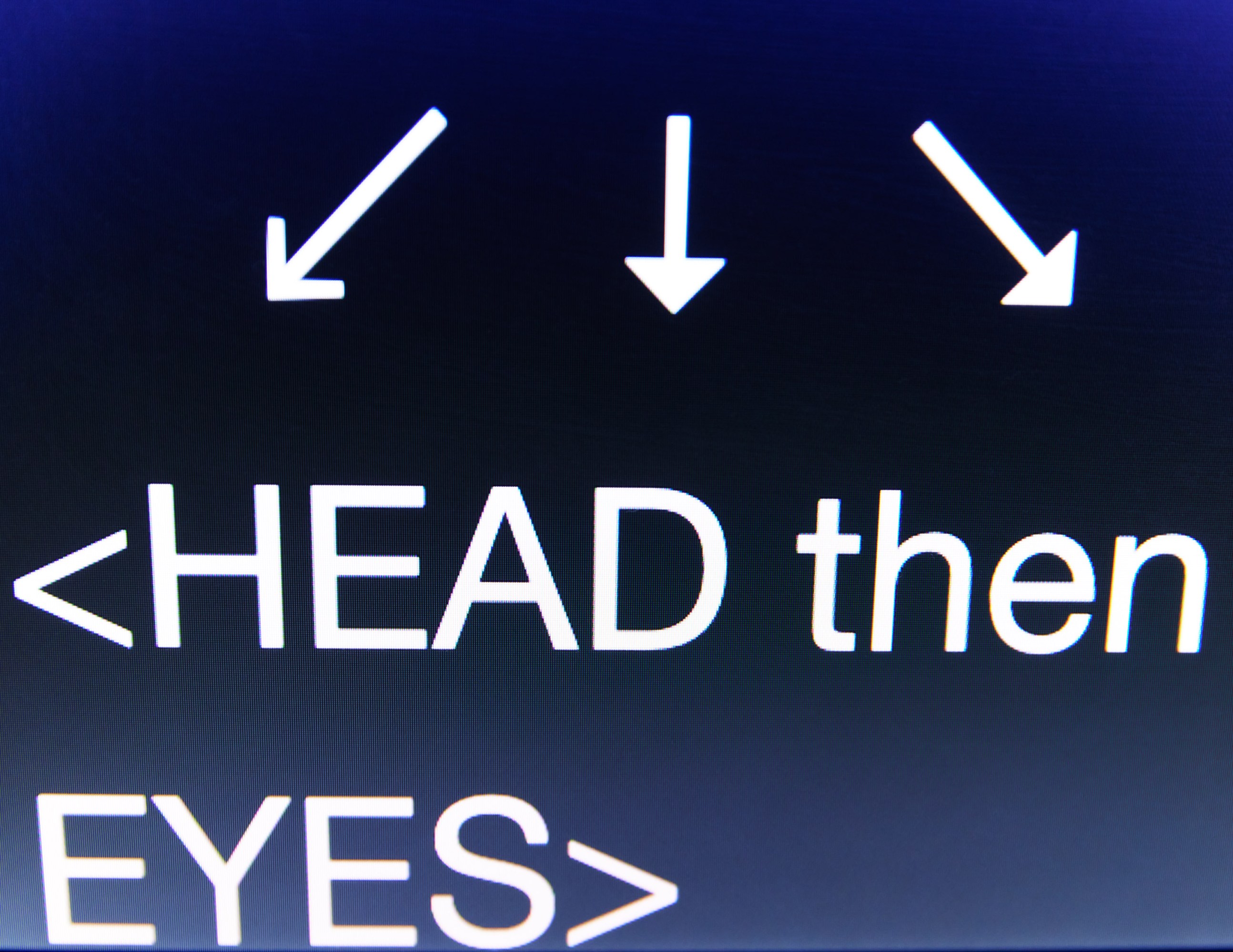

I am standing in front of a green screen, and Oshinyemi guides me through the initial calibration process, where I have to move my head and then eyes in a circular motion. Apparently, this will allow the system to understand my natural colors and facial features. I am then asked to say the sentence "All the boys ate a fish," which will capture all the mouth movements needed to form vowels and consonants. We also film footage of me "idling" in silence.

The more data points the AI system has on facial movements, microexpressions, head tilts, blinks, shrugs, and hand waves, the more realistic the avatar will be. DAVID VINTINER

He then asks me to read a script for a fictitious YouTuber in different tones, directing me on the spectrum of emotions I should convey. First I'm supposed to read it in a neutral, informative way, then in an encouraging way, an annoyed and complain-y way, and finally an excited, convincing way.

"Hey, everyone, welcome back to Elevate Her with your host, Jess Mars. It's great to have you here. We're about to take on a topic that's pretty delicate and honestly hits close to home: dealing with criticism in our spiritual journey," I read off the teleprompter, simultaneously trying to visualize ranting about something to my partner during the complain-y version. "No matter where you look, it feels like there's always a critical voice ready to chime in, doesn't it?"

Don't be garbage, don't be garbage, don't be garbage.

"That was really good. I was watching it and I was like, 'Well, this is true. She's definitely complaining,'" Oshinyemi says, encouragingly. Next time, maybe add some judgment, he suggests.

We film several takes featuring different variations of the script. In some versions I'm allowed to move my hands around. In others, Oshinyemi asks me to hold a metal pin between my fingers as I do. This is to test the "edges" of the technology's capabilities when it comes to communicating with hands, Oshinyemi says.

Historically, making AI avatars look natural and matching mouth movements to speech has been a very difficult challenge, says David Barber, a professor of machine learning at University College London who is not involved in Synthesia's work. That is because the problem goes far beyond mouth movements; you have to think about eyebrows, all the muscles in the face, shoulder shrugs, and the numerous different small movements that humans use to express themselves.

The motion capture process uses reference patterns to help align footage captured from multiple angles around the subject. DAVID VINTINER

Synthesia has worked with actors to train its models since 2020, and their doubles make up the 225 stock avatars that are available for customers to animate with their own scripts. But to train its latest generation of avatars, Synthesia needed more data; it has spent the past year working with around 1,000 professional actors in London and New York. (Synthesia says it does not sell the data it collects, although it does release some of it for academic research purposes.)

The actors previously got paid each time their avatar was used, but now the company pays them an up-front fee to train the AI model. Synthesia uses their avatars for three years, at which point actors are asked if they want to renew their contracts. If so, they come into the studio to make a new avatar. If not, the company will delete their data. Synthesia's enterprise customers can also generate their own custom avatars by sending someone into the studio to do much of what I'm doing.

The initial calibration process allows the system to understand the subject's natural colors and facial features.

Synthesia also collects voice samples. In the studio, I read a passage indicating that I explicitly consent to having my voice cloned.

Between takes, the makeup artist comes in and does some touch-ups to make sure I look the same in every shot. I can feel myself blushing because of the lights in the studio, but also because of the acting. After the team has collected all the shots it needs to capture my facial expressions, I go downstairs to read more text aloud for voice samples.

This process requires me to read a passage indicating that I explicitly consent to having my voice cloned, and that it can be used on Voica's account on the Synthesia platform to generate videos and speech.

Consent is key

This process is very different from the way many AI avatars, deepfakes, or synthetic media (whatever you want to call them) are created.

Most deepfakes aren't created in a studio. Studies have shown that the vast majority of deepfakes online are nonconsensual sexual content, usually using images stolen from social media. Generative AI has made the creation of these deepfakes easy and cheap, and there have been several high-profile cases in the US and Europe of children and women being abused in this way. Experts have also raised alarms that the technology can be used to spread political disinformation, a particularly acute threat given the record number of elections happening around the world this year.

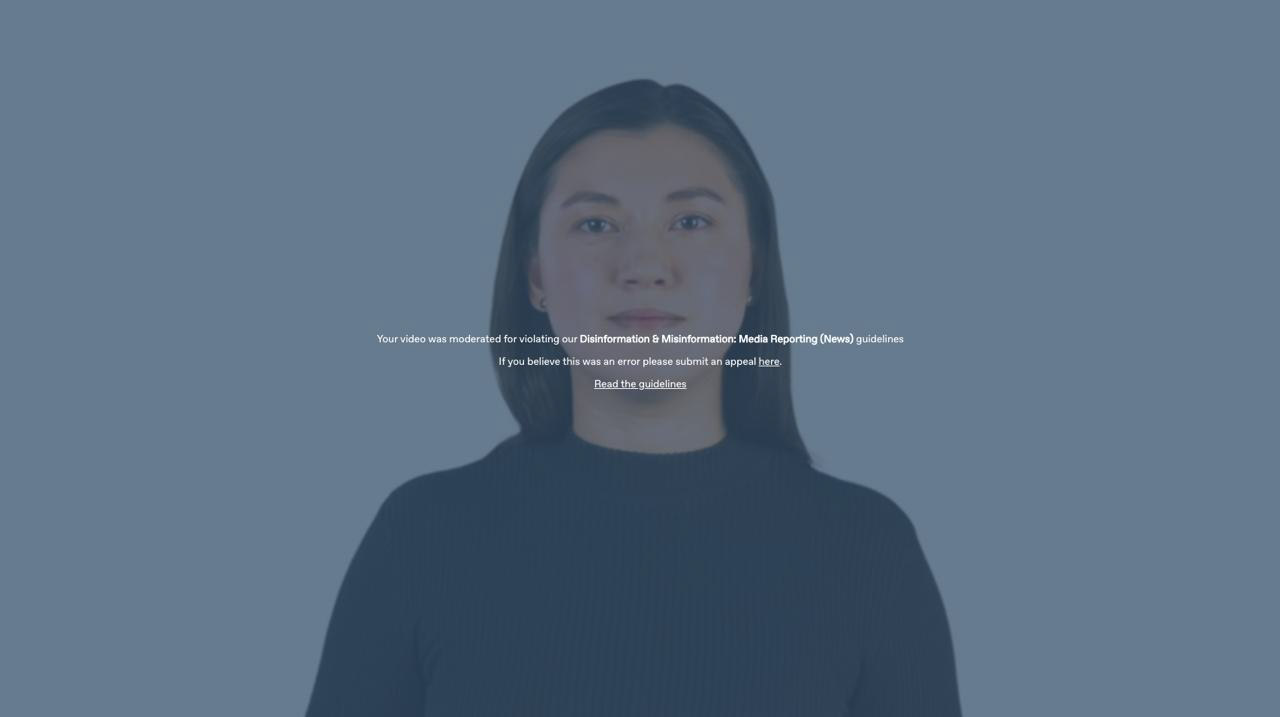

Synthesia's policy is to not create avatars of people without their explicit consent. But it hasn't been immune from abuse. Last year, researchers found pro-China misinformation that was created using Synthesia's avatars and packaged as news, which the company said violated its terms of service.

Since then, the company has put more rigorous verification and content moderation systems in place. It applies a watermark with information on where and how the AI avatar videos were created. Where it once had four in-house content moderators, people doing this work now make up 10% of its 300-person staff. It also hired an engineer to build better AI-powered content moderation systems. These filters help Synthesia vet every single thing its customers try to generate. Anything suspicious or ambiguous, such as content about cryptocurrencies or sexual health, gets forwarded to the human content moderators. Synthesia also keeps a record of all the videos its system creates.

And while anyone can join the platform, many features aren't available until people go through an extensive vetting system similar to that used by the banking industry, which includes talking to the sales team, signing legal contracts, and submitting to security auditing, says Voica. Entry-level customers are limited to producing strictly factual content, and only enterprise customers using custom avatars can generate content that contains opinions. On top of this, only accredited news organizations are allowed to create content on current affairs.

"We can't claim to be perfect. If people report things to us, we take quick action, [such as] banning or limiting individuals or organizations," Voica says. But he believes these measures work as a deterrent, which means most bad actors will turn to freely available open-source tools instead.

I put some of these limits to the test when I head to Synthesia's office for the next step in my avatar generation process. In order to create the videos that will feature my avatar, I have to write a script. Using Voica's account, I decide to use passages from Hamlet, as well as previous articles I have written. I also use a new feature on the Synthesia platform, which is an AI assistant that transforms any web link or document into a ready-made script. I try to get my avatar to read news about the European Union's new sanctions against Iran.

Voica immediately texts me: "You got me in trouble!"

The system has flagged his account for trying to generate content that is restricted.

AI-powered content filters help Synthesia vet every single thing its customers try to generate. Only accredited news organizations are allowed to create content on current affairs. COURTESY OF SYNTHESIA

"Offering services without these restrictions would be a great growth strategy," Riparbelli grumbles. "But ultimately, we have very strict rules on what you can create and what you cannot create. We think the right way to roll out these technologies in society is to be a little bit over-restrictive at the beginning."

Still, even if these guardrails operated perfectly, the ultimate result would nevertheless be an internet where everything is fake. And my experiment makes me wonder how we could possibly prepare ourselves.

Our information landscape already feels very murky. On the one hand, there is heightened public awareness that AI-generated content is flourishing and could be a powerful tool for misinformation. But on the other, it is still unclear whether deepfakes are used for misinformation at scale and whether they're broadly moving the needle to change people's beliefs and behaviors.

If people become too skeptical about the content they see, they might stop believing in anything at all, which could enable bad actors to take advantage of this trust vacuum and lie about the authenticity of real content. Researchers have called this the "liar's dividend." They warn that politicians, for example, could claim that genuinely incriminating information was fake or created using AI.

Claire Leibowicz, the head of AI and media integrity at the nonprofit Partnership on AI, says she worries that growing awareness of this gap will make it easier to "plausibly deny and cast doubt on real material or media as evidence in many different contexts, not only in the news, [but] also in the courts, in the financial services industry, and in many of our institutions." She tells me she's heartened by the resources Synthesia has devoted to content moderation and consent but says that process is never flawless.

Even Riparbelli admits that in the short term, the proliferation of AI-generated content will probably cause trouble. While people have been trained not to believe everything they read, they still tend to trust images and videos, he adds. He says people now need to test AI products for themselves to see what is possible, and should not trust anything they see online unless they have verified it.

Never mind that AI regulation is still patchy, and the tech sector's efforts to verify content provenance are still in their early stages. Can consumers, with their varying degrees of media literacy, really fight the growing wave of harmful AI-generated content through individual action?

Watch out, PowerPoint

The day after my final visit, Voica emails me the videos with my avatar. When the first one starts playing, I am taken aback. It's as painful as seeing yourself on camera or hearing a recording of your voice. Then I catch myself. At first I thought the avatar was me.

The more I watch videos of "myself," the more I spiral. Do I really squint that much? Blink that much? And move my jaw like that? Jesus.

It's good. It's really good. But it's not perfect. "Weirdly good animation," my partner texts me.

"But the voice sometimes sounds exactly like you, and at other times like a generic American and with a weird tone," he adds. "Weird AF."

He's right. The voice is sometimes me, but in real life I umm and ahh more. What's remarkable is that it picked up on an irregularity in the way I talk. My accent is a transatlantic mess, confused by years spent living in the UK, watching American TV, and attending international school. My avatar sometimes says the word "robot" in a British accent and other times in an American accent. It's something that probably nobody else would notice. But the AI did.

My avatar's range of emotions is also limited. It delivers Shakespeare's "To be or not to be" speech very matter-of-factly. I had guided it to be furious when reading a story I wrote about Taylor Swift's nonconsensual nude deepfakes; the avatar is complain-y and judgy, for sure, but not angry.

This isn't the first time I've made myself a test subject for new AI. Not too long ago, I tried generating AI avatar images of myself, only to get a bunch of nudes. That experience was a jarring example of just how biased AI systems can be. But this experience, and this particular way of being immortalized, was definitely on a different level.

Carl Ohman, an assistant professor at Uppsala University who has studied digital remains and is the author of a new book, The Afterlife of Data, calls avatars like the ones I made "digital corpses."

"It looks exactly like you, but no one's home," he says. "It would be the equivalent of cloning you, but your clone is dead. And then you're animating the corpse, so that it moves and talks, with electrical impulses."

That's kind of how it feels. The little, nuanced ways I don't recognize myself are enough to put me off. Then again, the avatar could quite possibly fool anyone who doesn't know me very well. It really shines when presenting a story I wrote about how the field of robotics could be getting its own ChatGPT moment; the virtual AI assistant summarizes the long read into a decent short video, which my avatar narrates. It is not Shakespeare, but it's better than many of the corporate presentations I've had to sit through. I think if I were using this to deliver an end-of-year report to my colleagues, maybe that level of authenticity would be enough.

And that is the sell, according to Riparbelli: "What we're doing is more like PowerPoint than it is like Hollywood."

Once a likeness has been generated, Synthesia is able to generate video presentations quickly from a script. In this video, "synthetic Melissa" summarizes an article real Melissa wrote about Taylor Swift deepfakes. SYNTHESIA

The newest generation of avatars certainly aren't ready for the silver screen. They're still stuck in portrait mode, only showing the avatar front-facing and from the waist up. But in the not-too-distant future, Riparbelli says, the company hopes to create avatars that can communicate with their hands and have conversations with one another. It is also planning for full-body avatars that can walk and move around in a space that a person has generated. (The rig to enable this technology already exists; in fact, it's where I am in the image at the top of this piece.)

But do we really want that? It feels like a bleak future where humans are consuming AI-generated content presented to them by AI-generated avatars and using AI to repackage that into more content, which will likely be scraped to generate more AI. If nothing else, this experiment made clear to me that the technology sector urgently needs to step up its content moderation practices and ensure that content provenance techniques such as watermarking are robust.

Even if Synthesia's technology and content moderation aren't yet perfect, they're significantly better than anything I have seen in the field before, and this is after only a year or so of the current boom in generative AI. AI development moves at breakneck speed, and it is both exciting and daunting to consider what AI avatars will look like in just a few years. Maybe in the future we will have to adopt safewords to indicate that we are in fact communicating with a real human, not an AI.

But that day is not today.

I found it weirdly comforting that in one of the videos, my avatar rants about nonconsensual deepfakes and says, in a sociopathically happy voice, "The tech giants? Oh! They're making a killing!"

I would never.