ChatGPT's Advanced Voice Mode Is Here (If You're Willing to Pay for It)

Back in May, OpenAI announced the launch of advanced voice mode for ChatGPT. The company pitched the new function as the then-extant Voice Mode on steroids. Not only would you be able to have a discussion with ChatGPT, but the conversations were going to be more natural: You would be able to interrupt the bot when you wanted to change topics, and ChatGPT would understand the speed and tone of your voice, and respond in kind with its own emotion.

If that sounds a bit like the AI voice assistant in the 2013 film Her, that's not by accident. In fact, OpenAI demoed the product with a voice that sounded a bit too similar to that of the actress Scarlett Johansson, who voices that fictional machine mind. Johansson got litigious, and the company later removed the voice entirely. No matter: There are nine other voices for you to try.

While OpenAI started testing advanced voice mode with a small group of testers back in July, the feature is rolling out now to all paid users. If you have an eligible account, you should be able to try it out on your end today.

How to use ChatGPT's advanced voice mode

At this time, only paid ChatGPT subscribers can access advanced voice mode. That means you need either a ChatGPT Plus or ChatGPT Team membership to see the feature. Free users can still use the standard voice mode, which appears in the app as a pair of headphones.

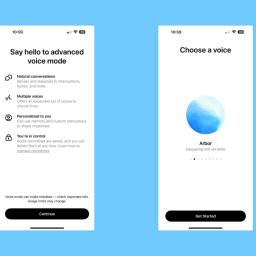

Advanced mode shows up as a waveform icon, visible only to Plus and Team subscribers. To access the feature, open a new chat and tap this icon. The first time you use advanced voice mode, you'll need to choose a voice from a pool of nine options. I've included OpenAI's descriptions for each:

Arbor: Easygoing and versatile

Breeze: Animated and earnest

Cove: Composed and direct

Ember: Confident and optimistic

Juniper: Open and upbeat

Maple: Cheerful and candid

Sol: Savvy and relaxed

Spruce: Calm and affirming

Vale: Bright and inquisitive

I ended up going with Arbor, which reminds me a lot of the Headspace guy. From here, advanced voice mode works very much like the standard voice mode. You say something to ChatGPT, and it responds in kind.

How ChatGPT advanced voice mode actually performs

In my brief time with the new mode, I haven't noticed too many advancements over the previous voice mode. The new voices are new, of course, and I suppose a bit more "natural" than past voices, but I don't think the conversation feels any more lifelike. The ability to interrupt your digital partner does sell the illusion a bit, but it is sensitive: I picked up my iPhone while ChatGPT was speaking, and it stopped instantly. This is something I noticed in OpenAI's original demo, too. I think OpenAI needs to work on the bot's ability to tell when a user wants to interrupt from when a random external sound occurs.

(OpenAI recommends you use headphones to avoid unwanted interruptions, and, if you're using an iPhone, to enable Voice Isolation mode. I was using Voice Isolation mode without headphones, so take that as you will.)

While it seems OpenAI has dialed back the whimsical and flirtatious side of ChatGPT, you can still get the bot to laugh, if you ask it to. The laugh is impressive for a fake voice, I guess, but it feels unnatural, like it's pulling from another recording to "laugh." Ask it to make any other similar sounds, however, like crying or screaming, and it refuses.

I tried to get my voice mode to listen to a song and identify it, which it said it couldn't do. The bot specifically asked me to share the lyrics alone, which I did, and it suggested a song based on the vibes of those lyrics, not on the actual lyrics themselves. As such, its guess was wildly off, but it doesn't seem built for this type of task yet, so I'll give it a pass.

Can ChatGPT talk to itself?

I had to pit two voice modes against each other. The first time I tried it, they kept interrupting each other in a perfectly socially awkward exchange, until one of them glitched out and ended up repeating the message it had spoken to me earlier about sharing lyrics to figure out the song. The other one then said something along the lines of, "Absolutely, share the lyrics with me, and I'll help you figure it out." The first replied, "Sure: Share the lyrics, and I'll do my best to identify the song." This went back and forth for five minutes before I killed the conversation.

Once I set up the chatbots with a clear chat, they went back and forth forever while saying almost nothing of interest. They talked about augmented reality, cooking, and morning routines with the usual enthusiasm and vagueness that chatbots are known for. What was weird, however, was when one of the bots finished talking about how, if it could cook, it would like to make lasagna; it asked the other chatbot about any dishes it liked to cook or was excited to try. The other bot responded: "User enjoys having coffee and catching up on the news in the morning."

That was something I told ChatGPT during a past test, when it asked me about my morning routine. It's evidence OpenAI's memory feature is working, but the execution was, um, bizarre. Why did it respond to a question about favorite recipes like that? Did I short-circuit the bot? Did it figure out it was chatting with itself, and decide to warn the other bot what was going on? I don't really like the implications here.

How advanced voice mode handles user privacy

When you use advanced voice mode, OpenAI saves your recordings, including recordings of your side of the conversation. When you delete a chat, OpenAI says it will delete your audio recordings within 30 days, unless the company decides it has to keep them for security or legal reasons. OpenAI will also keep the recording after you delete a chat if you previously shared audio recordings and this audio clip was disassociated from your account.

To make sure you aren't letting OpenAI train its models with your voice recordings and chat transcripts, go to ChatGPT settings, choose Data Controls, then disable Improve the model for everyone and Improve voice for everyone.