Claude AI Can Now End 'Harmful' Conversations

Chatbots, by their nature, are prediction machines. When you get a response from something like Claude AI, it might seem like the bot is engaging in natural conversation. However, at its core, all the bot is really doing is guessing what the next word in the sequence should be.
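If you want a feel for what "guessing the next word" means, here is a deliberately tiny sketch in Python. It is not how Claude works: real models run neural networks over tokens, not word counts over one sentence. The function names `train_bigrams` and `predict_next`, and the sample sentence, are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count which word follows which in the training text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the word seen most often after `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical training text; a real model learns from vastly more data.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigrams(corpus)

print(predict_next(model, "the"))  # "cat" follows "the" twice, "mat" once
```

The toy version only looks at one previous word. Roughly speaking, a large language model makes the same kind of guess, but with the whole conversation as context.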

Despite this core functionality, AI companies are exploring how the bots themselves respond to human interactions, especially when the humans are engaging negatively or in bad faith. Anthropic, the company behind Claude, is now giving the bot a way to walk away from those exchanges.

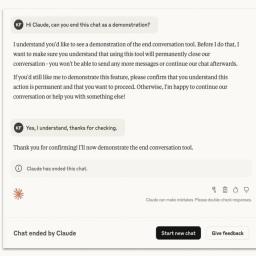

Claude can end harmful conversations

On Friday, the company announced that Claude Opus 4 and 4.1 can now end chatbot conversations when the bot detects "extreme cases of persistently harmful or abusive user interactions." According to Anthropic, the company noticed that Opus 4 already has a "strong preference" against responding to requests for harmful tasks, as well as "a pattern of apparent distress" when interacting with the users who are issuing these prompts. When Anthropic tested the ability for Opus to end conversations it deemed to be harmful, the model had a tendency to do so.

Anthropic notes that persistence is the key here: Claude did not necessarily have an issue if a user backed off their requests following a refusal, but if the user continued to push the subject, that's when Claude would struggle. As such, Claude will only end a chat as a "last resort," when the bot has tried to get the user to stop multiple times. Users can also ask Claude to end the chat themselves, but even then, the bot will try to dissuade them. And the bot will not end the conversation at all if it detects the user is "at imminent risk of harming themselves or others."

To be fair to the bot, it seems the topics Claude takes issue with really are harmful. Anthropic says examples include "sexual content involving minors" or "information that would enable large-scale violence or acts of terror." I'd end the chat immediately, too, if someone were messaging me requests like that.

And to be clear, ending a Claude chat doesn't end your ability to use Claude. While the bot might make it sound dire, all Claude is really doing is ending the current session. You can start a new chat at any time, or edit a previous message to start a new branch of the conversation. It's pretty low stakes.

Does this mean Claude has feelings?

I highly doubt it. Large language models are not conscious; they're a product of their training. Likely, the model was trained to avoid responding to extreme and harmful requests, and when repeatedly presented with these requests, it predicts words that relate to moving on from the conversation. It's not like Claude discovered the ability to end conversations on its own. It was only when Anthropic built the capability that the model started using it.

Instead, I see this as a fail-safe, and I think it's good for companies like Anthropic to build those into their systems to prevent abuse. After all, Anthropic's M.O. is ethical AI, so this tracks for the company. There's no reason any LLM should oblige these types of requests, and if the user can't take a hint, maybe it's best to shut down the conversation.