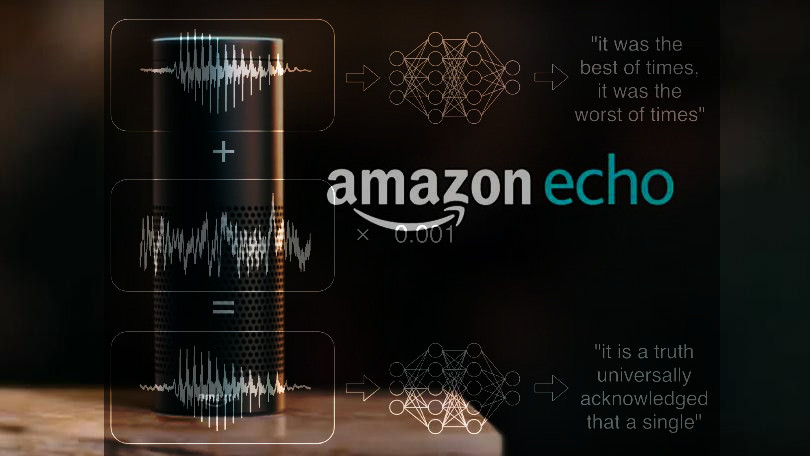

Adversarial examples: attack can imperceptibly alter any sound (or silence), embedding speech that only voice-assistants will hear

by Cory Doctorow (#3D1ZD)

Adversarial examples have torn into the robustness of machine-vision systems: it turns out that changing even a single well-placed pixel can confound otherwise reliable classifiers, and with the right tricks they can be made to reliably misclassify one thing as another or fail to notice an object altogether. But even as vision systems were falling to adversarial examples, audio systems remained stubbornly hard to fool, until now.