Social Media Is Broken, But You Should Still Report Hate

After only a year of the #MeToo movement going viral, I was already jaded from bad news and men still not "getting it." By the time the Brett Kavanaugh hearings came up, I assumed everyone was feeling just as exhausted, until one of my friends told me she had channelled her rage into social media vigilantism.

My friend had successfully reported hateful content online, including a misogynistic men's rights group on Facebook. In less than a day, Facebook had either taken down the groups she reported, or taken down specific posts on the pages.

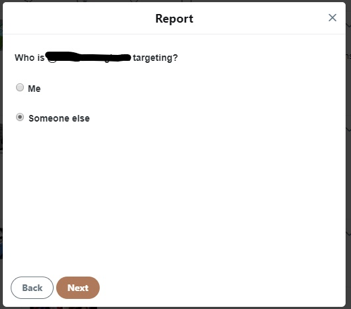

Twitter's reporting process. Images: Twitter screengrabs

After flagging a Day of the Rope tweet, Twitter gave the option to add four additional tweets from the offending account. When a post is offensive but not harmful, it's often difficult to find more explicitly troubling content. This time, I found five immediately.

Twitter requires the user violating its code of conduct to delete the offending tweet before allowing them to tweet again, rather than just taking it down. In an October 2018 blog post, Twitter explained, "Once we've required a Tweet to be deleted, we will display a notice stating that the Tweet is unavailable because it violated the Twitter Rules."

When I checked back two weeks later, the Twitter account was still up, but the tweets I reported were no longer available. It was a small action, to be sure, but because I reported something terrible, others didn't have to see it come up in their feeds.

Users who report content do the important job of "protect[ing] other bystanders from potentially experiencing harm," said Kumar.

It's not up to users to fix social media's trouble with hateful content, and for the sake of your sanity, it's not recommended to go out of your way to look for it.

After all, moderation is a full-time job. Additionally, because hateful content is so pervasive, increased bystander reporting is unlikely to shift the onus of moderation from platforms to their users. Instead, Lo said, it helps "social media companies identify where their systems are failing" in spotting hate groups.

Plus, it is one of the few avenues available, now that going through the courts is harder than ever. Richard Warman, an Ottawa human rights lawyer and board member of the Canadian Anti-Hate Network, was an early practitioner of reporting content that violated the law. Prior to 2013, section 13 of the Canadian Human Rights Act essentially prohibited people from using telephones and the internet to spread hate propaganda against individuals or groups.

Warman filed 16 human rights complaints against hate groups through section 13. All 16 cases were successful.

In 2013, Canada's minority Conservative government repealed section 13 under the argument that it was "an infringement on freedom of expression." Today, the only way to prosecute hate speech in Canada is through the Criminal Code. The code makes advocating genocide and public incitement of hatred towards "identifiable groups" illegal on paper. Unfortunately, Warman says it is far more difficult to file complaints through the Criminal Code due to several factors, such as the lack of dedicated police hate crimes units.

Since the repeal of section 13, Warman says the lack of human rights legislation has allowed the internet to become a "sewer." "It permits people to open the tops of their skulls and just let the demons out in terms of hate propaganda," he said.

In the United States, prosecuting hate speech is nearly impossible. The Supreme Court has reiterated that the First Amendment protects hate speech, most recently in 2017 in the case Matal v. Tam. Additionally, hate crime statutes vary across states.

The terrifying repercussions of online hate have become undeniably clear, from the van attack in Toronto linked to 4chan to the propaganda on Facebook that helped lead to the Rohingya genocide. The discussion over content moderation has become about preventing openly harmful speech, not defending free speech.

So how can we combat such a mess? Even if reporting doesn't result in platforms taking down content, Warman points out it creates a historical record that can help keep companies accountable should something more sinister happen as a result of the posts.

Kumar offered further reassurance. "For most of their existence, social media platforms have maintained a convenient 'hands-off approach' to content moderation: out of sight, out of mind," she said. "We saw in 2018 that there are greater calls for consultations between social media industry leaders, governments, policymakers, and internet researchers."

In 2018, then-Canadian justice minister Jody Wilson-Raybould (now Minister of Veterans Affairs) hinted that the Liberal government may revisit section 13. Twitter addressed promoting "healthy conversation." Mark Zuckerberg promised Facebook would be more proactive about identifying harmful content and about working with governments on regulations. In 2019, Facebook says it will even offer appeals for content its moderators have deemed not in violation of its policies.

You don't have to be a dedicated internet vigilante to do your part. Go report that awful content you come across instead of just scrolling past. Or if you see someone you know on a personal level sharing hate propaganda, Warman points out that you can always take the old-school method of standing up for people. "Just call them out and say, 'Stop being an asshole.'"
