Senators Introduce Bill That Would Ban Websites From Using Manipulative Consent Forms

Platforms are very good at encouraging users to sign away the rights to their data. However, a new bill introduced to the US Senate might make certain strategies illegal.

The Deceptive Experiences To Online Users Reduction (DETOUR) Act, introduced by US Senators Mark R. Warner (D-VA) and Deb Fischer (R-NE), would make it illegal for companies to use certain tactics to manipulate users into giving away their data. Violations would be punishable by the Federal Trade Commission (FTC).

Per the bill, companies would be banned from manipulating adults into signing away their data, or manipulating children into staying on a platform compulsively. The bill also requires platforms to ensure informed consent from users before green-lighting academic studies.

The legislation follows in the footsteps of the European Union's General Data Protection Regulation, which mandates that companies get informed consent from users before collecting their data. California's prospective data privacy law, if passed, would also require companies to be more transparent about the types of information they collect from users. But if passed, the DETOUR Act would be federal law, meaning tech companies may have to make widespread changes to their platforms in order to comply.

The DETOUR Act would make it illegal to "design, modify, or manipulate a user interface" in order to obscure, subvert, or impair a user's ability to decide how their data is used. The interface refers to the "style, layout, and text" of a privacy policy. How strict a platform's default privacy settings are would also be subject to regulation under the DETOUR Act.

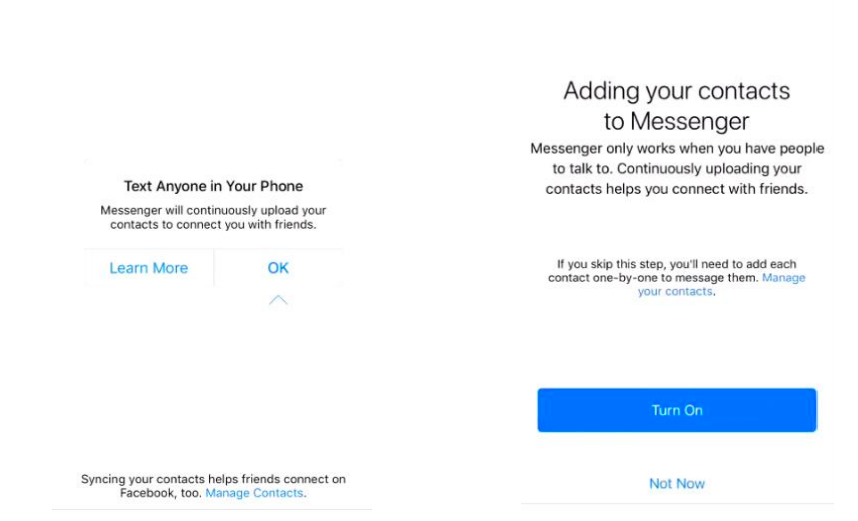

In a white paper published in 2017, Sen. Warner cited an earlier version of Facebook's Messenger app as an example of a manipulative interface. That version included bolded text and an arrow pointing to the "OK" button, which would give Facebook access to your contacts. (The current version also includes bolded text to give Facebook data access.)

Image: Warner.senate.gov

The DETOUR Act would also ban features that encourage "compulsive usage" for children under 13 years old. This would directly target platforms like YouTube, which has auto-play for both its regular site and for its child-specific YouTube Kids app. A representative for Common Sense Media told Motherboard in a phone call that the organization provided feedback and input to the authors of the bill.

The law would also apply to "behavioral or psychological experiments or studies," such as the ones used by Cambridge Analytica in order to sort users by personality type. Per the bill, any such studies have to get informed consent first, and experimenters would need to make routine disclosures to participants and to the public every 90 days.

If enacted, the DETOUR Act would require tech companies to make their own Independent Review Boards, which would be responsible for making sure they comply with the law. The act would also give the FTC one year to make infrastructure to review tech companies and enforce violations of the law.

Some major tech companies have data use policies that require informed consent for all research. Historically, however, those policies have not always been enforced. For instance, Facebook's Data Use Policy requires informed consent. But experts have claimed that human research subjects found on Facebook don't always have a full grasp of research risks, nor are they given a penalty-free way to opt out or a chance to ask questions. Those are all crucial aspects of informed consent.

For instance, a TechCrunch investigation revealed that Facebook's "Project Atlas" used a research app to give the company access to all the data on users' phones. In exchange, users would receive $20 per month. The project raised questions of whether Facebook got informed consent from participants, especially minors. (Minors were prompted to get their parents' permission before downloading the research app, but teens could easily lie to get past the prompt.)

The DETOUR Act would not regulate every way that major platforms try to manipulate users into giving away their data.

For instance, consider how notoriously unreadable most privacy policies are. As many scholars have pointed out, privacy policies would take too much time to read for every site you visit, and they're often written in jargon-heavy legal speak. In other words, given the way privacy policies are written right now, it's basically impossible to give real informed consent, meaning consent based on a true understanding of the risks involved with giving away your data.

The DETOUR Act wouldn't single-handedly solve the lack of informed consent on major online platforms, but it would be a step in the right direction.