Scientists Created a 'Frogger'-Playing AI That Explains Its Decisions

Computer scientists have developed an artificially intelligent agent that uses conversational language to explain the decisions it makes.

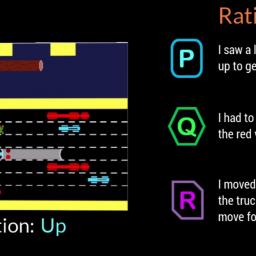

After training the AI system to play the popular arcade game Frogger and documenting how human players explained the decisions they made while playing, the researchers built the agent to generate language in real time that explains the motivations behind its actions.

"What makes us want to use technology is trust that it can do what it's supposed to," Mark Riedl, professor of interactive computing and lead faculty member on the project, told Motherboard over the phone.

As AI technology becomes more integrated into our lives, in areas like our health care and criminal justice systems, it's imperative to have a greater understanding of how AI software arrives at the decisions it makes, especially when it makes mistakes.

When it comes to neural networks, a kind of AI architecture made up of potentially thousands of computational nodes, their decisions can be inscrutable, even to the engineers who design them. Such systems can analyze large swaths of data and identify patterns and connections, and even if the math that governs them is understandable to humans, their aggregate decisions often aren't.

Upol Ehsan, lead researcher and PhD student in the School of Interactive Computing at Georgia Tech, and Riedl, along with their colleagues at Georgia Tech, Cornell University, and the University of Kentucky, think that if average users can receive easy-to-understand explanations of how AI software makes decisions, it will build trust in the technology's ability to make sound decisions in a variety of applications.

"If I'm sitting in a self-driving car and it makes a weird decision, like changing lanes unexpectedly, I would feel more comfortable getting in that car if I could ask it questions about why it was doing what it was doing," Riedl said.

The research was presented at the Association for Computing Machinery's Intelligent User Interfaces conference in March, and the accompanying conference paper was published online that same month.

The team chose Frogger as a research tool because it provides an environment in which the decisions the AI has already made influence its future choices. It's an "ideal stepping stone towards a real world environment," the authors wrote. Explaining this chain of machine reasoning is difficult even for experts, and even more so for non-experts.

The team conducted two studies, both aimed at finding out which kinds of AI-generated explanations human users respond to best. But first, they recruited human participants to play Frogger and had them explain each decision out loud as they played. A speech-to-text program transcribed these explanations, which became the material used to train the AI agent.

Human spectators then watched the AI agent play Frogger and were presented with three different explanations for each on-screen action: one written by a human, one generated by the AI, and one generated at random. They were then asked to evaluate the statements on criteria such as how much the explanation reminded them of human behavior, how adequately it justified the AI's behavior, and how much it helped them understand that behavior. Participants favored the human-written explanations, and ranked the AI's explanations second.

In a second study, participants saw only AI-generated explanations and were asked whether they preferred brief, simple explanations or longer, more detailed ones. The researchers found that participants rated the more detailed explanations higher, suggesting that the more context an explanation provides, the better.

Riedl told me that greater insight into how humans perceive artificial intelligence technology is crucial if it is ever to be widely adopted.

"This project is more about understanding human perceptions and preferences of these AI systems than it is about building new technologies," Ehsan said in a press release. "If the power of AI is to be democratized, it needs to be accessible to anyone regardless of their technical abilities. There is a distinct need for human-centered AI design."
