Machine Learning Identifies Weapons in the Christchurch Attack Video. We Know, We Tried It

This piece is part of an ongoing Motherboard series on Facebook's content moderation strategies. You can read the rest of the coverage here.

Twenty-nine minutes after the Christchurch terrorist started streaming his attack on Facebook, a Facebook user first reported the stream as potentially violating the site's policies. The video showed the killer, filming from a first-person perspective, shooting innocent civilians. In all, the attacker killed 50 people and injured 50 more.

In that instance, Facebook removed the video after New Zealand police alerted the company; it often relies on users flagging content as well. Facebook told Motherboard this week it does have technology that can pick up on images, audio, and text for potential violations in livestreams. However, as Guy Rosen, Facebook's VP of Product Management, wrote in a post shortly after the attack, the video did not trigger Facebook's automatic detection systems.

But Motherboard found it may have been possible, at least technologically, for the world's biggest social media platform to spot weapons in the video much earlier, just seconds into the attack itself. Had the video been surfaced more quickly, Facebook could potentially have stopped the spread of a piece of highly violent propaganda.

Motherboard took a section of the Christchurch attack video, in which the attacker drives to one of the two mosques he targeted, leaves his vehicle, and approaches the building entrance, all with various weapons visible at different points, and fed stills from it into a tool from Amazon called "Rekognition." Rekognition can identify people and faces in images or video, but it can also spot specific items and label them as such. The product is also controversial for its marketing to law enforcement.

Got a tip? You can contact this reporter securely on Signal on +44 20 8133 5190, OTR chat on jfcox@jabber.ccc.de, or email joseph.cox@vice.com.

In some of the Christchurch attack stills, Rekognition spotted a gun or weapon with a confidence rating of over 90 percent, according to Motherboard's tests. Theoretically, a tool linked to Rekognition could then take this result and escalate the livestream to a moderator for review, rather than waiting for a user to flag it. To keep the experiment focused, Motherboard processed stills from an archived version of the Christchurch attack video rather than a live stream, but a similar method could be applied to live video to return results in near real time.
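The escalation step described above could be sketched roughly as follows. This is a minimal, hypothetical Python illustration, not anything Facebook or Amazon has said it uses: the label set, the 90 percent threshold, and the function names `weapon_hits` and `first_flagged_still` are assumptions. The input dictionaries only mimic the shape of a Rekognition DetectLabels response (a `"Labels"` list whose entries carry a `"Name"` and a `"Confidence"` percentage); they are hand-written here rather than returned by a live AWS call.

```python
from typing import Any, Dict, Iterable, List, Optional, Tuple

# Assumption: the label names worth escalating on. Motherboard's tests saw
# Rekognition return "Gun", "Weapon" and "Weaponry" for some stills.
WEAPON_LABELS = {"gun", "weapon", "weaponry"}

# Assumption: a hypothetical cut-off, in percent, for sending a still to a human.
ESCALATION_THRESHOLD = 90.0


def weapon_hits(response: Dict[str, Any],
                threshold: float = ESCALATION_THRESHOLD) -> List[Tuple[str, float]]:
    """Return (label name, confidence) pairs for weapon labels at or above threshold.

    `response` is expected to look like a Rekognition DetectLabels result:
    {"Labels": [{"Name": str, "Confidence": float, ...}, ...]}.
    """
    hits = []
    for label in response.get("Labels", []):
        name = label.get("Name", "")
        confidence = float(label.get("Confidence", 0.0))
        if name.lower() in WEAPON_LABELS and confidence >= threshold:
            hits.append((name, confidence))
    return hits


def first_flagged_still(responses: Iterable[Dict[str, Any]],
                        threshold: float = ESCALATION_THRESHOLD) -> Optional[int]:
    """Return the index of the first still that should go to a moderator, or None.

    Stills are assumed to be sampled from the stream in time order, so the
    index indicates how early in the stream an escalation would fire.
    """
    for index, response in enumerate(responses):
        if weapon_hits(response, threshold):
            return index
    return None


# In a real pipeline each response might come from boto3, for example:
#   boto3.client("rekognition").detect_labels(Image={"Bytes": jpeg_bytes})
# Here we use illustrative, hand-written responses loosely based on the
# results described in this article instead of calling AWS.
stills = [
    {"Labels": [{"Name": "Call of Duty", "Confidence": 50.0}]},
    {"Labels": [{"Name": "Gun", "Confidence": 90.8},
                {"Name": "Weapon", "Confidence": 90.8}]},
]
print(first_flagged_still(stills))  # 1
```

Where to set the threshold is the hard part: set it too low and moderators are flooded with legal footage, such as streams from shooting ranges; set it too high and weapons the classifier is less sure about slip through.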

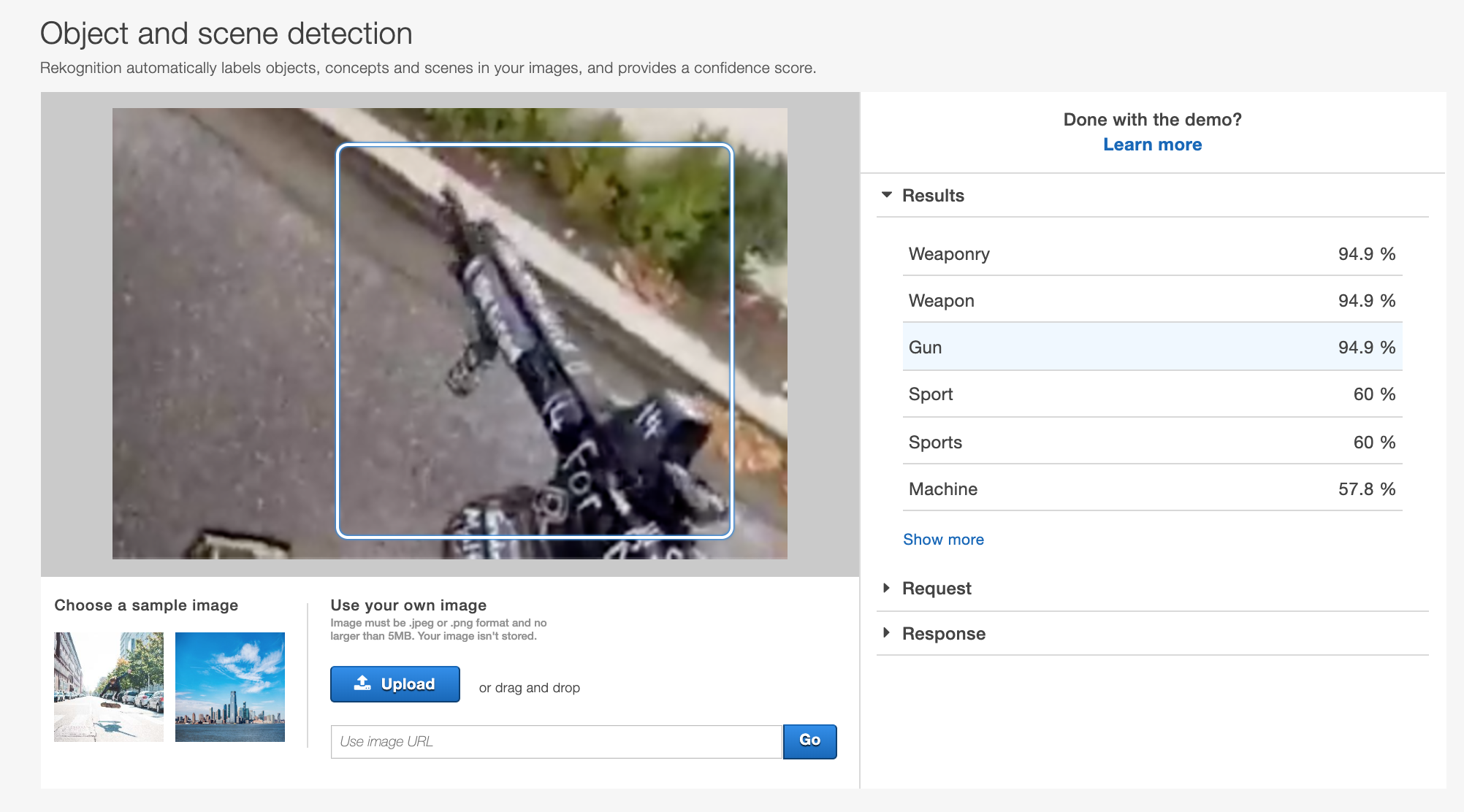

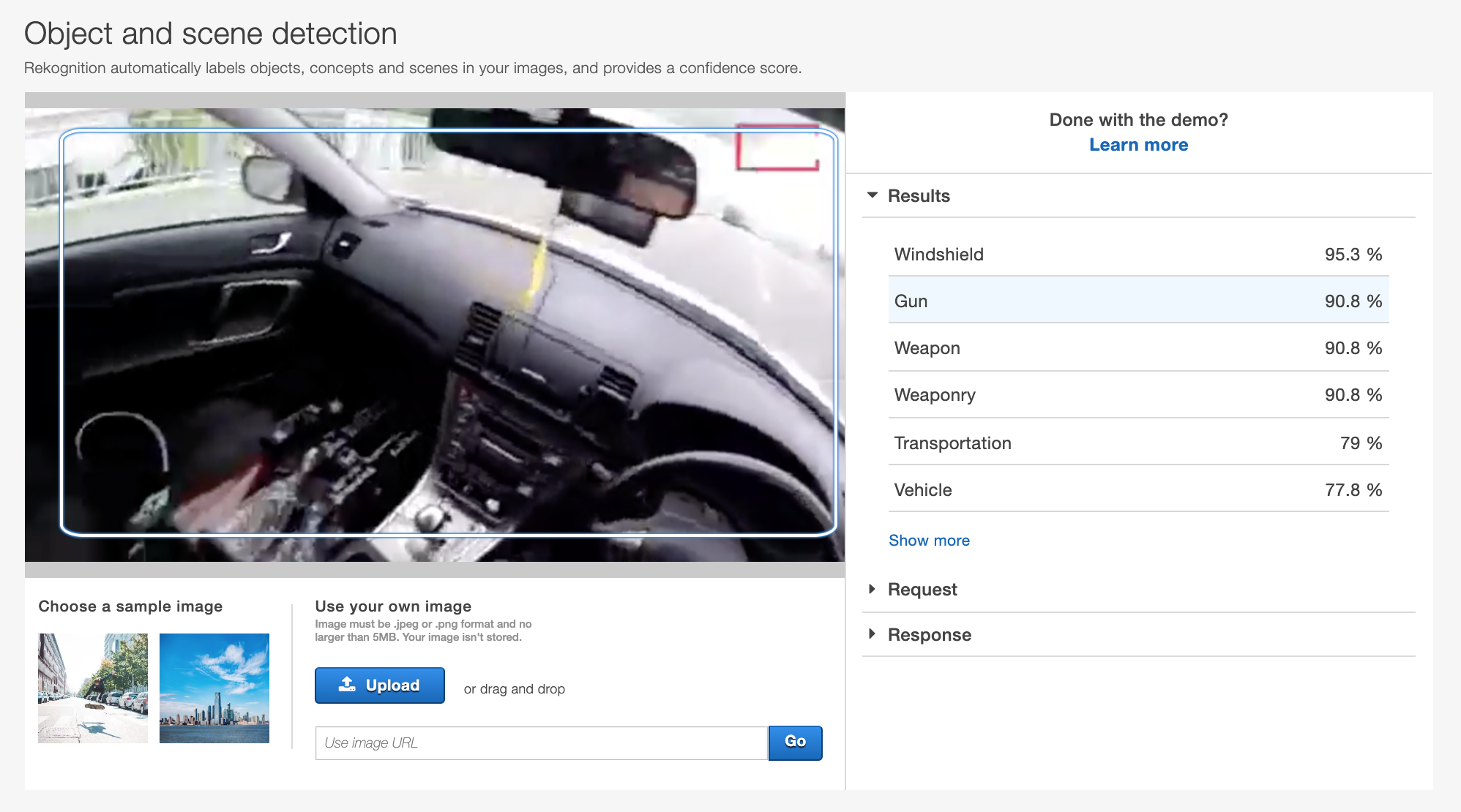

One still included two shotguns in the attacker's passenger seat; Rekognition detected a "gun," "weapon," and "weaponry" with a confidence rating of 90.8 percent. Another still showed the attacker's assault rifle as he exited the vehicle, and Rekognition marked that image with the same labels but with a 94.9 percent confidence rating. (It is worth bearing in mind that we processed the stills after the attack; perhaps Rekognition's own systems have become better at detecting weapons like those in the Christchurch video since the video was released.)

The result for one of the Christchurch attack videos processed through Rekognition. Image: Screenshot

"If Facebook could have that feature with live streams, I think that would change everything where if the software detects weapons, then it should go right to a reviewer and see what the content is," a source with direct knowledge and experience of working on Facebook's content moderation strategies told Motherboard. Motherboard granted some sources in this story anonymity to talk about internal Facebook mechanisms.

A second source, also with direct knowledge and experience of Facebook's moderation strategies, told Motherboard, "If the live video has any kind of gun in it, that would be a great thing to send to a full time live moderator. Either automatically or as a last step for the frontline mod."

Obviously, this crude test is not meant to be a fully fleshed-out solution that a tech giant would actually deploy. But it still shows that detecting weapons in streamed footage is technically possible. So what is stopping the swift surfacing and, where appropriate, removal of live-streamed gun violence by automated means?

The result for another of the Christchurch attack videos processed through Rekognition. Image: Screenshot

The most obvious and immediate problem is, of course, Facebook's scale. With two billion users, flagging every stream that contains a gun to the company's fleet of 15,000 moderators could flood them with content that doesn't violate Facebook's rules. Someone streaming from a shooting range. A shooting sports event. Hunting. Or just personal but legal use of a weapon in someone's backyard. First- and third-person video games, which in most contexts do not violate Facebook's policies, may present an issue too.

Another problem is that, although Motherboard's tests showed it is possible to detect weapons with cheap tools, classification systems can still miss or misidentify objects. Rekognition labelled one attack still uploaded by Motherboard as having a 50 percent chance of being related to Call of Duty, the hugely popular first-person military shooter video game. The system had particular trouble detecting weapons with the barrel pointing away from the camera, as they appear later in the video from the attacker's first-person perspective. In some instances, Rekognition completely misidentified a gun as another object, such as a train. But Rekognition's successes at spotting weapons are still notable.

"Many people have asked why artificial intelligence (AI) didn't detect the video from last week's attack automatically. AI has made massive progress over the years and in many areas, which has enabled us to proactively detect the vast majority of the content we remove. But it's not perfect," Rosen wrote in his post following the attack.

While Motherboard was reporting another story about Instagram users selling weapons, Facebook confirmed that in November 2018 the company launched proactive measures to identify content that violates its policies on guns. On Tuesday, Facebook told Motherboard these machine learning methods are designed to surface combinations of images and text that may indicate content violating the company's policy on buying, selling, trading, or transferring guns. The system is trained on content the company has already removed for violating those policies, Facebook said.

For live streams specifically, Facebook says it identifies images it has previously removed, including for violating its violence and nudity policies. Facebook also scans comments on live streams to spot imminent harm early, pointing to suicide cases as an example.

Off-the-shelf image recognition technology, or more advanced versions of it, clearly can help pick out weapons in live streams and potentially help tech companies remove violating material more quickly. But technology that can effectively surface even some of the most violent content in a real-world setting may still be a long way off.

The first source with direct experience of Facebook's moderation strategies added, speaking about the benefit of detecting weapons in videos, "It would get more live streams reviewed as soon as possible."

Update: This piece has been updated to include comment from a second source with knowledge and experience of Facebook's content moderation strategies.
