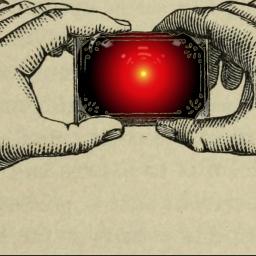

AI is like a magic trick: amazing until it goes wrong, then revealed as a cheap and brittle effect

I used to be on the program committee for the O'Reilly Emerging Technology conferences; one year we decided to make the theme "magic" -- all the ways that new technologies were doing things that baffled us and blew us away.

One thing I remember from that conference is that the technology really was like magic: incredible when it worked, mundane once it was explained, and workable only under very, very limited circumstances.

Writing in Forbes, Kalev Leetaru compares today's machine learning systems to magic tricks, and boy is the comparison apt: "Under perfect circumstances and fed ideal input data that closely matches its original training data, the resulting solutions are nothing short of magic, allowing their users to suspend disbelief and imagine for a moment that an intelligent silicon being is behind their results. Yet the slightest change of even a single pixel can throw it all into chaos, resulting in absolute gibberish or even life-threatening outcomes."

And just like magicians, the companies and agencies that use machine learning systems won't let you look behind the scenes or examine the props: Facebook won't reveal its false positive rates or allow external auditors for its machine learning system, which is why Instagram's anti-bullying AI is going to be a fucking catastrophe.

Another important parallel: magic tricks depend on the deliberate cultivation of a misapprehension of what's going on. A magician convinces you that they're doing the same trick three times in a row, while really it's three different tricks, so the hypothesis you develop the first time is invalidated when you see the trick "again" a second time. Meanwhile, terms like "artificial intelligence" and "machine learning" and "understanding" deliberately mislead the users of these systems, who anthropomorphize relatively straightforward statistical tools and believe that there's some cognition going on, which "leads us to be less cautious in thinking about the limitations of our code, subconsciously assuming that somehow it will 'learn' its way around those limitations on its own without us needing to curate its training data or tweak its algorithms ourselves."

> The end result is that, like a good magic show, the world of deep learning the public sees is little more than a stage-managed illusion. Collections of simplistic tricks are presented under ideal circumstances in order to convince the public of an AI revolution that exists only in their imaginations.
>
> Driverless car companies sell vehicles upon the idea that in a few short years those cars will be autonomously outperforming the best human drivers, even while those companies recognize internally that today's technology cannot offer such a world.
>
> We freely buy into this illusion of an AI revolution because we want to believe that the intelligent machines that decades of science fiction have foretold have finally arrived.

Today's Deep Learning Is Like Magic - In All The Wrong Ways [Kalev Leetaru/Forbes]

(via Naked Capitalism)