Google aims to change the definition of good photography with Pixel 4’s software-defined camera

Google's new Pixel 4 camera offers a ton of new tricks to improve its photographic chops, and to emphasize the point, it had Professor Mark Levoy, who leads camera technology development at Google Research, up on stage to talk about the Pixel 4's many improvements, including its new telephoto lens, updated Super Res Zoom technology and Live HDR+ preview.

Subject, Lighting, Lens, Software

Levoy started by addressing a saying often cited among photographers: what matters most in a good photo is first the subject, then the lighting, and only after that the hardware, i.e., your lens and camera body. He said that he and his team believe a different equation is at play now, one that replaces the camera body with something else: software.

Lens is still important in the equation, he said, and the Pixel 4 reflects that by adding a telephoto lens to its existing wide-angle lens. Levoy also offered the opinion that a telephoto lens is generally more useful than a wide-angle one, clearly a dig at Apple's addition of an ultra-wide-angle lens to its latest iPhone 11 Pro models.

In this context, software means Google's celebrated "computational photography" approach to its Pixel camera tech, which does much of the heavy lifting: it takes images from a small sensor, the kind that tends to produce poor results on its own, and turns them into something pretty amazing.

Levoy said he calls their approach a "software-defined camera," which most of the time just means capturing multiple photos and combining data from each to produce a single, better final picture.
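To make the multi-frame idea concrete, here is a minimal sketch of why merging a burst helps, assuming perfectly aligned frames of a static scene and simple Gaussian noise. It is a toy illustration, not Google's HDR+ pipeline, which also has to align frames and cope with motion; the function names are hypothetical. Averaging N frames reduces random noise by roughly the square root of N.

```python
import random
import statistics


def capture_burst(scene, num_frames, noise_sigma, seed=0):
    """Simulate a burst of noisy captures of the same static scene.

    Each frame is the true scene plus independent Gaussian noise
    (a simplifying assumption; real sensor noise depends on the signal).
    """
    rng = random.Random(seed)
    return [[pixel + rng.gauss(0.0, noise_sigma) for pixel in scene]
            for _ in range(num_frames)]


def merge_burst(frames):
    """Average already-aligned frames pixel by pixel (no alignment or motion handling)."""
    return [statistics.fmean(values) for values in zip(*frames)]


def rms_error(image, scene):
    """Root-mean-square difference between a captured image and the true scene."""
    return statistics.fmean((a - b) ** 2 for a, b in zip(image, scene)) ** 0.5


if __name__ == "__main__":
    scene = [0.2 + 0.6 * (i % 10) / 9 for i in range(10_000)]  # synthetic gradient
    single = capture_burst(scene, 1, noise_sigma=0.1)[0]
    merged = merge_burst(capture_burst(scene, 9, noise_sigma=0.1))
    print(f"single frame RMS error:  {rms_error(single, scene):.4f}")
    print(f"9-frame merge RMS error: {rms_error(merged, scene):.4f}")  # roughly a third of the above
```

The same trade-off is what lets a small sensor behave like a larger one: more frames buy lower noise, at the cost of having to handle anything that moves between them.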

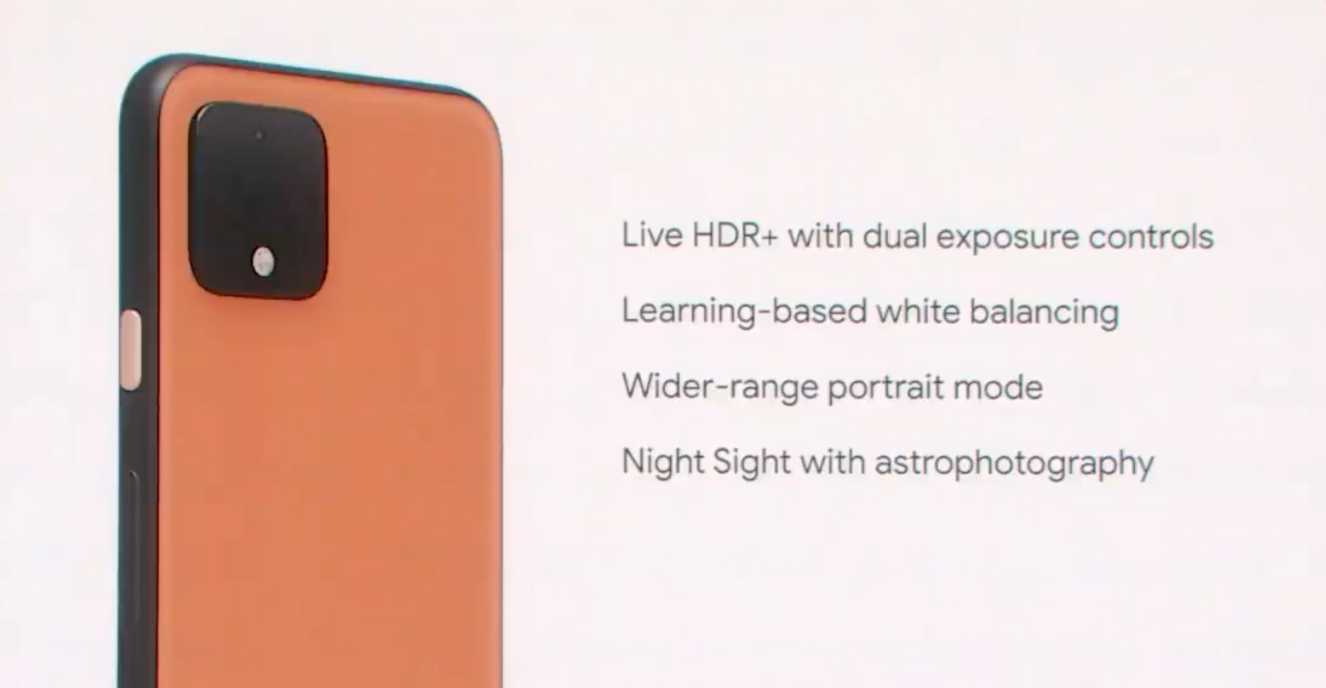

What's new for Pixel 4

There are four new features for the Pixel 4 powered by computational photography. The first is Live HDR+ with dual exposure controls. Live HDR+ shows you a real-time preview of what the final photo will look like with the HDR treatment applied, instead of a viewfinder image that looks very different from the final shot. It also bakes in exposure controls that let you adjust the highlights and shadows in the image on the fly, which is useful if you want bolder highlights or want to turn shadowed subjects into silhouettes, for instance.
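Google did not describe how the two exposure controls work internally, so the following is only a toy model of what two independent sliders could do to a pixel. It assumes one slider acts as a global exposure gain in stops and the other reshapes dark and mid tones with a power (gamma) curve; `apply_dual_exposure` and its parameters are hypothetical.

```python
def apply_dual_exposure(pixel, brightness_ev=0.0, shadows=0.0):
    """Toy model of two independent exposure sliders on a linear pixel in [0, 1].

    brightness_ev: global exposure change in stops (+1 doubles the linear signal),
        clipped at white.
    shadows: in [-1, 1]; positive values lift dark and mid tones, negative values
        darken them toward silhouettes. The power curve pins black at 0 and white at 1.
    """
    x = min(1.0, max(0.0, pixel * 2.0 ** brightness_ev))  # global gain, clamped to [0, 1]
    gamma = 2.0 ** (-shadows)  # shadows=+1 -> gamma 0.5 (lift), shadows=-1 -> gamma 2 (crush)
    return x ** gamma


if __name__ == "__main__":
    for value in (0.05, 0.25, 0.6, 0.95):
        lifted = apply_dual_exposure(value, shadows=0.8)
        crushed = apply_dual_exposure(value, shadows=-0.8)
        print(f"{value:.2f} -> lifted {lifted:.3f}, crushed {crushed:.3f}")
```

Keeping the two controls separate means you can, say, hold the bright sky where it is while pushing a backlit subject into silhouette.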

Also new is "Learning-based white balance," which addresses the tricky problem of getting white balance right. Levoy said that Google has been using this approach to white-balance Night Sight photos since that feature was introduced with the Pixel 3, but now it's bringing it to all photo modes. The result is cooler colors, particularly in tricky lighting situations where whites tend to be incorrectly rendered as orange or yellow.
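Google's learned model isn't public, but the underlying task is the same as in any white balance algorithm: estimate the color cast of the light and divide it out. As a point of comparison, here is a sketch of the classic "gray-world" heuristic, which assumes the scene averages to neutral gray. It is a swapped-in textbook technique, not Google's method, and it fails in exactly the kind of scenes (dominated by one warm color) that a learned approach is meant to handle better. The function names are hypothetical.

```python
def gray_world_gains(pixels):
    """Per-channel (R, G, B) gains under the gray-world assumption, normalized to green.

    pixels: list of (r, g, b) tuples with values in [0, 1].
    """
    n = len(pixels)
    # Average each channel; guard against an all-black channel to avoid dividing by zero.
    means = [max(sum(p[c] for p in pixels) / n, 1e-6) for c in range(3)]
    green = means[1]
    return [green / m for m in means]


def apply_gains(pixels, gains):
    """Scale each channel by its gain, clipping at 1.0."""
    return [tuple(min(1.0, v * g) for v, g in zip(p, gains)) for p in pixels]


if __name__ == "__main__":
    warm_scene = [(0.8, 0.6, 0.4), (0.4, 0.3, 0.2)]  # whites with an orange cast
    gains = gray_world_gains(warm_scene)
    print("gains:", [round(g, 3) for g in gains])
    print("balanced:", [tuple(round(v, 3) for v in p) for p in apply_gains(warm_scene, gains)])
```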

The new wide-range portrait mode uses information from both the Pixel 4's dual-pixel image sensor and the new second lens to derive more depth data, providing an expanded, more accurate portrait mode that separates the subject from the background. It now works on large objects and on portraits where the person in focus is standing farther back, and it provides better bokeh shape (the shape of the defocused elements in the background) and better definition of strands of hair and fur, which have always been tricky for software background blur.
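Why would a second lens help with subjects standing farther back? Both dual pixels and two lenses estimate depth from disparity, the shift of a point between two viewpoints, via the pinhole stereo relation Z = f·B/d (depth Z, focal length f in pixels, baseline B, disparity d). The sketch below assumes both views have been rescaled to a common focal length, and the 3,000-pixel focal length and 1 mm and 13 mm baselines are hypothetical round numbers, not Pixel 4 specs. Because depth error grows with Z² and shrinks with B, the wider baseline between two lenses resolves distant subjects far better.

```python
def disparity_px(depth_m, baseline_m, focal_px):
    """Pinhole stereo: disparity d = f * B / Z, in pixels."""
    return focal_px * baseline_m / depth_m


def depth_from_disparity(disparity, baseline_m, focal_px):
    """Invert the same relation: Z = f * B / d."""
    return focal_px * baseline_m / disparity


def depth_uncertainty_m(depth_m, baseline_m, focal_px, disparity_error_px=0.25):
    """First-order depth error from a disparity error: dZ ~= Z**2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


if __name__ == "__main__":
    FOCAL_PX = 3000  # hypothetical focal length in pixels
    for name, baseline in (("dual-pixel (1 mm, hypothetical)", 0.001),
                           ("two lenses (13 mm, hypothetical)", 0.013)):
        d = disparity_px(3.0, baseline, FOCAL_PX)
        err = depth_uncertainty_m(3.0, baseline, FOCAL_PX)
        print(f"{name}: subject at 3 m -> {d:.1f} px disparity, about +/-{err:.2f} m depth error")
```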

Lastly, Night Sight gets overall improvements, as well as a new astrophotography mode specifically for capturing the night sky and star fields. The astrophotography mode produces great-looking night sky images with exposure times that run to multiple minutes, all with automatic settings and computational algorithms that handle problems such as stars moving during the exposure.
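Star motion is one reason a multi-minute exposure is better captured as many shorter frames and merged afterward. The back-of-the-envelope sketch below assumes a fixed, untracked camera and works out how long each frame can be before a star trails more than a chosen number of pixels. The 3,000-pixel focal length and 1-pixel tolerance are hypothetical values, not Pixel 4 specs, Google's actual frame lengths aren't given here, and the function names are illustrative.

```python
import math

SIDEREAL_DAY_S = 86164.0905  # one rotation of Earth relative to the stars
EARTH_RATE_RAD_PER_S = 2 * math.pi / SIDEREAL_DAY_S


def star_drift_px(exposure_s, focal_px, declination_deg=0.0):
    """Pixels a star moves during one exposure on a fixed (untracked) camera.

    Stars on the celestial equator (declination 0) move fastest; the apparent
    rate shrinks with cos(declination) toward the celestial poles.
    """
    rate = EARTH_RATE_RAD_PER_S * math.cos(math.radians(declination_deg))
    return rate * focal_px * exposure_s


def plan_frames(total_exposure_s, focal_px, max_drift_px=1.0):
    """Split a long exposure into equal frames that each keep worst-case trailing under max_drift_px."""
    max_frame_s = max_drift_px / star_drift_px(1.0, focal_px)
    num_frames = math.ceil(total_exposure_s / max_frame_s)
    return num_frames, total_exposure_s / num_frames


if __name__ == "__main__":
    frames, frame_s = plan_frames(240.0, focal_px=3000)  # a 4-minute total exposure
    print(f"{frames} frames of {frame_s:.2f} s, "
          f"worst-case trail {star_drift_px(frame_s, 3000):.2f} px per frame")
```

Each short frame stays sharp; stars still shift between frames, which is one of the issues the merge step then has to account for.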

Still more to come

Google wanted to emphasize that this is a camera that can overcome many of the problems small sensors typically face, and it brought out photography legend Annie Leibovitz to make the case. She showed some of the photos she has been capturing with both the Pixel 3 and Pixel 4, and they did indeed look great, although a livestream doesn't reveal as much as prints of the final photos would.


Levoy also said that the team plans to improve the camera over time via software updates, so this is just the start for the Pixel 4. Based on what we saw on stage, it definitely looks like a step up from the already excellent Pixel 3, but we'll need more hands-on time to see how it compares to Apple's much-improved iPhone 11 camera.