Self-Driving Cars Learn About Road Hazards Through Augmented Reality

Photo: University of Michigan Mock City: Mcity is a city in miniature, with roads, traffic signals, and storefronts.

For decades, anyone who wanted to know whether a new car was safe to drive could simply put it through its paces, using tests established through trial and error. Such tests might investigate whether the car can take a sharp turn while keeping all four wheels on the road, brake to a stop over a short distance, or survive a collision with a wall while protecting its occupants.

But as cars take an ever greater part in driving themselves, such straightforward testing will no longer suffice. We will need to know whether the vehicle has enough intelligence to handle the same kind of driving conditions that humans have always had to manage. To do that, automotive safety-assurance testing has to become less like an obstacle course and more like an IQ test.

One obvious way to test the brains as well as the brawn of autonomous vehicles would be to put them on the road along with other traffic. This is necessary if only because the self-driving cars will have to share the road with the human-driven ones for many years to come. But road testing brings two concerns. First, the safety of all concerned can't be guaranteed during the early stages of deployment; self-driving test cars have already been involved in fatal accidents. Second is the sheer scale that such direct testing would require.

That's because most of the time, test vehicles will be driven under typical conditions, and everything will go as it normally does. Only in a tiny fraction of cases will things take a different turn. We call these edge cases, because they concern events that are at the edge of normal experience. Example: A truck loses a tire, which hops the median and careens into your lane, right in front of your car. Such edge cases typically involve a concurrence of failures that are hard to conceive of and are still harder to test for. This raises the question, How long must we road test a self-driving, connected vehicle before we can fairly claim that it is safe?

Photo: University of Michigan Inside a Robocar: Here is a passenger's view of an autonomous Lincoln MKZ Hybrid.

The answer to that question may never be truly known. What's clear, though, is that we need other strategies to gauge the safety of self-driving cars. And the one we describe here, a mixture of physical vehicles and computer simulation, might prove to be the most effective way there is to evaluate self-driving cars.

A fatal crash occurs only once in about 160 million kilometers of driving, according to statistics compiled by the U.S. National Highway Traffic Safety Administration. That's a bit more than the distance from Earth to the sun (and 10 times as much as has been logged by the fleet of Google sibling Waymo, the company that has the most experience with self-driving cars). To travel that far, an autonomous car driving at highway speeds for 24 hours a day would need almost 200 years. It would take even longer to cover that distance on side streets, passing through intersections and maneuvering around parking lots. It might take a fleet of 500 cars 10 years to finish the job, and then you'd have to do it all over again for each new design.
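The single-car figure can be checked with a few lines of arithmetic. This is only a sketch: the 100 km/h "highway speed" is our assumption for illustration (the text gives no exact value), and the function name is hypothetical.

```python
HOURS_PER_YEAR = 24 * 365  # round-the-clock driving, ignoring leap years

def years_to_cover(distance_km: float, speed_kmh: float, cars: int = 1) -> float:
    """Years of nonstop driving needed for `cars` vehicles to log distance_km."""
    return distance_km / (speed_kmh * HOURS_PER_YEAR * cars)

KM_PER_FATAL_CRASH = 160e6  # figure quoted in the text

# Assumed highway speed of 100 km/h (not stated in the article).
single_car_years = years_to_cover(KM_PER_FATAL_CRASH, speed_kmh=100.0)
print(f"One car, nonstop at 100 km/h: {single_car_years:.0f} years")
```

Under that assumed speed the result lands a little above 180 years, consistent with "almost 200 years."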

Clearly, the industry must augment road testing with other strategies to bring out as many edge cases as possible. One method now in use is to test self-driving vehicles in closed test facilities where known edge cases can be staged again and again. Take, as an example, the difficulties posed by cars that run a red light at high speed. An intersection can be built as if it were a movie set, and self-driving cars can be given the task of crossing when the light turns green while at the same time avoiding vehicles that illegally cross in front of them.

Photo: University of Michigan Eyes Everywhere: A rooftop tower carries a rotating lidar; forward-looking lidar units ride at the corners.

While this approach is helpful, it also has limitations. Multiple vehicles are typically needed to simulate edge cases, and professional drivers may well have to pilot them. All this can be costly and difficult to coordinate. More important, no one can guarantee that the autonomous vehicle will work as desired, particularly during the early stages of such testing. If something goes wrong, a real crash could happen and damage the self-driving vehicle or even hurt people in other vehicles. Finally, no matter how ingenious the set designers may be, they cannot be expected to create a completely realistic model of the traffic environment. In real life, a tree's shadow can confuse an autonomous car's sensors, and a radar reflection off a manhole cover can make the radar see a truck where none is present.

Computer simulation provides a way around the limitations of physical testing. Algorithms generate virtual vehicles and then move them around on a digital map that corresponds to a real-world road. If the data thus generated is then broadcast to an actual vehicle driving itself on the same road, the vehicle will interpret the data exactly as if it had come from its own sensors. Think of it as augmented reality tuned for use by a robot.

Although the physical test car is driving on empty roads, it "thinks" that it is surrounded by other vehicles. Meanwhile, it sends the information that it is gathering (both from augmented reality and from its sensing of the real-world surroundings) back to the simulation platform. Real vehicles, simulated vehicles, and perhaps other simulated objects, such as pedestrians, can thus interact. In this way, a wide variety of scenarios can be tested safely and cost-effectively.

The idea for automotive augmented reality came to us by the back door: Engineers had already improved certain kinds of computer simulations by including real machines in them. As far back as 1999, Ford Motor Co. used measurements of an actual revving engine to supply data for a computer simulation of a power train. This hybrid simulation method was called hardware-in-the-loop, and engineers resorted to it because mimicking an engine in software can be very difficult. Knowing this history, we realized that it would be possible to do the opposite: generate simulated vehicles as part of a virtual environment for testing actual cars.

Photo: University of Michigan Mission Control: Monitors show all the data that the test car itself sees via its onboard sensors, together with virtual traffic that's simulated in a computer.

In June 2017, we implemented an augmented-reality environment in Mcity, the world's first full-scale test bed for autonomous vehicles. It occupies 32 acres on the North Campus of the University of Michigan, in Ann Arbor. Its 8 lane-kilometers (5 lane-miles) of roadway are arranged in sections having the attributes of a highway, a multilane arterial road, or an intersection.

Here's how it works. The autonomous test car is equipped with an onboard device that can broadcast vehicle status, such as location, speed, acceleration, and heading, doing so every tenth of a second. It does this wirelessly, using dedicated short-range communications (DSRC), a standard similar to Wi-Fi that has been earmarked for mobile users. Roadside devices distributed around the testing facility receive this information and forward it to a traffic-simulation model, one that can simulate the testing facility by boiling it down to an equivalent network geometry that incorporates the actions of traffic signals. Once the computer model receives the test car's information, it creates a virtual twin of that car. Then it updates the virtual car's movements based on the movements of the real test car.

Feeding data from the real test vehicle into the computer simulation constitutes only half of the loop. We complete the other half by sending information about the various vehicles the computer has simulated to the test car. This is the essence of the augmented-reality environment. Every simulated vehicle also generates vehicle-status messages at a frequency of 10 hertz, which we forward to the roadside devices, which in turn broadcast them in real time. When the real test car receives that data, its vehicle-control system uses it to "see" all the virtual vehicles. To the car, these simulated entities are indistinguishable from the real thing.
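The two halves of the loop can be pictured with a toy model. The sketch below is illustrative only: the class and field names are hypothetical, the constant-speed motion rule stands in for a real microscopic traffic model, and no radio layer is modeled.

```python
import math
from dataclasses import dataclass, replace

STEP_S = 0.1  # one status message every tenth of a second (10 Hz)

@dataclass(frozen=True)
class VehicleStatus:
    """Hypothetical status message: identity, position, speed, heading."""
    vehicle_id: str
    x_m: float
    y_m: float
    speed_mps: float
    heading_rad: float

def advance(status: VehicleStatus, dt: float = STEP_S) -> VehicleStatus:
    """Move a simulated vehicle at constant speed and heading (a simplification)."""
    return replace(
        status,
        x_m=status.x_m + status.speed_mps * math.cos(status.heading_rad) * dt,
        y_m=status.y_m + status.speed_mps * math.sin(status.heading_rad) * dt,
    )

class TrafficSimulation:
    def __init__(self, virtual_vehicles):
        self.virtual = {v.vehicle_id: v for v in virtual_vehicles}
        self.twin = None  # virtual twin of the real test car

    def step(self, real_status: VehicleStatus):
        """One loop iteration: update the twin, advance virtual traffic,
        and return the messages to hand to the roadside devices."""
        self.twin = real_status
        self.virtual = {vid: advance(v) for vid, v in self.virtual.items()}
        return list(self.virtual.values())

sim = TrafficSimulation([VehicleStatus("virtual-1", 0.0, 0.0, 10.0, 0.0)])
outgoing = sim.step(VehicleStatus("test-car", 50.0, 0.0, 5.0, math.pi))
```

Each call to `step` corresponds to one 0.1-second tick: the real car's message goes in, and the virtual vehicles' messages come out.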

By having vehicles pass messages through the roadside devices (that is, by substituting "vehicle-to-infrastructure" connections for direct "vehicle-to-vehicle" links), real vehicles and virtual vehicles can sense one another and interact accordingly. In the same fashion, traffic-signal status is also synchronized between the real and the simulated worlds. That way, real and virtual vehicles can each "look" at a given light and see whether it is green or red.

Photos: University of Michigan Real and Feigned: A radio transceiver [white object, top] takes data from the car and returns virtual data generated by computers. One virtual object is a train [second from top]. The car brakes to avoid a red-light-running virtual car [third from top]. Many traffic patterns can be generated in a small space [bottom].

The status messages passed between real and simulated worlds include, of course, vehicle positions. This allows actual vehicles to be mapped onto the simulated road network, and simulated vehicles to be mapped into the actual road network. The positions of actual vehicles are represented with GPS coordinates (latitude, longitude, and elevation) and those of simulated vehicles with local coordinates (x, y, and z). An algorithm transforms one system of coordinates into the other.
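One simple way to convert between the two coordinate systems over an area as small as a test track is a flat-Earth (equirectangular) approximation around a reference point. The sketch below shows that approach under stated assumptions; it is not necessarily the transformation used at Mcity, and the origin is a placeholder rather than a surveyed location.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius

def gps_to_local(lat, lon, elev, origin):
    """Convert GPS (degrees, meters) to local x (east), y (north), z (up) in meters."""
    lat0, lon0, elev0 = origin
    x = math.radians(lon - lon0) * EARTH_RADIUS_M * math.cos(math.radians(lat0))
    y = math.radians(lat - lat0) * EARTH_RADIUS_M
    return x, y, elev - elev0

def local_to_gps(x, y, z, origin):
    """Inverse of gps_to_local under the same flat-Earth approximation."""
    lat0, lon0, elev0 = origin
    lat = lat0 + math.degrees(y / EARTH_RADIUS_M)
    lon = lon0 + math.degrees(x / (EARTH_RADIUS_M * math.cos(math.radians(lat0))))
    return lat, lon, elev0 + z

# Placeholder origin (hypothetical values, for illustration only).
ORIGIN = (42.30, -83.70, 250.0)

local = gps_to_local(42.301, -83.699, 251.5, ORIGIN)
round_trip = local_to_gps(*local, ORIGIN)
```

The approximation is adequate over a few hundred meters; a larger site would call for a proper map projection.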

But that mathematical transformation isn't all that's needed. There are small GPS and map errors, and they sometimes prevent a GPS position, forwarded from the actual test car and translated to the local system of coordinates, from appearing on a simulated road. We correct these errors with a separate mapping algorithm. Also, when the test car stops, we must lock it in place in the simulation, so that fluctuations in its GPS coordinates do not cause it to drift out of position in the simulation.
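Both corrections can be illustrated with simple stand-ins: snapping a position to the nearest road centerline segment, and holding the last position while the car is stopped. This is a minimal sketch under our own assumptions; the segment representation, the 0.1 m/s stop threshold, and all names are hypothetical, and the actual mapping algorithm is not described here.

```python
def project_onto_segment(p, a, b):
    """Closest point to p on the segment from a to b (2D tuples, meters)."""
    ax, ay = a
    bx, by = b
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return (ax, ay)
    t = ((p[0] - ax) * dx + (p[1] - ay) * dy) / length_sq
    t = max(0.0, min(1.0, t))  # stay within the segment
    return (ax + t * dx, ay + t * dy)

def snap_to_road(p, segments):
    """Move p onto the nearest of the given road centerline segments."""
    candidates = [project_onto_segment(p, a, b) for a, b in segments]
    return min(candidates, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)

class StopLock:
    """Freeze the reported position while speed is below a threshold."""
    def __init__(self, stop_speed_mps=0.1):  # assumed threshold
        self.stop_speed_mps = stop_speed_mps
        self.held = None

    def filter(self, position, speed_mps):
        if speed_mps < self.stop_speed_mps:
            if self.held is None:
                self.held = position  # remember where the car stopped
            return self.held
        self.held = None  # moving again: release the lock
        return position

roads = [((0.0, 0.0), (10.0, 0.0)), ((0.0, 3.0), (10.0, 3.0))]
snapped = snap_to_road((5.0, 0.4), roads)

lock = StopLock()
first = lock.filter((1.0, 1.0), speed_mps=0.0)
jitter = lock.filter((1.2, 0.9), speed_mps=0.0)   # GPS noise while stopped
moving = lock.filter((2.0, 2.0), speed_mps=3.0)
```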

Everything here depends on wireless communication. To ensure that it was reliable, we installed four roadside radios in Mcity, enough to cover the entire testing facility. The DSRC wireless standard, which operates in the 5.9-gigahertz band, gives us high data-transmission rates and very low latency. These are critical to safety at high speeds and during stop-on-a-dime maneuvers. DSRC is in wide use in Japan and Europe; it hasn't yet gained much traction in the United States, although Cadillac is now equipping some of its cars with DSRC devices.

Whether DSRC will be the way cars communicate with one another is uncertain, though. Some people have argued that cellular communications, particularly in the coming 5G implementation, might offer equally low latency with a greater range. Whichever standard wins out, the communications protocols used in our system can easily be adapted to it.

We expect that the software framework we used to build our system will also endure, at least for a few years. We constructed our simulation with PTV Vissim, a commercial package developed in Germany to model traffic flow "microscopically," that is, by simulating the behavior of each individual vehicle.

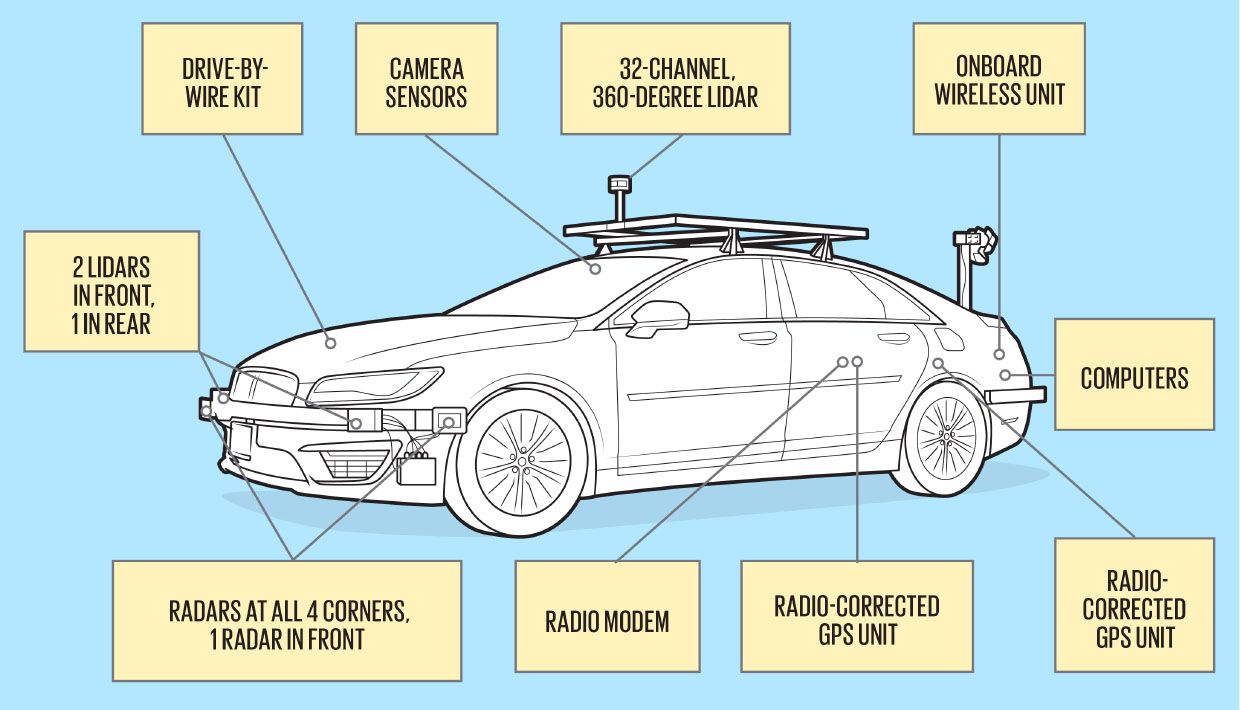

One thing that can be expected to change is the test vehicle, as other companies begin to use our system to put their own autonomous vehicles through their paces. For now, our one test vehicle is a Lincoln MKZ Hybrid, which is equipped with DSRC and thus fully connected. Drive-by-wire controls that we added to the car allow software to command the steering wheel, throttle, brake, and transmission. The car also carries multiple radars, lidars, cameras, and a GPS receiver with real-time kinematic positioning, which improves resolution by referring to a signal from a ground-based radio station.

Festooned with Sensors: The Mcity test car carries lidar (a laser range finder) both for a theater-in-the-round view (provided by the rotating roof tower) and for a look-ahead focus. Radar complements this sense, and GPS, in a highly accurate radio-corrected form, rounds out the armamentarium.

Illustration: Chris Philpot

We have implemented two testing scenarios. In the first one, the system generates a virtual train and projects it into the augmented reality perceived by the test car as the train approaches a mock-up of a rail crossing in Mcity. The point is to see whether the test car can stop in time and then wait for the train to pass. We also throw in other virtual vehicles, such as cars that follow the test car. These strings of cars, actual and virtual, can be formally arranged convoys (known as platoons) or ad hoc arrangements, such as cars queuing to get onto an entry ramp.

The second, more complicated testing scenario involves the case we mentioned earlier: running a red light. In the United States, cars running red lights cause more than a quarter of all the fatalities that occur at an intersection, according to the American Automobile Association. This scenario serves two purposes: to see how the test car reacts to traffic signals and also how it reacts to red-light-running scofflaws.

Our test car should be able to tell whether the signal is red or green and decide accordingly whether to stop or to go. It should also be able to notice that the simulated red-light runner is coming, predict its trajectory, and calculate when and where the test car might be when it crosses that trajectory. The test car ought to be able to do all these things well enough to avoid a collision.
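The prediction step can be illustrated with the textbook closest-approach calculation for two objects moving at constant velocity. This is a sketch of the geometry only, not the test car's actual planning software; the positions, speeds, and 3-meter safety radius are made-up values.

```python
import math

def closest_approach(p1, v1, p2, v2):
    """Return (time_s, distance_m) at which two constant-velocity points are closest.

    p1, p2 are (x, y) positions in meters; v1, v2 are (vx, vy) in m/s.
    Only the future is considered, so time is clamped to be >= 0.
    """
    dpx, dpy = p2[0] - p1[0], p2[1] - p1[1]
    dvx, dvy = v2[0] - v1[0], v2[1] - v1[1]
    dv_sq = dvx * dvx + dvy * dvy
    if dv_sq == 0.0:
        t = 0.0  # identical velocities: separation never changes
    else:
        t = max(0.0, -(dpx * dvx + dpy * dvy) / dv_sq)
    return t, math.hypot(dpx + dvx * t, dpy + dvy * t)

SAFETY_RADIUS_M = 3.0  # assumed threshold for illustration

# Test car heading east toward the intersection; runner heading north.
t_conflict, miss_distance = closest_approach(
    p1=(-40.0, 0.0), v1=(10.0, 0.0),
    p2=(0.0, -60.0), v2=(0.0, 15.0),
)
should_brake = miss_distance < SAFETY_RADIUS_M
```

In this example both vehicles would reach the same point 4 seconds from now, so the check flags the need to brake.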

Because the computer running the simulation can fully control the actions of the red-light runner, it can generate a wide variety of testing parameters in successive iterations of the experiment. This is precisely the sort of thing a computer can do much more accurately than any human driver. And of course, the entire experiment can be done in complete safety because the lawbreaker is merely a virtual car.
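Varying the runner's behavior systematically might look like the sketch below. The parameter names, the value ranges, and the rule for placing the runner are all our assumptions for illustration.

```python
from itertools import product

def runner_scenarios(speeds_mps, offsets_s, car_arrival_s):
    """Build one scenario per (speed, offset) pair.

    offset_s is how long after the test car's expected arrival the runner
    reaches the conflict point (negative means the runner gets there first).
    The starting distance is chosen so a constant-speed runner arrives on cue.
    """
    scenarios = []
    for speed, offset in product(speeds_mps, offsets_s):
        scenarios.append({
            "runner_speed_mps": speed,
            "arrival_offset_s": offset,
            "start_distance_m": speed * (car_arrival_s + offset),
        })
    return scenarios

scenarios = runner_scenarios(
    speeds_mps=[10.0, 15.0, 20.0],
    offsets_s=[-1.0, 0.0, 1.0],
    car_arrival_s=4.0,
)
```

Three speeds and three timing offsets yield nine runs, each repeatable exactly, which is hard to ask of a human stunt driver.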

There is a lot more of this kind of edge-case simulation that can be done. For example, we can use the augmented-reality environment to evaluate the ability of test cars to handle complex driving situations, like turning left from a stop sign onto a major highway. The vehicle needs to seek gaps in traffic going in both directions, meanwhile watching for pedestrians who may cross at the sign. The car can decide to make a stop in the median first, or instead simply drive straight into the desired lane. This involves a decision-making process of several stages, all while taking into account the actions of a number of other vehicles (including predicting how they will react to the test car's actions).

Another example involves maneuvers at roundabouts (entering, exiting, and negotiating for position with other cars) without help from a traffic signal. Here the test car needs to predict what other vehicles will do, decide on an acceptable gap to use to merge, and watch for aggressive vehicles. We can also construct augmented-reality scenarios with bicyclists, pedestrians, and other road users, such as farm machinery. The less predictable such alternative actors are, the more intelligence the self-driving car will need.
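Choosing an acceptable gap is often modeled with a simple rule: merge into the first gap in the conflicting traffic stream that is at least as long as some critical value. The sketch below illustrates that rule only; the 6-second critical gap and the arrival times are assumed values, and a real vehicle would also have to account for uncertainty in its predictions.

```python
def first_acceptable_gap(arrival_times_s, critical_gap_s, now_s=0.0):
    """Return the start time of the first gap of at least critical_gap_s.

    arrival_times_s are predicted times at which conflicting vehicles
    pass the merge point. A gap runs from one arrival (or from now_s)
    to the next arrival.
    """
    gap_start = now_s
    for t in sorted(arrival_times_s):
        if t < gap_start:
            continue  # this vehicle has already passed
        if t - gap_start >= critical_gap_s:
            return gap_start
        gap_start = t
    return gap_start  # no more traffic after the last vehicle

CRITICAL_GAP_S = 6.0  # assumed value for illustration

merge_time = first_acceptable_gap([2.0, 5.0, 12.0, 14.0], CRITICAL_GAP_S)
empty_road = first_acceptable_gap([], CRITICAL_GAP_S)
```

Here the first usable gap opens at 5 seconds, after the second vehicle passes and before the third arrives at 12 seconds.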

Ultimately, we would like to put together a large library of test scenarios including edge cases, then use the augmented-reality testing environment to run the tests repeatedly. We are now building up such a library with data scoured from reports of actual crashes, together with observations by sensor-laden vehicles of how people drive when they don't know they're part of an experiment. By putting together disparate edge conditions, we expect to create artificial edge cases that are particularly challenging for the software running in self-driving cars.

Thus armed, we ought to be able to see just how safe a given autonomous car is without having to drive it to the sun and back.

This article appears in the December 2019 print issue as "Augmented Reality for Robocars."

About the Authors: Henry X. Liu is a professor of civil and environmental engineering at the University of Michigan, Ann Arbor, and a research professor at the University of Michigan Transportation Research Institute. Yiheng Feng is an assistant research scientist in the university's Engineering Systems Group.