The Inside Story of the First Picture of a Black Hole

Photos, clockwise from top: EHT Collaboration; Daniel Michalik/South Pole Telescope; William Montgomerie/JCMT Seeing By Radio: Last year, scientists published images of a black hole called M87* [top]. The data that went into constructing those images came from seven radio telescopes spread over the globe, including the James Clerk Maxwell Telescope, in Hawaii [left]. Another telescope, at the South Pole [right], aided in the calibration of the telescope network and is used for observing other astronomical sources.

Last April, a research team that I'm part of unveiled a picture that most astronomers never dreamed they would see: one of a massive black hole at the center of a distant galaxy. Many were shocked that we had pulled off this feat. To accomplish it, our team had to build a virtual telescope the size of the globe and pioneer new techniques in radio astronomy.

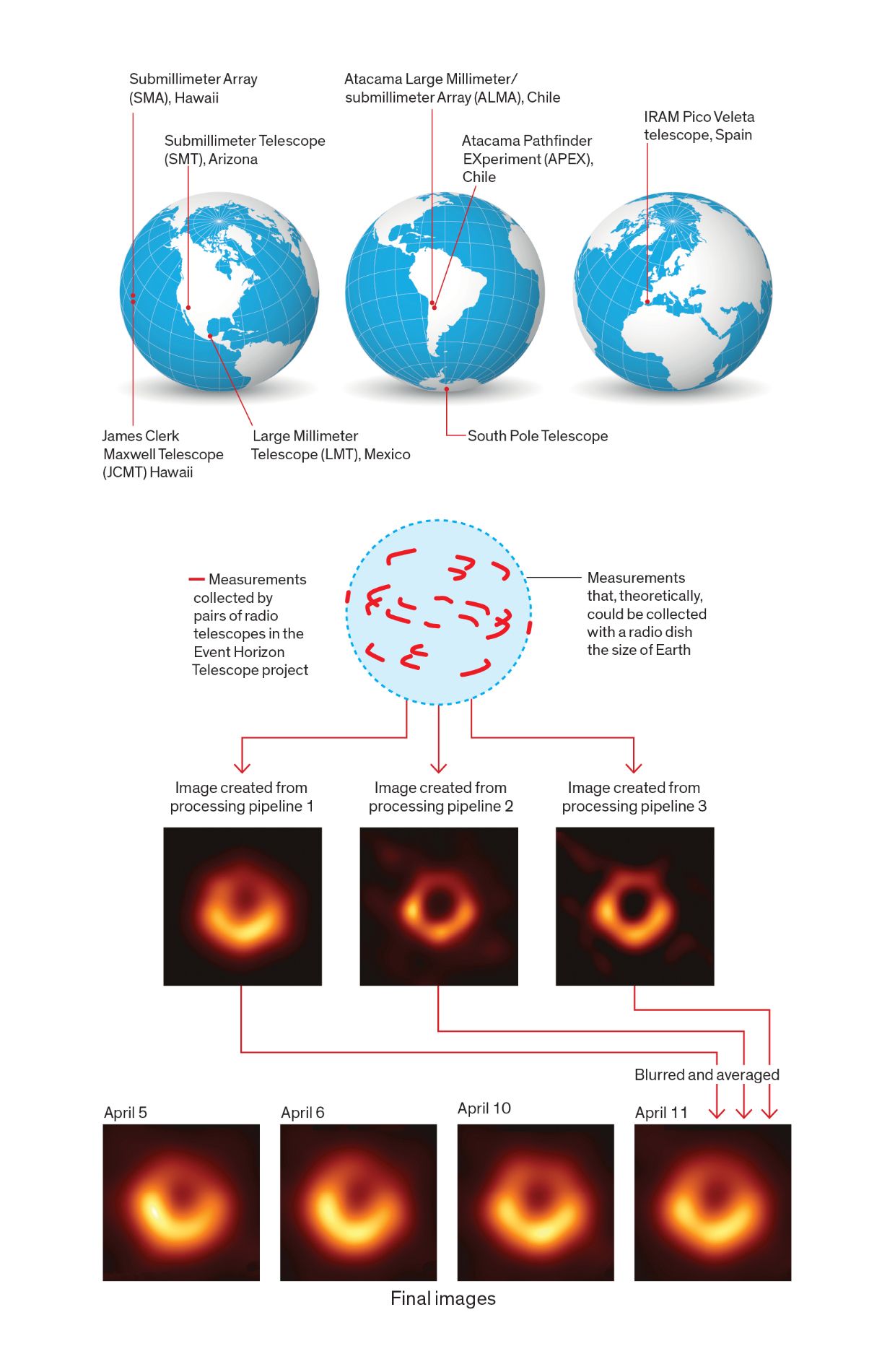

Our group, made up of more than 200 scientists and engineers in 20 countries, combined eight of the world's most sensitive radio telescopes, a network of synchronized atomic clocks, two custom-built supercomputers, and several new algorithms in computational imaging. After more than 10 years of work, this collective effort, known as the Event Horizon Telescope (EHT) project, was finally able to illuminate one of the greatest mysteries of nature.

Within weeks of our announcement (and the publication of six journal articles), an estimated billion-plus people had seen the picture of the light-fringed shadow cast by M87*, a black hole at the center of Messier 87, a galaxy in the Virgo constellation. It is likely among the most labor-intensive scientific images yet created. In recognition of the global teamwork required to combine efforts in black-hole theory and modeling, electronic instrumentation and calibration, and image reconstruction and analysis, the 2020 Breakthrough Prize in Fundamental Physics was awarded to the entire collaboration.

Now we are adding more giant dish antennas to sharpen our view of such objects. Thanks to an infusion of new funding from the U.S. National Science Foundation and others, we have set an ambitious goal: to make a movie of the swirling gravitational monster that forms the black heart of our own Milky Way galaxy.

The existence of black holes was a dubious prediction of the general theory of relativity, which Einstein developed a little over a century ago. For decades afterward, astronomers debated whether massive stars would create black holes when they collapse under their own weight at the end of their lives. Even more mysterious were supermassive black holes, hypothesized to lurk in the hearts of galaxies. Astronomers observed extraordinarily bright, compact objects and powerful galactic-scale jets beaming from the centers of many galaxies (including Messier 87), as well as stars and gas clouds orbiting hidden central objects. Supermassive black holes could account for such observations, and no other explanation seemed very plausible.

By the turn of the 21st century, astronomers had come to believe that black holes must be common. Most black holes are probably far too small and distant for us ever to observe. These "ordinary" black holes form when a star 10 times as massive as the sun or heavier collapses into something so dense that it bends the very fabric of space-time enough to form a spherical trap. Any matter, light, or radiation crossing the edge of this trap, known as the event horizon, disappears forever.

But the gargantuan black holes that we now think inhabit the centers of most galaxies (like the 4-million-solar-mass Sagittarius A*, or Sgr A* for short, cloaked behind a veil of dust in the Milky Way, and the 6.5-billion-solar-mass M87*) are different beasts altogether. Fed by matter and energy spiraling in from their host galaxies over eons, these are the most massive objects known, and they create around them the most extreme conditions found anywhere in the universe.

So says the math of general relativity. And the Nobel Prize-winning detection in 2015 of gravitational waves (essentially, a chirp of rippling space-time created by the whirling merger of two ordinary black holes more than a billion light-years away) offered yet another piece of evidence that black holes are real. It took an incredible feat of technology to "hear" the vibrations of that cosmic crash: two laser interferometers, each 4 kilometers on a side and able to detect a change in the lengths of their arms less than 0.01 percent the width of a proton. But hearing is not seeing.

Photos, clockwise from top left: Helge Rottman; Ana Torres Campos; Babak A. Tafreshi/ESO; Junhan Kim/University of Arizona; Jonathan Weintroub; Salvador Sanchez People and Equipment: The scientific collaboration that resulted in the first images of a black hole involved hundreds of people, giant radio telescopes, and supercomputer facilities spread around the world. In addition to the James Clerk Maxwell Telescope and the South Pole Telescope, six more radio telescopes were involved in the effort to produce pictures of a black hole: the Atacama Large Millimeter/submillimeter Array (ALMA), in Chile [top left]; the Large Millimeter Telescope (LMT), in Mexico [top right]; the IRAM Pico Veleta telescope, in Spain [middle left]; the Atacama Pathfinder EXperiment (APEX), in Chile [middle right]; the Submillimeter Array (SMA), in Hawaii [bottom left]; and the Submillimeter Telescope (SMT) [bottom right], in Arizona.

Encouraged by the success of initial pilot studies in the late 1990s and early 2000s, an international team of astronomers proposed a bold plan to make an image of a supermassive black hole. They believed that advances in electronics now made it possible to see these bizarre objects for the first time and to open a new window on the study of general relativity. Teams from six continents came together to form the Event Horizon Telescope project.

Creating such a picture would require another giant leap in astronomical interferometry. We would have to virtually link observatories across the planet to function together as one giant virtual telescope. It would have the resolving power of a dish almost as wide as Earth itself. With such an instrument, it was hoped, we could finally see a black hole.

While M87* itself is black, it is backlit by a blinding glow of radiation emanating from the material swirling around it. Friction and magnetic forces heat that matter to hundreds of billions of degrees. The result is an incandescent plasma that emits light and radio waves. The massive object bends those rays, and some of them head our way.

A shadow of the black hole, just bigger than the object and its event horizon, is imprinted on that radiation pattern. Measuring the size and shape of this eerie shadow could tell us a lot about M87*, settling arguments about its mass and whether it is a black hole or something more exotic still. Unfortunately, the cores of galaxies are obscured by giant, diffuse clouds of gas and dust, which leave us no hope of seeing M87* with visible light or at the very long wavelengths typically used in radio astronomy.

Those clouds become more transparent, however, at short radio wavelengths of around 1 millimeter; we chose to observe at 1.3 mm. Such radio waves, at the extremely high frequency of 230 gigahertz, also pass through air largely unimpeded, although atmospheric water vapor does attenuate and delay them somewhat in the last few miles of their 55-million-year journey from the periphery of M87* to our radio telescopes on Earth.

The brightness and relative proximity of M87* worked in our favor, but on the other side were some formidable technical challenges, starting with that wobbly signal delay caused by water vapor. Then there was the size problem. Although M87* is ultramassive, it is comparatively small: the shadow this black hole casts is only around the size of our solar system. Resolving it from Earth is like trying to read a newspaper from 5,000 km away. The resolving power of a lens or dish is set by the ratio of the wavelength observed to the instrument's diameter. To resolve M87* using 1.3-mm radio waves, we would need a radio dish 13,000 km across.
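The wavelength-to-diameter ratio can be checked with a few lines of arithmetic. The following is a minimal sketch in Python, assuming the simple approximation that angular resolution is about the wavelength divided by the aperture (it omits the factor of roughly 1.22 that applies to a filled circular dish). The function name `resolution_microarcsec` is made up for this illustration.

```python
import math

# Conversion factor from radians to microarcseconds.
RAD_TO_MICROARCSEC = math.degrees(1.0) * 3600 * 1e6


def resolution_microarcsec(wavelength_m: float, aperture_m: float) -> float:
    """Approximate diffraction-limited resolution, theta ~ wavelength / aperture."""
    return (wavelength_m / aperture_m) * RAD_TO_MICROARCSEC


# 1.3-mm waves observed with an aperture 13,000 km across (figures from the text).
earth_sized = resolution_microarcsec(1.3e-3, 13_000e3)

# The same waves observed with a single 50-meter dish.
single_dish = resolution_microarcsec(1.3e-3, 50.0)

print(f"13,000-km aperture: {earth_sized:.1f} microarcseconds")
print(f"50-m dish:          {single_dish / 1e6:.1f} arcseconds")
```

Under this approximation the Earth-size aperture resolves about 20.6 microarcseconds, while a lone 50-meter dish manages only about 5 arcseconds, several hundred thousand times too coarse.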

Although that might seem like a showstopper, interferometry offers a way around the problem, but only if we can collect pieces of the same radio wave front as it arrives at different times at all our telescopes in far-flung locations, stretching from Hawaii and the Americas to Europe. To do that, we have to digitally time-stamp our measurements and then combine them with enough precision to extract the relevant signals and convert them into an image. This technique is called very-long-baseline interferometry (VLBI), and the members of the Event Horizon Telescope project believed it could be used to image a black hole.

The details proved truly devilish, however. By the time the radio signals from M87* bounce into a receiver connected to one of our 6- to 50-meter dish antennas on Earth, the signal power has dropped to roughly 10⁻¹⁶ W (0.1 femtowatt). That's about a billionth of the strength of the signals a satellite TV dish typically picks up. So the very low signal-to-noise ratio posed one major problem, exacerbated by the fact that our largest single-dish telescope, the 50-meter Large Millimeter Telescope, in Mexico, was still being completed and wasn't yet fully operational when we used it in 2017.

Images: EHT Collaboration Ringing Success: Four teams of researchers, working independently, produced the first images of the M87 black hole. Two of the four imaging teams used traditional algorithms from radio astronomy to produce images of the black hole [top two]. The other two teams used a more modern class of algorithms developed for the Event Horizon Telescope data [bottom two]. While the four images produced by these teams differ in many details, they each show the same fundamental structure: a luminous ring of about 40 microarcseconds in diameter, one that is asymmetrical with the brighter portion at the bottom. The diameter of the black hole shadow is particularly important to astronomers in confirming an earlier estimate of the mass of the object, which appears to be about 6.5 billion times the mass of the sun.

The 1.3-mm wavelength, considerably shorter than the norm in VLBI, also pushed the limits of our technology. The receivers we used converted the 230-GHz signals down to a more manageable frequency of around 2 GHz. But to get as much information as we could about M87*, we recorded both right- and left-hand circular polarizations at two frequencies centered around 230 GHz. As a result, our instrumentation had to sample and store four separate data streams pouring in at the prodigious rate of 32 gigabits per second at each of the participating telescopes.
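To get a feel for that recording rate, here is a short back-of-the-envelope sketch in Python. Two things in it are assumptions rather than facts from the project: that the 32 Gb/s is split evenly across the four streams, and that the rate is being compared with an 8-terabyte drive purely for scale.

```python
GIGABIT = 1e9   # bits
TERABYTE = 1e12  # bytes

rate_bits_per_s = 32 * GIGABIT  # per telescope, from the text
streams = 4                     # 2 polarizations x 2 frequency bands

# Assumption: the total rate is shared equally among the four streams.
per_stream_gbps = rate_bits_per_s / streams / GIGABIT

# Convert to storage terms.
bytes_per_s = rate_bits_per_s / 8
tb_per_hour = bytes_per_s * 3600 / TERABYTE

# Assumption: an 8-TB drive, used here only as a yardstick.
drives_per_hour = tb_per_hour / 8.0

print(f"{per_stream_gbps:.0f} Gb/s per stream")
print(f"{tb_per_hour:.1f} TB per telescope per hour")
print(f"{drives_per_hour:.1f} eight-terabyte drives filled per hour")
```

At this rate a single telescope writes 14.4 terabytes every hour, which is why the recordings had to be stored on banks of hard drives and shipped physically rather than sent over a network.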

Interferometry works only if you can precisely align the peaks in the signal recorded at each pair of telescopes, so the short wavelength also required us to install hydrogen-maser atomic clocks at each site that could sample the signal with subpicosecond accuracy. We used GPS signals to time-stamp the observations.

On four nights in April 2017, everything had to come together. Seven giant telescopes (some of them multidish arrays) pointed at the same minuscule point in the sky. Seven maser clocks locked into sync. A half ton of helium-filled, 6- to 8-terabyte hard drives started spinning. I, along with a few dozen other bleary-eyed scientists, sat at our screens in mountaintop observatories hoping that clouds would not roll in. Because of the way interferometry works, we would immediately lose 40 percent of our data if cloud cover or technical issues forced even one of the telescopes to drop out.

But the heavens smiled on us. By the end of the week, we were preparing 5 petabytes of raw data for shipment to MIT Haystack Observatory and the Max Planck Institute for Radio Astronomy, in Germany. There, researchers, using specially designed supercomputers to correlate the signals, aligned data segments in time. Then, to counter the phase-distorting influence of turbulent atmosphere above each telescope, we used purpose-built adaptive algorithms to perform even finer alignment, matching signals to within a trillionth of a second.

Now we faced another giant challenge: distilling all those quadrillions of bytes of data down to kilobytes of actual information that would go into an image we could show the world.

Nowhere in those petabytes of data were numbers we could simply plot as a picture. The "lens" of our telescope was a tremendous amount of software, which drew heavily on open-source packages and now is available online so that anyone can replicate or improve on our results.

Radio interferometry is relatively straightforward when you have many telescopes close together, aimed at a bright source, and observing at long wavelengths. The rotation of Earth during the night causes the baselines connecting pairs of the telescopes to sweep through a range of angles and effective lengths, filling in the space of possible measurements. After the data is collected, you line up the signals, extract a two-dimensional spatial-frequency pattern from the variations in amplitude and phase among them, and then do an inverse Fourier transform to convert the 2D frequency pattern into a 2D picture.
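The final step of that recipe, going from a partially sampled 2D spatial-frequency pattern back to a picture, can be illustrated with a toy example. This is a minimal sketch using NumPy, not the processing used by any real array: the ring-shaped "sky," the 64-by-64 grid, and the random 15 percent sampling are all made up for illustration, and real baselines trace arcs through the frequency plane rather than landing on random grid points.

```python
import numpy as np

n = 64
yy, xx = np.mgrid[:n, :n]
radius = np.hypot(xx - n / 2, yy - n / 2)

# Toy sky: a soft ring (purely illustrative).
sky = np.exp(-0.5 * ((radius - 12.0) / 2.0) ** 2)

# An ideal interferometer measures the 2D Fourier transform of the sky.
visibilities = np.fft.fft2(sky)

# Keep only a random subset of spatial frequencies to mimic incomplete coverage.
rng = np.random.default_rng(0)
mask = rng.random((n, n)) < 0.15
mask[0, 0] = True  # keep the zero-frequency term (total brightness)

# Each baseline also supplies the conjugate point at (-u, -v), so make the
# sampling pattern symmetric under that reflection.
mask = mask | np.roll(mask[::-1, ::-1], (1, 1), axis=(0, 1))

# Inverse transform with full coverage recovers the sky exactly;
# with partial coverage it gives a distorted image with sidelobes.
full_image = np.fft.ifft2(visibilities).real
dirty = np.fft.ifft2(visibilities * mask)
dirty_image = dirty.real

print("fraction of frequencies sampled:", mask.mean())
print("max error, full coverage:   ", np.abs(full_image - sky).max())
print("max error, partial coverage:", np.abs(dirty_image - sky).max())
```

With every frequency sampled the inverse transform returns the original ring. With gaps, the result is corrupted by artifacts, and the sparser the coverage, the worse they become.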

VLBI is a lot harder, especially when observing with just a handful of dishes at a short wavelength, as we were for M87*. Perfect calibration of the system was impossible, though we used an eighth telescope at the South Pole to help with that. Most problematic were differences in weather, altitude, and humidity at each telescope. The atmospheric noise scrambles the phase of the incoming signals.

Crunching Numbers Into Pictures The measurements taken to construct these images of a black hole came from seven radio telescopes spread around the world. An eighth (at the South Pole) aided in the calibration of these measurements. Newly developed algorithms and supercomputers were used to correlate the observed signals to make measurements and reconstruct the images from these measurements. Images: EHT Collaboration

The problem we faced in observing with just seven telescopes and scrambled phases is a bit like trying to make out the tune of a duet played on a piano on which most of the keys are broken and the two players start out of sync with each other. That's hard, but not impossible. If you know what songs typically sound like, you can often still work out the tune.

It also helps that the noise scrambles the signal in an organized way that allows us to exploit a terrific trick called closure quantities. By multiplying correlated data from each pair in a trio of telescopes in the right order, we are able to cancel out a big chunk of the noise, though at the cost of adding some complexity to the problem.
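One common closure quantity is the closure phase, and a small numerical example shows why the trick works. The sketch below, in Python with NumPy, assumes the standard simplified model in which the atmosphere above each telescope adds an unknown phase error to every signal that telescope records. The three "true" measurements and the helper names `corrupt` and `closure_phase` are invented for this illustration.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical true complex measurements on the three baselines of a
# telescope triangle, indexed by (telescope_i, telescope_j).
true_vis = {
    (0, 1): 1.0 * np.exp(1j * 0.3),
    (1, 2): 0.8 * np.exp(-1j * 1.1),
    (2, 0): 0.5 * np.exp(1j * 2.0),
}

# Unknown atmospheric phase error at each telescope, in radians.
station_phase = rng.uniform(-np.pi, np.pi, size=3)


def corrupt(vis, phases):
    """Baseline (i, j) picks up the phase difference phases[i] - phases[j]."""
    return {(i, j): v * np.exp(1j * (phases[i] - phases[j]))
            for (i, j), v in vis.items()}


def closure_phase(vis):
    """Phase of the product taken around the closed triangle 0 -> 1 -> 2 -> 0."""
    bispectrum = vis[(0, 1)] * vis[(1, 2)] * vis[(2, 0)]
    return np.angle(bispectrum)


observed = corrupt(true_vis, station_phase)

print("true phase on baseline 0-1:    ", np.angle(true_vis[(0, 1)]))
print("observed phase on baseline 0-1:", np.angle(observed[(0, 1)]))
print("true closure phase:            ", closure_phase(true_vis))
print("observed closure phase:        ", closure_phase(observed))
```

Going around the triangle, each telescope's error enters once with a plus sign and once with a minus sign, so the errors cancel in the product. The price is that three scrambled phases collapse into one reliable number, so the image must be recovered from less direct information.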

The longest and shortest baselines in our telescope network set the limits of our resolution and field of view, and they were limited indeed. In effect, we could reconstruct a picture 160 microarcseconds wide (equivalent to 44 billionths of a degree on the sky) with roughly 20 microarcseconds of resolution. Literally an infinite number of images could fit such a data pattern. Somehow we would have to pick, and decide how confident to be in our choice.

To avoid fooling ourselves, we created lots of images from the M87* data, in lots of different ways, and developed a rigorous process (well beyond what is typically done in radio astronomy) to determine whether our reconstructions were reliable. Every step of this process, from initial correlation to final interpretation, was tested in multiple ways, including by using multiple software pipelines.

Before we ever started collecting data, we created a computer simulation of our telescope network and all the various sources of error that would affect it. Then we fed into this simulation a variety of synthetic images, some derived from astrophysical models of black holes and others we had completely made up, including one loosely based on Frosty the Snowman.

Next, we asked various groups to reconstruct images from the synthetic observations generated by the simulation. So we turned images into observations and then let others turn those back into images. The groups all produced pictures that were fuzzy and a bit off, but in the ballpark. The similarities and differences among those pictures taught us which aspects of the image were reliable and which spurious.

In June 2018, researchers on the imaging team split into four squads to work in complete isolation on the real data we had collected in 2017. For seven weeks, each squad worked incommunicado to make the best picture it could of M87*.

Two squads primarily used an updated version of an iterative procedure, known as the CLEAN algorithm, which was developed in the 1970s and has since been the standard tool for VLBI. Radio astronomers trust it, but in cases like ours, where the data is very sparse, the image-generation process often requires a lot of manual intervention.

Drawing on my experience with image reconstruction in other fields, my collaborators and I developed a different approach for the other two squads. It is a kind of forward modeling that starts with an image (say, a fuzzy blob) and uses the observational data to modify this starting guess until it finds an image that looks like an astronomical picture and has a high probability of producing the measurements we observed.
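The general flavor of forward modeling can be shown with a deliberately simplified stand-in. The sketch below, in Python with NumPy, is not the EHT imaging software: it assumes a tiny 16-by-16 image, noisy measurements of 60 randomly chosen Fourier components, a plain squared-brightness penalty standing in for "looks like an astronomical picture," and ordinary projected gradient descent. All names and parameter values are made up for illustration.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 16
yy, xx = np.mgrid[:n, :n]
radius = np.hypot(xx - n / 2, yy - n / 2)

# Hidden "true" image: a small ring (used only to simulate measurements).
truth = np.exp(-0.5 * ((radius - 4.0) / 1.0) ** 2).ravel()

# Forward model: each row of A maps an image to one Fourier component.
n_meas = 60
u = rng.integers(0, n, n_meas)
v = rng.integers(0, n, n_meas)
A = np.exp(-2j * np.pi * (np.outer(u, xx.ravel()) + np.outer(v, yy.ravel())) / n)

# Simulated noisy measurements.
noise = 0.01 * (rng.standard_normal(n_meas) + 1j * rng.standard_normal(n_meas))
data = A @ truth + noise

lam = 0.1  # weight of the regularizer (illustrative value)


def objective(x):
    """Data misfit plus a simple penalty that discourages wild images."""
    return np.sum(np.abs(A @ x - data) ** 2) + lam * np.sum(x ** 2)


def gradient(x):
    return 2 * np.real(A.conj().T @ (A @ x - data)) + 2 * lam * x


# Step size chosen from the largest singular value of A so each step is safe.
step = 1.0 / (2 * (np.linalg.norm(A, 2) ** 2 + lam))

# Starting guess: a fuzzy blob.
image = np.exp(-0.5 * (radius / 5.0) ** 2).ravel()
history = [objective(image)]
for _ in range(500):
    # Move downhill, then clip because brightness cannot be negative.
    image = np.clip(image - step * gradient(image), 0.0, None)
    history.append(objective(image))

image = image.reshape(n, n)
print(f"objective: {history[0]:.2f} at start, {history[-1]:.4f} at end")
```

Each pass pushes the guess toward an image whose predicted measurements match the data while the penalty and the nonnegativity constraint keep it physically sensible. Real imaging pipelines use richer penalties and fit closure quantities rather than raw Fourier components, but the loop has the same shape.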

I'd seen this technique work well in other contexts, and we had tested it countless times with synthetic EHT data. Still, I was stunned when I fed the M87* data into our software and, within minutes, an image appeared: a fuzzy, asymmetrical ring, brighter on the bottom. I couldn't help worrying, though, that the other groups might come up with something quite different.

On 24 July 2018, a group of about 40 EHT members reconvened in a conference room in Cambridge, Mass. We each put up our best images, and everyone immediately started clapping and laughing. All four were rings of about the same diameter, asymmetrical, and brighter on the bottom.

We knew then that we were going to succeed, but we still had to demonstrate that we hadn't all just injected a human bias favoring a ring into our software. So we ran the data through three separate imaging pipelines and performed image reconstruction with a wide range of prototype images of the kind that we worried might fool us. In one of the imaging pipelines, we ran hundreds of thousands of simulations to systematically select roughly 1,500 of the best settings.

At the end, we took a high-scoring image from each of the three pipelines, blurred them to the same resolution, and took the average. The resulting picture made the front page of newspapers around the world.

Photo: Chi-Kwan Chan On the Same Page: The author reveals initial results of the four imaging teams, which to their great satisfaction produced similar images.

A decade or two from now, astronomers will no doubt look back at this first snapshot of a black hole and consider it a milestone, but they'll also smile at how indistinct and uncertain (and unmoving) it is compared with what they will probably be able to do. Although we are confident in the asymmetry of the ring and its size, roughly 40 microarcseconds in diameter, the fine structure in that image should be taken with a grain of salt.

But we did see a ring and the shadow of the black hole! That in itself is astonishing.

Measurements of that shadow add a lot of weight to the argument that M87* has a mass equal to 6.5 billion suns, consistent with the estimate astronomers had set by measuring the speed of stars circling the black hole. (In contrast, estimates made from the complicated effects of M87* on nearby gas were much lower: around 3.5 billion solar masses.) The size of the ring is also large enough to rule out speculation that M87* is not a supermassive black hole but rather a wormhole or a naked singularity, even stranger objects that appear to be consistent with general relativity but have never been observed.

Perhaps equally important, our initial success gives us good reason to believe that with further improvements to both the telescope network and the software, we will be able to image Sgr A* at the center of the Milky Way. Our nearest supermassive black hole is only a few hundred times as bright as the sun, and it is less than a thousandth as massive as M87*. But because it is 2,000 times closer than M87*, it would appear a little larger to us than M87*.
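The "a little larger" claim follows from simple proportions, which the short Python sketch below works through. It assumes that the apparent size of a black hole's shadow scales with its mass divided by its distance, and it uses only the rounded figures quoted in this article (4 million and 6.5 billion solar masses, a factor of 2,000 in distance, and a 40-microarcsecond ring for M87*), so the result is a rough estimate rather than a prediction.

```python
# Rounded figures quoted in the article.
mass_sgr_a = 4e6        # solar masses
mass_m87 = 6.5e9        # solar masses
distance_ratio = 2000   # M87* is about 2,000 times farther away than Sgr A*

# Assumption: apparent shadow size is proportional to mass / distance.
mass_ratio = mass_sgr_a / mass_m87
size_ratio = mass_ratio * distance_ratio

# Scale the measured M87* ring diameter by that ratio.
m87_ring_microarcsec = 40.0
sgr_a_estimate = m87_ring_microarcsec * size_ratio

print(f"mass ratio (Sgr A* / M87*): {mass_ratio:.5f}")
print(f"apparent size ratio:        {size_ratio:.2f}")
print(f"implied Sgr A* ring:        {sgr_a_estimate:.0f} microarcseconds")
```

The mass ratio is about 0.0006, less than a thousandth, but the factor of 2,000 in distance more than compensates, leaving Sgr A* appearing roughly 20 percent larger on the sky.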

The biggest challenge in imaging Sgr A* is the speed at which it evolves. Blobs of plasma orbit M87* every couple of days, whereas those around Sgr A* complete an orbit every few minutes. So our goal is not to snap a still image of Sgr A* but to make a crude movie of it spinning like a billion-degree dervish at the center of the galaxy. This could be the next milestone in our quest to further constrain Einstein's theory of gravity, or point to physics beyond it.

And there could be practical spinoffs. The methods we are developing to make movies of Sgr A* are strikingly similar to those needed to make a medical MRI of a child squirming in a scanner or to image subterranean movements during an earthquake.

For future observations, we expect to use 11 or more telescopes (including a 12-meter dish in Greenland, an array of a dozen 15-meter dishes in the French Alps, and one of Caltech's radio dishes in Owens Valley, Calif.) to increase the number of baselines. We have also doubled the data-sampling rate from 32 Gb/s to 64 Gb/s by expanding the range of radio frequencies we record, which will strengthen our signals and eventually allow us to connect smaller dishes to the network. Together, these upgrades will boost the amount of data we collect by an order of magnitude, to about 100 petabytes a session.

And if all continues to go well, we hope that in the years or decades ahead the reach of our computational telescope will grow beyond the bounds of Earth itself, to include space-based radio telescopes in orbit. Adding fast-moving spacecraft into our existing VLBI network would be a tremendous challenge, of course. But for me, that is part of the appeal.

This article appears in the February 2020 print issue as "Portrait of a Black Hole."

About the Author: Katherine (Katie) Bouman is an assistant professor in the departments of electrical engineering and of computing and mathematical sciences at Caltech.