Twitter’s manipulated media policy will remove harmful tweets & voter suppression, label others

Twitter today is announcing the official version of its "deepfake" and manipulated media policy, which largely involves labeling tweets and warning users of manipulated, deceptively altered or fabricated media - not, in most cases, removing them. Tweets containing manipulated or synthetic media will only be removed if they're likely to cause harm, the company says.

However, Twitter's definition of "harm" goes beyond physical harm, like threats to a person's or group's physical safety or the risk of mass violence or civil unrest. Also included in the definition are threats to the privacy of a person or group, or to their ability to freely express themselves or participate in civic events.

That means the policy covers things like stalking, unwanted or obsessive attention and targeted content containing tropes, epithets or material intended to silence someone. And notably, given the impending U.S. presidential election, it also includes voter suppression or intimidation.

An initial draft of Twitter's policy was first announced in November. At the time, Twitter said it would place a notice next to tweets sharing synthetic and manipulated media, warn users before they shared those tweets and include informational links explaining why the media was believed to be manipulated. This, essentially, is now confirmed as the official policy but is spelled out in more detail.

Twitter says it collected user feedback through the hashtag #TwitterPolicyFeedback ahead of crafting the new policy, gathering more than 6,500 responses as a result. The company prides itself on engaging its community when making policy decisions, but given Twitter's slow-to-flat user growth over the years, it may want to try consulting with people who have so far refused to join Twitter. This would give Twitter a wider understanding as to why so many have opted out and how that intersects with its policy decisions.

The company also says it consulted with a global group of civil society and academic experts, such as Witness, the U.K.-based Reuters Institute and researchers at New York University.

Based on feedback, Twitter found that a majority of users (70%) wanted Twitter to take action on misleading and altered media, but only 55% wanted all media of this sort removed. Dissenters, as expected, cited concerns over free expression. Most users (90%) only wanted manipulated media considered harmful to be removed. A majority (75+%) also wanted Twitter to take further action on the accounts sharing this sort of media.

Unlike Facebook's deepfake policy, which ignores disingenuous doctoring like cuts and splices to videos and out-of-context clips, Twitter's policy isn't limited to a specific technology, such as AI-enabled deepfakes. It's much broader.

"Things like selected editing or cropping or slowing down or overdubbing, or manipulation of subtitles would all be forms of manipulated media that we would consider under this policy," confirmed Yoel Roth, head of site integrity at Twitter.

"Our goal in making these assessments is to understand whether someone on Twitter who's just scrolling through their timeline has enough information to understand whether the media being shared in a tweet is or isn't what it claims to be," he explained.

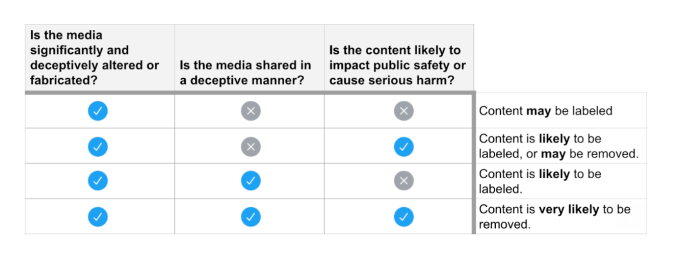

The policy uses three tests to decide how Twitter will take action on manipulated media. It first confirms the media itself is synthetic or manipulated. It then assesses whether the media is being shared in a deceptive manner. And finally, it evaluates the potential for harm.

Media is considered deceptive if it could confuse others or lead to misunderstandings, or if it tries to deceive people about its origin - like media that claims it's depicting reality, but is not.

This is where the policy gets a little messy, as Twitter will have to examine the further context of this media, including not only the tweet's text, but also the media's metadata, the Twitter user's profile information, including websites linked in the profile that are sharing the media, and websites linked in the tweet itself. This sort of analysis can take time and isn't easily automated.

If the media is also determined to cause serious harm, as described above, it will be removed.

Twitter, though, has left itself a lot of wiggle room in crafting the policy, using words like "may" and "likely" to indicate its course of action in each scenario. (See rubric below.)

For example, manipulated media "may be" labeled, and manipulated and deceptive content is "likely to be" labeled. Manipulated, deceptive and harmful content is "very likely" to be removed. This sort of wording gives Twitter leeway to make policy exceptions, without actually breaking policy as it would if it used stronger language like "will be removed" or "will be labeled."
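The rubric can be read as a small decision table over the three tests. The following is a minimal sketch of that reading, not Twitter's actual enforcement logic: the `Assessment` class, the `likely_action` function and the returned strings are hypothetical names chosen for illustration, and only the combinations described in this article are encoded. The case of media that is manipulated and harmful but not shared deceptively isn't spelled out here, so the sketch says so rather than guessing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Assessment:
    """Hypothetical container for the outcome of the three tests."""
    manipulated: bool  # test 1: is the media synthetic or manipulated?
    deceptive: bool    # test 2: is it shared in a deceptive manner?
    harmful: bool      # test 3: is it likely to cause serious harm?


def likely_action(a: Assessment) -> str:
    """Map the three test results to the hedged outcome wording in the rubric."""
    if not a.manipulated:
        # The first test fails, so this policy does not apply
        # (other Twitter Rules may still apply).
        return "outside this policy"
    if a.deceptive and a.harmful:
        return "very likely removed"
    if a.deceptive:
        return "likely labeled"
    if a.harmful:
        # Assumption: this combination is not described in the article.
        return "not specified in the article"
    return "may be labeled"


if __name__ == "__main__":
    print(likely_action(Assessment(manipulated=True, deceptive=True, harmful=False)))
```

Returning hedged strings instead of a definite action mirrors the policy's own wording: each outcome is a likelihood, which is what leaves room for exceptions.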

That said, Twitter's manipulated media policy doesn't exist in a vacuum. Some of the worst types of manipulated media, like non-consensual nudity, were already banned by the Twitter Rules. The new policy, then, isn't the only thing that will be considered when Twitter makes a decision.

Today, Twitter is also detailing how manipulated media will be labeled. In the case where the media isn't removed because it doesn't "cause harm," Twitter will add a warning label to the tweet along with a link to additional explanations and clarifications, via a landing page that offers more context.

A fact-checking component will also be a part of this system, led by Twitter's curation team. In the case of misleading tweets, Twitter aims to present, directly in line with those tweets, facts from news organizations, experts and others who are talking about what's happening.

Twitter will also show a warning label to people before they retweet or like the tweet, may reduce the visibility of a tweet and may prevent it from being recommended.

One drawback to Twitter's publish-in-public platform is that tweets can go viral and spread very quickly, while Twitter's ability to enforce its policy can lag behind. Twitter isn't proactively scouring its network for misinformation in most cases - it's relying on its users reporting tweets for review.

And that can take time. Twitter has been criticized over the years for its failures to respond to harassment and abuse, despite policies to the contrary, and its struggle to remove bad actors. In other words, Twitter's intentions with regard to manipulated media may be spelled out in this new policy, but Twitter's real-world actions may still be found lacking. Time will tell.

We know that some Tweets include manipulated photos or videos that can cause people harm. Today we're introducing a new rule and a label that will address this and give people more context around these Tweets pic.twitter.com/P1ThCsirZ4

- Twitter Safety (@TwitterSafety) February 4, 2020

"Twitter's mission is to serve the public conversation. As part of that, we want to encourage healthy participation in that conversation. Things that distort or distract from what's happening threaten the integrity of information on Twitter," said Twitter VP of Trust & Safety, Del Harvey. "Our goal is really to provide people with more context around certain types of media they come across on Twitter and to ensure they're able to make informed decisions around what they're seeing," she added.