Meet Haru, the Unassuming Big-Eyed Robot Helping Researchers Study Social Robotics

This is a guest post. The views expressed here are solely those of the author and do not represent positions of IEEE Spectrum or the IEEE.

Honda Research Institute's (HRI) experimental social robot Haru was first introduced at the ACM/IEEE Human-Robot Interaction conference in 2018 [1]. The robot is designed as a platform to investigate social presence and emotional and empathetic engagement for long-term human interaction. Envisioned as a multimodal communicative agent, Haru interacts through nonverbal sounds (paralanguage), eye, face, and body movements (kinesics), and voice (language). While some of Haru's features connect it to a long lineage of social robots, others distinguish it and suggest new opportunities for human-robot interaction.

Haru is currently in its first iteration, with plans underway for future development. Current research with Haru is conducted with core partners of the Socially Intelligent Robotics Consortium (SIRC), described in more detail below, and concentrates on Haru's potential to communicate across the three modalities mentioned above (language, paralanguage, and kinesics). Long term, we hope Haru will drive research into robots as a new form of companion species and as a platform for creative content.

Image: Honda Research Institute

Communication with Haru

The first Haru prototype is a relatively simple communication device: an open-ended platform through which researchers can explore the mechanisms of multimodal human-robot communication. Researchers deploy various design techniques, technologies, and interaction theories to develop Haru's basic skillset for conveying information, personality, and affect. Currently, researchers are developing Haru's interaction capabilities to create a new form of telepresence embodiment that breaks away from the traditional notion of a tablet-on-wheels, while also exploring ways for the robot to communicate with people more directly.

Haru as a mediator

Haru's unique form factor and its developing interaction modalities enable its telepresence capabilities to extend beyond what is possible when communicating through a screen. Ongoing research with partners involves mapping complex and nuanced human expressions to Haru. One aspect of this work is developing an affective language that takes advantage of Haru's LCD-screen eyes, LED mouth, neck and eye motions, and rotating base to express emotions of varying type and intensity. Another line of ongoing research is developing perception capabilities that let Haru recognize different human emotions and mimic the remote person's affective cues and behaviors.

From a practical telepresence perspective, using Haru in this way can, on the one hand, add to the enjoyment and clarity with which affect can be conveyed and read at a distance. On the other hand, Haru's interpretation of emotional cues can help align the expectations of sender and receiver. For example, Haru could be used to enhance communication in a multicultural context by displaying culturally appropriate social cues without the operator controlling it. These future capabilities are being put into practice through the development of "robomoji/harumoji," a hardware equivalent of emoji in which Haru acts as a telepresence robot that transmits a remote person's emotions and actions.

Haru as a communicator

Building upon the work on telepresence, we will further develop Haru as a communicative agent through the development of robot agency [2] consistent with its unique design and behavioral repertoire, and possibly with its own character. Ongoing research involves kinematic modeling and motion design and synthesis. We are also developing appropriate personalities for Haru with the help of human actors, who are helping us create a cohesive verbal and nonverbal language for Haru that expresses its capabilities and can guide users to interact with it in appropriate ways.

In this case, Haru will autonomously frame its communication to fit that of its co-present communication partner. In this mode, the robot has more freedom to alter and interpret expressions for communication across the three modalities than in telepresence mode. The goal will be to communicate so that the receiving person not only understands the information being conveyed, but can also infer Haru's own behavioral state and presence. Ideally, this will make the empathic relation between Haru and the human bi-directional.

Hybrid realism

Designing for both telepresence and direct communication means that Haru must be able to negotiate between times when it is communicating on behalf of a remote person, and times when it is communicating for itself. In the future, researchers will explore how teleoperation can be mixed with a form of autonomy, such that people around Haru know whether it is acting as a mediator or as an agent as it switches between "personalities." Although challenging, this will open up exciting new opportunities to study the effect of social robots embodying other people, or even other robots.

Image: Honda Research Institute

Robots as a companion species

The design concept of Haru focuses on creating an emotionally expressive embodied agent that can support long-term, sustainable human-robot interaction. People already have these kinds of relationships with their pets. Pets are social creatures that people relate to and bond with, even without sharing the same types of perception, comprehension, and expression. This bodes well for robots such as Haru, which similarly may not perceive, comprehend, or express themselves in fully human-like ways, but which nonetheless can encourage humans to empathize with them. This may also prove to be a good strategy for grounding human expectations while maximizing emotional engagement.

Recent research [3, 4] has shown that in some cases people can develop emotional ties to robots. Using Haru, researchers can investigate whether human-robot relations can become even more affectively rich and meaningful through informed design and better interaction strategies, as well as through more constant and varied engagement with users.

Photo: Evan Ackerman/IEEE Spectrum Haru is sad.

Ongoing research with partners involves interaction-based dynamic interpretation of trust, the design and maximization of likable traits through empathy, and investigation of how people of different ages and cognitive abilities make sense of and interact with Haru. To carry out these tasks, our partners have been deploying Haru in public spaces such as a children's science museum, an elementary school, and an intergenerational daycare institution.

In the later stages of the project, we hope to look at how a relationship with Haru might position the robot as a new kind of companion. Haru is not intended to be a replacement for a pet or a person, but rather an entity that people can bond and grow with in a new way: a new "companion species" [5]. Sustained interaction with Haru will also allow us to explore how bonding can result in a relationship and a feeling of trust. We argue that a trusting relationship requires the decisional freedom not to trust, and would therefore be one in which neither human nor robot is always subordinate to the other, but rather one that supports a flexible bi-directional relationship enabling cooperative behavior and growth.

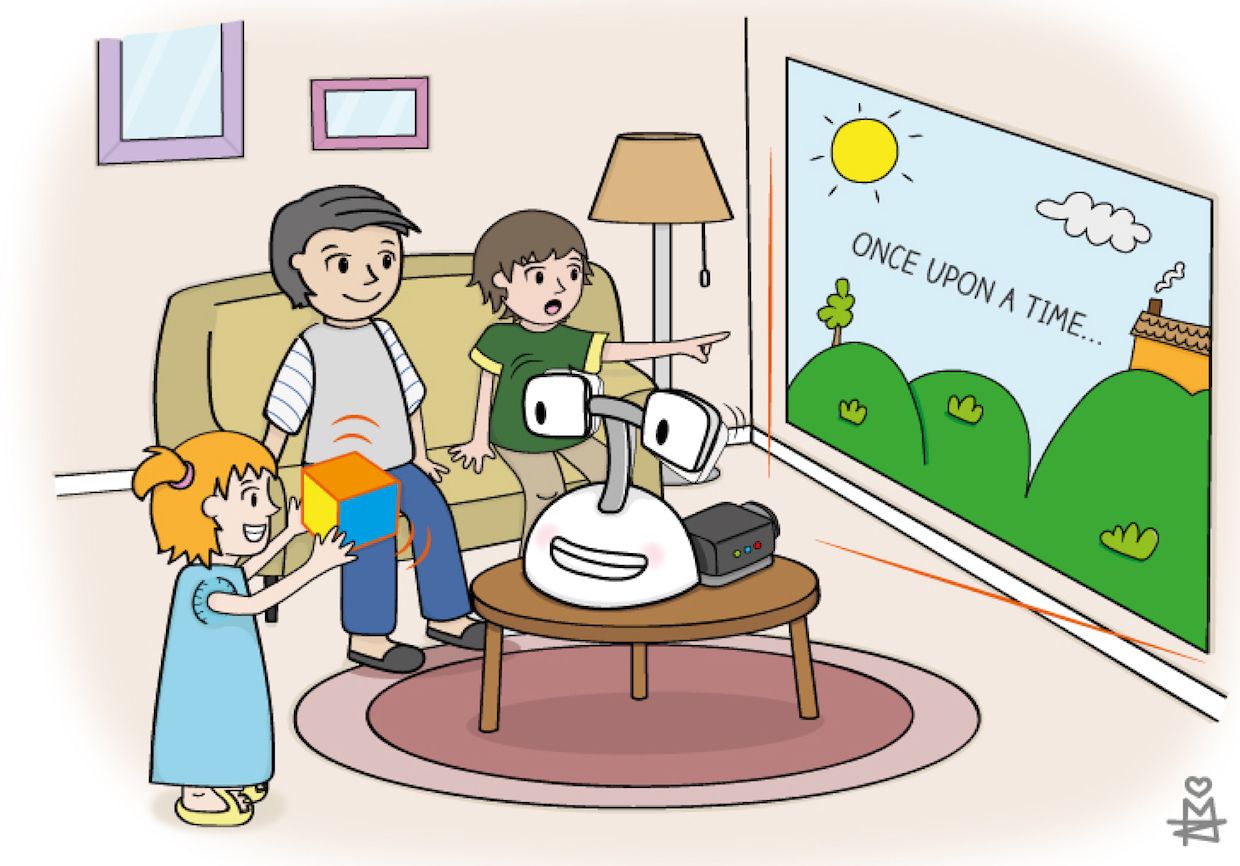

Image: Honda Research Institute

Robots as a platform for creative content

Social robots should not be designed as mere extensions to speech-centric smart devices; we need to develop meaningful and unique use cases suited to the rich modalities robots offer. For Haru, such use cases must be rooted in its rich three-way communicative modality and its unique physical and emotional affordances. Researchers plan to maximize Haru's communication and interaction potential by developing social apps and creative content that convey information and evoke emotional responses that can enhance people's perceptions of the robot as a social presence.

The Haru research team (consisting of both HRI Japan and Socially Intelligent Robotics Consortium partners) will develop apps that meet societal needs through Haru's emotional interactions with people. We envision three main pillars of research activity: entertainment and play, physically enriched social networking, and mental well-being. Entertainment and play will encompass interactive storytelling, expressive humor, and collaborative gaming, among others. Physically enriched social networking will build upon the telepresence capability of Haru. Mental well-being will explore the beneficial role of Haru's physical manifestation of emotion and affection for coaching.

Numerous smartphone apps [6] explore this application in digital and unimodal communication. We believe Haru's much richer expressiveness and physical presence will create exciting opportunities to qualitatively improve such services. Current research with partners involves collaborative problem solving and developing interactive applications for entertainment and play. Haru is currently able to play rock-paper-scissors and Tower of Hanoi with people, and both games are being tested in an elementary school setting. Another capability being developed for Haru is storytelling play, in which the robot can collaborate with people to create a story.

Haru's research team also sees the importance of both automated and curated creative multimedia content to complement such social applications. Content drives the success of smartphones and gaming consoles, but has not yet been widely considered for robots. The team will therefore investigate how content can contribute to social human-robot interaction. Haru, by delivering creative content using its three-way communication modality, will blur the line between the physical and the animated, reinforcing its presence alongside the content it conveys.

In the context of Haru, creativity will be cooperative. Through sustained interaction, Haru will be able to motivate, encourage, and illustrate alternatives in creative tasks (e.g., different potential storylines in storytelling or game play) depending on the cognitive state of its interaction partner. The mixture of digital visualization (with a mini projector, for example) and physical interaction opens up new opportunities for cooperative creativity. Ongoing research with partners involves automatic affect generation for Haru, models for generating prose content for storytelling in different genres, and affectively rich audio and text-to-speech to support social apps.

Finally, Haru will be a networked device embedded into a personal or office-based digital infrastructure. On the one hand, Haru can serve as a physical communication platform for other robots (e.g., robotic vacuum cleaners that typically have no significant communication channels), and on the other hand, Haru can use the additional physical capabilities of other devices to compensate for its own limitations.

Photo: Evan Ackerman/IEEE Spectrum Haru sees you!

Research with Haru

Realizing the design and development goals for Haru is an enormous task, only possible through the collaboration of a multidisciplinary group of experts in robotics, human-robot interaction, design, engineering, psychology, anthropology, philosophy, cognitive science, and other fields. To help set and achieve the goals described above, over the past two years academic researchers in Japan, Australia, the United States, Canada, and Europe have been spending their days with Haru.

This global team of roboticists, designers, and social scientists calls itself the Socially Intelligent Robotics Consortium (SIRC) and is working together to expand and actualize the potential of human-robot communication. Under the umbrella of the consortium, Haru has been adopted as a common platform enabling researchers to share data, research methods, results, and evaluation tools. Working as a partnership, the consortium can tackle the complex problem of developing empathic robotic mediators, companions, and content platforms by pursuing independent tasks under a common theme.

Social robotics is a promising area of research, but in a household crowded with smart devices and appliances, it is important to re-think the role of social robots to find their niche amidst competing and overlapping products while keeping users engaged over months and years instead of days and weeks. The development of Haru offers the opportunity to experiment with flexible and varied ways to communicate and interact with social robots as a new kind of trustful companion, not just based on functionality, but on emotional connection and the sense of unique social presence.

Research with Haru will explore exciting applications driven by interaction, cooperation, creativity and design, suspending people's disbelief so that each experience with Haru is a step in a shared journey, demonstrating how people and robots might grow and find common purpose and enjoyment together in a hybrid human-robot society.

Dr. Randy Gomez is a senior scientist at Honda Research Institute Japan. He oversees the embodied communication research group, which explores the synergy between communication and interaction in order to create meaningful experiences with social robots.

References

1. Gomez R., Szapiro D., Galindo K., & Nakamura K., "Haru: Hardware Design of an Experimental Tabletop Robot Assistant," HRI 2018: 233-240.

2. Gunkel D., "Robot Rights," The MIT Press, 2018.

3. Haraway D., "The Companion Species Manifesto: Dogs, People, and Significant Otherness," Prickly Paradigm Press, 2003.

4. Weiss A., Wurhofer D., & Tscheligi M., "I love this dog - children's emotional attachment to the robotic dog AIBO," International Journal of Social Robotics, 2009.

5. Sabanovic S., Reeder S. M., & Kechavarzi B., "Designing robots in the wild: In situ prototype evaluation for a break management robot," Journal of Human-Robot Interaction, 2014.

6. Inkster B., Shubhankar S., & Vinod S., "An empathy-driven, conversational artificial intelligence agent (Wysa) for digital mental well-being: real-world data evaluation mixed-methods study," JMIR mHealth and uHealth, 2018.