How the Digital Camera Transformed Our Concept of History

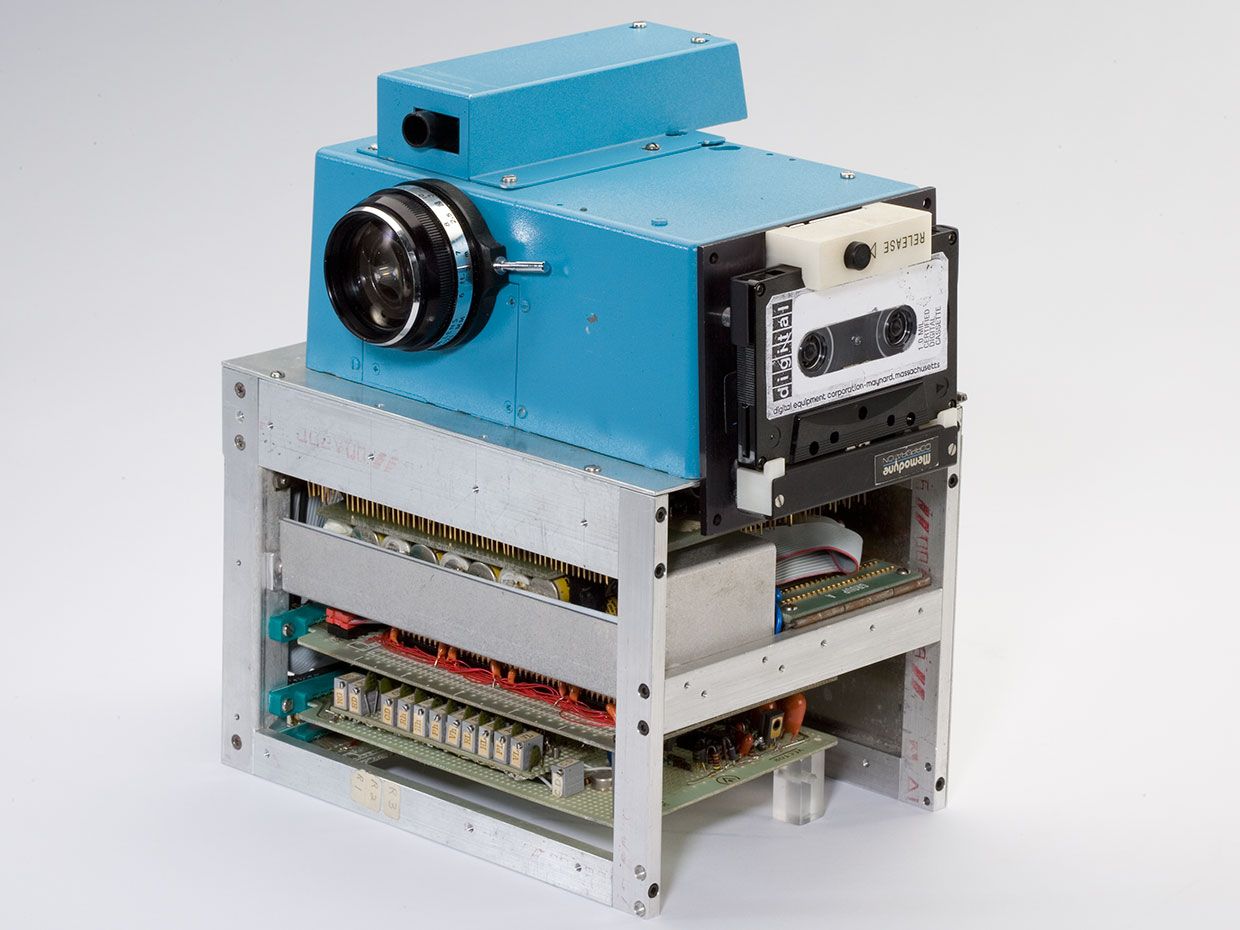

Photo: George Eastman Museum This 1975 digital camera prototype used a 100-by-100-pixel CCD to capture images. Digital photography didn't enter the mainstream for another 20 years.

For an inventor, the main challenge might be technical, but sometimes it's timing that determines success. Steven Sasson had the technical talent but developed his prototype for an all-digital camera a couple of decades too early.

A CCD from Fairchild was used in Kodak's first digital camera prototype

It was 1974, and Sasson, a young electrical engineer at Eastman Kodak Co., in Rochester, N.Y., was looking for a use for Fairchild Semiconductor's new type 201 charge-coupled device. His boss suggested that he try using the 100-by-100-pixel CCD to digitize an image. So Sasson built a digital camera to capture the photo, store it, and then play it back on another device.

Sasson's camera was a kluge of components. He salvaged the lens and exposure mechanism from a Kodak XL55 movie camera to serve as his camera's optical piece. The CCD would capture the image, which would then be run through a Motorola analog-to-digital converter, stored temporarily in a DRAM array of a dozen 4,096-bit chips, and then transferred to audio tape running on a portable Memodyne data cassette recorder. The camera weighed 3.6 kilograms, ran on 16 AA batteries, and was about the size of a toaster.
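The numbers in that description allow a quick back-of-the-envelope check on how much tonal detail the memory could hold. The sketch below is an illustration only, not a description of Sasson's actual circuit: it assumes one full 100-by-100 frame had to fit in the DRAM array at once and that every pixel was stored with the same whole number of bits. The function name `frame_budget` is made up for this example.

```python
# Figures taken from the article's description of the prototype.
PIXELS_WIDE = 100
PIXELS_HIGH = 100
DRAM_CHIPS = 12          # "a dozen" chips
BITS_PER_CHIP = 4096     # 4,096-bit DRAM chips


def frame_budget(width, height, chips, bits_per_chip):
    """Return (pixel_count, buffer_bits, max_whole_bits_per_pixel).

    Assumption (not stated in the article): a whole frame is buffered
    at once, with the same integer number of bits for every pixel.
    """
    pixel_count = width * height
    buffer_bits = chips * bits_per_chip
    max_bits_per_pixel = buffer_bits // pixel_count
    return pixel_count, buffer_bits, max_bits_per_pixel


pixels, buffer_bits, bits_per_pixel = frame_budget(
    PIXELS_WIDE, PIXELS_HIGH, DRAM_CHIPS, BITS_PER_CHIP
)
gray_levels = 2 ** bits_per_pixel  # distinct shades at that bit depth

print(f"{pixels} pixels, {buffer_bits} bits of DRAM")
print(f"at most {bits_per_pixel} bits per pixel, or {gray_levels} shades of gray")
```

Under those assumptions, the 49,152-bit buffer leaves room for at most 4 bits for each of the 10,000 pixels, or 16 shades of gray. This is an upper bound implied by the memory size, not a confirmed specification of the camera.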

After working on his camera on and off for a year, Sasson decided on 12 December 1975 that he was ready to take his first picture. Lab technician Joy Marshall agreed to pose. The photo took about 23 seconds to record onto the audio tape. But when Sasson played it back on the lab computer, the image was a mess. Although the camera could render shades that were clearly dark or light, anything in between appeared as static. So Marshall's hair looked okay, but her face was missing. She took one look and said, "Needs work."

Sasson continued to improve the camera, eventually capturing impressive images of different people and objects around the lab. He and his supervisor, Gareth Lloyd, received U.S. Patent No. 4,131,919 for an electronic still camera in 1978, but the project never went beyond the prototype stage. Sasson estimated that image resolution wouldn't be competitive with chemical photography until sometime between 1990 and 1995, and that was enough for Kodak to mothball the project.

Digital photography took nearly two decades to take off

While Kodak chose to withdraw from digital photography, other companies, including Sony and Fuji, continued to move ahead. After Sony introduced the Mavica, an analog electronic camera, in 1981, Kodak decided to restart its digital camera effort. During the '80s and into the '90s, companies made incremental improvements, releasing products that sold for astronomical prices and found limited audiences. [For a recap of these early efforts, see Tekla S. Perry's IEEE Spectrum article, "Digital Photography: The Power of Pixels."]

Photos: John Harding/The LIFE Images Collection/Getty Images Apple's QuickTake, introduced in 1994, was one of the first digital cameras intended for consumers.

Then, in 1994, Apple unveiled the QuickTake 100, the first digital camera for under US $1,000. Manufactured by Kodak for Apple, it had a maximum resolution of 640 by 480 pixels and could only store up to eight images at that resolution on its memory card, but it was considered the breakthrough to the consumer market. The following year saw the introduction of Apple's QuickTake 150, with JPEG image compression, and Casio's QV10, the first digital camera with a built-in LCD screen. It was also the year that Sasson's original patent expired.

Digital photography really came into its own as a cultural phenomenon when the Kyocera VisualPhone VP-210, the first cellphone with an embedded camera, debuted in Japan in 1999. Three years later, camera phones were introduced in the United States. The first mobile-phone cameras lacked the resolution and quality of stand-alone digital cameras, often taking distorted, fish-eye photographs. Users didn't seem to care. Suddenly, their phones were no longer just for talking or texting. They were for capturing and sharing images.

Photo: David Duprey/AP In 2005, Steven Sasson posed with his 1975 prototype and Kodak's latest digital camera offering, the EasyShare One. By then, camera cellphones were already on the rise.

The rise of cameras in phones inevitably led to a decline in stand-alone digital cameras, the sales of which peaked in 2012. Sadly, Kodak's early advantage in digital photography did not prevent the company's eventual bankruptcy, as Mark Harris recounts in his 2014 Spectrum article "The Lowballing of Kodak's Patent Portfolio." Although there is still a market for professional and single-lens reflex cameras, most people now rely on their smartphones for taking photographs, and so much more.

How a technology can change the course of history

The transformational nature of Sasson's invention can't be overstated. Experts estimate that people will take more than 1.4 trillion photographs in 2020. Compare that to 1995, the year Sasson's patent expired. That spring, a group of historians gathered to study the results of a survey of Americans' feelings about the past. A quarter century on, two of the survey questions stand out:

During the last 12 months, have you looked at photographs with family or friends?

During the last 12 months, have you taken any photographs or videos to preserve memories?

In the nationwide survey of nearly 1,500 people, 91 percent of respondents said they'd looked at photographs with family or friends and 83 percent said they'd taken a photograph in the past year. If the survey were repeated today, those numbers would almost certainly be even higher. I know I've snapped dozens of pictures in the last week alone, most of them of my ridiculously cute puppy. Thanks to the ubiquity of high-quality smartphone cameras, cheap digital storage, and social media, we're all taking and sharing photos all the time: last night's Instagram-worthy dessert, a selfie with your bestie, the spot where you parked your car.

So are all of these captured moments, these personal memories, a part of history? That depends on how you define history.

For Roy Rosenzweig and David Thelen, two of the historians who led the 1995 survey, the very idea of history was in flux. At the time, pundits were criticizing Americans' ignorance of past events, and professional historians were wringing their hands about the public's historical illiteracy.

Instead of focusing on what people didn't know, Rosenzweig and Thelen set out to quantify how people thought about the past. They published their results in the 1998 book The Presence of the Past: Popular Uses of History in American Life (Columbia University Press). This groundbreaking study was heralded by historians, those working within academic settings as well as those working in museums and other public-facing institutions, because it helped them to think about the public's understanding of their field.

Little did Rosenzweig and Thelen know that the entire discipline of history was about to be disrupted by a whole host of technologies. The digital camera was just the beginning.

For example, a little over a third of the survey's respondents said they had researched their family history or worked on a family tree. That kind of activity got a whole lot easier the following year, when Paul Brent Allen and Dan Taggart launched Ancestry.com, which is now one of the largest online genealogical databases, with 3 million subscribers and approximately 10 billion records. Researching your family tree no longer means poring over documents in the local library.

Similarly, when the survey was conducted, the Human Genome Project was still years away from mapping our DNA. Today, at-home DNA kits make it simple for anyone to order up their genetic profile. In the process, family secrets and unknown branches on those family trees are revealed, complicating the histories that families might tell about themselves.

Finally, the survey asked whether respondents had watched a movie or television show about history in the last year; four-fifths responded that they had. The survey was conducted shortly before the 1 January 1995 launch of the History Channel, the cable channel that opened the floodgates on history-themed TV. These days, streaming services let people binge-watch historical documentaries and dramas on demand.

Today, people aren't just watching history. They're recording it and sharing it in real time. Recall that Sasson's MacGyvered digital camera included parts from a movie camera. In the early 2000s, cellphones with digital video recording emerged in Japan and South Korea and then spread to the rest of the world. As with the early still cameras, the initial quality of the video was poor, and memory limits kept the video clips short. But by the mid-2000s, digital video had become a standard feature on cellphones.

As these technologies become commonplace, digital photos and video are revealing injustice and brutality in stark and powerful ways. In turn, they are rewriting the official narrative of history. A short video clip taken by a bystander with a mobile phone can now carry more authority than a government report.

Maybe the best way to think about Rosenzweig and Thelen's survey is that it captured a snapshot of public habits, just as those habits were about to change irrevocably.

Digital cameras also changed how historians conduct their research

For professional historians, the advent of digital photography has had other important implications. Lately, there's been a lot of discussion about how digital cameras in general, and smartphones in particular, have changed the practice of historical research. At the 2020 annual meeting of the American Historical Association, for instance, Ian Milligan, an associate professor at the University of Waterloo, in Canada, gave a talk in which he revealed that 96 percent of historians have no formal training in digital photography and yet the vast majority use digital photographs extensively in their work. About 40 percent said they took more than 2,000 digital photographs of archival material in their latest project. W. Patrick McCray of the University of California, Santa Barbara, told a writer with The Atlantic that he'd accumulated 77 gigabytes of digitized documents and imagery for his latest book project [an aspect of which he recently wrote about for Spectrum].

So let's recap: In the last 45 years, Sasson took his first digital picture, digital cameras were brought into the mainstream and then embedded into another pivotal technology (the cellphone and then the smartphone), and people began taking photos with abandon, for any and every reason. And in the last 25 years, historians went from thinking that looking at a photograph within the past year was a significant marker of engagement with the past to themselves compiling gigabytes of archival images in pursuit of their research.

Photos: Allison Marsh The author's English Mastiff, Tildie, is eminently photographable (in the author's opinion).

So are those 1.4 trillion digital photographs that we'll collectively take this year a part of history? I think it helps to consider how they fit into the overall historical narrative. A century ago, nobody, not even a science fiction writer, predicted that someone would take a photo of a parking lot to remember where they'd left their car. A century from now, who knows if people will still be doing the same thing. In that sense, even the most mundane digital photograph can serve as both a personal memory and a piece of the historical record.

An abridged version of this article appears in the July 2020 print issue as "Born Digital."

Part of a continuing series looking at photographs of historical artifacts that embrace the boundless potential of technology.

About the Author

Allison Marsh is an associate professor of history at the University of South Carolina and codirector of the university's Ann Johnson Institute for Science, Technology & Society.