New Records for AI Training

The most broadly accepted suite of seven standard tests for AI systems released its newest rankings Wednesday, and GPU-maker Nvidia swept all the categories for commercially-available systems with its new A100 GPU-based computers, breaking 16 records. It was, however, the only entrant in some of them.

The rankings are by MLPerf, a consortium with membership from both AI powerhouses like Facebook, Tencent, and Google and startups like Cerebras, Mythic, and SambaNova. MLPerf's tests measure the time it takes a computer to train a particular set of neural networks to an agreed-upon accuracy. Since the previous round of results, released in July 2019, the fastest systems improved by an average of 2.7x, according to MLPerf.
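To make the time-to-train idea concrete, here is a minimal Python sketch of how such a measurement could be taken. It is not MLPerf's actual harness: the function `time_to_target`, the `ToyModel` class, and the 0.75 target are all hypothetical names and values chosen for illustration.

```python
import time
from typing import Callable, Optional


def time_to_target(train_one_epoch: Callable[[], None],
                   evaluate: Callable[[], float],
                   target: float,
                   max_epochs: int = 100,
                   clock: Callable[[], float] = time.perf_counter) -> Optional[float]:
    """Return the seconds elapsed until evaluate() first reaches target.

    Returns None if the target is not reached within max_epochs.
    """
    start = clock()
    for _ in range(max_epochs):
        train_one_epoch()
        if evaluate() >= target:
            return clock() - start
    return None


class ToyModel:
    """Hypothetical stand-in for a network: accuracy rises 0.25 per epoch."""

    def __init__(self) -> None:
        self.epochs = 0

    def train_one_epoch(self) -> None:
        self.epochs += 1

    def accuracy(self) -> float:
        return min(1.0, 0.25 * self.epochs)


model = ToyModel()
seconds = time_to_target(model.train_one_epoch, model.accuracy, target=0.75)
print(f"reached target after {model.epochs} epochs in {seconds:.6f} s")
```

The point of the design is that the clock stops at a quality threshold, not after a fixed number of steps, so a system cannot score well by training quickly to a poor result.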

"MLPerf was created to help the industry separate the facts from fiction in AI," says Paresh Kharya, senior director of product management for data center computing at Nvidia. Nevertheless, most of the consortium members have not submitted training results. Alibaba, Dell, Fujitsu, Google, and Tencent were the only others competing in the commercially- or cloud-available categories. Intel had several entries for systems set to come to market within the next six months.

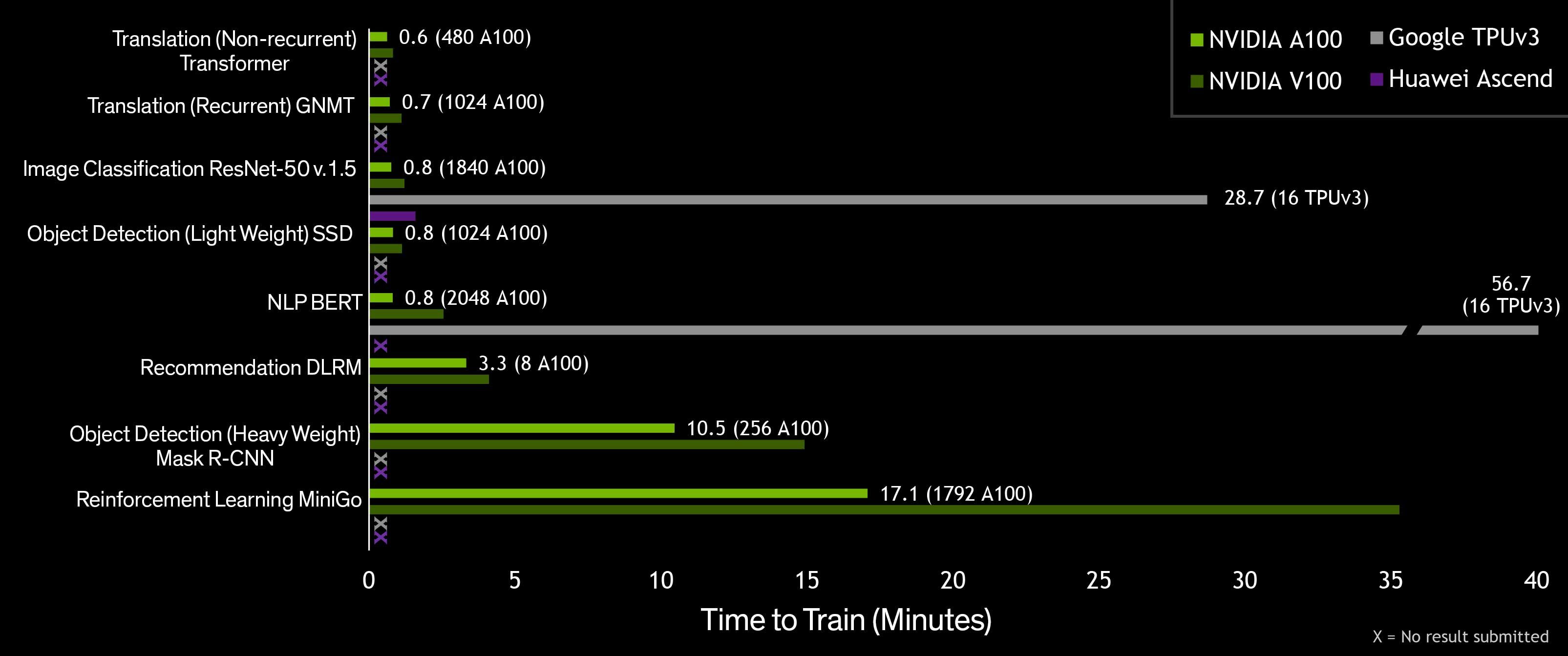

Image: Nvidia Among the commercial and cloud-available systems, Nvidia's A100 DGX SuperPOD [bright green] trained neural networks the fastest.

In this, the third round of MLPerf training results, the consortium added two new benchmarks and substantially revised a third, for a total of seven tests. The two new benchmarks are called BERT and DLRM.

BERT, for Bidirectional Encoder Representations from Transformers, is used extensively in natural language processing tasks such as translation, search, understanding and generating text, and answering questions. It is trained using Wikipedia. At 0.81 minutes, Nvidia had the fastest training time amongst the commercially available systems for this benchmark, but an internal or R&D Google system beat it with a 0.39-minute training run.

DLRM, for Deep Learning Recommendation Model, is representative of the recommender systems used in online shopping, search results, and social media content ranking. It's trained using a terabyte-sized set of click logs supplied by Criteo AI Lab. That dataset contains the click logs of four billion user and item interactions over a 24-day period. Though Nvidia stood alone amongst the commercially available entrants for DLRM with a 3.3-minute training run, a system internal to Google won this category with a 1.2-minute effort.

Besides adding DLRM and BERT, MLPerf upped the difficulty level for the Mini-Go benchmark. Mini-Go uses a form of AI called reinforcement learning to learn to play Go on a full-size 19 x 19 board. Previous versions used smaller boards. "It's the hardest benchmark," says Kharya. Mini-Go has to simultaneously play the game of Go, process the data from the game, and train the network on that data. "Reinforcement learning is hard because it's not using an existing data set," he says. "You're basically creating the dataset as you go along."
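The play, process, and train cycle Kharya describes can be sketched with a toy example in Python. This is not Mini-Go: it swaps in a much simpler technique, an epsilon-greedy learner choosing among a few moves with fixed win rates, purely to show a dataset that starts empty and grows as the learner plays. The function name and all parameters are hypothetical.

```python
import random


def self_generated_training(true_win_rates, rounds=2000, epsilon=0.1, seed=0):
    """Toy reinforcement-learning loop (an assumption-laden sketch, not Mini-Go).

    Each round the learner plays a move, records the outcome, and
    immediately updates its estimate of that move's win rate.
    """
    rng = random.Random(seed)
    n_moves = len(true_win_rates)
    estimates = [0.0] * n_moves   # learned win-rate estimate per move
    counts = [0] * n_moves        # how often each move has been played
    dataset = []                  # starts empty: no pre-existing data set

    for _ in range(rounds):
        # 1. Play: mostly pick the move currently believed best,
        #    occasionally explore a random one.
        if rng.random() < epsilon:
            move = rng.randrange(n_moves)
        else:
            move = max(range(n_moves), key=lambda m: estimates[m])
        won = 1.0 if rng.random() < true_win_rates[move] else 0.0

        # 2. Process: the outcome becomes a new training example.
        dataset.append((move, won))

        # 3. Train: update the running average for the move just played.
        counts[move] += 1
        estimates[move] += (won - estimates[move]) / counts[move]

    return estimates, dataset


estimates, dataset = self_generated_training([0.2, 0.8])
print(f"{len(dataset)} examples generated; estimates: {estimates}")
```

Because the data collected in each round depends on what the learner currently believes, the three steps cannot be cleanly separated into "build the dataset first, then train," which is the difficulty the benchmark exercises at far larger scale.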

Image: Nvidia On a per-processor basis, Nvidia had a good run as well.

According to Jonah Alben, Nvidia's vice president of GPU engineering, reinforcement learning is increasingly important in robotics, because it could allow robots to learn new tasks without the risk of damaging people or property.

Nvidia's only competition on Mini-Go came from a not-yet-commercial system from Intel, which came in at 409 minutes, and from an internal system at Google, which took just under 160 minutes.

Nvidia tested all its benchmarks using the Selene supercomputer, which is made from the company's DGX SuperPOD computer architecture. The system ranks 7th in the Top500 supercomputer list and is the second most powerful industrial supercomputer on the planet.